As times have evolved, so have our data challenges, and we have been trying to solve them for ages. Even with dedicated resources, robust hardware, and the like, we still end up missing SLAs in our traditional on-premises and cloud-hosted worlds. Add to that the constant dependency on personnel to manage data loads, data ops, database size, and so on. A modern data platform is one that scales to your pipeline needs, is agile and fully managed, places no restrictions on data shape, provides dependable performance at scale, and is easy to query in SQL and via APIs!

Enter Snowflake DB. It's as if the founders sat down at a round table, put all the traditional problems on paper, and solved them one by one. Their motto: move data once and access it many times, in various ways, at various scales and capacities.

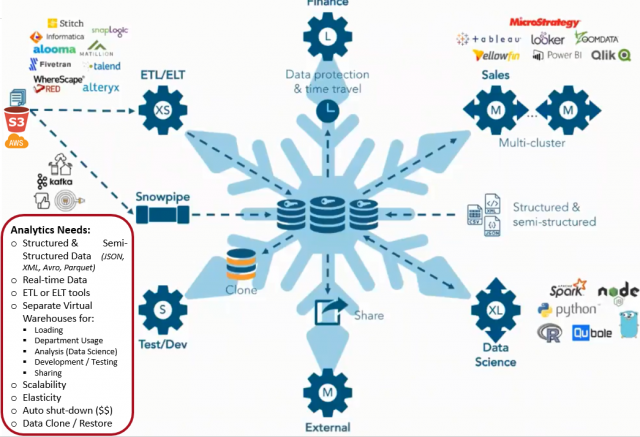

That leads to many personas, each with its own dedicated compute arranged around the same data in the cloud:

- The data analysts

- The traditional data pipelines

- The Data Science teams

- The Application teams

- The BI and Analytics teams

They truly separated compute from storage and automated the management of the service. That reduces the amount of code and scripting you have to write by hand to make these various computes (Snowflake calls them Virtual Warehouses) dance to the needs of more and more data.

Storage sits in the center, surrounded by computes of various sizes catering to each of the personas listed above. On top of that, a Virtual Warehouse can suspend itself when idle, so compute does not cost you a penny while nothing is being queried. You are billed based on a credit matrix that aligns with the size of the compute. Storage is billed at a flat rate, which takes the guessing game out for enterprises across the board. Snowflake also has an ecosystem that supports many third-party products and ETL tools.
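To make the credit matrix a little more concrete, here is a rough Python sketch of how compute cost follows warehouse size. The per-hour credit figures reflect the usual doubling per size for standard warehouses, and the 60-second minimum reflects per-second billing with a one-minute floor each time a warehouse starts. The function name `estimate_compute_cost` and the price per credit are assumptions made for illustration; actual rates depend on your edition, cloud, and region, so check current Snowflake pricing before relying on any number here.

```python
# Credits consumed per hour by a single-cluster standard warehouse.
# Each size doubles the one before it.
CREDITS_PER_HOUR = {
    "XSMALL": 1,
    "SMALL": 2,
    "MEDIUM": 4,
    "LARGE": 8,
    "XLARGE": 16,
}

# Billing is per second, but each start of a warehouse bills at least one minute.
MINIMUM_BILLED_SECONDS = 60


def estimate_compute_cost(size: str, running_seconds: int, price_per_credit: float) -> float:
    """Estimate the compute cost of one warehouse run.

    price_per_credit is a caller-supplied assumption, not an official rate.
    """
    if running_seconds <= 0:
        return 0.0  # a suspended warehouse consumes no credits
    billed_seconds = max(running_seconds, MINIMUM_BILLED_SECONDS)
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return credits * price_per_credit


if __name__ == "__main__":
    # Hypothetical price of 3.00 per credit; MEDIUM warehouse running for 30 minutes.
    print(estimate_compute_cost("MEDIUM", 30 * 60, price_per_credit=3.0))
```

In this example a MEDIUM warehouse running for half an hour uses 2 credits, and the same warehouse costs nothing while suspended. Storage is billed separately and is not modeled here.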

Bring data of any shape and store it in Snowflake: you can run both your data lake and your data marts on the same service and give your various end users access to a multitude of analytical sandboxes.

You can set a baseline for the compute you need and set up an option to scale out when required. When queries start to queue up, additional compute (in Snowflake's terms, another cluster of a multi-cluster Virtual Warehouse) kicks into gear without disrupting existing queries and helps clear the backlog. This is one of the best parts of Snowflake: it is built to ingest billions of rows of data while keeping performance steady.
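The baseline-plus-scale-out setup described above can be sketched as a single warehouse definition. The Python helper below only builds the SQL text; `build_warehouse_ddl` and the warehouse name `analytics_wh` are hypothetical, while the options it emits (`WAREHOUSE_SIZE`, `MIN_CLUSTER_COUNT`, `MAX_CLUSTER_COUNT`, `SCALING_POLICY`, `AUTO_SUSPEND`, `AUTO_RESUME`) are standard `CREATE WAREHOUSE` parameters. Note that multi-cluster warehouses require Enterprise Edition or higher.

```python
def build_warehouse_ddl(
    name: str,
    size: str = "MEDIUM",
    min_clusters: int = 1,
    max_clusters: int = 3,
    auto_suspend_seconds: int = 300,
) -> str:
    """Build a CREATE WAREHOUSE statement for a multi-cluster warehouse.

    min_clusters is the baseline; Snowflake can add clusters up to
    max_clusters when queries queue, and remove them as load drops.
    Names are interpolated directly, so pass trusted identifiers only.
    """
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("need 1 <= min_clusters <= max_clusters")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} WITH\n"
        f"  WAREHOUSE_SIZE = '{size}'\n"
        f"  MIN_CLUSTER_COUNT = {min_clusters}\n"
        f"  MAX_CLUSTER_COUNT = {max_clusters}\n"
        f"  SCALING_POLICY = 'STANDARD'\n"
        f"  AUTO_SUSPEND = {auto_suspend_seconds}\n"  # seconds of inactivity before suspending
        f"  AUTO_RESUME = TRUE;"                      # wake up when the next query arrives
    )


if __name__ == "__main__":
    print(build_warehouse_ddl("analytics_wh"))
```

The generated statement can be pasted into a worksheet or sent through whichever client you already use. Giving each persona its own warehouse, sized for its workload, is what keeps one team's heavy queries from slowing down another's.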

Add Time Travel (retention of earlier states of your data) and the ability to clone data for Dev and Test instances without copying it, so a clone adds no storage cost until its data diverges from the source. It really changes the game when it comes to serving organizations of various sizes and needs.
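As a small illustration of both features, the Python sketch below generates the kind of SQL involved: a query against a table as it was some seconds ago, using the `AT(OFFSET => ...)` clause, and a `CREATE ... CLONE` statement for spinning up a Dev or Test copy. The helper names and the object names (`orders`, `prod_db`, `dev_db`) are hypothetical, and Time Travel only reaches back as far as the retention period configured for the object.

```python
def time_travel_query(table: str, seconds_ago: int) -> str:
    """Build a query that reads a table as it was `seconds_ago` seconds in the past."""
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be positive")
    # A negative OFFSET is measured in seconds back from the current time.
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds_ago});"


def clone_statement(object_type: str, source: str, target: str) -> str:
    """Build a zero-copy clone statement for a database, schema, or table."""
    kind = object_type.upper()
    if kind not in {"DATABASE", "SCHEMA", "TABLE"}:
        raise ValueError(f"unsupported object type for this sketch: {object_type}")
    # The clone shares the source's underlying storage until either side changes.
    return f"CREATE {kind} {target} CLONE {source};"


if __name__ == "__main__":
    print(time_travel_query("orders", 3600))               # the table as of one hour ago
    print(clone_statement("database", "prod_db", "dev_db"))  # Dev copy of production
```

Identifiers are interpolated directly into the SQL text here, so this is suitable only for trusted, hard-coded names.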

All in all, it is a shared data architecture that scales to your needs and manages itself without much overhead.

Analytics workloads via connectors and Virtual Warehouses, addressed at scale:

Snowflake has a federated connector to Domo that can be leveraged to query real-time data and support mobile analytics needs in apps. With Snowflake, you can fire up live queries from Tableau and Power BI and, where the need is for real-time dashboards, probably get away from loading data into Tableau Extracts or Power BI datasets. That helps you reduce the size of your analytical models in BI tools. Snowflake can also be provisioned for HIPAA and PII regulatory needs on Azure, AWS, and Google Cloud. Add metadata management, and you get a well-rounded managed data service for modern times.

We at Perficient are working as a Snowflake partner with many existing and potential clients across various verticals to tell the story of data and reduce age-old overheads. Please feel free to reach out to us. We have worked closely with Informatica and Talend on enterprise implementations that leverage Snowflake across the board. In modern times, Snowflake is a real disruptor in the industry.