In this article, I will show you how you can set up your own Matillion instance, but before we get started let’s talk about the Matillion ETL tool.

What is Matillion, and why should you care?

Matillion is an ETL tool that is available on the Cloud Marketplace. It is completely cloud-based, billed at an hourly rate, and comes with the most significant advantages when coupled with Snowflake, Redshift, and Delta Lake. To get that significant advantage, I will be showing you the coupling of Matillion with Snowflake in this article.

We have been using the Matillion ETL tool on the data warehouse, such as Snowflake on the ETL project for our clients. Recently I worked on a project in which I was working on Matillion for data transformation and orchestration from source to target.

Matillion has two main flavors of jobs:

- Orchestration: Orchestration is primarily concerned with DDL statements (especially Creating, Dropping, and Altering resources), loading data from external sources.

- Transformation: Transformation is used for transforming data that already exists within tables. This includes filtering data, changing data types, and removing rows.

It has various types of functional components, that are used as a toolkit for your ETL journey. These components range from DDL SQL commands to Python scripts, and some of these components are designed to perform some of the most complexes of tasks. Also, it gives us the flexibility of creating variables that we can use in any query editor component. Matillion provides variables in two flavors – The Environmental variable and the Job variable.

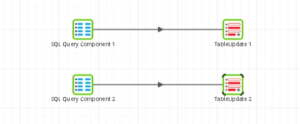

I will be showing you the example of a Transformation Job and Orchestration Job created in my recent project.

This is the Transformation job in which I have used SQL Query Component (Blue) for writing SQL script. The outcome is passed on to the next component that is Table Update Component (Red), which updated the outcome of the SQL component into the target table that is defined in Table Update Component.

This is the Orchestration job in which we are using several components, but the main task of this job is to execute the transformation job. As you can see, we have a Start Component that starts the job run, then we have a transformation job that is performing the transformation (shown above), then we have an End Success Component that ends the job run. If there is a failure in the Transformation Job component, then the OR Component will wait for any of its input to be completed before continuing the job. So when the failure occurs the OR component adds the SNS Message Component to the job that sends the notification of failure over mail using SNS service of AWS and the End Failure Component ends the job run in the failed state.

Now that you have a basic understanding of the Matillion tool, you are probably excited to build your job for data transformation, so let me give you the complete procedure of how you can create your own Matillion instance and start getting your hands dirty.

Let’s Begin!!! The creation of your Matillion ETL Journey.

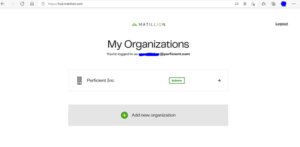

First, create a Matillion Free Trail account on Matillion Hub here. (Note: Matillion account required organization domain ID. It will not allow a free domain ID like gmail.com or yahoo.com).

Matillion will send you a confirmation email on your organization id after successful account creation. You need to make sure your organization is added properly to your account as shown below in the image.

If not added you can add your organization with the “Add new organization” button as shown in the image above.

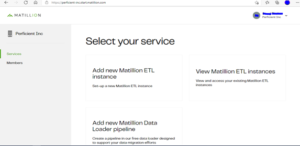

Once you have added your organization and click into your organization panel, you will need to select a service as shown below.

Select the “Add new Matillion ETL instance” option for creating a new instance/VM to work on.

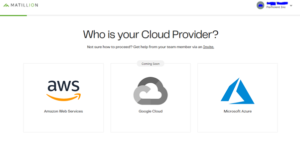

On the next page, you will need to select the appropriate cloud provider as per your requirement option (AWS and Azure). Please note: GCP is currently not supported, as shown below.

For now, let’s go with the AWS option as a cloud provider, next you will need to choose your cloud data platform.

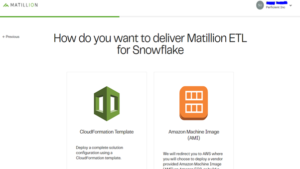

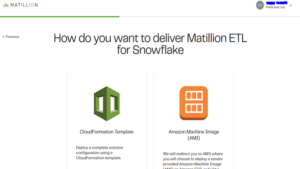

In this instance we are going to select Snowflake as the data platform. Then, you will need to choose the launching method of your Matillion as per your AWS service. You will receive two options, as shown below.

Option 1 – CloudFormation Template There are several prerequisites you will need to define in the next couple pages.

First, let’s select the region in which you want to host an instance.

Next, select VPC for your instance to launch AWS resources in a virtual network that you define.

The next page will allow you to select CloudFormation Template and choose the template accordingly as per your requirement.

You will then be redirected to the AWS console page where you will need to log in with your AWS credentials.

Once logged into AWS, you will be asked to create a stack for your template, please provide details as required to create a stack. After which it will create a stack and will launch your Matillion instance with the selected region and VPC. The stack status should be create_complete status as shown below.

Option 2

If you select the AMI option to launch your instance, it will redirect you to the AWS console page where you will need to log in with your AWS credentials.

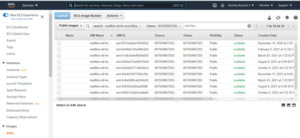

After logging into your AWS account, you will get a list of AMIs for the Matillion ETL tool, you just need to select one AMI for the launching instance.

After successfully creating an instance (whether by AMI or CloudFormation Template), you are done with the Mtillion ETL tool creation and now will need to access that tool over the internet as a SaaS platform.

Accessing Matillion ETL Tool

For accessing the Matillion tool you need to make sure that your created instance is up and running continuously. You will require an instance Public IP to load the Matillion ETL Tool login page on your browser.

Use the copy to clipboard option and paste the public IP on any browser. You will get a Matillion Tool login page asking for a Username and Password. The username depends on the OS on which the AMI is built.

As in my scenario, my AMI is Linux based so my Username will be ec2-user. Password will be the instance id as shown in the above image.

Please make sure you use the copy-to-clipboard option to avoid failed login attempts. Below image showing the login page of Matillion ETL Tool.

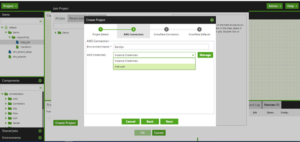

Once logged in into the Matillion ETL tool console you will get a pop-up window, use the Create Project Button to create a new project. You will get windows with 4 stages required for the project.

Fill in the required details as shown below.

The next stage is AWS connection. As shown below, the environment name and AWS credentials are required to proceed. In my case I have created an IAM user in the AWS account (i.e., mat-user so I have selected that user as AWS credentials). For adding an IAM user to Matillion use the Manage button. You will need the access key ID and secret access key of that IAM user.

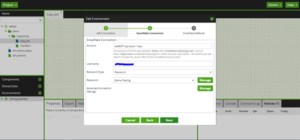

The next stage is setting up the Snowflake Connection, which provides details for the options, as shown below. For these, you will require a Snowflake account. If you don’t have an account, please create a 30-day free trial account here.

The last stage is setting up Snowflake Defaults Environment, which means the snowflake default data warehouse setting. (See below for a reference). If Snowflake is connected successfully you will get your default warehouse options in the drop-down list automatically.

Click on Finish and your project will be created. Now, you can work on Matillion ETL for data transformation using Orchestration Jobs and Transform Jobs. Use components for transformation and data flow as shown in the image below.

Some basic panel is provided on the console page of million.

- At the bottom right you get task status (success or failed) for failed you get the descriptive error also for troubleshooting.

- At the center, towards the bottom, you will get properties of all activities you are using in the Grid panel for data flow. Select that activity and the properties tab will show you all properties of that activity.

Here you have successfully created a Matillion ETL Tool instance using AWS and Snowflake. You have now been able to successfully create a Matillion ETL Tool instance using AWS and Snowflake. To learn more about our AWS cloud capabilities and practices and how Perficient can help you further your enterprise’s digital transformation visit us here!

Keep Learning!!!!!

How Can Perficient Help You?

Perficient is a certified Amazon Web Services partner with more than 10 years of experience delivering enterprise-level applications and expertise in cloud platform solutions, contact center, application modernization, migrations, data analytics, mobile, developer and management tools, IoT, serverless, security, and more. Paired with our industry-leading strategy and team, Perficient is equipped to help enterprises tackle the toughest challenges and get the most out of their implementations and integrations.

Learn more about our AWS practice and get in touch with our team here!

This blog really help me for creating Matillion ETL Tool instance.

“Great share”, It will help to know various functionality of Matillion tool.

Excellent information shared with the clear visualization, Thank Suraj , Great Job!!

Very well written, keep it up

Great write-up suraj👍

Well explained, keep it up suraj, sure it will help d peoples who belongs for same field.