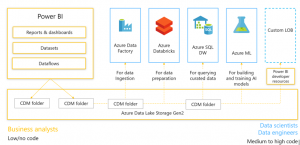

Context: The goal is to bring data together from various web, cloud, and on-premises data sources and rapidly drive insights. The biggest challenge Business Analysts and BI developers face is the need to ingest and process medium to large data sets on a regular basis. They spend more of their time gathering data than analyzing it.

Power BI Dataflows, combined with Azure Data Lake Storage Gen2, make this an intuitive, results-driven exercise. Before Power BI Dataflows, data preparation was restricted to Power BI Desktop.

Power BI Desktop

Power BI Desktop users use Power Query to connect to data, ingest it, and transform it before it lands in the dataset.

Data preparation in Power BI Desktop has some limitations:

- The relevant data is self-contained in individual datasets.

- Data transformation logic is difficult to reuse, because it is restricted to the dataset being prepared.

- Transformations cannot be set up for incremental data loads; they simply transform whatever data is loaded.

- It lacks the capability to load data at scale.

- It lacks the open data approach that is achieved by moving the data to the tenant's own storage account.

- An open data approach gives data analysts and data scientists access to multiple Azure-based services.
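To make the incremental-load limitation concrete: an incremental load processes only the rows that changed since the previous refresh, usually by tracking a watermark such as a last-modified timestamp. The Python sketch below illustrates that general idea under stated assumptions; the row layout, the `modified` field, and the `incremental_load` function are hypothetical and are not a Power BI API.

```python
from datetime import datetime


def incremental_load(rows, watermark):
    """Return the rows modified after `watermark`, plus the advanced watermark.

    Hypothetical sketch: each row is a dict carrying a 'modified' datetime.
    """
    new_rows = [r for r in rows if r["modified"] > watermark]
    # Move the watermark to the newest change seen; keep it unchanged if nothing is new.
    new_watermark = max((r["modified"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


if __name__ == "__main__":
    rows = [
        {"id": 1, "modified": datetime(2019, 1, 1)},
        {"id": 2, "modified": datetime(2019, 2, 1)},
        {"id": 3, "modified": datetime(2019, 3, 1)},
    ]
    batch, wm = incremental_load(rows, datetime(2019, 1, 15))
    print([r["id"] for r in batch], wm)
```

Only rows 2 and 3 are processed in this run, and the next run would start from the 2019-03-01 watermark instead of reloading everything.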

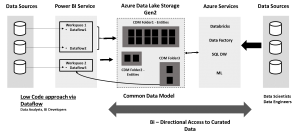

The diagram above depicts how Dataflows aid Business Analysts: they onboard data into Azure Data Lake Storage Gen2 and can then leverage all the other services they have access to. How is this possible? The short answer is the Common Data Model.

Common Data Model

The Common Data Model (CDM) provides a shared data language for business and analytical applications to use. The CDM metadata system enables consistency of data and its meaning across applications and business processes (such as PowerApps, Power BI, Dynamics 365, and Azure), which store data in conformance with the CDM.

The diagram above shows a bi-directional approach to enterprise analytics in the cloud.

Low Code approach

The left-hand side shows the low-code approach, in which Data Analysts from different Lines of Business can access, prepare, and curate datasets for their analytics needs and create analytics content.

- In their Power BI reports, they can also leverage content that Enterprise Data Engineers have loaded into the data lake.

- This approach requires data governance and guardrails.

- Data Analysts will have a dedicated CDM folder that they can map to their workspace.

- Data Analysts will not write to a CDM folder that Data Engineers write to, but they will have read access to it.

- Limit the use of Dataflows to data preparation, and only to analysts who use the data to create datasets that support enterprise analytics.

- To allow easy data exchange, Power BI stores the data in ADLS Gen2 in a standard format (CSV files), along with a rich metadata file that describes the data structures and semantics.
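As a rough illustration of that metadata file: in a CDM folder it is a JSON document (model.json) listing each entity, its attributes (column names and data types), and the partitions (CSV files) that hold its data. The Python sketch below parses a small, made-up model.json with the standard `json` module and summarizes each entity's schema. The dataflow name, entity, attributes, and partition URL are hypothetical, and only a subset of the format's fields is shown.

```python
import json

# Hypothetical, trimmed-down model.json for a CDM folder (only a subset of fields).
MODEL_JSON = """
{
  "name": "SalesDataflow",
  "version": "1.0",
  "entities": [
    {
      "$type": "LocalEntity",
      "name": "Customer",
      "attributes": [
        {"name": "CustomerId", "dataType": "int64"},
        {"name": "Name", "dataType": "string"}
      ],
      "partitions": [
        {"name": "Customer", "location": "https://example.dfs.core.windows.net/powerbi/SalesDataflow/Customer.csv"}
      ]
    }
  ]
}
"""


def summarize_entities(model):
    """Map each entity name to its (attribute, dataType) schema and partition locations."""
    summary = {}
    for entity in model.get("entities", []):
        schema = [(a["name"], a["dataType"]) for a in entity.get("attributes", [])]
        locations = [p["location"] for p in entity.get("partitions", [])]
        summary[entity["name"]] = {"schema": schema, "partitions": locations}
    return summary


if __name__ == "__main__":
    model = json.loads(MODEL_JSON)
    for name, info in summarize_entities(model).items():
        print(name, info["schema"], info["partitions"])
```

Because the schema travels with the data as plain JSON, any service that can read the storage account can discover the entities without going through Power BI.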

Power BI Dataflows let you ingest data into CDM form from a variety of sources, such as Dynamics 365, Salesforce, Azure SQL Database, Excel, or SharePoint. Once connected, you prepare the data and load it as a custom entity in CDM form in Azure Data Lake Storage Gen2.
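Since an entity's data (CSV) and its description (metadata) are stored separately, a consumer outside Power BI has to pair them up. The Python sketch below assumes the CSV partition has no header row, so column names and types are taken from the entity's attribute list in order. The entity definition, the sample CSV text, and the small type-conversion table are hypothetical, and only a few CDM data types are handled.

```python
import csv
import io

# Hypothetical entity definition, shaped like an "entities" entry in model.json.
ENTITY = {
    "name": "Customer",
    "attributes": [
        {"name": "CustomerId", "dataType": "int64"},
        {"name": "Name", "dataType": "string"},
        {"name": "Balance", "dataType": "double"},
    ],
}

# Hypothetical partition contents; assumed to have no header row.
PARTITION_CSV = '1,Contoso,1200.5\n2,"Fabrikam, Inc.",0\n'

# Converters for a few CDM data types; any other type is kept as a string.
CONVERTERS = {"int64": int, "double": float, "string": str}


def read_partition(entity, text):
    """Parse header-less CSV text into dicts keyed by the entity's attribute names."""
    names = [a["name"] for a in entity["attributes"]]
    convs = [CONVERTERS.get(a["dataType"], str) for a in entity["attributes"]]
    rows = []
    for record in csv.reader(io.StringIO(text)):
        rows.append({n: conv(v) for n, conv, v in zip(names, convs, record)})
    return rows


if __name__ == "__main__":
    for row in read_partition(ENTITY, PARTITION_CSV):
        print(row)
```

The `csv` module handles the quoted value containing a comma, so "Fabrikam, Inc." stays a single field.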

Enterprise Data Workloads approach

The right-hand side shows the various Azure services that Enterprise Data Engineers can potentially leverage to drive analytical workloads.

Conclusion and Insight

The approach outlined in this blog enables rapid insight into enterprise data. It drives adoption and establishes standards for sustainable analytics projects built on Power BI. It also enables access to curated and cataloged data, and its semantic standardization supports machine learning and AI on the platform.

The Gartner Magic Quadrant for Analytics and Business Intelligence Platforms, released in February 2019, named Microsoft Power BI a leader in the quadrant. One of the driving factors it lists is Microsoft's comprehensive product vision, which highlights a common and open data model, AutoML, and Cognitive Services such as text and sentiment analytics via Power BI. In this blog I have tried to draw attention to the same points and simplify them.