In this post and the next, I cover the steps for adding dimension and fact tasks in Oracle Data Sync. Recent releases of Data Sync added a few important features, such as performing look-ups during an ETL job, so I will cover the best practices that apply when adding new dimension and fact tasks. These instructions are based on Oracle BI Data Sync version 2.3.2 and may not apply to previous versions of Data Sync.

In this example, I already have two Data Sync tasks in my project: one to load the dimension table W_OTLIS_STATE_D and one to load the fact table W_OTLIS_TRANSACTIONS_F. In the following step-by-step instructions, I demonstrate how to add a second dimension table. See my next post for the steps to add a fact task.

- In this example, I will add a dimension table called W_OTLIS_METRO_STATION_D to be loaded from the source table: OTLIS_METRO_STATION.

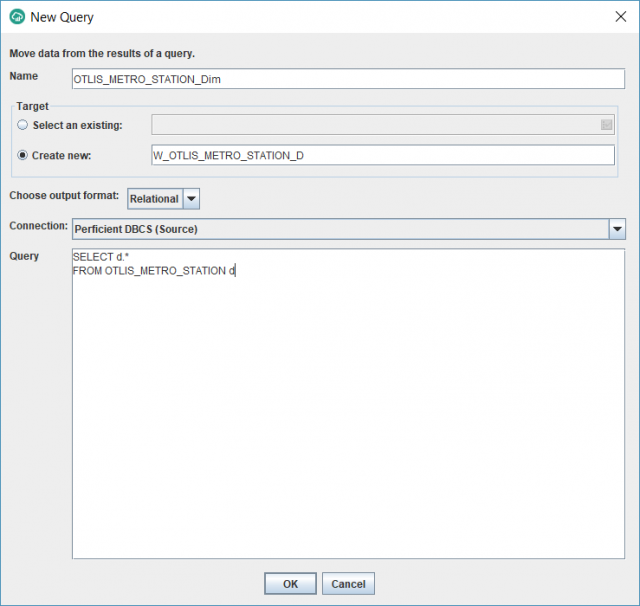

- Under the Project tab, select Relational Data, and then Data From SQL. (Other options work, but in this example I will use a SQL query to source data for the dimension since that allows maximum flexibility to customize the source query in the future if needed.)

- In the new window, enter the name of the new task (without any spaces), enter the name of the new target table for the dimension (follow your naming convention), choose the Relational output format, select the source Connection, and enter a SQL statement to select the columns needed from the source table. In my example, I am selecting all the columns. Click OK.

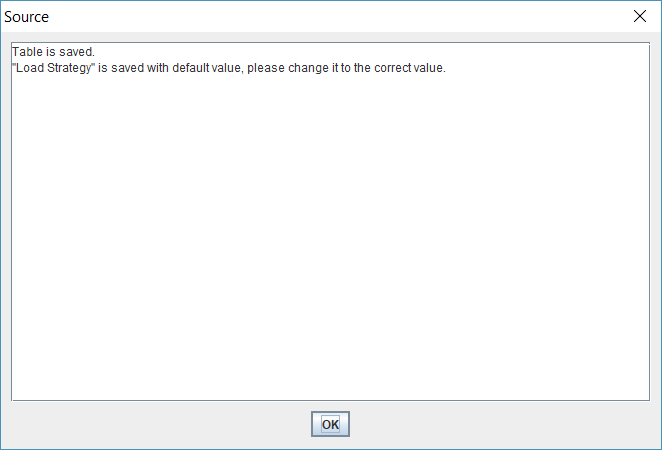

- Wait for a message like the following to confirm the operation was successful. Then click OK.

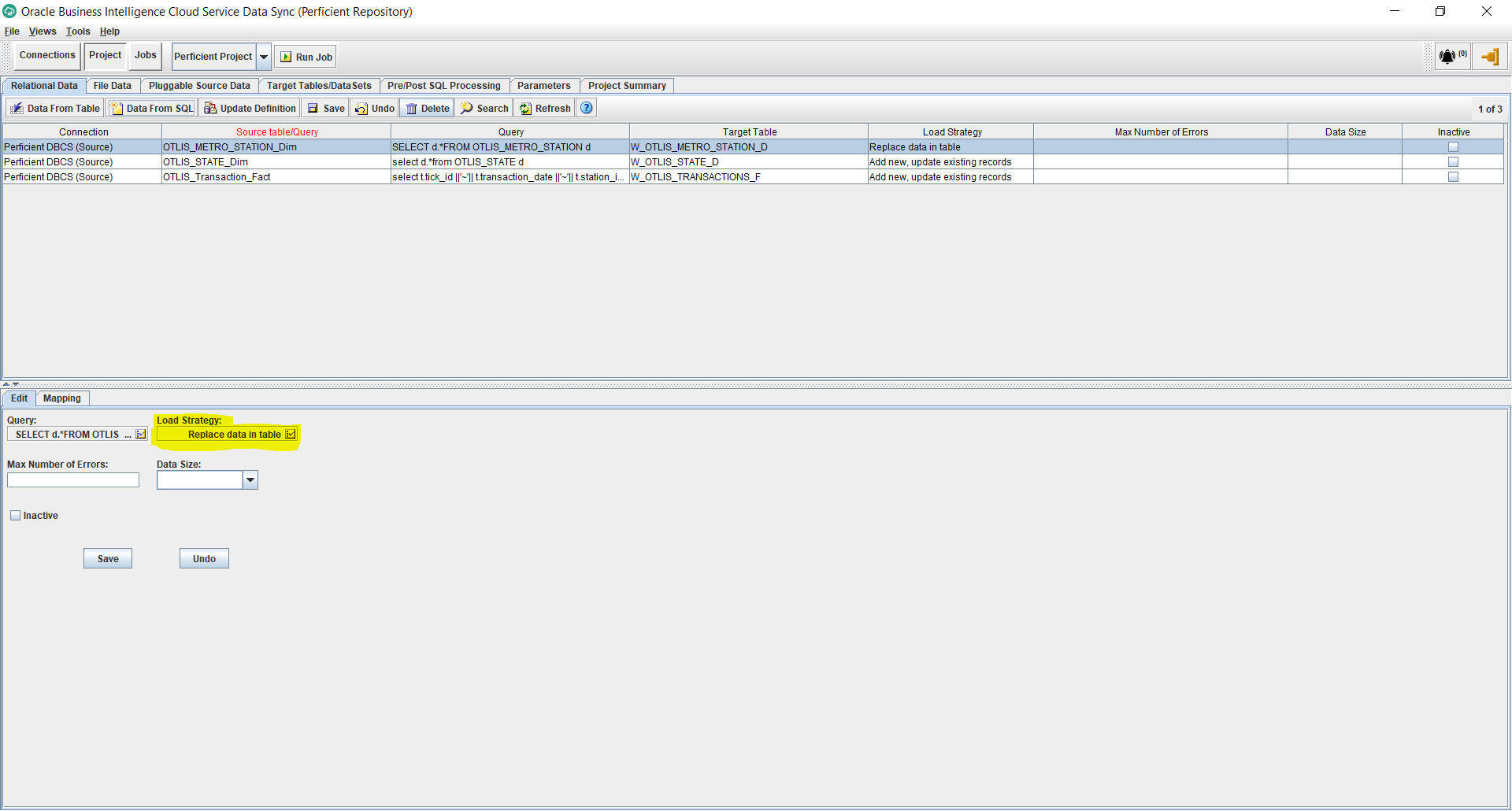

- Under Project, you should now see a new record added for the new task. Click on the newly created task and click on the Load Strategy from below to edit it.

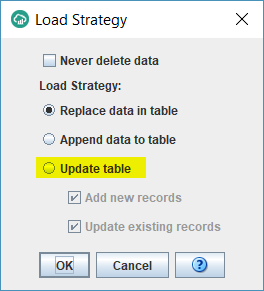

- By default, the task does a full load of the table because the Load Strategy is set to the “Replace data in table” option. If your source table supports incremental tracking through a last update date, switch the Load Strategy to incremental loads to get faster job run times. To do that, select the “Update table” option. Keep both checkboxes below it checked. Click OK.

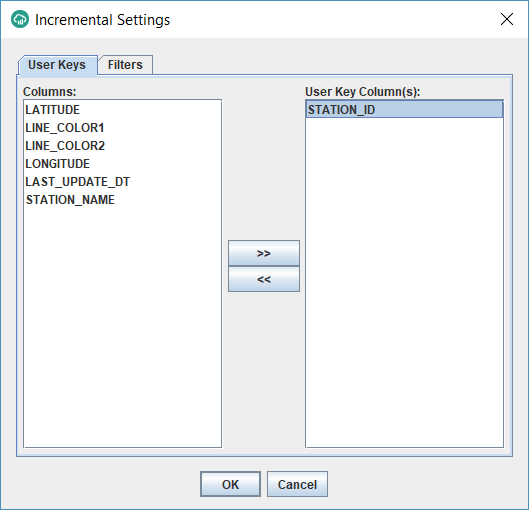

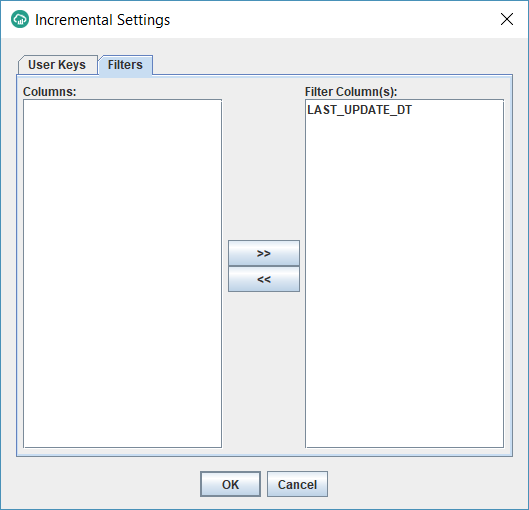

- You should now get another window with 2 tabs as follows. On the User Keys tab, select the column(s) that make up the unique identifier of the source table. In my example, it is a single column: STATION_ID. Data Sync uses this column to match rows when updating the target dimension table incrementally, so a record that is updated in the source does not get duplicated in the target. On the Filters tab, select the date column that tracks the date/time of any inserts/updates on the source table. When running incrementally, Data Sync only extracts source table records that were inserted or updated after the last Data Sync job run time. Click OK.

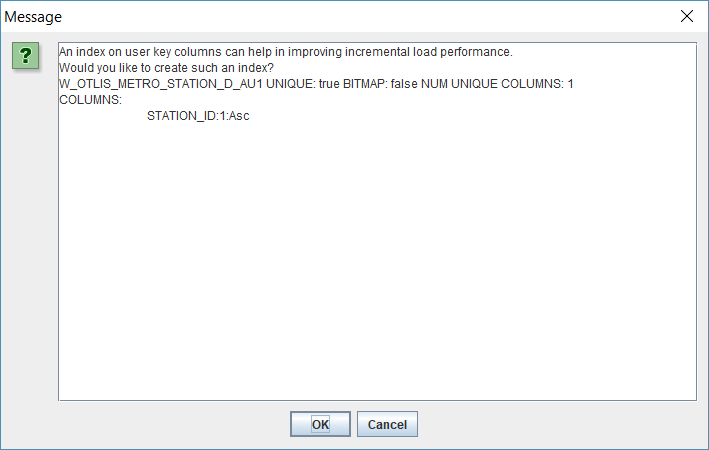
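To make the roles of the user key and the filter column concrete, here is a minimal Python sketch of the incremental behaviour described above. It is not how Data Sync is implemented; it only illustrates the idea using an in-memory SQLite database as a stand-in for the real source and target. The columns STATION_NAME and LAST_UPDATE_DATE, the index name, and the function `incremental_load` are hypothetical names chosen for this illustration; only the table names and STATION_ID come from the example.

```python
import sqlite3

# In-memory SQLite stands in for the real source and target databases.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE OTLIS_METRO_STATION (
    STATION_ID INTEGER PRIMARY KEY,
    STATION_NAME TEXT,          -- hypothetical attribute column
    LAST_UPDATE_DATE TEXT       -- hypothetical filter (last update) column
);
CREATE TABLE W_OTLIS_METRO_STATION_D (
    STATION_ID INTEGER,
    STATION_NAME TEXT,
    LAST_UPDATE_DATE TEXT
);
-- Unique index on the user key, similar in spirit to the one Data Sync offers to create.
CREATE UNIQUE INDEX W_OTLIS_METRO_STATION_D_U1
    ON W_OTLIS_METRO_STATION_D (STATION_ID);
""")


def incremental_load(conn, last_run_time):
    """Copy source rows changed after last_run_time, matching on STATION_ID."""
    changed = conn.execute(
        "SELECT STATION_ID, STATION_NAME, LAST_UPDATE_DATE "
        "FROM OTLIS_METRO_STATION WHERE LAST_UPDATE_DATE > ?",
        (last_run_time,),
    ).fetchall()
    for station_id, name, updated in changed:
        # Try to update the existing row identified by the user key.
        cur = conn.execute(
            "UPDATE W_OTLIS_METRO_STATION_D "
            "SET STATION_NAME = ?, LAST_UPDATE_DATE = ? WHERE STATION_ID = ?",
            (name, updated, station_id),
        )
        if cur.rowcount == 0:
            # No match on the user key, so this is a new record.
            conn.execute(
                "INSERT INTO W_OTLIS_METRO_STATION_D VALUES (?, ?, ?)",
                (station_id, name, updated),
            )
    return len(changed)


# First run: everything is newer than the initial "last run time".
conn.executemany(
    "INSERT INTO OTLIS_METRO_STATION VALUES (?, ?, ?)",
    [(1, "Central", "2024-01-01 08:00:00"), (2, "Harbor", "2024-01-01 08:00:00")],
)
first = incremental_load(conn, "1900-01-01 00:00:00")

# Later: one station is renamed and one is added in the source.
conn.execute(
    "UPDATE OTLIS_METRO_STATION SET STATION_NAME = 'Central Square', "
    "LAST_UPDATE_DATE = '2024-01-02 09:00:00' WHERE STATION_ID = 1"
)
conn.execute(
    "INSERT INTO OTLIS_METRO_STATION VALUES (3, 'Airport', '2024-01-02 09:30:00')"
)
second = incremental_load(conn, "2024-01-01 12:00:00")

rows = conn.execute(
    "SELECT STATION_ID, STATION_NAME FROM W_OTLIS_METRO_STATION_D ORDER BY STATION_ID"
).fetchall()
```

In the second run, only the two changed rows are extracted, and the renamed station is updated in place rather than duplicated because it matches on STATION_ID.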

- You should see a message like the following. Click OK to create the unique index on the user key. This index speeds up incremental loads because it covers the columns used as the matching criteria.

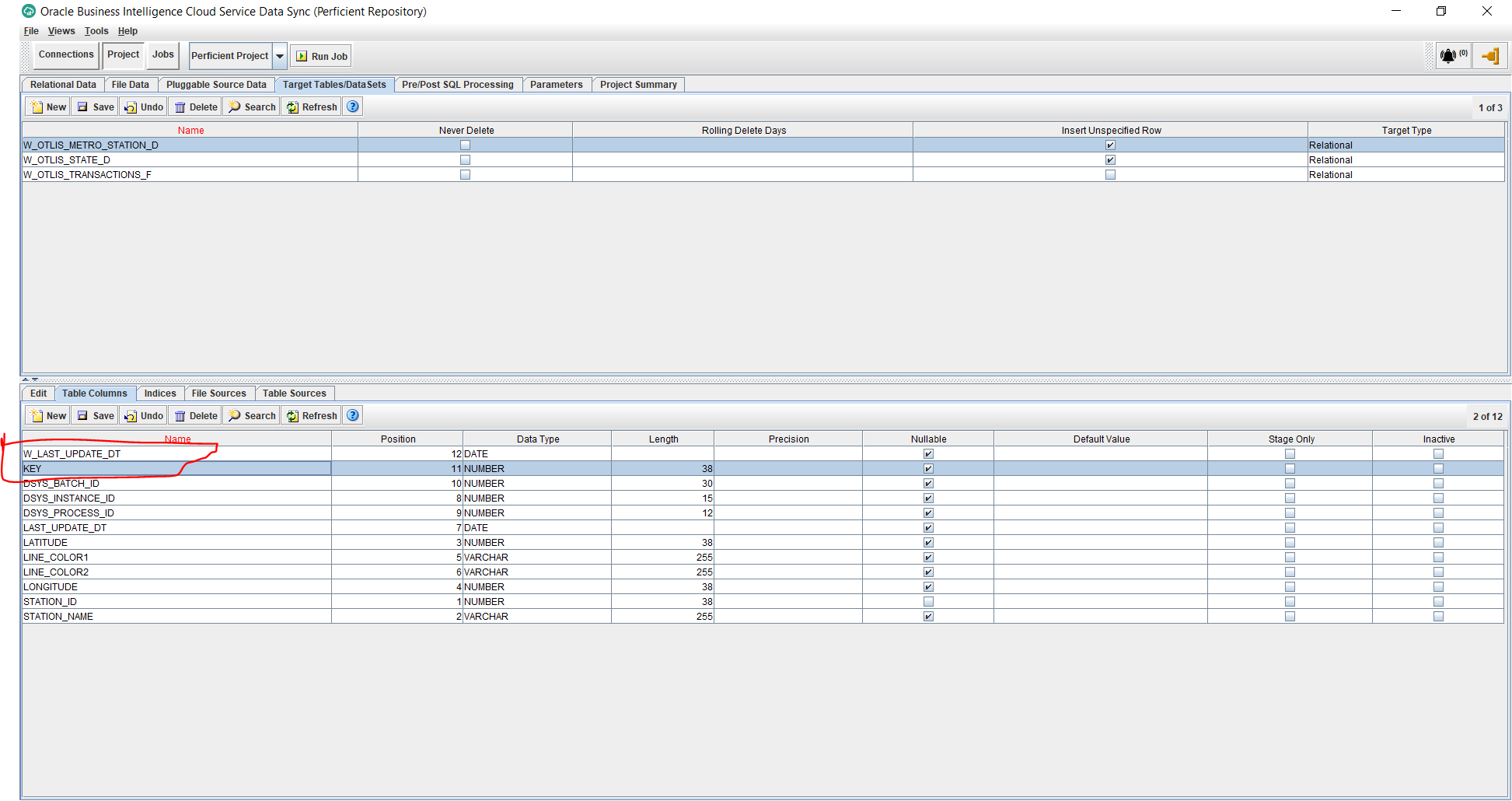

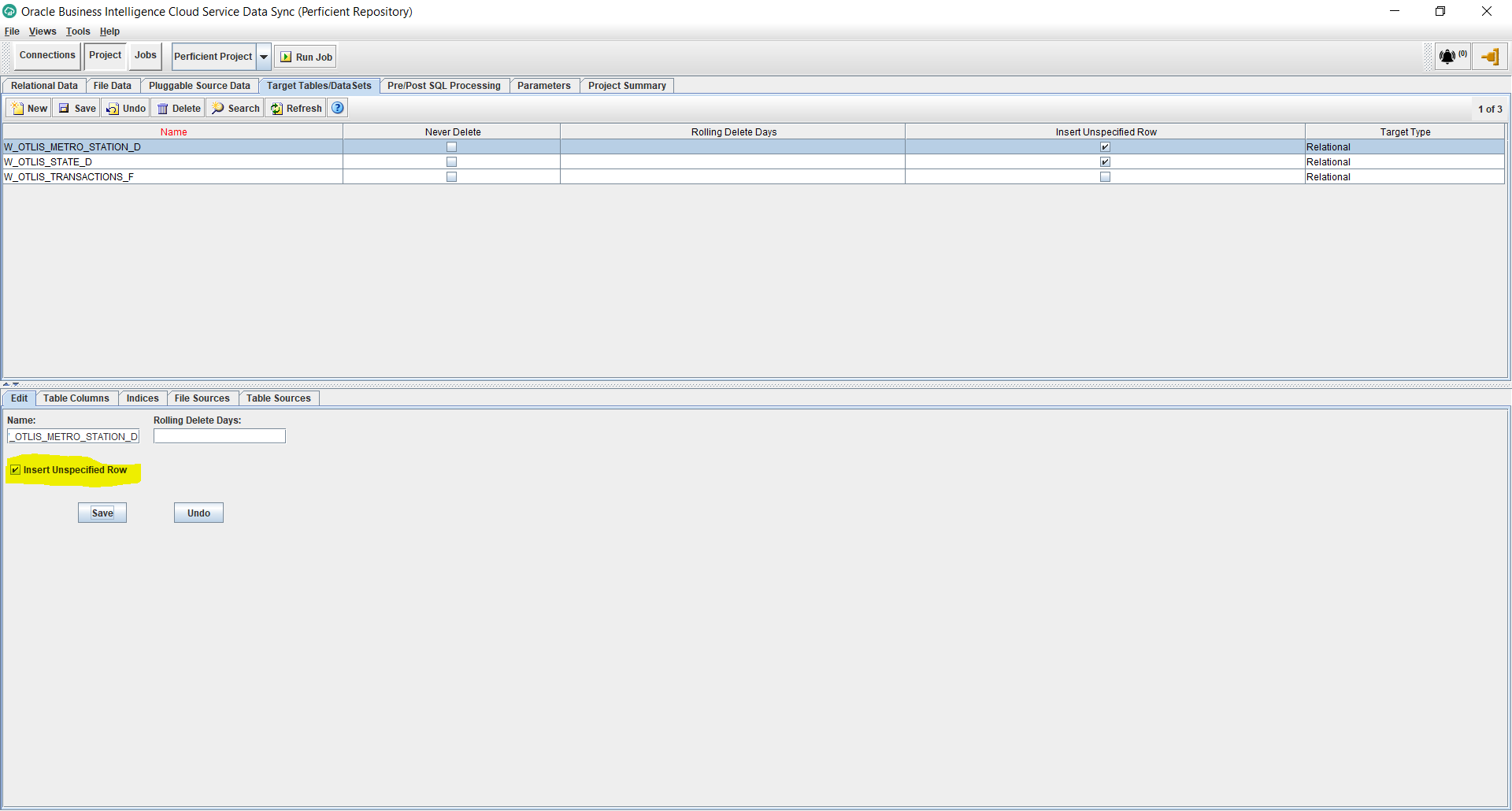

- Under Projects, click on the Target Tables/Data Sets tab, and select the newly added dimension table. Check the box: “Insert Unspecified Row”. This option inserts an “Unspecified” row with a primary key = 0 into the dimension. Fact table records whose dimension foreign keys don’t exist in the dimension are then loaded against the Unspecified dimension value instead of being lost.

- Still under Target Tables, select the newly created dimension table and then select the Table Columns tab from the bottom half of the screen. We will now add 2 standard columns to the dimension table. Click on the New button from the bottom half of the screen and add the following 2 columns:

- KEY – Data Type: NUMBER – Length: 38 – Uncheck Nullable

- W_LAST_UPDATE_DT – Data Type: Date – Uncheck Nullable

- Click on the Relational Data tab under Projects. Select the newly created task for the dimension. In the bottom half of the screen, click on the “Unmapped Columns” button. This will show you a window with the 2 columns you just created. Move them over to the right side under Selected Columns and click OK.

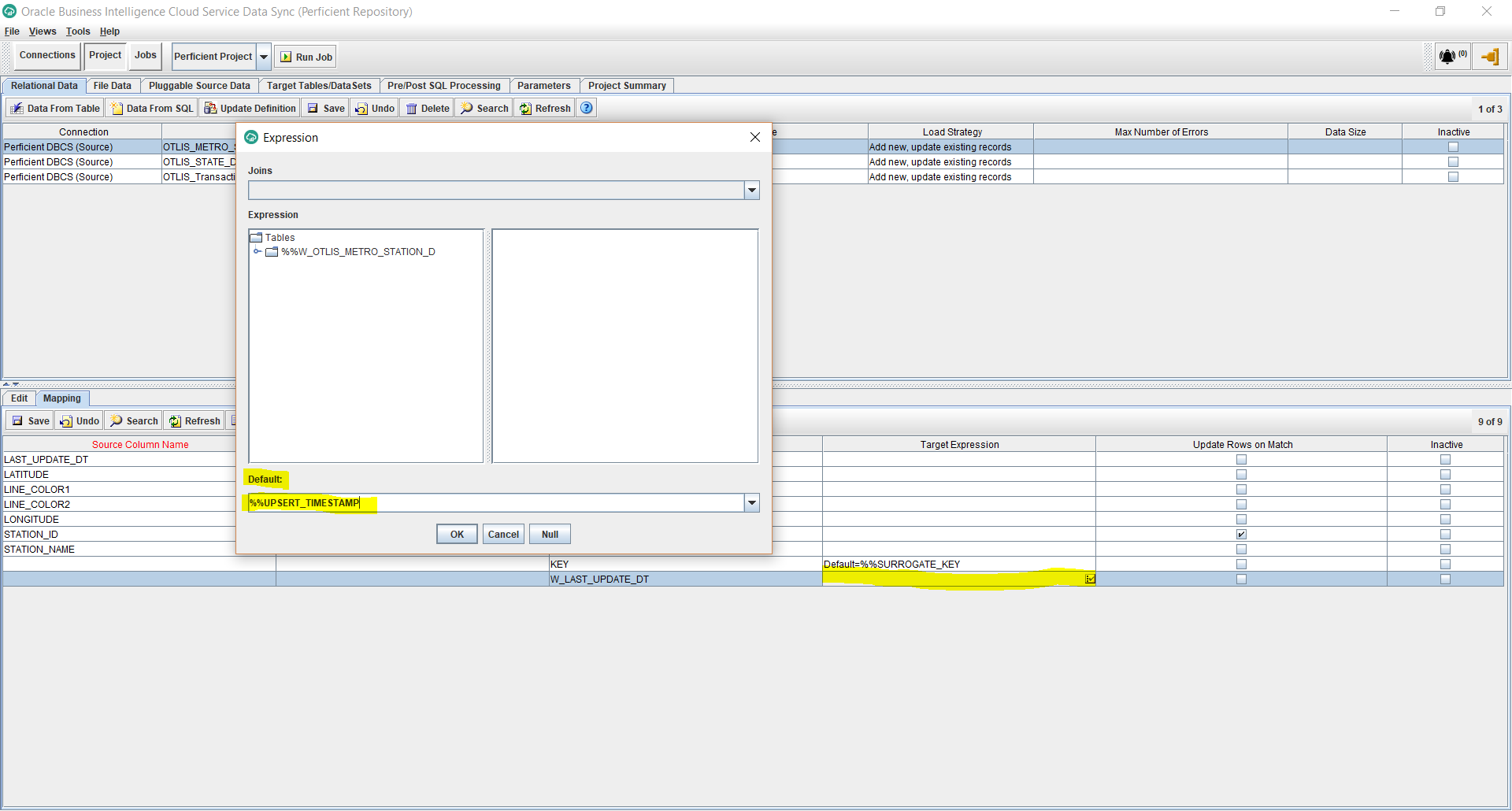

- Edit the Target Expression for the KEY column, and enter the Default as: %%SURROGATE_KEY. This will generate a unique numeric primary key for the new dimension table. Click OK.

- Edit the Target Expression for the W_LAST_UPDATE_DT column, and enter the Default as: %%UPSERT_TIMESTAMP. This will automatically populate the column with the date and time at which each row is inserted or updated in the target dimension table. Click OK.

- Click Save.

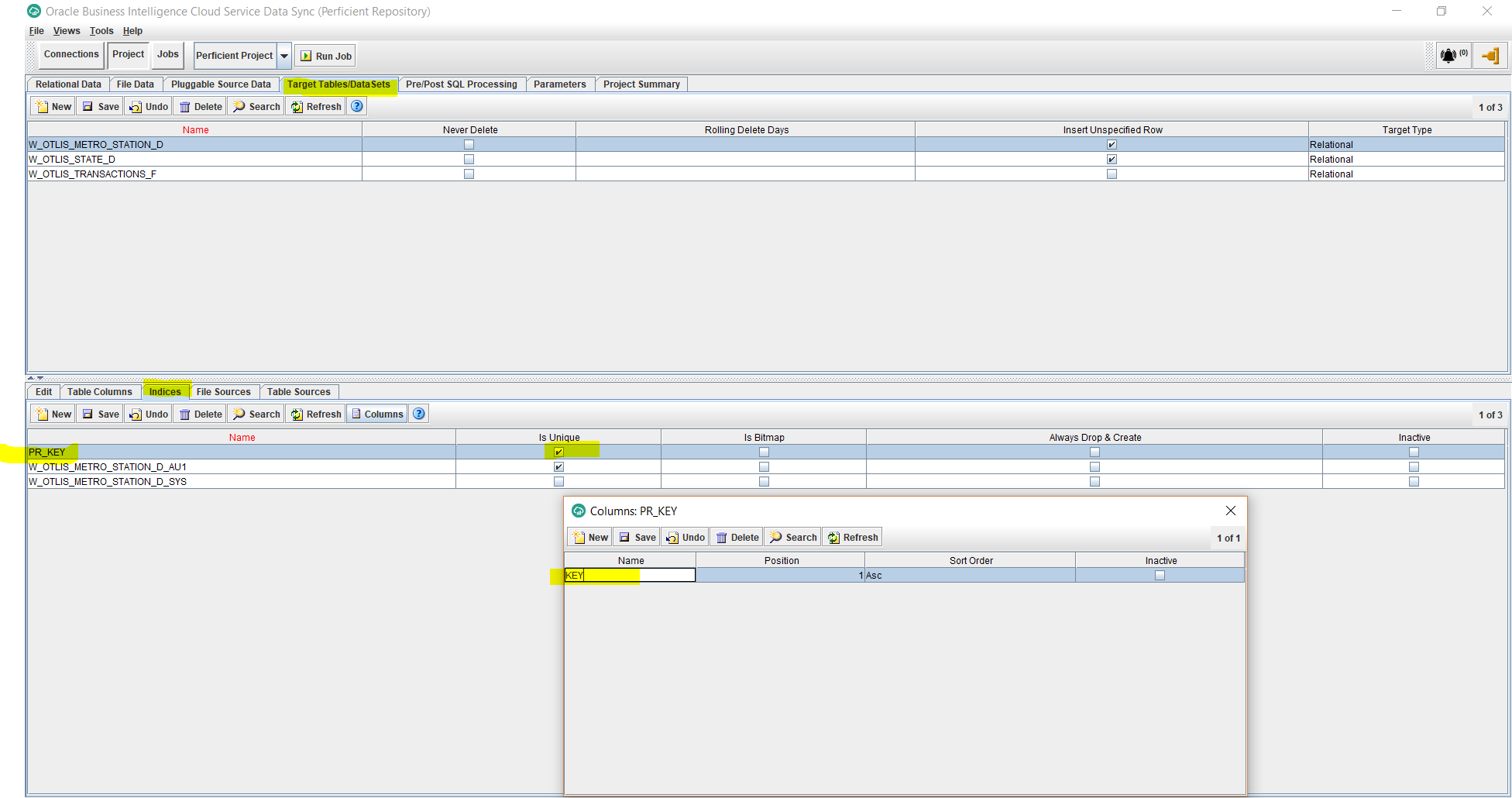
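The KEY column, the W_LAST_UPDATE_DT column, and the Unspecified row work together, and a small Python sketch may help show how. This is only an illustration of the concept, not Data Sync's actual logic: the functions `build_dimension` and `lookup_key`, the STATION_NAME attribute, and the assumption that surrogate keys are sequential integers starting at 1 are all mine.

```python
from datetime import datetime, timezone
from itertools import count

UNSPECIFIED_KEY = 0  # key of the "Unspecified" row, as described above


def build_dimension(source_rows, now=None):
    """Return dimension rows with a surrogate KEY and a load timestamp."""
    now = now or datetime.now(timezone.utc)
    next_key = count(start=1)  # assumption: sequential keys starting at 1
    # The Unspecified row comes first, with KEY = 0.
    dimension = [{
        "KEY": UNSPECIFIED_KEY,
        "STATION_ID": None,
        "STATION_NAME": "Unspecified",
        "W_LAST_UPDATE_DT": now,
    }]
    for row in source_rows:
        dimension.append({"KEY": next(next_key), **row, "W_LAST_UPDATE_DT": now})
    return dimension


def lookup_key(dimension, station_id):
    """Resolve a fact row's station to a dimension KEY, falling back to 0."""
    by_id = {
        r["STATION_ID"]: r["KEY"]
        for r in dimension
        if r["STATION_ID"] is not None
    }
    return by_id.get(station_id, UNSPECIFIED_KEY)


source = [
    {"STATION_ID": 101, "STATION_NAME": "Central"},
    {"STATION_ID": 102, "STATION_NAME": "Harbor"},
]
dim = build_dimension(source)

# A fact row for an unknown or missing station still gets a valid key.
fact_station_ids = [101, 999, None]
fact_keys = [lookup_key(dim, s) for s in fact_station_ids]
```

Here the fact rows for station 999 and for the missing station both resolve to key 0, so they would be kept and reported against “Unspecified” rather than dropped.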

- Under the Projects, Target Tables tab, select the newly added dimension table and then select Indices from the bottom half of the screen. We will add a primary key index. So click New and add a Unique Index by checking the Is Unique checkbox. Click Save. Then click on the Columns button to add the name of the column: KEY. This is the same column name added in the previous steps as the surrogate key of the dimension. Save the changes.

- That is it for adding the dimension task. We are now ready to run the job. It should create the new dimension table with its indices and populate the table with the source data. Check that the record count in the target dimension table matches the record count from the source, plus one additional row where KEY = 0 and the text attributes are “Unspecified”.

- Once the first job run is complete and validated, add some test incremental data to the source table and re-run the job. You should see the new changes reflected in the target dimension table.
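The record count check described above can be scripted. The following is a minimal sketch, assuming you can query both the source and the target through a Python database connection. SQLite is used here only as a stand-in so the example is self-contained, and `validate_dimension_load` and the STATION_NAME column are hypothetical names.

```python
import sqlite3


def validate_dimension_load(conn, source_table, target_table):
    """Check that target rows = source rows + 1 Unspecified row (KEY = 0)."""
    source_count = conn.execute(
        f"SELECT COUNT(*) FROM {source_table}"
    ).fetchone()[0]
    target_count = conn.execute(
        f"SELECT COUNT(*) FROM {target_table}"
    ).fetchone()[0]
    unspecified_count = conn.execute(
        f'SELECT COUNT(*) FROM {target_table} WHERE "KEY" = 0'
    ).fetchone()[0]
    return {
        "source_count": source_count,
        "target_count": target_count,
        "unspecified_count": unspecified_count,
        "ok": target_count == source_count + 1 and unspecified_count == 1,
    }


# Stand-in data: two source stations, loaded into the target with an Unspecified row.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE OTLIS_METRO_STATION (STATION_ID INTEGER, STATION_NAME TEXT);
CREATE TABLE W_OTLIS_METRO_STATION_D (
    "KEY" INTEGER, STATION_ID INTEGER, STATION_NAME TEXT
);
INSERT INTO OTLIS_METRO_STATION VALUES (101, 'Central'), (102, 'Harbor');
INSERT INTO W_OTLIS_METRO_STATION_D VALUES
    (0, NULL, 'Unspecified'), (1, 101, 'Central'), (2, 102, 'Harbor');
""")

result = validate_dimension_load(
    conn, "OTLIS_METRO_STATION", "W_OTLIS_METRO_STATION_D"
)
```

The table names are interpolated directly into the SQL, so only pass trusted, hard-coded names to a helper like this.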