Optimizely Configured Commerce

SEO is vital for any ecommerce application: a better search ranking brings more traffic to your website and, with it, more revenue opportunities. Optimizely Configured Commerce (formerly Optimizely B2B Commerce) ships with built-in SEO features and configuration settings that require no development work. If the native capabilities do not fit your needs, extension points are available so you can customize the behavior to achieve your desired outcome.

Generally, companies expose all of the content on their website to search engines to earn top hits. This includes static content pages such as About Us and Terms & Conditions as well as dynamic content pages such as the Product Catalog, Product Listing Pages (PLP), and Product Detail Pages (PDP). Search bots crawl these pages and add them to the search index so that they can appear in search results for relevant keywords.

PLP and PDP

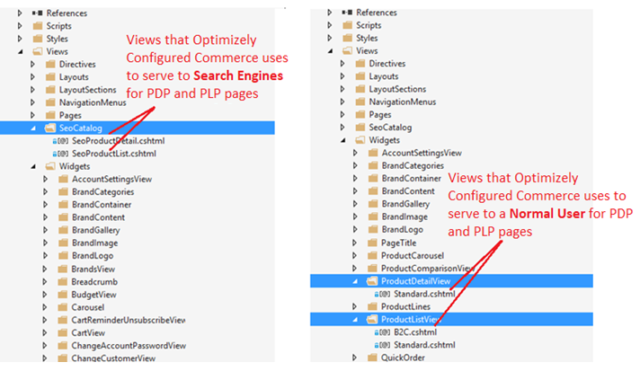

Optimizely Configured Commerce renders static pages on the server, so they can be exposed to search engines directly. Dynamic pages such as Product Listing and Product Detail pages are rendered on the client side (using AngularJS in Classic CMS and React in Spire CMS). They cannot be exposed to search engines directly, because indexing requires the content to be rendered on the server side. For this reason, Optimizely Configured Commerce maintains two variants of each PLP and PDP: one for normal users, which renders the page content dynamically on the client side to make the page load faster, and one for search bots, which is rendered entirely on the server side.

SearchCrawlerRouteConstraint

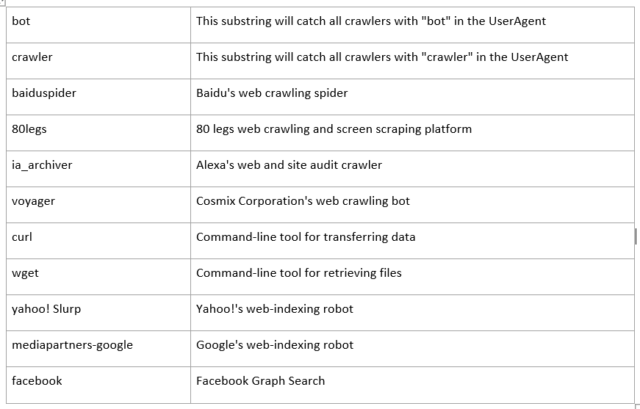

Optimizely Configured Commerce looks at the User-Agent string of the request sent to the web server to determine whether the request comes from a search bot or a regular user. The framework runs this check through a route constraint while resolving the route in the route collection. The check lives in the SearchCrawlerRouteConstraint class in the Insite.WebFramework.Mvc assembly. Its Match method returns a Boolean indicating whether the incoming request's User-Agent string contains any of the crawlers listed below.

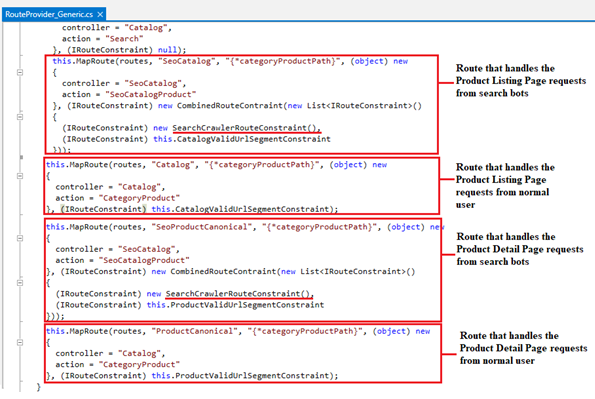
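To make the idea concrete, here is a minimal Python sketch of a User-Agent substring check. It is an illustration only, not the code of SearchCrawlerRouteConstraint: the function name `is_search_crawler` and the `CRAWLER_TOKENS` list are assumptions made for this example, and the platform's actual crawler list may differ.

```python
# Hypothetical crawler tokens for illustration; the platform's real list may differ.
CRAWLER_TOKENS = ("googlebot", "bingbot", "duckduckbot")


def is_search_crawler(user_agent):
    """Return True if the User-Agent string contains a known crawler token."""
    if not user_agent:
        # A missing or empty header is treated as a regular user.
        return False
    ua = user_agent.lower()  # match case-insensitively
    return any(token in ua for token in CRAWLER_TOKENS)


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

print(is_search_crawler(googlebot_ua))
print(is_search_crawler(browser_ua))
```

Because the check is a plain substring match on a request header, any client can trigger the crawler path by sending a crawler-like User-Agent, which is what the debugging steps later in this post rely on.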

The Optimizely Configured Commerce framework maintains two entries in the route collection for each of these page types (PLP and PDP).

In each pair, the first route handles requests from search bots, and the second route handles requests from normal users.

So, if the request is from a search bot, it is routed to the SEOCatalogController, which serves the SEO Catalog pages. If it is from a regular user, it is routed to the CatalogController.
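The ordering matters: the constrained route has to be tried first, with the unconstrained route acting as the fallback. The Python sketch below models that lookup under stated assumptions. Only the controller names SEOCatalogController and CatalogController come from this post; the `Route` class, the route names, and the crawler token list are hypothetical and do not reflect the framework's actual route table.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical crawler tokens for illustration only.
CRAWLER_TOKENS = ("googlebot", "bingbot")


def is_search_crawler(user_agent: str) -> bool:
    """Constraint: True when the User-Agent contains a crawler token."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in CRAWLER_TOKENS)


@dataclass
class Route:
    name: str
    controller: str
    # Optional predicate on the User-Agent; None means "always matches".
    constraint: Optional[Callable[[str], bool]] = None


# Order matters: the constrained SEO route is tried before the default route.
ROUTES = [
    Route("SeoCatalog", "SEOCatalogController", constraint=is_search_crawler),
    Route("Catalog", "CatalogController"),
]


def resolve_controller(user_agent: str) -> str:
    """Return the controller of the first route whose constraint passes."""
    for route in ROUTES:
        if route.constraint is None or route.constraint(user_agent):
            return route.controller
    raise LookupError("no route matched")


print(resolve_controller("Mozilla/5.0 (compatible; Googlebot/2.1)"))
print(resolve_controller("Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"))
```

If the two entries were swapped, the unconstrained route would match every request and the SEO controller would never be reached.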

Customize and Debug

Depending on your requirements, you may need to customize the SEO Catalog pages that search crawlers index, or add information to them. You can customize and debug these pages by following these steps:

- If your custom theme does not have a Views/SEOCatalog folder, copy it from the parent theme to your custom theme folder, maintaining the same folder hierarchy (Themes/<yourthemename>/Views/SEOCatalog).

- Make any required changes to these pages and save the files.

- Open your website in Chrome or your preferred browser and navigate to any product detail page.

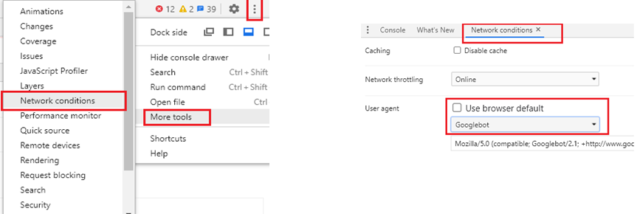

- Press F12 to open the browser's developer tools. (The steps from here may differ depending on the browser you are using.)

- Click the three vertical dots in the top right corner of the developer tools and select More tools -> Network conditions.

- In the Network conditions settings, uncheck the Use browser default checkbox under User agent. This enables the user agent dropdown list for manual selection.

- Select Googlebot from the user agent list and refresh your product detail page.

- The browser now shows the SEO version of the product detail page instead of the regular Product Details page.

- You can now see what data your Product Detail page exposes to search bots, and you can add or modify information on this page by customizing the SEOProductDetail.cshtml page.

- To debug, set a breakpoint anywhere in SEOProductDetail.cshtml and attach the debugger from your project solution to the running website.

- Refresh your product detail page in the browser, and the breakpoint should be hit.

If you use Google Chrome, you can add the User Agent Changer Chrome extension to easily change the browser’s user agent.
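The same comparison can be scripted instead of switching the user agent in the browser. The following Python sketch uses only the standard library. The helper names `build_request` and `fetch_html` are made up for this example, and it assumes your site is reachable from the machine running the script; you supply your own product detail page URL.

```python
import urllib.request

# User-Agent string that Googlebot identifies itself with.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url, user_agent=None):
    """Create a GET request, optionally overriding the User-Agent header."""
    headers = {"User-Agent": user_agent} if user_agent else {}
    return urllib.request.Request(url, headers=headers)


def fetch_html(url, user_agent=None, timeout=30):
    """Download a page and return its body as text."""
    request = build_request(url, user_agent)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Example usage (replace pdp_url with a product detail page URL on your site):
#   seo_html = fetch_html(pdp_url, GOOGLEBOT_UA)  # variant served to crawlers
#   default_html = fetch_html(pdp_url)            # variant served by default
#   print(len(seo_html), len(default_html))
```

Comparing the two responses shows whether the crawler variant is actually being served and whether your changes to the SEO views appear in it.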

Note – If you cannot find the SEOCatalog folder in either your custom theme or the Responsive theme (from the _SystemResources folder), please consider upgrading your project solution to the latest Optimizely versions.

Conclusion

By following these steps and harnessing the power of Optimizely Configured Commerce, you will be better positioned to customize and debug your SEO PDPs and PLPs.
