We have previously looked at the steps necessary to build a holiday calendar in DynamoDB. One advantage of tracking all your closure times in a database is that you can update when the call center should be available, modify the closure reasons or add a new holiday with minimal effort and no code changes. However, because of the way DynamoDB is structured, uploading a lot of items at once can be cumbersome. Here are, for example, the steps necessary to accomplish a batch upload using the AWS CLI.

To help with this and make it easier to automate maintaining your holiday calendar, we will walk you through a few Lambda functions that can bulk upload data from S3. This will be a two-part blog post: this part focuses on uploading a JSON file specific to the holiday calendar, while the second part will cover uploading a CSV file.

1. Prerequisites

Before we can look at the code itself, there are a few items that need to be configured. You will need a DynamoDB table to write the dates into, an S3 bucket to store the input data and a Lambda role that can interact with both.

Get started by creating a DynamoDB table and an S3 bucket. Make sure they are in the same region as the Lambda function, and name them anything that makes sense for your environment. Also make sure the DynamoDB table's primary key is of type Number. To make things easier later on, name the primary key dateStart, or update the code below to match your key name.
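As a rough illustration of the table described above, the parameters below could be passed to the DynamoDB createTable call. This is only a sketch under assumptions: the table name HolidayCalendar and the on-demand billing mode are made up for the example, and only the numeric dateStart key comes from this post.

```javascript
// Builds CreateTable parameters for a table keyed on a numeric dateStart.
// The table name and billing mode used below are illustrative assumptions.
function buildTableParams(tableName) {
  return {
    TableName: tableName,
    // dateStart is the partition (HASH) key...
    KeySchema: [{ AttributeName: 'dateStart', KeyType: 'HASH' }],
    // ...and it is stored as a Number ("N").
    AttributeDefinitions: [{ AttributeName: 'dateStart', AttributeType: 'N' }],
    BillingMode: 'PAY_PER_REQUEST'
  };
}

const tableParams = buildTableParams('HolidayCalendar');
console.log(JSON.stringify(tableParams, null, 2));
```

The same settings can of course be entered by hand in the DynamoDB console; the point is simply that the key is named dateStart and typed as a number.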

Once your resources are created, navigate to IAM and set up a role for Lambda. For a function to update your DynamoDB table, the role must have the “dynamodb:PutItem” permission on the table and the “s3:Get*” and “s3:List*” permissions on the S3 bucket we will use to upload our files. I recommend creating two separate policies, each granting access only to the relevant resource.

For example, in my environment the role used by Lambda has a “DynamoDBPutAccess” policy that looks like this:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": [
            "dynamodb:PutItem"
          ],
          "Resource": [
            "arn:aws:dynamodb:us-east-1:553456133668:table/DYNAMODBTABLENAME"
          ],
          "Effect": "Allow"
        }
      ]
    }

As well as a “S3ReadAccess” policy that looks like this:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": [
            "s3:Get*",
            "s3:List*"
          ],
          "Resource": "arn:aws:s3:::UPLOADBUCKET/*",
          "Effect": "Allow"
        }
      ]
    }

As always when working with Lambda it also makes sense to grant your role a policy that will allow it to interact with CloudWatch and write logs.

Once you have your Lambda role it’s time to decide what kind of data you will upload.

2. Uploading a JSON file from S3

The easiest way to upload data into our holiday calendar is to start from a JSON file. This requires the least conversion, and we can use the built-in JSON functions.

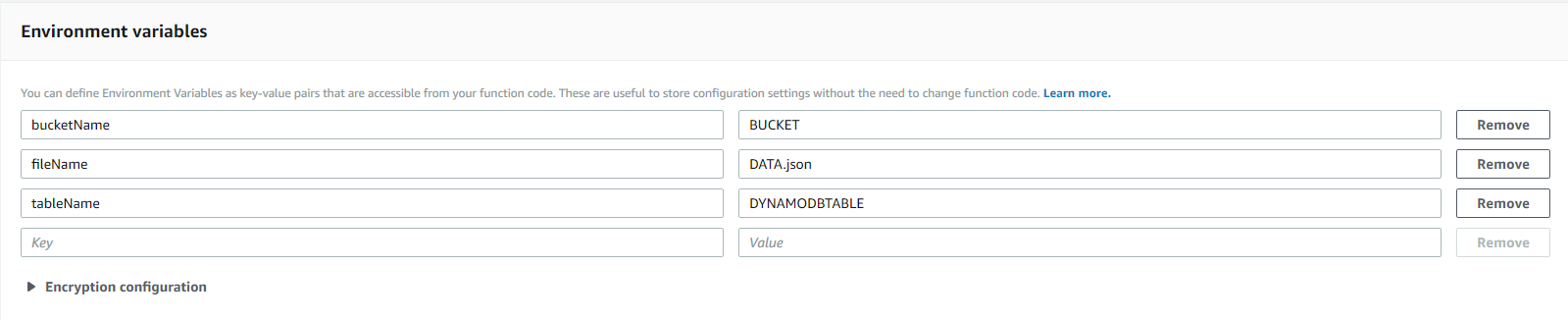

Start by declaring the resources we will use and loading two environment variables, “bucketName” and “fileName”. These identify the source of our holiday file, so don’t forget to create both variables in your Lambda environment. Alternatively, you can hardcode the path. Keep in mind that the way variables are used in the code below is at times unnecessarily verbose, in the hope of making it easier to follow.

    const AWS = require('aws-sdk');
    const s3 = new AWS.S3();
    const docClient = new AWS.DynamoDB.DocumentClient({region: 'us-east-1'});

    exports.handler = (event, context, callback) => {
        // Location of the holiday file, taken from the environment variables.
        const bucketName = process.env.bucketName;
        const keyName = process.env.fileName;
        readFile(bucketName, keyName, readFileContent);
    };
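Because the function depends entirely on its environment variables, it can help to fail early with a clear message when one is missing. The helper below is a hypothetical addition, not part of the original function; it also checks tableName, which the code reads further down in this post, and the values passed in are placeholders.

```javascript
// Reads the settings the function relies on from an environment-like object
// and fails fast if any of them is missing or empty.
// loadConfig is a hypothetical helper, not part of the original code.
function loadConfig(env) {
  const required = ['bucketName', 'fileName', 'tableName'];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error('Missing environment variables: ' + missing.join(', '));
  }
  return {
    bucketName: env.bucketName,
    fileName: env.fileName,
    tableName: env.tableName
  };
}

// Example with a stand-in object; inside Lambda this would be process.env.
const config = loadConfig({
  bucketName: 'UPLOADBUCKET',
  fileName: 'holidays.json',
  tableName: 'HolidayCalendar'
});
console.log(config);
```

Calling loadConfig(process.env) at the top of the handler would surface a misconfigured function in the logs before any S3 or DynamoDB call is attempted.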

After declaring all our variables we call a readFile function that takes in our bucket, our file name and a function to be called if we successfully find data.

    function readFile(bucketName, fileName, onFileContent) {
        const params = { Bucket: bucketName, Key: fileName };
        s3.getObject(params, function (err, data) {
            if (!err) {
                // Pass the file name and its contents (as a string) to the callback.
                onFileContent(fileName, data.Body.toString());
            } else {
                console.error("Unable to find data object. Error JSON:", JSON.stringify(err, null, 2));
            }
        });
    }

We use the S3 getObject method to pull in the data, then either log any error we encounter or pass the contents of the file to our readFileContent function.

    function readFileContent(filename, content) {
        let jsonContent = JSON.parse(content);
        // Each top-level key (holiday1, holiday2, ...) holds one holiday entry.
        for (let i in jsonContent) {
            let holidayStart = jsonContent[i]['holidayStart'];
            let holidayEnd = jsonContent[i]['holidayEnd'];
            // Convert the date strings to epoch timestamps in milliseconds.
            let dateStart = new Date(holidayStart).getTime();
            let dateEnd = new Date(holidayEnd).getTime();
            let reason = jsonContent[i]['reason'];
            let params = {
                TableName: process.env.tableName,
                Item: {
                    "dateStart": dateStart,
                    "dateEnd": dateEnd,
                    "reason": reason,
                    "holidayStart": holidayStart,
                    "holidayEnd": holidayEnd
                }
            };
            addData(params);
        }
    }

This is where the data from our JSON file is actually parsed and mapped to the right attributes in DynamoDB. We are assuming the DynamoDB table you created has dateStart as the primary key and the JSON file you will use has the following format:

    {
      "holiday1": {
        "holidayStart" : "August 10, 2018 00:00 AM GMT+09:00",
        "holidayEnd" : "August 10, 2018 11:59:00 PM GMT+09:00",
        "reason" : "Test holiday"
      },
      "holiday2":{...},
      "holiday3":{...}
    }

As you can tell, our function converts the date in holidayStart to an epoch timestamp (in milliseconds) and writes it into dateStart, but it also uploads the original date string so you can easily tell which dates have already been entered. Finally, we also store a reason that Amazon Connect can use to dynamically inform the caller why the call center is closed.

Something else to note in the params variable we build is that it tells our function which table to update. Make sure you add an environment variable named tableName and set it to the name of your DynamoDB table.
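To make the conversion concrete, here is a minimal sketch of turning one entry into the item that gets written. The helper name toHolidayItem and the validation step are assumptions added for illustration. The example also uses ISO 8601 date strings rather than the format shown in this post, because ISO 8601 is the one format every JavaScript engine is required to parse the same way.

```javascript
// Converts one entry from the holiday file into the item written to DynamoDB.
// Throws if either date string cannot be parsed, so a bad row is never stored
// with NaN as its key. toHolidayItem is a hypothetical helper.
function toHolidayItem(entry) {
  const dateStart = new Date(entry.holidayStart).getTime();
  const dateEnd = new Date(entry.holidayEnd).getTime();
  if (Number.isNaN(dateStart) || Number.isNaN(dateEnd)) {
    throw new Error('Unparseable date in entry: ' + JSON.stringify(entry));
  }
  return {
    dateStart: dateStart,
    dateEnd: dateEnd,
    reason: entry.reason,
    holidayStart: entry.holidayStart,
    holidayEnd: entry.holidayEnd
  };
}

// ISO 8601 strings are an assumption here, not the format used in the post.
const item = toHolidayItem({
  holidayStart: '2018-08-10T00:00:00+09:00',
  holidayEnd: '2018-08-10T23:59:00+09:00',
  reason: 'Test holiday'
});
console.log(item.dateStart); // 1533826800000
```

Midnight on August 10, 2018 at GMT+09:00 is 15:00 UTC on August 9, which is why the timestamp falls on the previous UTC day.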

Now that the data has been collected and parsed into the right format, and we know where it needs to live, we just need to add it to the DynamoDB table. To do this, we invoke the addData function after reading each element in the JSON file. This function uses the DynamoDB document client to put an item into our table, or logs more details if we hit an error.

    function addData(params) {
        docClient.put(params, function(err, data) {
            if (err) {
                console.error("Unable to add item. Error JSON:", JSON.stringify(err, null, 2));
            } else {
                console.log("Added item:", JSON.stringify(params.Item, null, 2));
            }
        });
    }
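If you want to try the write step without touching a real table, one option is to pass the client in as an argument so a stub can stand in for it. The sketch below is a variation on addData under that assumption; addDataWith, stubClient and the tally callback are all hypothetical names, and the stub only imitates the put(params, callback) shape of the document client.

```javascript
// Same idea as addData, but the client is passed in and the outcome is
// reported through a callback so the caller can keep a tally.
function addDataWith(client, params, done) {
  client.put(params, function (err) {
    if (err) {
      console.error('Unable to add item:', err.message);
      done(false);
    } else {
      console.log('Added item:', JSON.stringify(params.Item, null, 2));
      done(true);
    }
  });
}

// Hypothetical stub standing in for the DynamoDB document client: it rejects
// items without a numeric dateStart and accepts everything else.
const stubClient = {
  put: function (params, callback) {
    if (typeof params.Item.dateStart !== 'number') {
      callback(new Error('dateStart must be a number'));
    } else {
      callback(null, {});
    }
  }
};

const results = { added: 0, failed: 0 };
const tally = (ok) => { if (ok) results.added += 1; else results.failed += 1; };

addDataWith(stubClient, { TableName: 'HolidayCalendar', Item: { dateStart: 1533826800000 } }, tally);
addDataWith(stubClient, { TableName: 'HolidayCalendar', Item: { dateStart: 'oops' } }, tally);
console.log(results);
```

In the Lambda itself the first argument would be the real docClient, and the tally gives a quick summary of how many holidays were written versus rejected.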

That should be all the code needed. Make sure you create the three environment variables (bucketName, fileName and tableName) and point them to the right resources, and you should be ready to upload your JSON file into S3.

Typically I would recommend setting a trigger on the S3 bucket that invokes this function whenever an object is created, but you can also just run it manually with an empty JSON event and your table should populate.

Using JSON might not be the most convenient way to enter holiday data, so in the second part of this series we take a look at uploading data from a CSV file. We will use the core concepts detailed here, but we will need to parse the CSV into a format Node.js can work with.
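When the function is invoked by an S3 trigger, the event itself names the bucket and object that were just created, so the handler does not have to rely only on the environment variables. The sketch below shows one way to support both the trigger and a manual run; resolveSource is a hypothetical helper and the bucket and file names are placeholders.

```javascript
// Picks the bucket and key from an S3 "object created" event when one is
// present, and falls back to the environment variables for manual runs.
// resolveSource is a hypothetical helper, not part of the original function.
function resolveSource(event, env) {
  const record = event && event.Records && event.Records[0];
  if (record && record.s3) {
    return {
      bucketName: record.s3.bucket.name,
      // Keys arrive URL-encoded in S3 notifications, with spaces as "+".
      fileName: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '))
    };
  }
  return { bucketName: env.bucketName, fileName: env.fileName };
}

const fromTrigger = resolveSource(
  { Records: [{ s3: { bucket: { name: 'UPLOADBUCKET' }, object: { key: 'holidays+2018.json' } } }] },
  {}
);
const fromManualRun = resolveSource({}, { bucketName: 'UPLOADBUCKET', fileName: 'holidays.json' });
console.log(fromTrigger.fileName);   // holidays 2018.json
console.log(fromManualRun.fileName); // holidays.json
```

Inside the handler this would be called as resolveSource(event, process.env) before readFile, so an empty test event still reads the file named in the environment variables.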