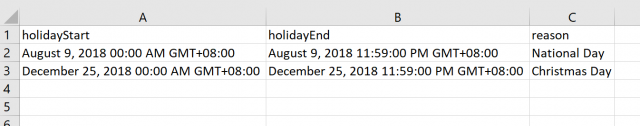

We’ve previously detailed the steps necessary to build a holiday calendar and looked at how we can easily upload all your holidays at once. However, so far we’ve only worked with JSON, which is an easy to understand format for Node.js, but not necessarily the most intuitive for a human reader. To avoid making mistakes you might want to use a CSV file with dedicated headers for your items. In this blog post we will show you how to set up a Lambda function that can parse a table similar to the screenshot below.

1.Prerequisites

To get started you will need to deploy most of the prerequisites detailed in the two blog posts linked at the top of this article. We won’t walk through creating a DynamoDB or how to configure a Lambda function that can read it, both both of these steps are necessary for the full holiday calendar solution to work.

You will also need to prepare a deployment package for Lambda. We will need to do this because unfortunately Node.js doesn’t have an easy way to parse CSV files so we will use an external package to help us. For this blog post I chose to use csvtojson, but numerous other modules are available and the steps to set everything up will be very similar.

If you are already familiar with installing a node package feel free to skip to the code section below, otherwise please follow along.

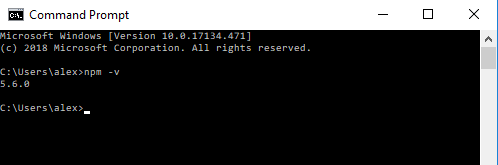

To get started install Node.js on your local machine. You can find the instructions to do so here. Simply grab the most recent version and run the installer. This will also install npm, which is what we need in order to set up the csvtojson package. Once Node is installed open a command line terminal and type npm -v. You should see the version of npm you have installed.

This means everything we need is installed and we can move on. If you get an error try to follow the installation steps again.

Once you have everything working navigate to a new folder and run the following command: npm init -y. You should see something similar to the following screen.

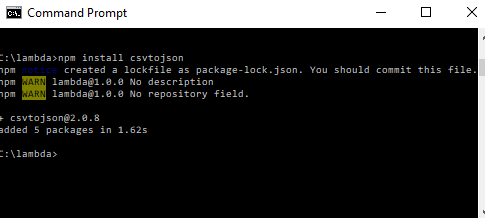

This will set everything up so you can run the next command which will actually download the csvtojson package. Simply run: npm install csvtojson

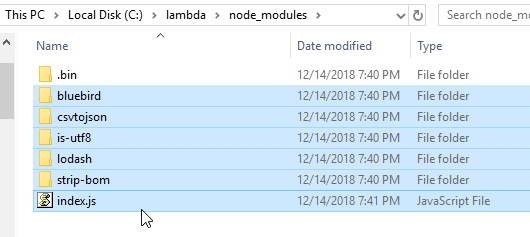

This will download the csvtojson package and you can now use file explorer to navigate to the folder you set up earlier. You should now see a folder named node_modules, go ahead and create a new file and name it index.js.

Select everything except the .bin folder and archive it into a zip file. This will be your deployment package and it should now be ready to upload into Lambda.

2.Uploading a CSV file from S3

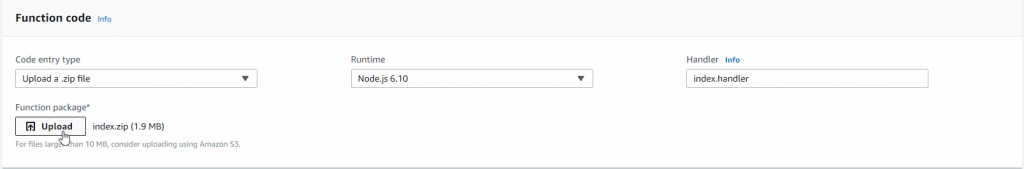

Now that we have all the basic steps in place, navigate to AWS Lambda and select “create a new function”. Name it something that will make sense, select Node.js 6.10 and use a role that has access to S3 and DynamoDB. If you need help creating such a role, check out our post on managing your holiday calendar.

Once everything looks good create the function and under Code entry type select upload a. zip file. Upload the deployment package containing the csvtojson package and your empty index.js file.

Once the package is uploaded you can open index.js file and start adding code. For the most part we will re-use the code we previously wrote to upload data from a JSON file. However, there are a few small changes that will allow us to stream each row of the CSV file and convert it to JSON so we can push it into DynamoDB.

We previously used the S3 getObject method to select entries in our S3 bucket. This time around we will use the getObject and create a read stream. This will allow us to stream raw data to the csvtojson object which will in turn parse each row to JSON. Finally, we will use the same addData fucntion we used previously to update our DynamoDB table.

Here is the code in it’s entirety. Just paste it into the index file, save the function and you should be ready to test uploading a CSV file.

const AWS = require('aws-sdk');

const s3 = new AWS.S3();

const docClient = new AWS.DynamoDB.DocumentClient({region: 'us-east-1'});

exports.handler = (event, context) => {

const bucketName = process.env.bucketName;

const keyName = process.env.key;

const params = { Bucket: bucketName, Key: keyName };

const csv=require('csvtojson');

//grab the csv file from s3

const s3Stream = s3.getObject(params).createReadStream()

csv().fromStream(s3Stream)

.on('data', (row) => {

//read each row

let jsonContent = JSON.parse(row);

console.log(JSON.stringify(jsonContent));

//push each row into DynamoDB

let paramsToPush = {

TableName:process.env.tableName,

Item:{

"dateStart" :new Date(jsonContent.holidayStart).getTime(),

"dateEnd":new Date(jsonContent.holidayEnd).getTime(),

"reason":jsonContent.reason,

"holidayStart":jsonContent.holidayStart,

"holidayEnd":jsonContent.holidayEnd

}

};

addData(paramsToPush);

});

};

function addData(params) {

console.log("Adding a new item based on: ");

docClient.put(params, function(err, data) {

if (err) {

console.error("Unable to add item. Error JSON:", JSON.stringify(err, null, 2));

} else {

console.log("Added item:", JSON.stringify(params.Item, null, 2));

}

});

}

Once you save this code in your function make sure you create the 3 environmental variables pointing to the bucket, the file and the DynamoDB table. Once that is complete you should be ready to upload your JSON file into S3. You can of course configure a trigger on the bucket for any new objects or simply run this function with an empty test event.

Hopefully this will help you better manage your DynamoDB holiday calendar and easily upload changes. For assistance configuring this solution or help with any Amazon Connect related topic please Request an Amazon Connect Demo.