Gone are the days when several spreadsheets, pen and paper, and calculators are needed to reconcile financial reports. A common challenge in financial reporting is validating that what gets reported on executive dashboards matches with more detailed financial statements and transaction level reporting. What aggravates this endeavor is the fact that executive level dashboards are typically generated from different systems than what is used for transactional reporting. For instance, your Profit & Loss Statement is most likely generated from a financial consolidation application like Hyperion Financial Management. Such high level statements tend to be consolidated at a General Ledger (GL) Account level which leaves you in the dark when you want to understand where a certain metric like Net Sales or COGs is coming from. Getting to the detailed information you need usually means you have to switch over to a totally different application, your ERP system, to collect transactional level data such as the customers, regions, or projects that make up a figure on your P&L. Ideally you want to be able to drill down and identify discrepancies at the click of a button, irrespective of where the data is located. In the rest of this blog, I aim to show you how this happens with Oracle’s Business Intelligence Cloud Service (BICS).

The Consolidation Application (e.g. Oracle HFM)

The key to the solution is identifying the dimensional commonalities between the different source applications. And this can scale out to include any number of source systems, but for the sake of simplicity, I will assume that we have a case where a P&L statement is generated out of an Oracle HFM (Hyperion Financial Management) application, while the transactional AR, AP and the rest of the GL data is sourced from a separate ERP application. I want to be able to trace back a GL Account in HFM to the corresponding transactions in the ERP system. To do that, I break down the GL account to its constituent segments. Let’s take the example of a GL account consisting of 3 segments as follows: Company-Cost Center-Department. This means I have these 3 dimensions that should allow me to link back to where an HFM piece of information is coming from.

The Source Transactional Systems (e.g. ERP Application)

Once a list of dimensions from the GL account segments has been identified from the consolidation application, the next step is to map these dimensions back to the ERP source system. This may mean that for Receivable accounts I have to investigate the Accounts Receivables tables and identify a mapping criteria in these tables for each of the 3 dimensions. For Sales figures, I may want to look at invoicing tables and so forth. Once all the data sources that feed into HFM have been identified, it’s now time to model the data structures and load these data sets into BICS.

BI Cloud Data Model

Here is when things start getting a little technical. If you’ve worked with data warehouses before, BICS follows a star schema data model. You can add several star models and have the dimensions conform across the different facts. So to follow through from my above example, I have to have at least 2 stars (2 facts). I say at least 2, because there may be a need to develop multiple facts to capture all the ERP system data to drill down to, while in this example I assumed one fact for AR Invoices.

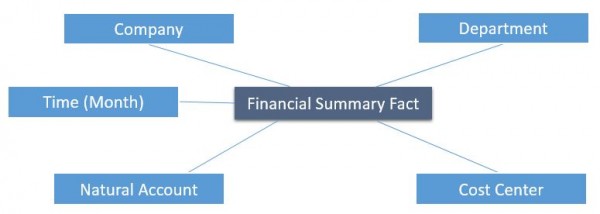

- Financial Summary Fact: This is what gets loads from HFM. In addition to actuals, you may want to also load budgets and forecasts whether from HFM or another planning application like from Hyperion Planning and Essbase. Plans and forecasts can be loaded as well into the same fact in BICS. And all these data loads can be automated but this may be something to go through into more detail in another blog. For the sake of this posting, I have loaded a fact table with consolidated financial data and created a star model consisting of a Financial Summary Fact table and the 4 dimensions: Time, Company, Cost Center and Department.

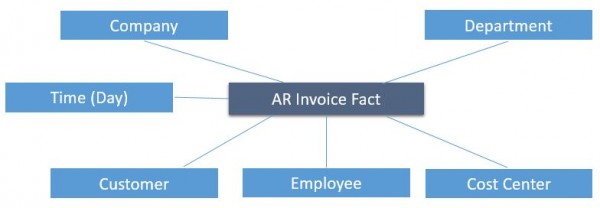

- AR Invoice Fact: This is now transactional data that is sourced from the ERP application and more specifically the transaction table of AR invoice lines. This is expected to be a very detailed level of information that includes the date of an invoice, the customer and other such relevant attributes. Getting more dimensions into BICS enables drilling down to view these attributes and therefore identify where the anomalies are and which ERP transactions require adjustments/corrections to arrive at accurate and complete financial reporting. Here I end up with a second star model consisting of the AR Invoice Fact table and several dimensions, which in addition to the 4 dimensions that conform to the Financial Summary Fact, also support new dimensions such as Time at day level, customer, employee, etc…

Drill-down Functionality

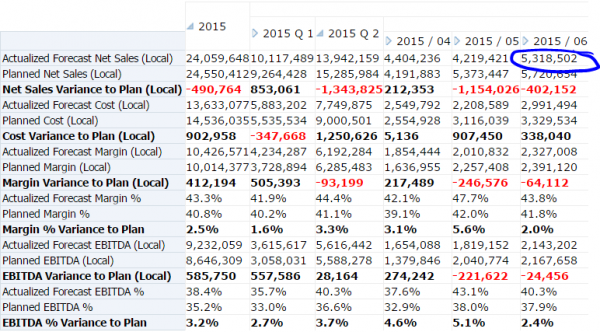

- Financial Summary Report: After data gets loaded into the BICS star data models, it’s time to think about the drilldown flow. In my example, the Financial Summary report is the starting point. I have consolidated financial data grouped by the different account categories. A powerful feature in BICS is its hierarchical dimension capability which allows expanding for example a Margin measure and seeing what the underlying Sales and COGS figures are. Each measure can further be expanded repetitively to get to the lowest level which is the natural account number as recorded in GL.

- Reconciliation Report: My use case now is to validate the Net Sales measure. So I click on 2015 / 06 on the 5,318,502 amount from the above Financial Summary Report. This action navigates to a Reconciliation Report that displays the Net Sales figure derived from both the Financial Summary Fact and the AR Invoice Fact and the difference between the two. In other words, I am able to see the difference in how Net Sales is calculated from both HFM and the ERP System. In an ideal situation, you would expect these to match, however, due to manual adjustments that may be applied or special business practices, these measures can be expected to differ. And that is fine as long as you know that the difference is accounted for and is justifiable. For instance, the reconciliation report below already accounts for the known anomalies between the Hyperion Sales measure and the ERP Sales measure. One of the reasons for the deviation in this case are Discounts & Allowances. The ERP System Net Sales measure and everything above it are obtained from the AR Invoice Fact while the Hyperion Actual Net Sales is from the Financial Summary Fact. Any non-zero variance means there is a non-accounted for difference that needs further attention. I can drill down further on the -71,861 Discounts & Allowances to get to a transaction level report.

- Transaction Detail Report: This report is built entirely from the ERP sourced model, AR Invoice Fact and serves as a drill-to report from each of the AR Invoice Fact measures on the Reconciliation Report. On this report we have all the dimensional attributes that exist in the ERP System but not in HFM.

What I presented here may be a simple example of how to trace a metric on a financial summary report back to its transactional source, but with leveraging Oracle BICS and with the same design concept you can expand on this to cover more than 2 data source systems and more complicated metrics.