User engagement often decides whether a piece of software succeeds. Whether on websites or in mobile applications, users expect engaging, personalized experiences. In this project, we implement a DevSecOps workflow for deploying an OpenAI Chatbot UI, using Kubernetes (EKS) for container orchestration, Jenkins for Continuous Integration/Continuous Deployment (CI/CD), and Docker for containerization.

What is ChatBOT?

A ChatBOT is an artificial intelligence-driven conversational interface that draws from vast datasets of human conversations for training. Through sophisticated natural language processing methods, it comprehends user inquiries and furnishes responses akin to human conversation. By emulating the nuances of human language, ChatBOTs elevate user interaction, offering tailored assistance and boosting engagement levels.

What Makes ChatBOTs a Compelling Choice?

The rationale behind opting for ChatBOTs lies in their ability to revolutionize user interaction and support processes. By harnessing artificial intelligence and natural language processing, ChatBOTs offer instantaneous and personalized responses to user inquiries. This not only enhances user engagement but also streamlines customer service, reduces response times, and alleviates the burden on human operators. Moreover, ChatBOTs can operate round the clock, catering to users’ needs at any time, thus ensuring a seamless and efficient interaction experience. Overall, the adoption of ChatBOT technology represents a strategic move towards improving user satisfaction, operational efficiency, and overall business productivity.

Key Features of a ChatBOT Include:

- Natural Language Processing (NLP): ChatBOTs leverage NLP techniques to understand and interpret user queries expressed in natural language, enabling them to provide relevant responses.

- Conversational Interface: ChatBOTs utilize a conversational interface to engage with users in human-like conversations, facilitating smooth communication and interaction.

- Personalization: ChatBOTs can tailor responses and recommendations based on user preferences, past interactions, and contextual information, providing a personalized experience.

- Multi-channel Support: ChatBOTs are designed to operate across various communication channels, including websites, messaging platforms, mobile apps, and voice assistants, ensuring accessibility for users.

- Integration Capabilities: ChatBOTs can integrate with existing systems, databases, and third-party services, enabling them to access and retrieve relevant information to assist users effectively.

- Continuous Learning: ChatBOTs employ machine learning algorithms to continuously learn from user interactions and improve their understanding and performance over time, enhancing their effectiveness.

- Scalability: ChatBOTs are scalable and capable of handling a large volume of concurrent user interactions without compromising performance, ensuring reliability and efficiency.

- Analytics and Insights: ChatBOTs provide analytics and insights into user interactions, engagement metrics, frequently asked questions, and areas for improvement, enabling organizations to optimize their ChatBOT strategy.

- Security and Compliance: ChatBOTs prioritize security and compliance by implementing measures such as encryption, access controls, and adherence to data protection regulations to safeguard user information and ensure privacy.

- Customization and Extensibility: ChatBOTs offer customization options and extensibility through APIs and development frameworks, allowing organizations to adapt them to specific use cases and integrate additional functionalities as needed.

By adopting DevSecOps practices and using Kubernetes, Docker, and Jenkins, we aim for a secure, scalable, and reliable rollout of the ChatBOT, with the goal of improving user engagement and satisfaction.

I extend my heartfelt appreciation to McKay Wrigley, the visionary behind this project. His invaluable contributions have made endeavors like the ChatBOT UI project achievable.

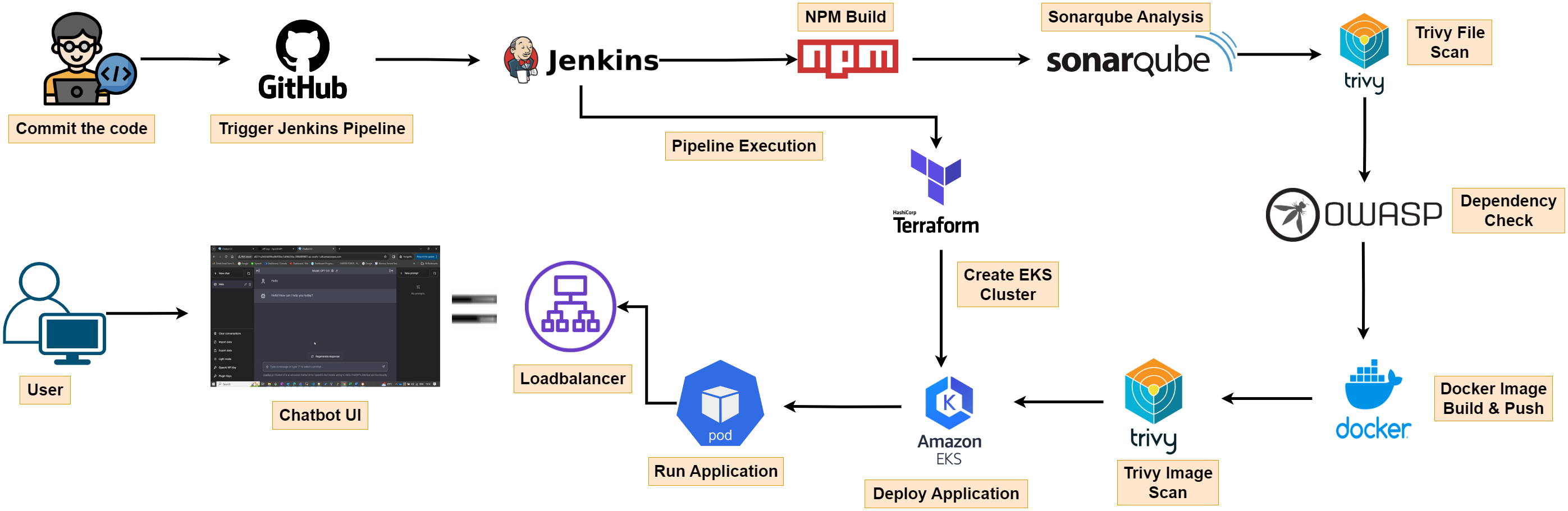

Pipeline Workflow

Let’s start building the pipelines for deploying the OpenAI Chatbot application. I will create two pipelines in Jenkins:

- One that creates the infrastructure on AWS using Terraform.

- One that deploys the Chatbot application on the EKS cluster node.

Prerequisite: a Jenkins server configured with Docker, Trivy, SonarQube, Terraform, AWS CLI, and kubectl.

Once you have set up a Jenkins server with all the necessary tools by following my previous blog, you can start building the DevSecOps pipeline for the OpenAI chatbot deployment.

The first thing to do is configure the Terraform remote backend.

- Create an S3 bucket with any name.

- Create a DynamoDB table named “Lock-Files” with “LockID” as the partition key.

- Update the S3 bucket name and DynamoDB table name in the backend.tf file, which is in the EKS-TF folder of the GitHub repo.
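The three steps above can be sketched from the command line roughly as follows. This is only an illustration under my own assumptions: the helper names `create_backend_resources` and `write_backend_tf` are hypothetical, the state key `EKS/terraform.tfstate` is a placeholder I picked, and the actual backend.tf in the repo may contain different settings. Only the `Lock-Files` table and the `LockID` key come from the steps above.

```shell
#!/bin/sh
# Hypothetical helper: create the S3 bucket and the DynamoDB lock table.
# Usage: create_backend_resources <bucket> <table> <region>
# Note: for us-east-1, drop the --create-bucket-configuration option.
create_backend_resources() {
    aws s3api create-bucket --bucket "$1" --region "$3" \
        --create-bucket-configuration "LocationConstraint=$3"
    aws dynamodb create-table --table-name "$2" --region "$3" \
        --attribute-definitions AttributeName=LockID,AttributeType=S \
        --key-schema AttributeName=LockID,KeyType=HASH \
        --billing-mode PAY_PER_REQUEST
}

# Hypothetical helper: write a backend.tf pointing at that bucket and table.
# Usage: write_backend_tf <dir> <bucket> <table> <region>
write_backend_tf() {
    mkdir -p "$1"
    cat > "$1/backend.tf" <<EOF
terraform {
  backend "s3" {
    bucket         = "$2"
    key            = "EKS/terraform.tfstate" # assumed state path, adjust as needed
    region         = "$4"
    dynamodb_table = "$3"
  }
}
EOF
}
```

For example, `write_backend_tf EKS-TF my-terraform-state-bucket Lock-Files ap-south-1` would overwrite EKS-TF/backend.tf with these values, where `my-terraform-state-bucket` stands in for whatever bucket name you chose.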

Create Jenkins Pipeline

Now that the prerequisites are complete, log in to the Jenkins server console. Click “New Item”, give it a name, select Pipeline, and click OK.

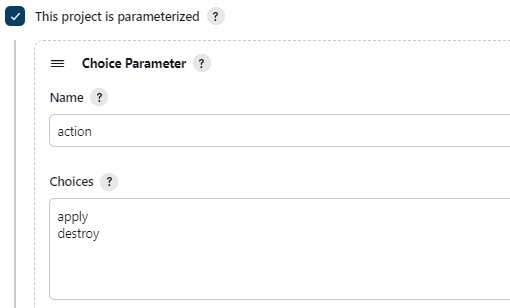

I want this pipeline to take a build parameter so that I can choose between apply and destroy at build time. Add a parameter named action, with the choices apply and destroy, to the job configuration.
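Because the value of the action parameter ends up directly in a `terraform` command, it can be worth rejecting anything other than the two intended values before running it. Here is a minimal sketch of such a guard; the function name `validate_action` is hypothetical and not part of the repo.

```shell
#!/bin/sh
# Hypothetical guard: accept only the two actions this pipeline is meant to run.
validate_action() {
    case "$1" in
        apply|destroy)
            return 0 ;;
        *)
            echo "unsupported action: '$1' (expected apply or destroy)" >&2
            return 1 ;;
    esac
}
```

Inside a shell step it could be used as `validate_action "$action" && terraform "$action" --auto-approve`, so that a mistyped value stops the build instead of reaching Terraform.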

Next, add the pipeline. Set Definition to Pipeline script.

    pipeline {
        agent any
        stages {
            stage('Checkout from Git') {
                steps {
                    git branch: 'main', url: 'https://github.com/sunsunny-hub/Chatbot-UIv2.git'
                }
            }
            stage('Terraform version') {
                steps {
                    sh 'terraform --version'
                }
            }
            stage('Terraform init') {
                steps {
                    dir('EKS-TF') {
                        sh 'terraform init'
                    }
                }
            }
            stage('Terraform validate') {
                steps {
                    dir('EKS-TF') {
                        sh 'terraform validate'
                    }
                }
            }
            stage('Terraform plan') {
                steps {
                    dir('EKS-TF') {
                        sh 'terraform plan'
                    }
                }
            }
            stage('Terraform apply/destroy') {
                steps {
                    dir('EKS-TF') {
                        sh 'terraform ${action} --auto-approve'
                    }
                }
            }
        }
    }

Click Apply and Save, then choose Build with Parameters and select apply as the action.

Stage view: provisioning can take up to 10 minutes.

Blue Ocean output:

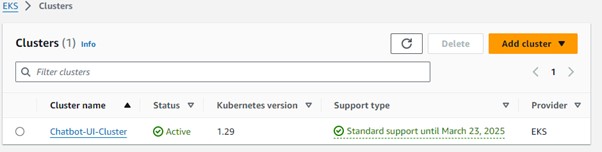

Check in Your Aws console whether it created EKS cluster or not.

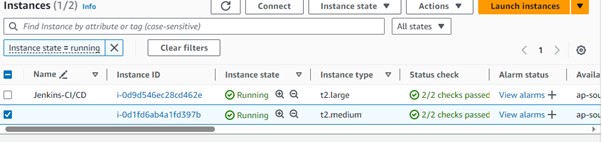

Ec2 instance is created for the Node group.
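If you prefer the terminal over the console, the same check can be sketched with the AWS CLI. The helper names below are hypothetical, and the cluster name and region are whatever your Terraform configuration used.

```shell
#!/bin/sh
# Hypothetical helper: print the cluster status (for example CREATING or ACTIVE).
# Usage: eks_cluster_status <cluster-name> <region>
eks_cluster_status() {
    aws eks describe-cluster --name "$1" --region "$2" \
        --query 'cluster.status' --output text
}

# Hypothetical helper: list the node groups attached to the cluster.
# Usage: eks_nodegroups <cluster-name> <region>
eks_nodegroups() {
    aws eks list-nodegroups --cluster-name "$1" --region "$2" \
        --query 'nodegroups' --output text
}
```

Once provisioning has finished, `eks_cluster_status <clustername> <region>` should report the cluster as active.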

Now let’s create a new pipeline for the chatbot clone. This pipeline first deploys the chatbot application in a Docker container and, once that succeeds, deploys the same Docker image on the EKS cluster provisioned above.

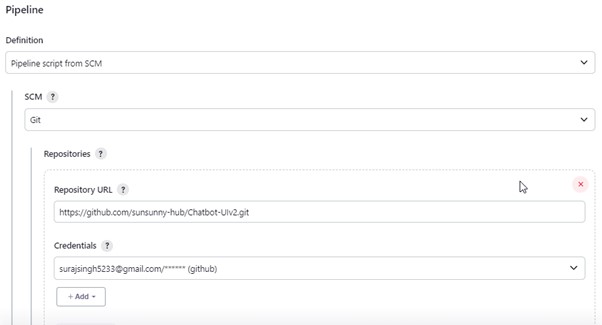

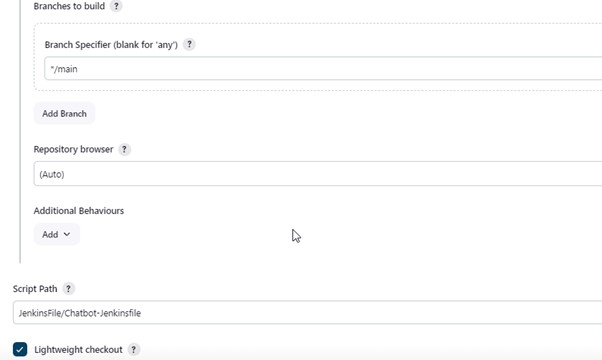

Under the Pipeline section, provide the following details:

- Definition: Pipeline script from SCM

- SCM: Git

- Repo URL: your GitHub repo

- Credentials: the GitHub credentials you created

- Branch: main

- Path: the Jenkinsfile path in your GitHub repo

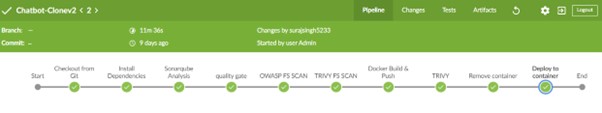

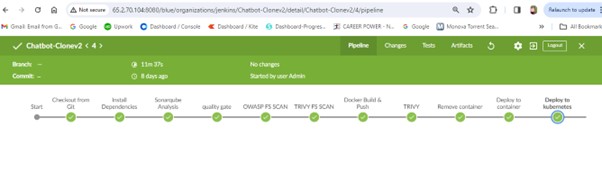

Click Apply and Save, then click Build. On successful execution, all stages show as green.

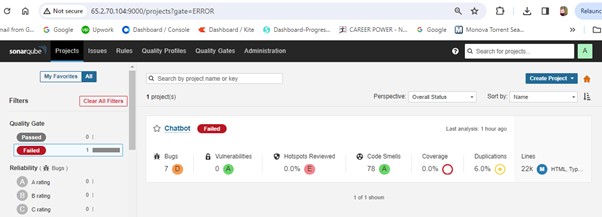

SonarQube console:

You can see that the report has been generated and the status shows as failed. You can ignore this for now in this POC, but in a real project all quality profiles and gates need to pass.

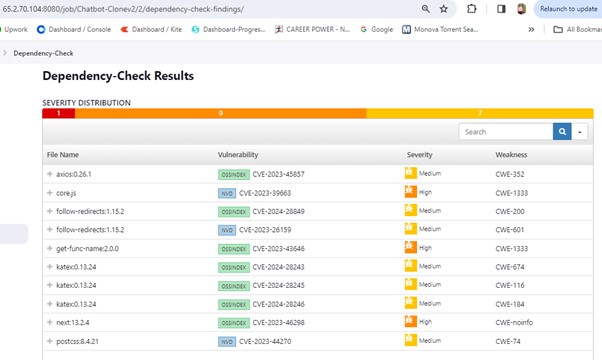

Dependency Check:

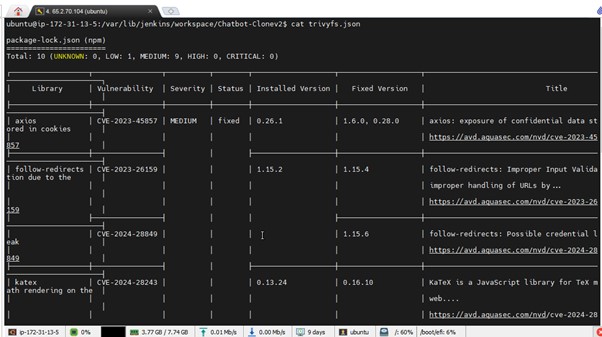

Trivy File scan:

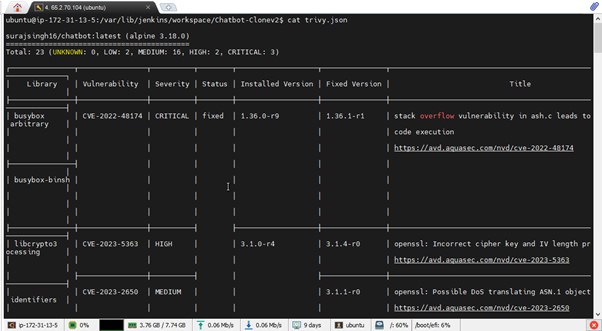

Trivy Image Scan:

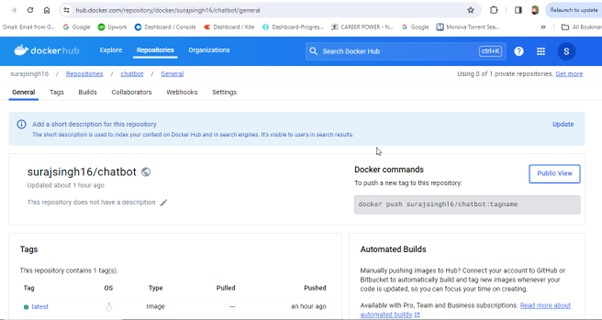

Docker Hub:

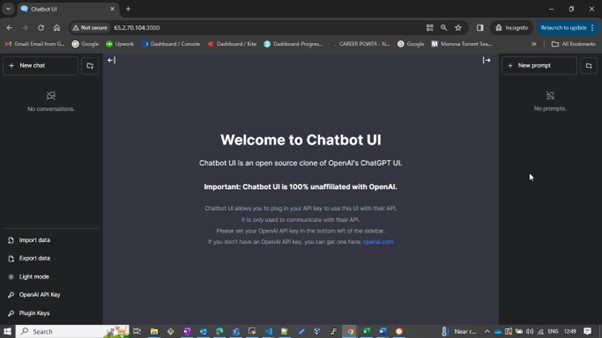

Now access the application on port 3000 of the Jenkins server EC2 instance’s public IP.

Note: Ensure that port 3000 is allowed in the security group of the Jenkins server.
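If the port is not open yet, one way to add the rule is with the AWS CLI, sketched below. The helper name `open_app_port` is hypothetical, and you need to supply the security group ID of your own Jenkins server and a CIDR range. Restricting the CIDR to your own IP is usually safer than opening the port to everyone.

```shell
#!/bin/sh
# Hypothetical helper: allow inbound TCP 3000 on a security group.
# Usage: open_app_port <security-group-id> <cidr>
open_app_port() {
    aws ec2 authorize-security-group-ingress --group-id "$1" \
        --protocol tcp --port 3000 --cidr "$2"
}
```

For example, `open_app_port <security-group-id> <your-ip>/32` would allow only your own address to reach the application.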

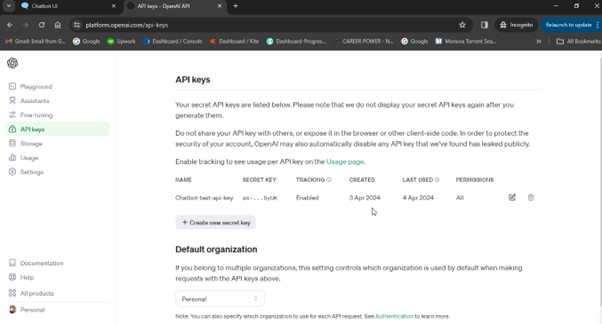

Click on openai.com (shown in blue).

This redirects you to the OpenAI login page, where you can enter your email and password. In the API Keys section, click “Create New Secret Key.”

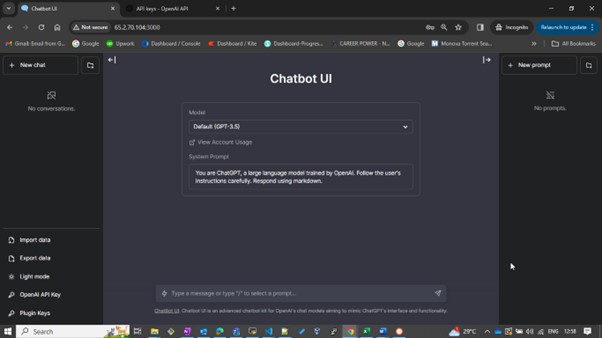

Give the key a name and copy it. Go back to the Chatbot UI we deployed; at the bottom of the page you will see the OpenAI API key field. Paste the generated key and click save (the check mark).

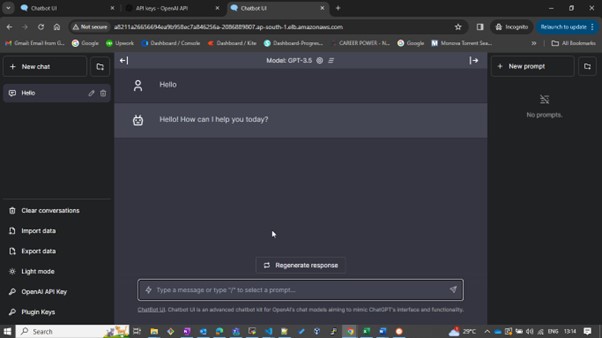

The UI looks like this:

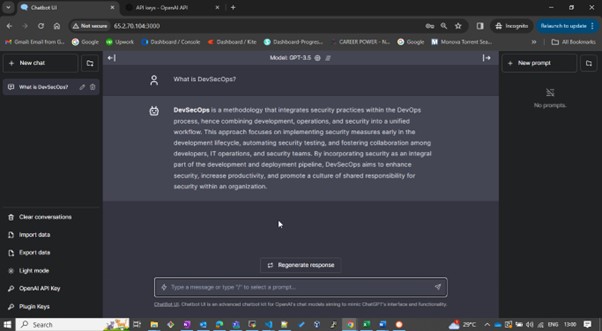

Now you can ask questions and test it.

Deployment on EKS

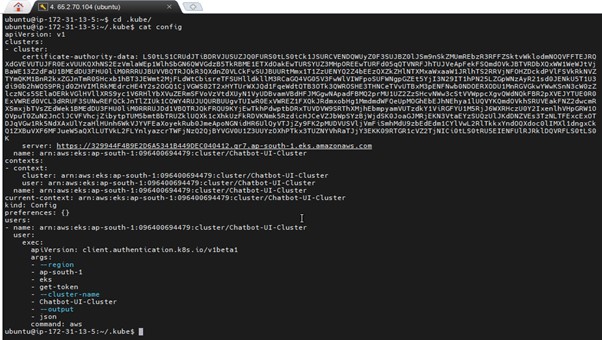

Now we need to add credentials for the EKS cluster, which will be used to deploy the application on the EKS cluster node. To do that, SSH into the Jenkins server and run this command to add the context:

    aws eks update-kubeconfig --name <clustername> --region <region>

This generates a Kubernetes configuration file. Navigate to the directory where the config file is located and copy its contents.

    cd ~/.kube
    cat config

Save the copied configuration as a text file at your preferred location on your local machine.

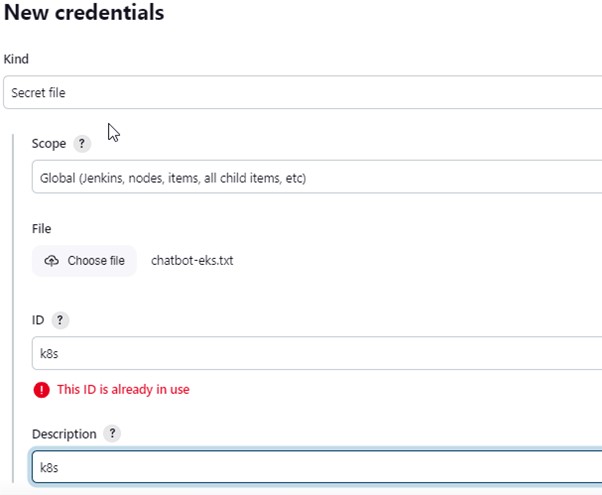

Next, in the Jenkins console, add this file to the Credentials section as a secret file with the ID “k8s”.
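As an alternative to copying the contents by hand, the kubeconfig can be written straight to a separate file and that file uploaded as the secret. The sketch below assumes the `--kubeconfig` option of `aws eks update-kubeconfig`; the helper name `export_kubeconfig` and the output path are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write the cluster's kubeconfig to a file of your choice
# instead of the default ~/.kube/config.
# Usage: export_kubeconfig <cluster-name> <region> <output-file>
export_kubeconfig() {
    aws eks update-kubeconfig --name "$1" --region "$2" --kubeconfig "$3"
}
```

For example, `export_kubeconfig <clustername> <region> ./k8s-config.txt` produces a file you can download and add to Jenkins as the secret file credential.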

Finally, add this deployment stage to your Jenkinsfile.

    stage('Deploy to kubernetes') {
        steps {
            withAWS(credentials: 'aws-key', region: 'ap-south-1') {
                script {
                    withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'k8s', namespace: '', restrictKubeConfigAccess: false, serverUrl: '') {
                        sh 'kubectl apply -f k8s/chatbot-ui.yaml'
                    }
                }
            }
        }
    }

Now rerun the Jenkins pipeline.

Upon success:

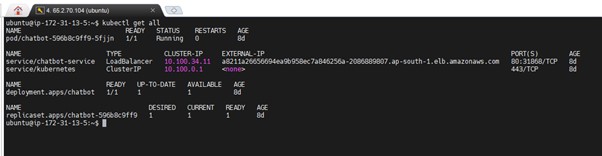

On the Jenkins server, run either of these commands:

    kubectl get all
    kubectl get svc

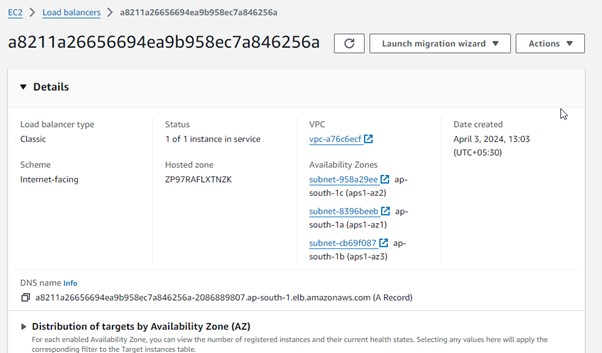

The deployment also creates a Classic Load Balancer, which you can see in the AWS console.

Copy its DNS name and paste it into your browser to use the application.
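The DNS name can also be read from the terminal rather than the console. This is a small sketch using kubectl’s jsonpath output; the helper name `service_hostname` is hypothetical, and the service name is an assumption, so use whatever name `kubectl get svc` shows for the chatbot service.

```shell
#!/bin/sh
# Hypothetical helper: print the load balancer hostname of a Service.
# Usage: service_hostname <service-name>
service_hostname() {
    kubectl get svc "$1" \
        -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

The hostname may be empty for the first minute or two while AWS is still provisioning the load balancer.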

Note: Follow the same process as before to get an OpenAI API key and add it in the Chatbot UI to get responses.

The complete Jenkinsfile:

    pipeline {
        agent any
        tools {
            jdk 'jdk17'
            nodejs 'node19'
        }
        environment {
            SCANNER_HOME = tool 'sonar-scanner'
        }
        stages {
            stage('Checkout from Git') {
                steps {
                    git branch: 'main', url: 'https://github.com/sunsunny-hub/Chatbot-UIv2.git'
                }
            }
            stage('Install Dependencies') {
                steps {
                    sh "npm install"
                }
            }
            stage("Sonarqube Analysis") {
                steps {
                    withSonarQubeEnv('sonar-server') {
                        sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Chatbot \
                        -Dsonar.projectKey=Chatbot '''
                    }
                }
            }
            stage("quality gate") {
                steps {
                    script {
                        // abortPipeline: false lets the build continue even if the gate fails
                        waitForQualityGate abortPipeline: false, credentialsId: 'Sonar-token'
                    }
                }
            }
            stage('OWASP FS SCAN') {
                steps {
                    dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'
                    dependencyCheckPublisher pattern: '**/dependency-check-report.xml'
                }
            }
            stage('TRIVY FS SCAN') {
                steps {
                    sh "trivy fs . > trivyfs.json"
                }
            }
            stage("Docker Build & Push") {
                steps {
                    script {
                        withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                            sh "docker build -t chatbot ."
                            sh "docker tag chatbot surajsingh16/chatbot:latest"
                            sh "docker push surajsingh16/chatbot:latest"
                        }
                    }
                }
            }
            stage("TRIVY") {
                steps {
                    sh "trivy image surajsingh16/chatbot:latest > trivy.json"
                }
            }
            stage("Remove container") {
                steps {
                    // '|| true' keeps the stage green when no old container exists
                    sh "docker stop chatbot || true"
                    sh "docker rm chatbot || true"
                }
            }
            stage('Deploy to container') {
                steps {
                    sh 'docker run -d --name chatbot -p 3000:3000 surajsingh16/chatbot:latest'
                }
            }
            stage('Deploy to kubernetes') {
                steps {
                    withAWS(credentials: 'aws-key', region: 'ap-south-1') {
                        script {
                            withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'k8s', namespace: '', restrictKubeConfigAccess: false, serverUrl: '') {
                                sh 'kubectl apply -f k8s/chatbot-ui.yaml'
                            }
                        }
                    }
                }
            }
        }
    }

I hope you have successfully deployed the OpenAI Chatbot UI application. You can delete the resources using the same Terraform pipeline by selecting “destroy” as the action and running the pipeline.