The Rise of AI in Banking

AI adoption in banking has accelerated dramatically. Predictive analytics, generative AI, and autonomous agentic systems are now embedded in core banking functions such as loan underwriting, compliance (including fraud detection and anti-money laundering, or AML), and customer engagement.

A recent white paper by Perficient affiliate Virtusa, "Agentic Architecture in Banking," documented that when agentic AI systems are designed with modularity, composability, Human-in-the-Loop (HITL) oversight, and governance, they enable a more responsive, data-driven, and human-aligned approach to financial services.

However, rolling out agentic and generative AI tools without proper controls poses significant risks. Without a unified strategy and governance structure, Systemically Important Financial Institutions (“SIFIs”) risk deploying AI in ways that are opaque, biased, or non-compliant. As AI becomes the engine of next-generation banking, institutions must move beyond experimentation and establish enterprise-wide controls.

Key Components of AI Data Governance

Modern AI data governance in banking encompasses several critical components:

1. Data Quality and Lineage: Banks must ensure that the data feeding AI models is accurate, complete, and traceable.

Perficient has covered this topic in more detail in a recent blog post.

2. Model Risk Management: AI models must be rigorously tested for fairness, accuracy, and robustness. Lending decision software has repeatedly been shown to produce biased lending decisions when the biases of the people who build it are carried into the model.

3. Third-Party Risk Oversight: Governance frameworks now include vendor assessments and continuous monitoring. Large financial institutions need not develop AI solutions in-house (the buy-versus-build decision), but they do need to monitor the risks of having key technology infrastructure owned or controlled by third parties.

4. Explainability and Accountability: Banks are investing in explainable AI (XAI) techniques. Auditors, regulators, and, where required, customers are not necessarily technical experts, so model decisions must be explainable in terms they can readily understand.

5. Privacy and Security Controls: Encryption, access controls, and anomaly detection are essential. Banks already apply these controls to legacy systems, so it is natural to extend these proven controls to new AI platforms, whether narrow AI, machine learning, or more advanced agentic and generative AI.
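To make the fairness testing described under Model Risk Management more concrete, the Python sketch below computes an adverse impact ratio: each applicant group's approval rate divided by the highest group's approval rate. Ratios below 0.8 are flagged for review, a threshold borrowed from the "four-fifths rule" in U.S. employment-selection guidance. The function names, group labels, sample data, and threshold are all illustrative assumptions; a production model risk program would combine several fairness metrics with statistical testing and legal review.

```python
from collections import defaultdict
from collections.abc import Iterable


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Return the share of approved applications for each applicant group."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def adverse_impact_ratios(
    decisions: Iterable[tuple[str, bool]], threshold: float = 0.8
) -> dict[str, tuple[float, bool]]:
    """Compare each group's approval rate with the most-favored group's rate.

    Returns {group: (ratio, flagged)}, where flagged is True when the ratio
    falls below the threshold. The 0.8 default mirrors the "four-fifths rule"
    and is an illustrative assumption, not a lending-specific legal standard.
    """
    rates = approval_rates(decisions)
    if not rates:
        raise ValueError("no decisions supplied")
    reference = max(rates.values())
    if reference == 0:
        raise ValueError("no group has any approvals; ratios are undefined")
    return {
        group: (rate / reference, rate / reference < threshold)
        for group, rate in rates.items()
    }


if __name__ == "__main__":
    # Hypothetical decisions: (applicant group, approved?)
    sample = (
        [("A", True)] * 60 + [("A", False)] * 40
        + [("B", True)] * 42 + [("B", False)] * 58
    )
    for group, (ratio, flagged) in adverse_impact_ratios(sample).items():
        print(group, round(ratio, 2), "REVIEW" if flagged else "ok")
    # Expected output:
    # A 1.0 ok
    # B 0.7 REVIEW
```

A flag here is a prompt for investigation rather than proof of discrimination: the next step would be to examine which inputs drive the gap and whether they are legitimate credit factors.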

Industry Collaboration and Standards

The FINOS Common Controls for AI Services initiative is a collaborative, cross-industry effort led by the Fintech Open Source Foundation (FINOS) to develop open-source, technology-neutral baseline controls for safe, compliant, and trustworthy AI adoption in financial services. By pooling resources from major banks, cloud providers, and technology vendors, the initiative produces standardized controls, peer-reviewed governance frameworks, and real-time validation mechanisms to help financial institutions meet complex regulatory requirements for AI.

Key FINOS participants include financial institutions such as BMO, Citibank, Morgan Stanley, and RBC, as well as technology and cloud providers, including Perficient technology partners Microsoft, Google Cloud, and Amazon Web Services (AWS).

At Perficient, we have seen leading financial institutions, including some of the largest SIFIs, establish formal governance structures to oversee AI initiatives. These structures typically include:

– Executive Steering Committees at the legal entity level

– Working Groups at the legal entity level, as well as at the divisional, regional, and product levels

– Real-Time Dashboards that allow customizable reporting for boards, executives, and auditors

This multi-tiered governance model promotes transparency, agility, and accountability across the organization.

Regulatory Landscape in 2025

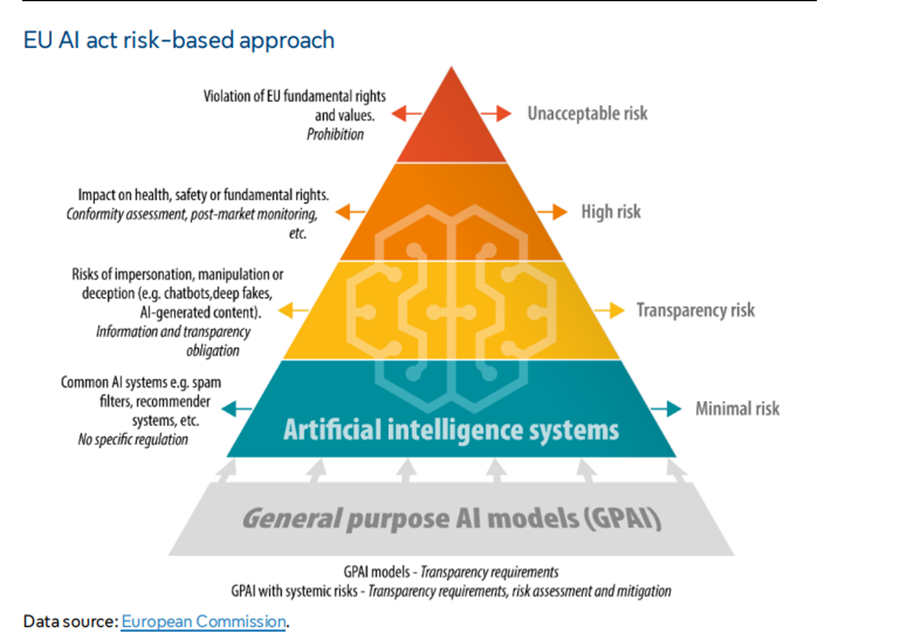

Regulators worldwide are intensifying their scrutiny of artificial intelligence in banking. The EU AI Act, the U.S. SEC’s cybersecurity disclosure rules, and the National Institute of Standards and Technology (“NIST”) AI Risk Management Framework are shaping how financial institutions govern AI systems.

Key regulatory expectations include:

– Risk-Based Classification

– Human Oversight

– Auditability

– Bias Mitigation
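Risk-based classification, human oversight, and auditability can be illustrated together with a small model-inventory sketch in Python. It assigns each AI system a risk tier, blocks deployment of high-risk systems until a named human reviewer is recorded, and writes every decision to an audit log that the class only ever appends to. The tier names, the use-case-to-tier mapping, and the GovernanceRegistry and AISystem classes are hypothetical; the tiers are only loosely modeled on the EU AI Act's risk-based approach, under which AI used to assess the creditworthiness of individuals is treated as high-risk. Actual classifications should come from legal and compliance review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


# Hypothetical mapping; real tiers would be assigned through legal/compliance review.
TIER_BY_USE_CASE = {
    "credit_scoring": RiskTier.HIGH,
    "loan_underwriting": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "internal_document_search": RiskTier.MINIMAL,
}


@dataclass
class AISystem:
    name: str
    use_case: str
    human_reviewer: Optional[str] = None  # named person accountable for oversight


@dataclass
class GovernanceRegistry:
    audit_log: list[dict] = field(default_factory=list)

    def classify(self, system: AISystem) -> RiskTier:
        # Risk-based classification: unknown use cases default to HIGH,
        # so they receive the strictest controls until explicitly reviewed.
        tier = TIER_BY_USE_CASE.get(system.use_case, RiskTier.HIGH)
        self._log("classified", system, tier=tier.value)
        return tier

    def approve_deployment(self, system: AISystem) -> bool:
        tier = self.classify(system)
        # Human oversight: a high-risk system needs a named reviewer before it ships.
        approved = tier is not RiskTier.HIGH or system.human_reviewer is not None
        event = "deployment_approved" if approved else "deployment_blocked"
        self._log(event, system, tier=tier.value, reviewer=system.human_reviewer)
        return approved

    def _log(self, event: str, system: AISystem, **details) -> None:
        # Auditability: entries are only ever appended, each with a UTC timestamp.
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "system": system.name,
            **details,
        })


if __name__ == "__main__":
    registry = GovernanceRegistry()
    model = AISystem(name="underwriting-model", use_case="loan_underwriting")
    print(registry.approve_deployment(model))  # False: high risk, no reviewer yet
    model.human_reviewer = "credit-risk-officer"
    print(registry.approve_deployment(model))  # True
    for entry in registry.audit_log:
        print(entry["event"], entry["tier"])
```

Defaulting unrecognized use cases to the high-risk tier is a deliberate choice in this sketch: a new system receives the strictest controls until someone classifies it explicitly.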

Perficient has documented and summarized several of these regulatory regimes, along with others, in the following posts:

AI Regulations for Financial Services: Federal Reserve

AI Regulations for Financial Services: European Union

The Road Ahead

As AI becomes integral to banking operations, data governance will be the linchpin of responsible innovation. Banks must evolve from reactive compliance to proactive risk management, embedding governance into every stage of the AI lifecycle.

The journey begins with data—clean, secure, and well-managed. From there, institutions must build scalable frameworks that support ethical AI development, align with regulatory mandates, and deliver tangible business value.

We encourage readers to explore the resources linked in this post and to contact Perficient, a global AI-first digital consultancy, to discuss how we can run a tailored assessment and pilot design that maps directly to your audit and governance priorities, ensuring that all new tools are rolled out within a well-designed data governance environment.
