Salesforce has been giving us a ‘No Code’ way to have Data Cloud notify Sales Cloud of changes through Data Actions and Flows. But did you know you can go the other direction too?

The Data Cloud Ingestion API allows us to set up a ‘No Code’ way of sending changes in Sales Cloud to Data Cloud.

Why would you want to do this with the Ingestion API?

- You are right that we could set up a ‘normal’ Salesforce CRM Data Stream to pull data from Sales Cloud into Data Cloud. This is also a ‘No Code’ way to integrate the two. But maybe you want to apply some complex filtering or logic before sending the data on to Data Cloud, and that is where a Flow can really help.

- CRM Data Streams only run on a schedule, every 10 minutes. With the Ingestion API we can send data to Data Cloud immediately; we just need to wait until the Ingestion API processes that specific request. The current wait time for the Ingestion API to run is about 3 minutes, though I have seen it run faster at times. It is not ‘real-time’, so do not use it for ‘real-time’ use cases. But it is faster than CRM Data Streams for smaller, incremental syncs that need finer control.

- You could also ingest data into Data Cloud easily through an Amazon S3 bucket. But again, here we have data in Sales Cloud that we want to get to Data Cloud with no code.

- We can build very cool integrations by leveraging the Ingestion API from outside of Salesforce, as in this video, but here we want a way to use Flows (No Code!) to send data to Data Cloud.

Use Case:

You have Sales Cloud, Data Cloud and Marketing Cloud Engagement. As a Marketing Campaign Manager you want to send an email through Marketing Cloud Engagement when a Lead fills out a certain form.

You only want to send the email if the Lead is from a certain state like ‘Minnesota’ and that Email address has ordered a certain product in the past. The historical product data lives in Data Cloud only. This email could come out a few minutes later and does not need to be real-time.

Solution A:

If you need to do this in near real-time, I would suggest not using the Ingestion API. Instead, we can query the Data Cloud product data in a Flow and then update your Lead or other record in a way that triggers a ‘Journey Builder Salesforce Data Event’ in Marketing Cloud Engagement.

Solution B:

But our requirements above do not call for real-time, so let’s solve this with the Ingestion API. Because the data is sent to Data Cloud, the Data Action gives us more power to reference other Data Cloud data, rather than relying on the Flow ‘Get Records’ element for every data need.

We can build an Ingestion API Data Stream that we can use in a Salesforce Flow. The Flow can check that the Lead is from a certain state like ‘Minnesota’, and the Ingestion API can be triggered from within the Flow. Once the data lands in the DMO in Data Cloud, we can use a ‘Data Action’ to listen for that data change, check whether that Lead has purchased a certain product before, and then use a ‘Data Action Target’ to push to a Journey in Marketing Cloud Engagement. All of that should happen within a few minutes.
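To make the division of labor in Solution B concrete, here is a minimal Python sketch of the two checks. It is purely illustrative: in the real solution the first check is a Flow condition and the second is a Data Action condition, both configured without code. The `Lead` class, the `order_history` lookup, and the product ID `PRODUCT-123` are hypothetical stand-ins, not Salesforce objects or APIs.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Lead:
    # Hypothetical, simplified Lead: only the two fields this use case needs.
    email: str
    state: str


TARGET_STATE = "Minnesota"      # Flow entry condition from the use case
TARGET_PRODUCT = "PRODUCT-123"  # hypothetical product identifier


def flow_should_send(lead: Lead) -> bool:
    """Stage 1 (Salesforce Flow): only send Leads from the target state."""
    return lead.state == TARGET_STATE


def data_action_should_fire(lead: Lead, order_history: dict[str, set[str]]) -> bool:
    """Stage 2 (Data Action in Data Cloud): continue only if this email has
    ordered the target product before. order_history stands in for the
    historical product data that lives only in Data Cloud."""
    return TARGET_PRODUCT in order_history.get(lead.email, set())


def route(lead: Lead, order_history: dict[str, set[str]]) -> str:
    """Describe where a Lead ends up in Solution B."""
    if not flow_should_send(lead):
        return "filtered out by the Flow"
    if not data_action_should_fire(lead, order_history):
        return "ingested, Data Action does not fire"
    return "pushed to the Journey"
```

Splitting the checks this way keeps the simple state filter in the Flow, so only qualifying Leads are sent through the Ingestion API, while the purchase-history check runs in Data Cloud, where that data already lives.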

Sales Cloud to Data Cloud with No Code! Let’s do this!

Here is the base Salesforce post sharing that this is possible through Flows, but let’s go deeper for you!

The following are those deeper steps for getting the data from Sales Cloud to Data Cloud. In my screenshots you will see data moving from a VIN (Vehicle Identification Number) custom object to a VIN DLO/DMO in Data Cloud, but the same process could be used for our ‘Lead’ use case above.

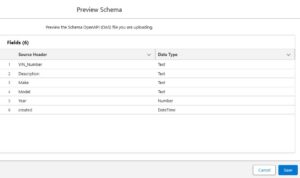

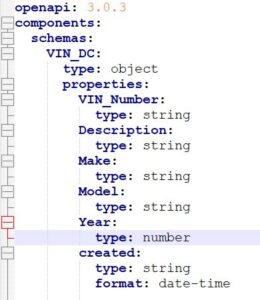

- Create a YAML file that we will use to define the fields in the Data Lake Object (DLO). I put an example YAML structure at the bottom of this post.

- Go to Setup, Data Cloud, External Integrations, Ingestion API. Click on ‘New’.

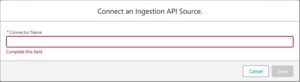

- Give your new Ingestion API Source a Name. Click on Save.

- In the Schema section click on the ‘Upload Files’ link to upload your YAML file.

- You will see a screen to preview your Schema. Click on Save.

- After that completes, you will see your new Schema Object.

- Note that at this point there is no Data Lake Object created yet.

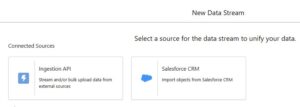

- Create a new ‘Ingestion API’ Data Stream. Go to the ‘Data Streams’ tab and click on ‘New’. Click on the ‘Ingestion API’ box and click on ‘Next’.

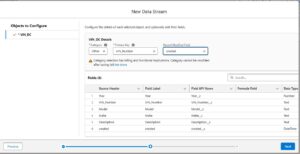

- Select the Ingestion API source you created earlier, then select the Schema object associated with it. Click Next.

- Configure your new Data Lake Object by setting the Category, Primary Key and Record Modified Field.

- Set any Filters you want with the ‘Set Filters’ link and click on ‘Deploy’ to create your new Data Stream and the associated Data Lake Object.

- If you also want to create a Data Model Object (DMO), you can do so and then use the ‘Review’ button in the ‘Data Mapping’ section of the Data Stream detail page to map your DLO fields to it. You do need a DMO to use the ‘Data Action’ feature in Data Cloud.

- Now we are ready to use this new Ingestion API Source in our Flow! Yeah!

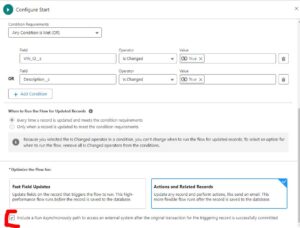

- Create a new ‘Start from Scratch’, ‘Record-Triggered Flow’ on the Standard or Custom object you want to use to send data to Data Cloud.

- Configure an Asynchronous path. We cannot call this ‘Ingestion API’ action from the ‘Run Immediately’ part of the Flow because the Action makes an API call to Data Cloud. This is similar to how an Apex Trigger has to use a ‘Future’ method to make a callout.

- Once you have configured your base Flow, add the ‘Action’ to the ‘Run Asynchronously’ part of the Flow. Select the ‘Send to Data Cloud’ Action and then map your fields to the Ingestion API inputs that are available for that ‘Ingestion API’ Data Stream you created.

- Save and Activate your Flow.

- To test, update your record in a way that will trigger your Flow to run.

- Go into Data Cloud and confirm that your data has arrived by using the ‘Data Explorer’ tab.

- The standard Salesforce Debug Logs will show the details of your Flow steps if you need to troubleshoot something.
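The ‘Send to Data Cloud’ action takes care of authentication and the API call for you, which is the whole point of the ‘No Code’ approach. If you are curious about what the Flow is doing conceptually, here is a minimal Python sketch that maps a VIN record to the example schema and builds (but does not send) a streaming Ingestion API request. It assumes the streaming endpoint path `/api/v1/ingest/sources/{source}/{object}` and a JSON body of the form `{"data": [...]}` from the Ingestion API documentation; the source name `VIN_Ingestion`, the Sales Cloud field API names, the tenant endpoint, and the token are all hypothetical placeholders.

```python
from __future__ import annotations

import json
import urllib.request

# Hypothetical names: substitute your own Ingestion API source and schema object.
SOURCE_API_NAME = "VIN_Ingestion"  # hypothetical source name
OBJECT_NAME = "VIN_DC"             # schema object from the example YAML

# Hypothetical Sales Cloud field API names -> Ingestion API fields,
# mirroring the field mapping you do in the Flow action.
FIELD_MAP = {
    "VIN_Number__c": "VIN_Number",
    "Description__c": "Description",
    "Make__c": "Make",
    "Model__c": "Model",
    "Year__c": "Year",
    "CreatedDate": "created",
}


def to_ingestion_row(record: dict) -> dict:
    """Keep only mapped fields and rename them to the schema's field names."""
    return {target: record[source] for source, target in FIELD_MAP.items() if source in record}


def build_request(tenant_endpoint: str, token: str, records: list[dict]) -> urllib.request.Request:
    """Build (not send) a POST to the assumed streaming ingestion endpoint."""
    body = json.dumps({"data": [to_ingestion_row(r) for r in records]}).encode("utf-8")
    url = f"https://{tenant_endpoint}/api/v1/ingest/sources/{SOURCE_API_NAME}/{OBJECT_NAME}"
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )
```

Fields that are not in the mapping (such as a record `Name`) are simply dropped, which mirrors the Flow action: only the inputs you map are sent.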

Congrats!

You have sent data from Sales Cloud to Data Cloud with ‘No Code’ using the Ingestion API!

Setting up the Data Action and connecting to Marketing Cloud Journey Builder is documented here to round out the use case.

Here is the base Ingestion API Documentation.

At Perficient we have experts in Sales Cloud, Data Cloud and Marketing Cloud Engagement. Please reach out and let’s work together to reach your business goals on these platforms and others.

Example YAML Structure:

```yaml
openapi: 3.0.3
components:
  schemas:
    VIN_DC:
      type: object
      properties:
        VIN_Number:
          type: string
        Description:
          type: string
        Make:
          type: string
        Model:
          type: string
        Year:
          type: number
        created:
          type: string
          format: date-time
```
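Before mapping fields in the Flow, it can help to check a few sample values against the schema types so that, for example, a text ‘Year’ does not end up in a `number` field. Here is a minimal local pre-check in Python, assuming the `VIN_DC` schema above transcribed by hand into a dict (parsing the YAML directly would need a third-party library). It is only a sketch; Data Cloud performs its own validation on ingestion.

```python
from __future__ import annotations

from datetime import datetime

# The VIN_DC schema from the example YAML, transcribed by hand.
VIN_DC_SCHEMA = {
    "VIN_Number": {"type": "string"},
    "Description": {"type": "string"},
    "Make": {"type": "string"},
    "Model": {"type": "string"},
    "Year": {"type": "number"},
    "created": {"type": "string", "format": "date-time"},
}


def validate_row(row: dict, schema: dict = VIN_DC_SCHEMA) -> list[str]:
    """Return a list of problems; an empty list means the row matches the schema."""
    problems = []
    for field, value in row.items():
        spec = schema.get(field)
        if spec is None:
            problems.append(f"{field}: not in schema")
        elif spec["type"] == "number":
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                problems.append(f"{field}: expected number")
        elif spec["type"] == "string":
            if not isinstance(value, str):
                problems.append(f"{field}: expected string")
            elif spec.get("format") == "date-time":
                try:
                    # Accept a trailing "Z" by rewriting it as an explicit UTC offset.
                    datetime.fromisoformat(value.replace("Z", "+00:00"))
                except ValueError:
                    problems.append(f"{field}: expected ISO 8601 date-time")
    return problems
```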