–Prabha Ranganathan and Ruby Lin contributed to this blog.

Understanding how AI/ML can be applied to innovative life sciences use cases is an area in which our industry lags. In this blog post, we discuss several use cases in which AI models can be applied to resolve critical issues effectively:

- NLQ (Natural Language Querying) – We deal with extensive clinical and operational data in life sciences. How can business users search this data without prior knowledge of its structure and without writing complex SQL-like queries? Can they type a question in plain language and have AI/ML models convert it into a query that can be executed?

- Intake – When a consumer reports concerns after taking a medication by typing into a text box, how can adverse events (AEs) be extracted from that text? Can this information be coded with MedDRA, and can an E2B XML file be generated and sent to the safety system as a potential AE?

- Virtual Assistants – How can virtual assistants intelligently understand a conversation and provide users with the information they are looking for? Can the conversation flow be made more human-like, and can the user experience make the customer journey exceptional?

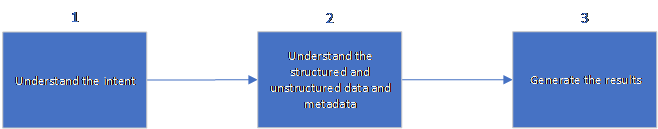

While evaluating solutions for these use cases, we observed a common design pattern emerge: each use case is solved by a chain of multiple models. We also determined that models trained for one use case can be reused for others, depending on the problem being addressed.

AI/ML Models as a Design Pattern

- Intent detection – This AI/ML model detects the user's intent. It addresses a classification problem and is trained on representative questions/utterances that users would ask or submit. In NLQ, the user requests either data (show me a list of enrolled subjects) or aggregate information (how many subjects failed screening for oncology trial ONC4765?). The intents differ for the virtual assistant: is the user reporting an adverse event or a product complaint, or requesting information about certain medications? For the intake use case, the intent is usually reporting a potential AE or product complaint.

- Understanding data/metadata – Also known as named entity recognition (NER), this AI/ML model finds specific patterns or concepts expressed in the user utterance and converts them to metadata. For NLQ, the metadata tells the next model how to generate the corresponding search queries. For the virtual assistant, the model needs a clear understanding of the data/documents it has access to so that it can give the end user a meaningful response. In the intake use case, the model should extract key information, such as an indication, from the text and code it using MedDRA. These NER models can be trained and reused across use cases. For more information on how to curate data, see our Leveraging AI for Knowledge Repositories and Content Curation in Life Sciences blog.

- Executing the intent – This final step executes the intent and returns results to the user. In the NLQ use case, it runs the generated back-end queries, potentially self/auto-correcting errors in them before returning the results. For the intake application, this might be a simple API call to the safety system, again with the possibility of self/auto-correcting when there are issues. In the virtual assistant use case, it responds to the user's inquiries, using the user's replies and sentiment analysis to self/auto-correct the conversation so that the end user gets the information they are looking for.
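The three stages above can be sketched as a minimal pipeline. This is an illustrative sketch under stated assumptions, not our production implementation: a nearest-centroid bag-of-words classifier stands in for a trained intent model, a regex/gazetteer extractor stands in for a trained NER model, and a dispatch table of handlers stands in for the execution step. The intent labels, the trial-ID pattern, the status vocabulary, and the names `IntentClassifier`, `extract_entities`, and `run_pipeline` are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class IntentClassifier:
    """Stage 1 (intent detection): nearest-centroid classifier over word counts."""

    def __init__(self, examples: dict[str, list[str]]):
        # One centroid per intent, summed from its representative utterances.
        self.centroids = {
            intent: sum((Counter(tokenize(u)) for u in utterances), Counter())
            for intent, utterances in examples.items()
        }

    def predict(self, text: str) -> str:
        vector = Counter(tokenize(text))
        return max(self.centroids, key=lambda intent: cosine(vector, self.centroids[intent]))


# Stage 2 (entity extraction): hypothetical trial-ID format and status vocabulary.
TRIAL_ID = re.compile(r"\b[A-Z]{3}\d{4}\b")
STATUS_TERMS = {"failed screening": "screen_failed", "enrolled": "enrolled"}


def extract_entities(text: str) -> dict[str, str]:
    entities = {}
    if match := TRIAL_ID.search(text):
        entities["trial_id"] = match.group()
    lowered = text.lower()
    for phrase, status in STATUS_TERMS.items():
        if phrase in lowered:
            entities["status"] = status
    return entities


def run_pipeline(text: str, classifier: IntentClassifier, handlers: dict) -> object:
    """Stage 3 (execution): dispatch the detected intent and its entities to a handler."""
    intent = classifier.predict(text)
    return handlers[intent](extract_entities(text))


classifier = IntentClassifier({
    "list_data": ["show me a list of enrolled subjects", "list all subjects in the trial", "show subjects"],
    "aggregate": ["how many subjects failed screening", "count the enrolled subjects",
                  "how many subjects are enrolled"],
})
# Hypothetical handlers; in NLQ these would generate and run the back-end query.
handlers = {
    "list_data": lambda entities: ("LIST", entities),
    "aggregate": lambda entities: ("COUNT", entities),
}

print(run_pipeline("how many subjects failed screening for oncology trial ONC4765?", classifier, handlers))
# ('COUNT', {'trial_id': 'ONC4765', 'status': 'screen_failed'})
```

Because each stage sits behind a small interface, any one of them (for example, the entity extractor) can be swapped for a trained model without touching the others, which is what makes reuse across use cases practical.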

Here is how this design pattern applies to the selected use cases:

NLQ:

- What is being queried (intent)

- Generating the queries

- Executing the queries to fetch the results
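For NLQ, the query-generation and execution steps might look like the following sketch, assuming the intent and entities have already been extracted. It uses an in-memory SQLite table with a hypothetical schema (`subjects`, `subject_status`) and shows one simple form of self-correction: when the database reports an unknown column, the query is rewritten using a hypothetical metadata alias table (`COLUMN_ALIASES`) and retried. A production system would more likely drive the correction from schema metadata or a model.

```python
import re
import sqlite3

# Hypothetical metadata: logical entity names mapped to physical column names.
COLUMN_ALIASES = {"status": "subject_status"}


def make_demo_db() -> sqlite3.Connection:
    """In-memory table with a hypothetical schema, for illustration only."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE subjects (subject_id TEXT, trial_id TEXT, subject_status TEXT)")
    conn.executemany(
        "INSERT INTO subjects VALUES (?, ?, ?)",
        [
            ("S1", "ONC4765", "screen_failed"),
            ("S2", "ONC4765", "enrolled"),
            ("S3", "ONC4765", "screen_failed"),
            ("S4", "CAR1020", "screen_failed"),
        ],
    )
    return conn


def generate_sql(intent: str, entities: dict[str, str]) -> tuple[str, list[str]]:
    """Build a parameterized query. Column names come from the extractor's fixed
    entity keys (never from raw user text); values are bound as parameters."""
    select = "COUNT(*)" if intent == "aggregate" else "subject_id"
    where = " AND ".join(f"{column} = ?" for column in entities)
    sql = f"SELECT {select} FROM subjects" + (f" WHERE {where}" if where else "")
    return sql, list(entities.values())


def execute_with_correction(conn: sqlite3.Connection, sql: str, params: list[str],
                            max_retries: int = 2) -> list[tuple]:
    """Run the query; on an unknown-column error, swap in the aliased column and retry."""
    prefix = "no such column: "
    for _ in range(max_retries + 1):
        try:
            return conn.execute(sql, params).fetchall()
        except sqlite3.OperationalError as err:
            message = str(err)
            bad_column = message[len(prefix):] if message.startswith(prefix) else None
            if bad_column not in COLUMN_ALIASES:
                raise  # not an error this sketch knows how to correct
            sql = re.sub(rf"\b{re.escape(bad_column)}\b", COLUMN_ALIASES[bad_column], sql)
    raise RuntimeError(f"query still failing after {max_retries} corrections: {sql}")


conn = make_demo_db()
sql, params = generate_sql("aggregate", {"trial_id": "ONC4765", "status": "screen_failed"})
print(execute_with_correction(conn, sql, params))  # [(2,)]
```

The first attempt fails because `status` is a logical name rather than a physical column; the correction step maps it to `subject_status`, and the retried query counts the two screen-failed subjects in ONC4765.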

Intake:

- Parsing the input

- Identifying potential AEs and supporting information, and coding the indication

- Sending the information, along with the source document, to the safety system
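The intake steps can be sketched as below: parse the free text, find and code event mentions, and package them with the source text. The coding dictionary, its terms, and its codes are placeholders, not MedDRA content (MedDRA is licensed terminology), and `build_case_xml` produces a deliberately simplified, hypothetical case payload rather than an ICH E2B(R3) message. The call that submits the payload to the safety system is omitted.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical verbatim-to-term dictionary. Terms and codes are placeholders,
# NOT MedDRA content; a real system would code against a licensed MedDRA release.
CODING_DICTIONARY = {
    "rash": ("Rash", "X0002"),
    "headache": ("Headache", "X0001"),
    "head ache": ("Headache", "X0001"),
    "felt sick": ("Nausea", "X0003"),
    "nausea": ("Nausea", "X0003"),
}


def code_events(text: str) -> list[tuple[str, str, str]]:
    """Return (verbatim, term, code) for each coded event, in order of appearance."""
    lowered = text.lower()
    hits = []
    for verbatim, (term, code) in CODING_DICTIONARY.items():
        for match in re.finditer(rf"\b{re.escape(verbatim)}\b", lowered):
            hits.append((match.start(), verbatim, term, code))
    events, seen_codes = [], set()
    for _, verbatim, term, code in sorted(hits):
        if code not in seen_codes:  # e.g. "headache" and "head ache" -> one event
            seen_codes.add(code)
            events.append((verbatim, term, code))
    return events


def build_case_xml(report_id: str, text: str, events: list[tuple[str, str, str]]) -> str:
    """Simplified, hypothetical case payload; NOT an ICH E2B(R3) message."""
    case = ET.Element("case", id=report_id)
    ET.SubElement(case, "sourceText").text = text  # keep the source document with the case
    reactions = ET.SubElement(case, "reactions")
    for verbatim, term, code in events:
        ET.SubElement(reactions, "reaction", verbatim=verbatim, term=term, code=code)
    return ET.tostring(case, encoding="unicode")


report = "I got a rash and felt sick after taking the tablets."
events = code_events(report)
print(events)  # [('rash', 'Rash', 'X0002'), ('felt sick', 'Nausea', 'X0003')]
print(build_case_xml("R-001", report, events))
```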

Virtual Assistants:

- Intent of the conversation: gathering information, or providing details such as AEs and product complaints (PCs)

- Detecting potential AEs, PCs, underlying conditions, and the information requested

- Fetching the information from curated data, escalating AEs/PCs per company policies
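For the virtual assistant, one policy worth sketching is that AE and PC mentions are escalated even when the user's primary intent is an information request. In this minimal sketch, the keyword sets, escalation queue names (`pharmacovigilance`, `product_quality`), and curated answers are hypothetical placeholders; a real assistant would use trained intent/NER models, a curated content store, and the escalation rules defined by company policy.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword sets standing in for trained intent/NER models.
AE_TERMS = {"rash", "headache", "nausea", "dizzy"}
PC_TERMS = {"broken", "damaged", "leaking", "discolored"}
# Placeholder curated answers, keyed by a trigger keyword.
CURATED_ANSWERS = {
    "store": "[curated storage guidance]",
    "dose": "[curated dosing guidance]",
}


@dataclass
class Turn:
    reply: str
    escalations: list[str] = field(default_factory=list)


def handle_turn(text: str) -> Turn:
    words = set(re.findall(r"[a-z]+", text.lower()))
    escalations = []
    # Hypothetical policy: escalate any AE/PC mention, whatever the primary intent.
    if words & AE_TERMS:
        escalations.append("pharmacovigilance")
    if words & PC_TERMS:
        escalations.append("product_quality")
    topic = next((keyword for keyword in CURATED_ANSWERS if keyword in words), None)
    if topic is not None:
        reply = CURATED_ANSWERS[topic]
    elif escalations:
        reply = "Thank you for letting us know. A specialist will follow up with you."
    else:
        reply = "Could you tell me more about what you are looking for?"
    return Turn(reply, escalations)


turn = handle_turn("I got a rash after the second dose. Should I keep taking it?")
print(turn)  # Turn(reply='[curated dosing guidance]', escalations=['pharmacovigilance'])
```

Here the user is asking a dosing question, yet the rash mention is still routed for escalation: the information request and the potential AE are handled independently.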

Benefits

- One NER model can be used across multiple solutions, e.g., AE detection in both Intake and Virtual Assistants, and entity extraction for NLQ across all use cases

- NLP for text-to-data (in NLQ) and data-to-text (in Virtual Assistant responses)
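The data-to-text direction can be as simple as template-based rendering of a query result. This minimal sketch assumes the aggregate count and entities produced by an NLQ pipeline; `STATUS_PHRASES` and `count_to_text` are hypothetical, and a production assistant might use a natural language generation model instead of templates.

```python
# Hypothetical (singular, plural) phrases for each status value.
STATUS_PHRASES = {
    "screen_failed": ("failed screening", "failed screening"),
    "enrolled": ("is enrolled", "are enrolled"),
}


def count_to_text(count: int, entities: dict[str, str]) -> str:
    """Template-based data-to-text: render an aggregate result as a sentence."""
    singular, plural = STATUS_PHRASES.get(entities.get("status", ""), ("matches", "match"))
    noun, phrase = ("subject", singular) if count == 1 else ("subjects", plural)
    scope = f" in trial {entities['trial_id']}" if "trial_id" in entities else ""
    return f"{count} {noun} {phrase}{scope}."


print(count_to_text(2, {"trial_id": "ONC4765", "status": "screen_failed"}))
# 2 subjects failed screening in trial ONC4765.
```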

Challenges

- Training and training data – Ensuring that we have the right data and SMEs available to train the various models for the use cases identified

- Understanding metadata and data (structured and unstructured)

- Applying life sciences knowledge to curate data and interpret the language structure of a question so that its intent is determined correctly: e.g., AE vs. preexisting condition, or information request vs. information provided ("I have a heart condition, can I take this medication?" vs. "I have this heart condition after taking the medication")

- AI validation – For validated solutions, we need a dedicated, acceptable process for validating non-deterministic AI solutions
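The AE-versus-preexisting-condition distinction and the validation challenge can be illustrated together. The sketch below uses a deliberately simple cue-based classifier (a stand-in for a trained model, with hypothetical regex cues) and a hypothetical `validate` harness that runs each labeled case several times and measures both majority-vote accuracy and run-to-run consistency, since a non-deterministic model can return different answers for the same input. The thresholds are assumptions, not a regulatory standard.

```python
import re
from collections import Counter

# Hypothetical cues: onset after a medication suggests an AE; "can I take ..."
# suggests an information request (possibly mentioning a preexisting condition).
ONSET_CUES = re.compile(r"\b(after|since) (taking|starting|using)\b")
QUESTION_CUES = re.compile(r"\b(can|should|may) i (take|use|start)\b")


def classify_statement(text: str) -> str:
    lowered = text.lower()
    if ONSET_CUES.search(lowered):
        return "adverse_event"
    if QUESTION_CUES.search(lowered):
        return "information_request"
    return "unknown"


def validate(model, labeled_cases, runs=5, min_accuracy=0.95, min_consistency=1.0) -> dict:
    """Run every case several times; score majority-vote accuracy and run-to-run consistency."""
    correct = consistent = 0
    for text, expected in labeled_cases:
        outputs = [model(text) for _ in range(runs)]
        consistent += len(set(outputs)) == 1
        correct += Counter(outputs).most_common(1)[0][0] == expected
    n = len(labeled_cases)
    report = {"accuracy": correct / n, "consistency": consistent / n}
    report["passed"] = report["accuracy"] >= min_accuracy and report["consistency"] >= min_consistency
    return report


CASES = [
    ("I have a heart condition, can I take this medication?", "information_request"),
    ("I have this heart condition after taking the medication", "adverse_event"),
    ("I have had headaches since starting the new tablets", "adverse_event"),
    ("Should I take it with food?", "information_request"),
]
print(validate(classify_statement, CASES))
# {'accuracy': 1.0, 'consistency': 1.0, 'passed': True}
```

Because the harness only needs a callable, the same labeled cases and acceptance thresholds can be rerun unchanged whenever the underlying model is retrained or replaced.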

In conclusion, understanding the use cases, applying this design pattern to them, and reusing trained models across them will determine the success of rolling out AI microservices and of defining an AI strategy that addresses your business requirements.