Migrating from one source code management system to another is a non-trivial exercise. In most cases the team wants to preserve code history, branch structure, team permissions, and integrations. This blog post examines one such migration, from Bitbucket to GitHub, for a large health maintenance organization.

Due to growth and acquisitions over time, the organization found that development teams were using multiple source control systems. This led to increased expense from license costs and duplicated support efforts, including platform management, automated Continuous Integration / Continuous Delivery (CI/CD) integration, and end-user support. To resolve these issues, GitHub was chosen as the single platform for source control. The GitHub Enterprise product offers multiple benefits, including tool integrations (e.g., web-hooks, SSH key-based access, workflow plugins), an intuitive UI for team and project management, and notifications on specific events (e.g., pull-request, merge, branch creation). Additionally, GitHub offers the option of cloud or on-premises deployment of its source code management (SCM) platform.

The migration of several thousand repositories presented a significant challenge. Beyond the logistics of coordination, the DevOps team also had to finish the work within a tight timeframe, before the license renewal date. To avoid the additional expense of renewing, teams were required to migrate not just the code base but all of the associated metadata (e.g., branch history, user permissions, tool integrations). In the approach detailed below we extensively leveraged CloudBees Jenkins™ workflows, Red Hat Ansible™ playbooks, and Python™ scripting to perform much of the required setup and migration work.

Approach

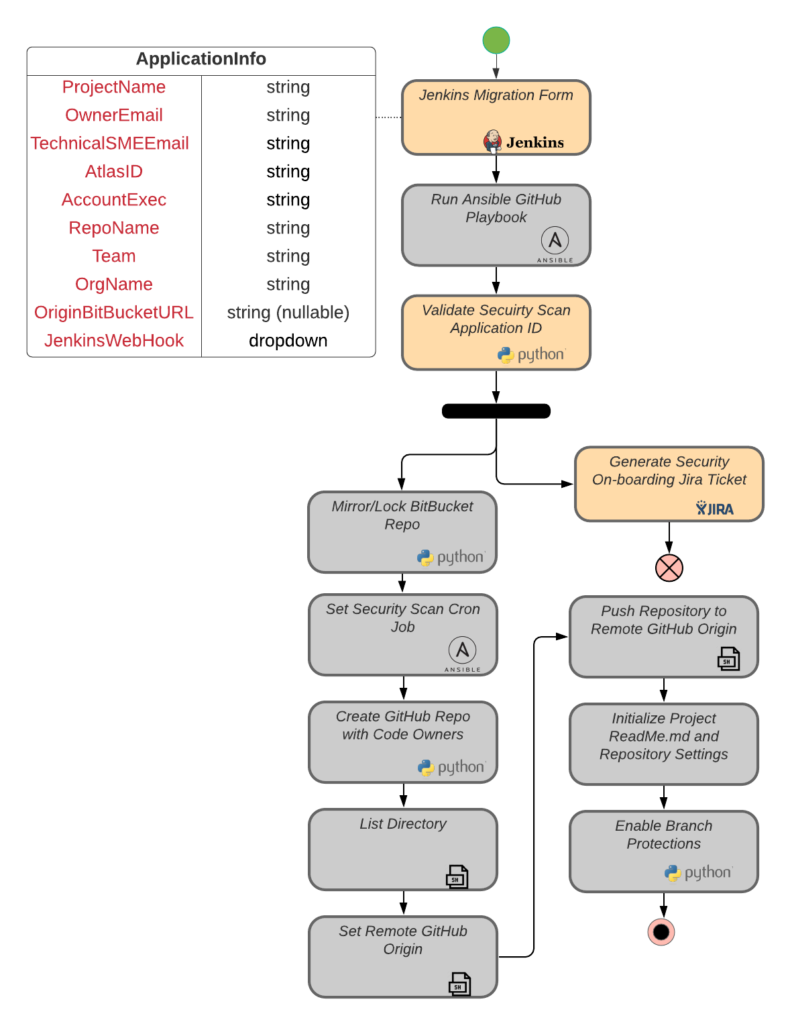

As shown in Figure 1, the migration effort involved creating a Jenkins migration workflow driven by user-provided information that defined the Bitbucket source project, the GitHub target project, team ownership, repository details, and any additional integration requirements. This migration information was stored in a new file added at the root of the source tree (‘app-info.yml’). This approach facilitates future automation integration and provides a simple way to track application metadata within the code base itself.
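As an illustration, the kind of information captured in ‘app-info.yml’ could be modeled and validated as in the minimal sketch below. The post does not show the actual schema, so every field name here is hypothetical. The function takes an already-parsed mapping (for example, the output of a YAML parser) so that the sketch needs only the standard library.

```python
from dataclasses import dataclass, field

# All field names are hypothetical; the real app-info.yml schema is not shown in this post.
REQUIRED_KEYS = ("bitbucket_project", "github_org", "repository", "team")


@dataclass
class MigrationInfo:
    bitbucket_project: str   # source project in Bitbucket
    github_org: str          # target organization in GitHub
    repository: str          # repository name to migrate
    team: str                # owning team
    team_admins: list = field(default_factory=list)
    integrations: list = field(default_factory=list)


def load_migration_info(data: dict) -> MigrationInfo:
    """Validate a parsed app-info mapping and return a typed record."""
    missing = [key for key in REQUIRED_KEYS if not data.get(key)]
    if missing:
        raise ValueError("app-info is missing required keys: " + ", ".join(missing))
    return MigrationInfo(
        bitbucket_project=data["bitbucket_project"],
        github_org=data["github_org"],
        repository=data["repository"],
        team=data["team"],
        team_admins=list(data.get("team_admins", [])),
        integrations=list(data.get("integrations", [])),
    )
```

Failing fast on missing keys is one way to keep an incomplete request from starting a migration job that would only fail partway through.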

Figure 1. GitHub migration automation workflow

There were multiple considerations to address in the GitHub migration automation, including ensuring the target GitHub project had the proper visibility permissions (e.g., public/private), using consistent project naming standards, integrating with pre-existing or yet-to-be-established security scanning automation, applying organization-defined branch protection rules, and maintaining all necessary CI/CD pipeline automation.

Code Transfer

Cloning the code into the new repository was technically the most straightforward migration operation, but it still required significant manual modifications to several key automation files maintained at the root of the project folder structure. For example, the pre-existing Jenkins configuration (‘Jenkinsfile’) was updated post-migration to point to the correct shared library project; the shared libraries had previously been migrated from Bitbucket to GitHub. Unfortunately, because each development team used a specific library version, this step was a manual rather than automated onboarding activity.
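The post does not show the exact commands used for the transfer. One common way to copy a repository with all of its branches, tags, and history is a mirror clone followed by a mirror push, sketched below. The use of `--mirror` is an assumption on our part, and the function names are hypothetical.

```python
import subprocess


def mirror_commands(source_url: str, target_url: str, workdir: str) -> list:
    """Return the git commands for a history-preserving repository copy."""
    return [
        # Bare clone that includes every ref (branches, tags) from the source.
        ["git", "clone", "--mirror", source_url, workdir],
        # Push every ref from the local mirror to the new remote.
        ["git", "-C", workdir, "push", "--mirror", target_url],
    ]


def migrate_repository(source_url: str, target_url: str, workdir: str) -> None:
    """Run the copy; check=True raises CalledProcessError if a step fails."""
    for command in mirror_commands(source_url, target_url, workdir):
        subprocess.run(command, check=True)
```

Keeping command construction separate from execution makes it possible to review or log the planned commands before anything is pushed.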

Branch Protection Rules

The organization had established a set of consistent branch management rules for source control trees. For example, the policy requires that a pull-request be approved by at least one reviewer prior to code merges for the ‘master’, ‘release’, and ‘develop’ branches within the repository. These rules were encoded within the migration Python scripts and pulled from the Ansible playbook during GitHub project creation.
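As a rough illustration of how a rule like "at least one approving review" might be encoded in a script, the sketch below builds a request for GitHub's branch protection REST endpoint. The migration scripts themselves are not shown in this post, so the function names, the `api_base` parameter, and the choice to enforce the rule for administrators are all assumptions.

```python
import json
import urllib.request

# Branch names taken from the policy described above.
PROTECTED_BRANCHES = ("master", "release", "develop")


def protection_payload(required_approvals: int = 1) -> dict:
    """Build the request body; the endpoint expects all four top-level keys."""
    return {
        "required_status_checks": None,  # no status checks configured here
        "enforce_admins": True,          # assumption: the rule also binds admins
        "required_pull_request_reviews": {
            "required_approving_review_count": required_approvals,
        },
        "restrictions": None,            # no push restrictions configured here
    }


def protection_request(api_base, owner, repo, branch, token):
    """Prepare (but do not send) the PUT request for one branch."""
    url = f"{api_base}/repos/{owner}/{repo}/branches/{branch}/protection"
    return urllib.request.Request(
        url,
        data=json.dumps(protection_payload()).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )
```

A caller could loop over `PROTECTED_BRANCHES` and pass each prepared request to `urllib.request.urlopen`. Each branch must already exist in the target repository for the call to succeed.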

Automated CI/CD Pipeline Modifications

To support the existing CI/CD pipelines, the migrated code bases required pipeline configuration file updates. This included configuration links for automated Jira issue updates, proper Jenkins master/agent execution (i.e., web-hooks), security automation scans, and integration with library package control (e.g., JFrog Artifactory™). These modifications were captured in migration Python scripts and pulled from the Ansible playbook during GitHub code migration.

Access Key and Service Account Management

Automated CI/CD processes often require the use of service accounts and shared-secret access keys to function properly. During the GitHub migration it was critically important to protect these access keys from improper exposure in logs, notifications, or any other insecure reporting. The GitHub migration team used the Ansible vault feature, together with Groovy scripts that updated the built-in Jenkins credential management, to ensure that project-specific secrets, accounts, and keys were securely transferred to the newly created GitHub-linked jobs during the migration process.
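The team's own safeguards relied on Ansible vault and Jenkins credential management, which are not reproduced here. As a complementary illustration of the log-exposure concern on the Python scripting side, the sketch below shows a logging filter that masks known secret values before they are written out. This is a swapped-in technique for illustration only, and the class name is hypothetical.

```python
import logging


class SecretRedactingFilter(logging.Filter):
    """Replace known secret values in log messages with a fixed mask."""

    def __init__(self, secrets):
        super().__init__()
        # Ignore empty values so that "" is never used as a search pattern.
        self._secrets = [secret for secret in secrets if secret]

    def filter(self, record):
        # Render the final message first so secrets passed as args are caught too.
        message = record.getMessage()
        for secret in self._secrets:
            message = message.replace(secret, "****")
        record.msg = message
        record.args = ()
        return True  # keep the record, now redacted
```

Attaching the filter to a handler (rather than to a single logger) means it applies to every record that handler emits, including records that propagate from child loggers.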

GitHub Pre-Migration Setup

The GitHub Jenkins integration was built as a separate job to create the GitHub ‘team’. This included configuring the team with a proper name, administration users, and a matching Jenkins build folder. For each repository we also set a Jenkins “web-hook” to ensure that the proper Jenkins master is used to run each CI/CD pipeline.
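A per-repository web-hook of this kind could be registered through GitHub's repository webhooks REST endpoint, as in the hedged sketch below. It assumes the Jenkins GitHub plugin, which listens on the `/github-webhook/` path. The function names, the `api_base` parameter, and the chosen event list are assumptions rather than details taken from the actual migration job.

```python
import json
import urllib.request


def webhook_payload(jenkins_url: str) -> dict:
    """Build the hook definition pointing at a specific Jenkins master."""
    return {
        "name": "web",  # GitHub requires the literal name "web" for webhooks
        "active": True,
        "events": ["push", "pull_request"],  # assumption: events the pipelines need
        "config": {
            # Path served by the Jenkins GitHub plugin (assumed to be installed).
            "url": jenkins_url.rstrip("/") + "/github-webhook/",
            "content_type": "json",
        },
    }


def webhook_request(api_base, owner, repo, jenkins_url, token):
    """Prepare (but do not send) the POST request that creates the hook."""
    return urllib.request.Request(
        f"{api_base}/repos/{owner}/{repo}/hooks",
        data=json.dumps(webhook_payload(jenkins_url)).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )
```

Passing the Jenkins URL as a parameter reflects the requirement above: each repository's hook can point at whichever Jenkins master owns its pipeline.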

Automated Testing Integration

As a part of code quality control, SonarQube code scanning is tied to a defined repository and required as part of the Jenkins CI/CD workflow. The scan results are reported to a separate GitHub tab which needed to be matched up with the project team. In this way, the newly created GitHub project could directly report to developers the results of the automated code quality analysis.

Results

The DevOps enablement team was required to meet a very tight deadline of four months to complete the full migration from Bitbucket to GitHub and avoid the expense of license renewals. Given the scope of the challenge, the only viable solution was to automate as much of the migration as possible. Where manual intervention was required, the DevOps team clearly communicated a checklist of activities to the affected teams for both pre- and post-migration changes. Using the combined tool set of scripted Jenkins jobs, Ansible playbooks, and Python scripting, the DevOps team successfully completed all migrations and modifications to all code bases several weeks prior to the deadline. The organization’s information technology team has reported that all teams are active on GitHub and the Bitbucket repositories have been archived.