Hosting an enterprise application is always challenging. It needs many complex components working together to achieve agility, reliability, and security. In the past couple of years, container technologies have evolved rapidly to do the heavy lifting for enterprise applications in the cloud. Containers have played a significant role in delivering robust distributed microservice architectures and continue to integrate into the DevOps space. At large scale, however, container management takes a lot of effort.

Kubernetes is built to manage that complexity for you. Cloud providers also offer managed Kubernetes services, which take maintenance tasks and other operational processes off the Kubernetes workload. The combination of a cloud platform and Kubernetes lets you focus on strategy, deployment, and integration for enterprise applications.

Running Kubernetes for an on-premises Magento 2 Enterprise application is still challenging because of the application's long downtime during deployment and its I/O-intensive tasks. This guide points out some of the key elements of infrastructure and deployment that will help you deploy Magento 2 faster. Other enterprise applications can also benefit from this guide when building their infrastructure.

Strategy

Strategy is a crucial part of creating an architecture and building a solution around an enterprise application. The top considerations are the requirements, the selection of toolsets, and flexible integration capability. The solution you are building should be flexible enough to integrate easily with future releases. A dynamic approach helps provide a scalable infrastructure that can scale up or down on demand. At the same time, the solution must not impact your application, deployment, or security. Kubernetes is a great fit for managing such intricacy.

A sound strategy and a strong continuous integration/continuous delivery (CI/CD) approach can help reduce Magento 2 deployment time. In CI/CD, a strong deployment pipeline can create a Magento 2 Docker image, install the Nginx and PHP packages, and set configurations using a Dockerfile. The pipeline can also handle composer install, static content deployment, patching, and module installation ahead of time. Keep the Docker image as small as possible, for example by compressing the file system contents when you build the image. In the deploy stage, perform the Kubernetes deployment using Helm or kubectl and set the Magento media folder as a mount point backed by Elastic File System (EFS). A CDN or Varnish can serve static content faster.
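The build and deploy stages described above can be sketched as an ordered list of commands. This is only an illustration, not the pipeline used in this project: the image name, release name, chart path, namespace, and the `image.repository`/`image.tag` chart values are hypothetical, and it assumes composer and the Magento CLI run before the image build. The functions only assemble the commands; a CI tool would execute them.

```python
import shlex


def build_stage_commands(image, tag):
    """Return the commands a CI build stage might run, in order."""
    ref = f"{image}:{tag}"
    return [
        # Install PHP dependencies without development packages.
        ["composer", "install", "--no-dev", "--prefer-dist"],
        # Compile dependency injection and generate static content ahead of time,
        # so none of this work happens during the rollout itself.
        ["bin/magento", "setup:di:compile"],
        ["bin/magento", "setup:static-content:deploy", "-f"],
        # Bake everything into one image and publish it.
        ["docker", "build", "-t", ref, "."],
        ["docker", "push", ref],
    ]


def deploy_stage_commands(release, chart, image, tag, namespace):
    """Return the Helm command for the deploy stage.

    The --set keys are assumptions; they depend on how the chart is written.
    """
    return [[
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--set", f"image.repository={image}",
        "--set", f"image.tag={tag}",
    ]]


if __name__ == "__main__":
    # All names below are placeholders.
    image, tag = "registry.example.com/magento2", "build-42"
    commands = build_stage_commands(image, tag) + deploy_stage_commands(
        "magento", "./chart", image, tag, "staging"
    )
    for cmd in commands:
        print(shlex.join(cmd))
```

Because the image tag changes on every build, the Helm upgrade rolls out new pods while the media folder stays on the shared EFS mount.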

Platform Decision

Initially, I had the same basic question: What platform should I select? There are quite a few good options available, such as dedicated and cloud server hosting solutions (e.g., Amazon Web Services, Google Cloud Platform, and Rackspace), Magento Cloud hosting, self-hosted Docker solutions, and Kubernetes on-prem. I decided to go with a cloud-offered Kubernetes service to offload the Kubernetes management workload. I wanted a scripted, automation-friendly platform that would integrate smoothly with the rest of the toolset. The top cloud Kubernetes services are Google Cloud Platform (GCP) with Google Kubernetes Engine (GKE), Azure with Azure Kubernetes Service (AKS), and Amazon Web Services with Amazon Elastic Kubernetes Service (EKS). These options are all competitive and could be a good choice for your solution. I personally like GKE for its out-of-the-box features, but I ended up choosing AWS EKS due to other requirements (which I will cover later).

Architecture and Implementation

With the platform chosen, it’s now time to focus on architecture. I wanted to keep the solution as private as possible for security reasons, but at the same time create a fully scalable infrastructure that can add resources on demand. These are the few simple but effective tools I ended up choosing.

Tools used to build the Magento EE Kubernetes solution:

- AWS – Cloud Infrastructure

- AWS CloudFormation

- EKS – Kubernetes

- Nodes Autoscaling

- Cluster Autoscaler

- Elastic File System (EFS)

- Istio

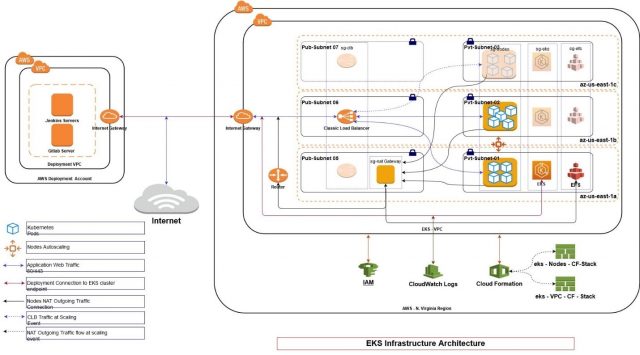

The infrastructure was prepared to host more than 100 different applications and 2,600 Docker containers. The applications should be distributed and isolated. Here is the infrastructure architecture of the Kubernetes solution:

Fig. Infrastructure Architecture for Kubernetes Solution

GKE vs. EKS

In the above architectural map, the EKS cluster is placed in a private segment of the Amazon Virtual Private Cloud (VPC), where the nodes reside. To achieve high availability and durability, the nodes are provisioned in multiple availability zones. Node scalability is managed by AWS Auto Scaling and tied to the Cluster Autoscaler. The Cluster Autoscaler keeps track of the healthy nodes and probes for resource requirements. Readiness and liveness probes are also configured for the pods deployed in the cluster.
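To make the scale-up idea concrete, here is a deliberately simplified sketch of how the number of extra nodes could be estimated from pending pods. It is not the Cluster Autoscaler's actual algorithm, which simulates the scheduler and considers far more than CPU. The function names, the CPU-only model, and the first-fit packing are assumptions made for illustration.

```python
def nodes_needed(pending_cpu_millis, node_cpu_millis):
    """Estimate how many new, empty nodes are needed to fit pending pods.

    Uses first-fit-decreasing packing on CPU requests only (millicores).
    """
    free = []  # remaining CPU on each new node opened so far
    for request in sorted(pending_cpu_millis, reverse=True):
        if request > node_cpu_millis:
            raise ValueError(f"pod requesting {request}m cannot fit on any node")
        for i, capacity in enumerate(free):
            if capacity >= request:
                free[i] -= request  # place the pod on an already opened node
                break
        else:
            free.append(node_cpu_millis - request)  # open a new node
    return len(free)


def desired_group_size(current_size, pending_cpu_millis, node_cpu_millis, max_size):
    """Desired size of the node group, capped at the group's maximum."""
    extra = nodes_needed(pending_cpu_millis, node_cpu_millis)
    return min(max_size, current_size + extra)


if __name__ == "__main__":
    pending = [1500, 1000, 500, 500]  # hypothetical pending pod requests
    print(nodes_needed(pending, 2000))
    print(desired_group_size(3, pending, 2000, max_size=4))
```

The cap in `desired_group_size` mirrors the role of the Auto Scaling group's maximum size: the autoscaler can request more capacity, but the group limit has the final say.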

One of the significant reasons to go with EKS is the ability to mount a volume on multiple nodes, which the EFS service offers. GCP Cloud Filestore (beta) offers the same feature but requires a 1 TB minimum fileshare size, whereas EFS is priced per GB per month. Yes, there will be a latency penalty compared to EBS volumes, but that is something you can manage with your application design and specified mount points. As an alternative, you can configure a network file system (NFS) in GCP or AWS, but managing the NFS service takes extra effort. In this architecture, EFS is provisioned in the private segment as intended. The public segment of the VPC has load balancing, which receives external traffic on ports 80 and 443. Istio then manages the rest of the routing and communication between the EKS cluster pods.
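The multi-node mount can be sketched as a PersistentVolume and PersistentVolumeClaim with the `ReadWriteMany` access mode. The source does not say how EFS was attached, so this example assumes the AWS EFS CSI driver (`efs.csi.aws.com`) with static provisioning. The file system ID, resource names, storage class name, and the container path for the media folder are all placeholders.

```python
import json


def efs_volume_manifests(file_system_id, name="magento-media", size="5Gi"):
    """Build a PersistentVolume and matching claim backed by an EFS file system."""
    storage_class = "efs-sc"  # hypothetical storage class name
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": f"{name}-pv"},
        "spec": {
            # Kubernetes requires a capacity value; EFS itself grows elastically.
            "capacity": {"storage": size},
            "volumeMode": "Filesystem",
            # ReadWriteMany lets pods on different nodes mount the same volume.
            "accessModes": ["ReadWriteMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": storage_class,
            "csi": {"driver": "efs.csi.aws.com", "volumeHandle": file_system_id},
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{name}-pvc"},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }
    return pv, pvc


def media_volume_mount(claim_name, mount_path="/var/www/html/pub/media"):
    """Pod-spec fragments that mount the claim at the (assumed) media path."""
    volume = {"name": "media", "persistentVolumeClaim": {"claimName": claim_name}}
    mount = {"name": "media", "mountPath": mount_path}
    return volume, mount


if __name__ == "__main__":
    pv, pvc = efs_volume_manifests("fs-EXAMPLE")  # placeholder file system ID
    for manifest in (pv, pvc):
        print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to files and applied with kubectl, which accepts JSON as well as YAML manifests. The volume and mount fragments go into the Magento Deployment's pod template.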

Scaling and auto-healing are handled by the Cluster Autoscaler. The EKS logs are uploaded to CloudWatch Logs. The nodes and pods are monitored with CloudWatch metrics and Kubernetes monitoring tools.

Kubernetes Node Structure

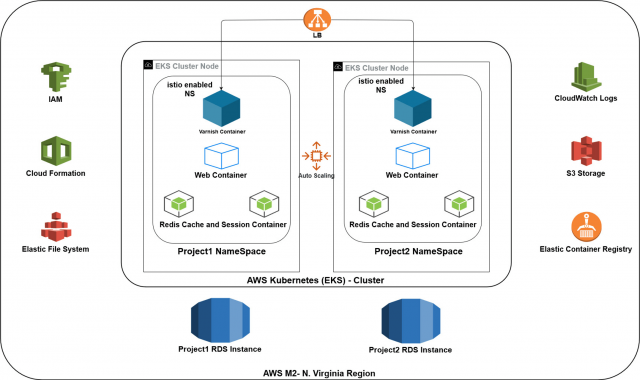

The node map explains more about internal pod isolation and the flow of requests handled by microservices. The infrastructure is very flexible and closely integrated with several components to provide security.

Fig. Insight of K8s Nodes

The containerized projects are easily deployable using CI/CD tools without affecting other environments. The CI/CD build phase packages the Docker container image and ships it as part of the deployment release. For the Magento 2 application, 95% of the deployment workload is handled by the CI/CD tool and finishes within a couple of minutes. The dev team is given the necessary access to the application through a jump box server and can access the containers. Access rights are securely managed and restricted to each team's specific projects.

This solution can work with any enterprise-level application and be deployed at any scale in a staging, UAT, or production environment using CloudFormation or Terraform templates.