To convert a UTF-8 encoded text file to ANSI in AWS Glue, you will typically work with Python or PySpark. Note, however, that ANSI is not a specific encoding; in a Windows context the term usually refers to Windows-1252 (or a similar 8-bit code page).
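To make the distinction concrete, here is a minimal sketch comparing the bytes Python produces for the same string under UTF-8 and under Windows-1252. The sample string is an arbitrary illustration, not taken from any real file.

```python
# Compare how the same text is stored under UTF-8 and Windows-1252.
sample = "caf\u00e9 \u20ac5"  # "café €5", written with escapes to avoid editor encoding issues

utf8_bytes = sample.encode("utf-8")
ansi_bytes = sample.encode("windows-1252")

# Non-ASCII characters take several bytes in UTF-8 but exactly one byte in Windows-1252.
print(len(utf8_bytes), utf8_bytes)
print(len(ansi_bytes), ansi_bytes)
```

Because the byte sequences differ for every non-ASCII character, a program that expects Windows-1252 will misread a UTF-8 file unless the file is re-encoded.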

AWS Glue runs on Apache Spark, which uses UTF-8 as its default encoding. Spark's plain-text writer produces UTF-8 output, so converting to ANSI means handling the character encoding yourself when the file is written. A few workarounds exist.

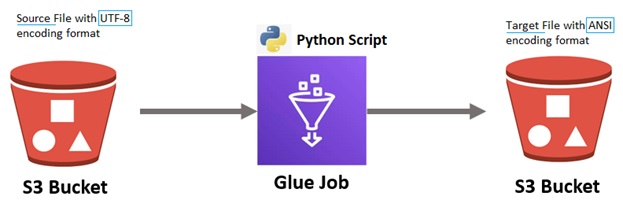

Here’s a step-by-step guide to converting a text file from UTF-8 to ANSI using Python in AWS Glue. Assume you’re working with a plain text file and want to output a similarly formatted file in ANSI encoding.

General Process Flow

Technical Approach Step-By-Step Guide

Step 1: Add the import statements to the code

import boto3
import codecs

Step 2: Specify the source/target file paths & S3 bucket details

# Initialize S3 client
s3_client = boto3.client('s3')

# Specify S3 bucket and file paths
bucket_name = 'your-s3-bucket-name'  # S3 bucket that holds both files
s3_key_utf8 = 'utf8_file_path/filename.txt'
s3_key_ansi = 'ansi_file_path/filename.txt'
input_key = s3_key_utf8   # S3 path/name of the input UTF-8 encoded file
output_key = s3_key_ansi  # S3 path/name where the ANSI encoded file is saved

Step 3: Write a function to convert the text file from UTF-8 to ANSI, based on the parameters supplied (S3 bucket name, source-file, target-file)

# Function to convert a UTF-8 file to ANSI (Windows-1252) and upload it back to S3
def convert_utf8_to_ansi(bucket_name, input_key, output_key):
    # Download the UTF-8 encoded file from S3
    response = s3_client.get_object(Bucket=bucket_name, Key=input_key)

    # Read the file content from the response body and decode it as UTF-8
    utf8_content = response['Body'].read().decode('utf-8')

    # Convert the content to ANSI (Windows-1252); 'ignore' silently drops
    # any character that has no Windows-1252 equivalent
    ansi_content = utf8_content.encode('windows-1252', 'ignore')

    # Upload the converted bytes to S3 (now in ANSI encoding)
    s3_client.put_object(Bucket=bucket_name, Key=output_key, Body=ansi_content)
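The `'ignore'` error handler silently drops every character that Windows-1252 cannot represent. As a rough sketch, using an arbitrary sample string, the snippet below contrasts it with Python's standard `'replace'` and `'strict'` handlers so the trade-off is visible before you pick one.

```python
# Contrast Python's standard error handlers when encoding to Windows-1252.
# The euro sign exists in Windows-1252; the arrow and the CJK characters do not.
text = "Price: 10\u20ac \u2192 \u4f60\u597d"

dropped = text.encode("windows-1252", "ignore")    # unmappable characters vanish
replaced = text.encode("windows-1252", "replace")  # each unmappable character becomes b'?'

try:
    text.encode("windows-1252", "strict")          # raises on the first unmappable character
    strict_failed = False
except UnicodeEncodeError as exc:
    strict_failed = True
    print(f"strict mode failed at position {exc.start}: {exc.reason}")

print(dropped)
print(replaced)
```

`'replace'` keeps the text length stable and leaves a visible marker, while `'strict'` is the safer choice when losing data without notice is unacceptable.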

Step 4: Call the function that converts the text file from UTF-8 to ANSI

# Call the function to convert the file
convert_utf8_to_ansi(bucket_name, input_key, output_key)
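Since the conversion can lose characters without any warning, it may help to inspect the text before converting it. The helper below is a hypothetical addition (the name `find_unmappable` is not part of the original script) that lists every character Windows-1252 cannot represent, along with its position in the text.

```python
def find_unmappable(text, encoding="windows-1252"):
    """Return (index, character) pairs that cannot be encoded in `encoding`."""
    problems = []
    for index, char in enumerate(text):
        try:
            char.encode(encoding)
        except UnicodeEncodeError:
            # This character has no equivalent in the target encoding
            problems.append((index, char))
    return problems


# Arbitrary sample: accented Latin letters are fine, the check mark is not.
sample = "Na\u00efve r\u00e9sum\u00e9 \u2713"
for index, char in find_unmappable(sample):
    print(f"position {index}: U+{ord(char):04X} cannot be stored in Windows-1252")
```

In a Glue job, one option would be to run such a check on the decoded content and log the result before uploading the converted file.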

Summary:

The steps above are useful for anyone (developer, tester, analyst, etc.) who needs to convert a UTF-8 encoded TXT file to ANSI format, for example as part of file validation.