SERPs will change, AI will be a big part of the changes, and SEO remains relevant.

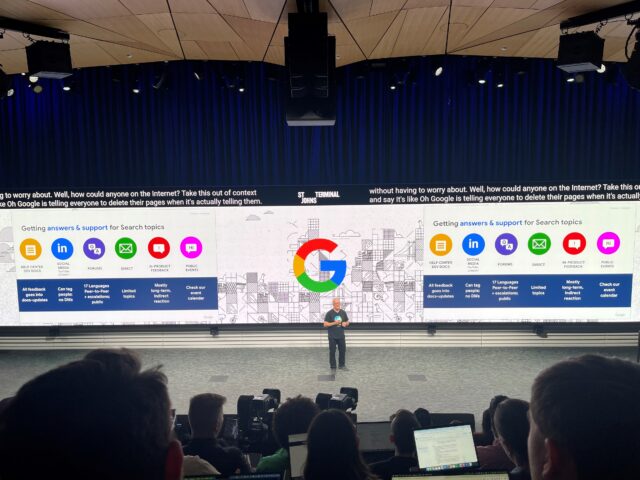

Those were key points made at Google’s Search Central Live in New York City on March 20.

“Search is never a solved problem,” according to Google’s Liz Reid (quoted at the event). John Mueller pointed out that “15% of all searches are new every day.” This means Google has to work hard to keep its index fresh and to run algorithms that can serve results meeting user needs.

AI Overviews

The biggest change in SERPs over the past year is the introduction of what Google calls AI Overviews (AIO), a form of featured snippet on ’roids. Unlike featured snippets, AIO is generated text based on multiple sources, often with no easily found links to other websites.

The impact of AIO on click-through rates (CTR) is not yet fully clear, but client data I have seen, along with studies by SEO pros and vendors, shows a 50-75% decrease in CTR, depending on the method. AIO can improve CTR for pages that rank so low that even the meager click-through rates of links cited in an AIO beat those of their organic listings.

Attendees harshly criticized Google’s refusal to share AIO traffic data in Search Console’s Performance report. A Google rep argued that AIO is still new and changing so much that it doesn’t make sense to share the data at this point. That argument did not seem to go over well.

Spam, Penalties, and Site Reputation Abuse

Site Reputation Abuse is a manual action that also entered the chat last year. It penalizes websites that use third-party content to rank with the help of signals earned by first-party work. (Some SEO practitioners refer to this as “parasite SEO,” which makes little sense since the relationship is symbiotic.)

Neither the quality of the third-party content nor the publisher’s intent matters in the assessment.

Google’s Danny Sullivan said that the spam team works hard to decide whether a site is guilty of reputation abuse.

Sullivan stressed that Google “does not hate freelancers.” In some freelance and publisher circles, there is a fear that freelance work is third-party content in Google’s eyes.

He also stated that Google penalizes spam practices, not people or sites (although a site would obviously be affected when penalized for using spam practices).

Freelancers who write for a penalized site will not see their work on other sites penalized, nor will those other sites be affected simply because they publish content by someone who has also written for a penalized site.

How big is the spam problem for Google?

Half of the documents Google encounters on the web are spam, said Sullivan, but 99% of search sessions are free of spam. These numbers are, of course, based on Google’s definitions and measurements. You may view it differently.

Brand, Ranking, and E-E-A-T

Brand has become a catch-all, cure-all term in the SEO industry. It isn’t quite that, but it is important. I define a brand as a widely recognized solution for a specific problem. People prefer brands when looking for a solution to a problem. It takes work to become a brand.

Sullivan said Google’s “systems don’t say brand at all,” but “if you’re recognized as a brand… that correlates with search success.” The signals Google collects tend to line up with brands.

Mueller pointed out that you can’t “add E-E-A-T” to a website; it’s not something you sprinkle on a page. Putting an “About” link on your site won’t help you rank.

The acronym E-E-A-T stands for experience, expertise, authoritativeness, and trustworthiness. Google primarily uses these concepts to explain to its search quality raters what to look for when evaluating the quality of pages linked from the search results.

Google’s search quality rater guidelines give many examples of companies with high E-E-A-T. For example, Visa, Discover, and HSBC are mentioned as companies with high E-E-A-T for credit card services. Brand is a more common and sweeping, if vaguer, way to say E-E-A-T.

Visa did not become a brand by having a Ph.D. in financial services write essay-length web pages on the wonders of credit cards. E-E-A-T, like brand, is an outcome, not an action.

Sullivan said Google is looking for ways “to give more exposure to smaller publishers.” He also noted that many sites just aren’t good, and asked why Google should rank your site if thousands of other sites are just as good or better.

This is where brand becomes the answer. Become a widely recognized solution, and the signals Google uses will work to your advantage (though not always to the extent you want them to work).

Sundry Points Made at the Event

- Google doesn’t have a notion of toxic links.

- Use the link disavow tool only to disavow links you have purchased.

- Google does not crawl or index every page on every site.

- If a page is not “helpful,” the answer is not simply to make it longer. More unhelpful content does not make the page more helpful.

- Being a Google Partner does not give an agency an internal connection to Google for SEO help.

- You don’t need to fill your site with topical content to rank. This is especially true for local SEO (the specific question was about “content marketing,” which is a lot broader than putting content on your site, but Mueller spoke only about on-site content).

- Duplicate content is more of a technical issue than a quality issue. Duplicate content can have an unwanted impact on crawling, indexing, and ranking.

- Structured data is much more precise than an LLM. It’s also much cheaper.

- Structured data isn’t helpful unless a lot of people use it. Google’s structured data documentation tells you which types Google uses and how.

- Structured data is not a ranking signal, but it can help you get into SERP features that drive traffic.

- Google Search does not favor websites that buy AdWords or carry AdSense.