In a previous blog post I talked about how administrators and architects should place more emphasis on planning for application sharing bandwidth in their Skype4B deployments. Armed with that information, the next logical progression of this blog series continues the focus on application sharing and discusses the available methods within Skype4B to manage and control the bandwidth requirements for application sharing.

General Methods of Controlling Bandwidth

Speaking broadly, there are typically two methods of controlling any sort of application bandwidth across enterprise networks. The two methods are not mutually exclusive and can be used in concert, but it is largely up to the network engineers and the application engineers to work together to find the best solution for the environment.

Control the traffic at the network

For most of the network engineers out there, this is the “preferred method”. Much like highways separate traffic into “normal lanes” and “HOV lanes”, network traffic is separated, classified and handled according to rules configured by the network engineers, giving preferential treatment (and bandwidth) to high-priority traffic and normal treatment to everything else. This is generally referred to as Quality of Service (QoS). QoS is typically seen in two forms:

- Differentiated Services – This QoS model marks traffic at two layers of the OSI stack:

  - Class of Service (CoS) – A Data Link Layer classification method whereby a 3-bit CoS field is carried in the 802.1Q VLAN tag of the Ethernet header

  - Differentiated Services Code Point (DSCP) – A Network Layer classification method whereby a 6-bit DSCP value is carried in the 8-bit Differentiated Services field of the IP header

  - This is the more common QoS model and the preferred model today.

- Integrated Services – This QoS model uses a traffic specification (TSPEC) and a request specification (RSPEC) to define and classify traffic into unique flows. It is no longer commonly deployed.

In either case, network engineers can control, on a per-hop basis, how each class of traffic is treated along the entire network path. While flexible and powerful, this method requires engineers to know all the types of traffic on the network and to classify each one accordingly (and consistently across all devices), which can be an arduous task and can result in traffic being classified incorrectly. In addition, as more and more applications move to SaaS delivered across the Internet, QoS markings are no longer honored once the traffic leaves the corporate network, so QoS cannot be guaranteed end-to-end.

Control the traffic through the application

For most of the application engineers out there, this is the “preferred method”. Think of this as the “honor system” where some type of built-in application configuration tells the application to limit itself to X bandwidth when utilizing the network. While undoubtedly easy to deploy, this method has no awareness of other traffic around it and no integration with network devices that send/receive traffic, which results in a more limited “one-size-fits-all” approach.

How does Skype4B fit in?

In almost all enterprise deployments, architects and engineers desire to identify the traffic produced by Skype4B and fit that traffic within the available enterprise network configurations. Skype4B offers both of the configurations above and can be configured for one or both to suit the needs of the enterprise network.

Limit Application Sharing Bandwidth Within On-Premises Skype4B Conference Policies

This method is by far the easiest way to limit application sharing bandwidth within Skype4B, and Conferencing Policies are the only built-in method of doing so. By default, the Global policy sets AppSharingBitRateKb to 50000, which places no functional restriction on bitrate.

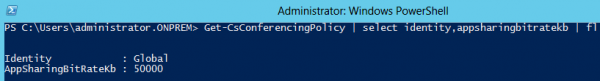

Get-CsConferencingPolicy | Select-Object Identity,AppSharingBitRateKb | Format-List

If you decide to limit bandwidth, two approaches could be taken:

- Alter the Global policy – while this approach is easiest, it will impact all users that don’t have a site or user policy assigned to them. As a result, you may limit users to an artificially low bandwidth in certain sites that have sufficient bandwidth.

- Create a Site or User policy – this approach allows flexibility in deployment by tailoring bandwidth limits on a per-site or per-user basis. As a result, you can limit users in a low-bandwidth site to a low bitrate while allowing users in high-bandwidth sites a higher bitrate.

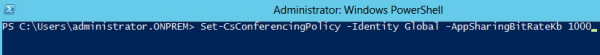

Set-CsConferencingPolicy -Identity Global -AppSharingBitRateKb 1000

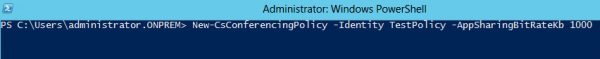

New-CsConferencingPolicy -Identity TestPolicy -AppSharingBitRateKb 1000
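A user-scoped policy such as TestPolicy does nothing until it is granted to someone, whereas a site-scoped policy applies on its own. The following is a minimal sketch of both approaches; the site name and the user address are placeholders I made up for illustration, not values from any real deployment.

```powershell
# Assumptions: "site:BranchOffice" and "user@contoso.com" are placeholder names.

# A site-scoped policy applies automatically to users homed in that Skype4B site.
New-CsConferencingPolicy -Identity "site:BranchOffice" -AppSharingBitRateKb 1000

# A user-scoped policy (such as TestPolicy) must be granted explicitly.
Grant-CsConferencingPolicy -Identity "user@contoso.com" -PolicyName "TestPolicy"

# Confirm which policies exist and what bitrate each one allows.
Get-CsConferencingPolicy | Select-Object Identity, AppSharingBitRateKb
```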

Limits of this approach

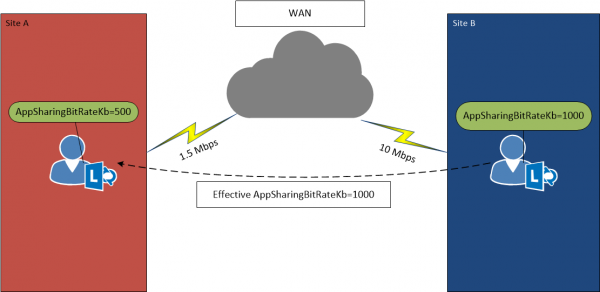

While the second option above is my preferred option for handling bandwidth natively within Skype4B, it does come with limits. The biggest current limitation is that the AppSharingBitRateKb parameter is applied per user and applies only to the presenter. This becomes a challenge when a low-bandwidth branch site communicates with larger sites that have far more bandwidth.

In the figure above, when the user in Site B goes to share their desktop with the user in Site A, the effective limitation is based on the user in Site B. With Site A limited to 1.5 Mbps, that could result in 67% of available bandwidth being consumed for a single desktop share. When you add in the possibility of multiple users sharing desktops between the two sites, oversubscription of the 1.5 Mbps circuit becomes a very real risk. Also of note is that this AppSharingBitRateKb behavior is applied both in P2P calls and within multiparty calls.

Impact of this approach with Lync for Mac

Through several of my deployments I’ve noticed what seems to be a significant difference in RDP performance on Mac OS clients, especially the Retina display models, when the AppSharingBitRateKb setting is reduced to a low value. Interestingly enough, the low value doesn’t seem to impact Windows-based clients, but users on Lync 2011 for Mac reported that application sharing was largely unusable. In scenarios where there are Lync for Mac clients, I would strongly suggest only using the Optimal numbers referenced in my previous post for the AppSharingBitRateKb setting.

Limit Application Sharing Bandwidth Within Skype4B Online Conferencing Policies

Sadly, this is a bit of a bait-and-switch because it really isn’t an option at all. Microsoft pre-creates and manages all conferencing policies within Skype4B Online, so you cannot create new policies or edit existing ones. As a result, any user accounts that are homed Online are restricted to the AppSharingBitRateKb value Microsoft has defined, which is currently 50000. For all intents and purposes, application sharing bandwidth is not restricted in Skype4B Online deployments, so architects and engineers should carefully plan their network ingress/egress points to ensure sufficient bandwidth is available. If bandwidth restrictions are required in Skype4B Online deployments, you must look to QoS for each modality instead.

Limit Application Sharing Bandwidth for On-Premises Skype4B Deployments through QoS

There are a number of articles on the Internet that already talk about this overall process, but it boils down to telling the Skype4B client to utilize certain port ranges for each modality and then configuring client/network devices to treat each modality in a certain way by dedicating queues (loosely equal to bandwidth) at each network hop. The prerequisite to all of this is that individual port ranges must be specified first. The overall process of doing so consists of:

- Configure port ranges for clients – Remember that each port range should be unique and not overlap. Additionally, you should have at least 20 ports per modality.

- Configure port ranges for the MCUs – Remember that each port range should be unique and not overlap. Since MCUs handle modalities from multiple clients, be very careful about reducing the range to a small number of ports per modality. Observe best practices here and stick as closely as possible to what Microsoft publishes on TechNet.

- Configure port ranges for Mediation Servers – Remember that the audio port range should be unique and not overlap any other server port ranges, such as video or application sharing. Since the Mediation Server handles all traffic to/from IP-PBXs and the PSTN, be very careful about reducing the number of ports. Observe best practices here and stick as closely as possible to what Microsoft publishes on TechNet.
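The steps above can be sketched roughly as follows. The client port numbers are illustrative assumptions only (any unique, non-overlapping ranges of at least 20 ports would do), and the server-side commands deliberately only read the current values so nothing on the MCUs or Mediation Servers is changed by accident.

```powershell
# Assumption: the starting ports below are illustrative; pick ranges that suit
# your environment and do not overlap one another.
Set-CsConferencingConfiguration -Identity Global -ClientMediaPortRangeEnabled $true `
    -ClientAudioPort 50020 -ClientAudioPortRange 20 `
    -ClientVideoPort 58000 -ClientVideoPortRange 20 `
    -ClientAppSharingPort 42000 -ClientAppSharingPortRange 20 `
    -ClientFileTransferPort 42020 -ClientFileTransferPortRange 20

# Review the current MCU (Conferencing Server) ranges before changing anything.
Get-CsService -ConferencingServer |
    Select-Object Identity, AudioPortStart, AudioPortCount, VideoPortStart, VideoPortCount, AppSharingPortStart, AppSharingPortCount

# Review the current Mediation Server audio range.
Get-CsService -MediationServer |
    Select-Object Identity, AudioPortStart, AudioPortCount
```

Reading the server values first makes it easier to confirm that the client ranges you choose do not collide with anything already in use.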

Once port ranges are defined, the clients and servers will begin utilizing those ranges for each modality when communicating on the network. With individual port ranges in use, architects can then begin to classify the traffic for QoS using two main methods:

- Mark DSCP utilizing Group Policy based QoS policies on Windows clients and servers – This method is typically easiest but requires that access-layer switches trust DSCP markings coming from the endpoints. If switch ports aren’t configured to trust them (mls qos trust dscp on Cisco Catalyst switches, for example), the DSCP markings are overwritten and the classification is lost.

- Mark DSCP utilizing class-map policies based off the port ranges per modality – This method requires more work to configure every access-layer switch across the enterprise to mark DSCP based on either source or destination port ranges, but is sometimes the only available option if network engineers choose not to trust client/server DSCP markings.
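As a rough sketch of the first method, the same kind of policy-based QoS rule that Group Policy deploys can be created locally with the NetQos cmdlets on Windows 8 / Windows Server 2012 or later, which is handy for testing on a single machine. The port range here is an assumption and must match whatever application sharing range you configured for your clients; the DSCP value of 18 follows the typical application sharing marking.

```powershell
# Assumptions: the 42000-42019 source port range and the policy name are
# placeholders. lync.exe is the executable name of the Skype4B desktop client.
New-NetQosPolicy -Name "Skype4B AppSharing" `
    -AppPathNameMatchCondition "lync.exe" `
    -IPProtocolMatchCondition Both `
    -IPSrcPortStartMatchCondition 42000 `
    -IPSrcPortEndMatchCondition 42019 `
    -DSCPAction 18 `
    -NetworkProfile All

# Review the policy that was just created.
Get-NetQosPolicy -Name "Skype4B AppSharing"
```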

A typical DSCP/CoS classification of traffic is listed below:

| Modality | DSCP Value | CoS Value |
| --- | --- | --- |
| Audio | 46 | 5 |
| Video | 34 | 4 |
| SIP Signaling | 24 | 3 |
| Application Sharing | 18 | 2 |
| File Transfer | 10 | 1 |

Once you have calculated the expected application sharing bandwidth through the Lync Bandwidth Calculator for each site in your environment, network engineers can configure QoS queues appropriately to handle the expected traffic.

Limit Application Sharing Bandwidth for Online Skype4B Deployments through QoS

This method is similar to on-premises deployments but is limited because administrators cannot define the port ranges utilized for Online Skype4B deployments. Microsoft has the following port ranges pre-created for clients that are homed Online:

| Source Port | Protocol | Usage |
| --- | --- | --- |
| 50000-50019 | UDP | Audio |
| 50020-50039 | UDP | Video |
| 50040-50059 | TCP | Application Sharing and File Transfer |

Architects still have the option to utilize Group Policy based QoS policies or class-map policies, but those have two major limitations:

- Having QoS in place mostly benefits P2P traffic – In this scenario the application sharing never leaves your corporate network so it can be controlled end-to-end.

- Skype4B Meetings and multi-party communications lose QoS upon egressing to the Internet – In this scenario you can maintain QoS up to the point where the traffic leaves your network. This can be helpful on your network, especially for endpoints that may need to traverse the corporate WAN before egressing to the Internet, but you are still at the mercy of traffic patterns on the Internet for a great deal of the hops and thus cannot guarantee QoS end-to-end.

Despite not being able to maintain QoS end-to-end in an Online deployment, you can still classify the traffic into discrete queues to ensure the bandwidth is allocated for application sharing which in turn ensures that application sharing bandwidth doesn’t impact other traffic on your network. Without reinventing the wheel, check out this blog post for information on configuring QoS for Online deployments.
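Because the Online port ranges are fixed, the marking rules can be generated straight from the port table in this section. This is only a sketch for a single Windows client: the policy names are placeholders, the DSCP values are the typical ones used for on-premises deployments, and marking the shared 50040-50059 range with 18 means file transfer receives the same marking as application sharing.

```powershell
# Port ranges and protocols come from the Online port table in this section.
# Assumptions: policy names are placeholders; DSCP values follow the typical
# audio/video/application sharing markings.
$modalities = @(
    @{ Name = "Skype4B Online Audio";      Protocol = "UDP"; First = 50000; Last = 50019; Dscp = 46 },
    @{ Name = "Skype4B Online Video";      Protocol = "UDP"; First = 50020; Last = 50039; Dscp = 34 },
    @{ Name = "Skype4B Online AppSharing"; Protocol = "TCP"; First = 50040; Last = 50059; Dscp = 18 }
)

foreach ($m in $modalities) {
    # One policy per modality, matched on the client executable and source ports.
    New-NetQosPolicy -Name $m.Name `
        -AppPathNameMatchCondition "lync.exe" `
        -IPProtocolMatchCondition $m.Protocol `
        -IPSrcPortStartMatchCondition $m.First `
        -IPSrcPortEndMatchCondition $m.Last `
        -DSCPAction $m.Dscp `
        -NetworkProfile All
}
```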

The best option: utilize both application limits and QoS

For every on-premises deployment out there you should absolutely be coordinating application limiting in concert with QoS. In doing so you gain the following benefits:

- Predictable application bandwidth limits – by configuring the AppSharingBitRateKb parameter you cap the amount of bandwidth that Skype4B clients will request

- Predictable QoS queue utilization – with expected bandwidth figures from the Skype4B team, network engineers can map expected bandwidth consumption to available queues.

- Better management through improved communication between network and application teams – this one may not seem obvious, but the more the two teams talk, the less chance there is of misconfiguration and the better the solution is for the end user. Questions worth working through together include:

  - If queues become saturated, should the application reduce bandwidth (and potentially harm the end user performance), should queues be increased, or potentially both?

  - If network bandwidth increases at a site, are queues going to be increased and if so, how will that impact the configuration within the application?

  - Based on usage patterns of existing sites, how should network engineers plan for bandwidth for new sites that come online?

Note: There are a number of other questions here that I’ve left out, but in every case the answers benefit both the network team AND the application team.

For Online-only deployments, you should still utilize QoS even though you are limited in configuring the application. Despite granular application control not being available, network engineers can still classify traffic and give a roughly-predictable utilization for each queue of traffic (application sharing included).

But what about Call Admission Control?

An astute reader would point out that Skype4B has Call Admission Control (CAC), which can be used to restrict traffic as well, and that reader would be correct that additional control may be available. However, CAC is only available for the following configurations:

- On-premises deployments – CAC is currently not available for users that are homed Online

- Audio/Video modalities – CAC is currently available for audio and video modalities only. Application sharing is not a supported CAC modality today.

If CAC is available for your deployment, configure it in tandem with your QoS configuration. In fact, CAC is my preferred approach to bandwidth limits for audio and video modalities because it allows flexibility per-site AND includes reporting. Conferencing Policy settings for audio and video follow each user regardless of where the user physically resides on the network, which can result in a user consuming more bandwidth than is available when travelling between sites. Since application sharing isn’t supported in CAC today, architects need to rely on Conferencing Policies for the foreseeable future.
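For reference, a CAC bandwidth profile looks roughly like the sketch below. The profile name, the limits (in Kbps) and the network site name are all made-up placeholders, and the network site is assumed to exist already. Notice that the profile only exposes audio and video limits, which is exactly why application sharing has to be handled elsewhere.

```powershell
# Assumptions: the profile name, limits (Kbps) and site name are placeholders.
# The profile has audio and video limits only; there is no application sharing limit.
New-CsNetworkBandwidthPolicyProfile -Identity "Branch_1500Kb" `
    -AudioBWLimit 500 -AudioBWSessionLimit 100 `
    -VideoBWLimit 700 -VideoBWSessionLimit 350

# Attach the profile to an existing network site.
Set-CsNetworkSite -Identity "BranchOffice" -BWPolicyProfileID "Branch_1500Kb"

# CAC is only enforced once the bandwidth policy check is enabled.
Set-CsNetworkConfiguration -EnableBandwidthPolicyCheck $true
```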