With the popularity of Oracle Planning and Budgeting Cloud Service (PBCS) and Enterprise Planning and Budgeting Cloud Service (EPBCS), we have seen an increase in the number of rather complex deployments. Often, the complex deployments require substantially more data to be loaded into the application resulting in a larger application.

Recently, we encountered some very long database refresh times where the application refresh would take several hours instead of minutes, as most environments typically require.

This blog will examine an alternate approach that can be utilized to address this challenge via EPM Automate.

Foundation

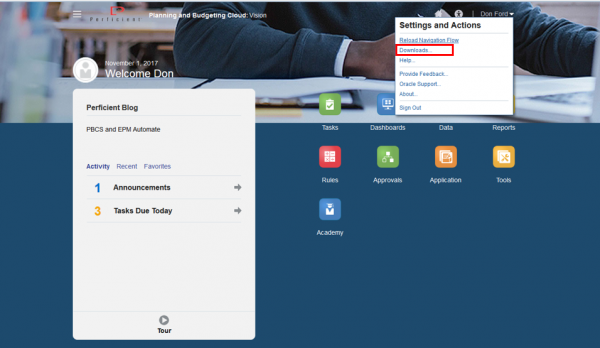

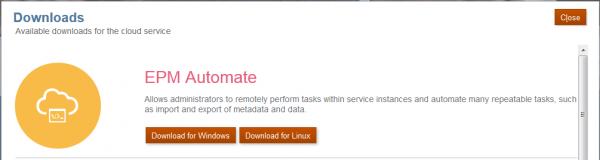

The first task is to download the latest version of EPM Automate. You can download EPM Automate from the profile menu in PBCS/EPBCS – “Downloads” – second option from the top.

For more details on EPM Automate and its other capabilities – please see this blog post from my colleague Celeste Qian.

Step One – Execute Lev0 Data Export

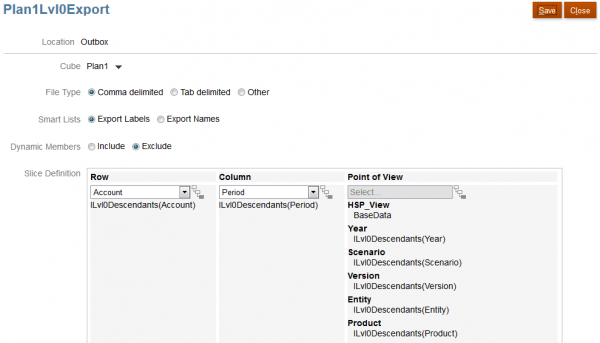

The easiest approach to automate this task is to create a job in PBCS/EPBCS with the relevant selections for each dimension per plan type. In the illustration below, I want a level zero export from Plan Type 1.

One key aspect of this job is to specify the Inbox/Outbox Explorer in PBCS/EPBCS as the location for the data export. This will provide the ability to bring the data back into the Plan Type without being dependent upon a file stored in a location outside of the cloud environment.

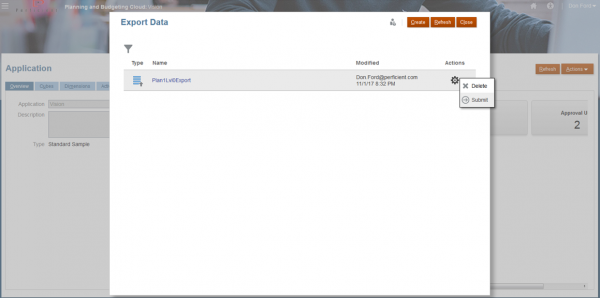

The “Plan1Lvl0Export” job is now available for execution. By clicking on the “Action” options – I have the option to “Submit” this job.

After successful creation of this job, I can now reference this job to export the level zero data from Plan Type 1.

I can use the following EPM Automate commands to execute the job:

….

REM Step 1 – Execute Lev0 Data Exports

Call epmautomate exportdata Plan1Lvl0Export

…

After the job is completed, the planner can navigate to the Inbox/Outbox Explorer and validate that the export was successful.

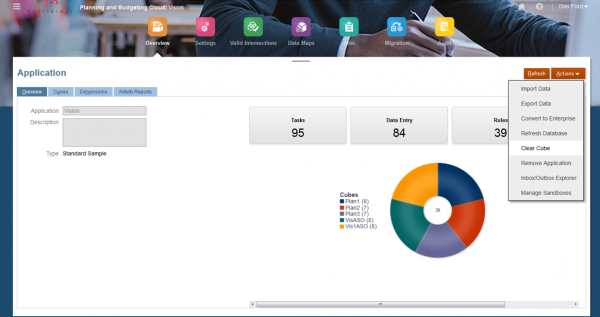

Step Two – Execute Clear Data in Cube

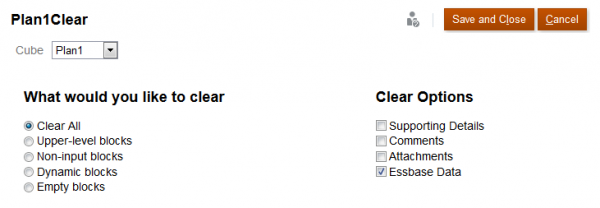

The next task is to clear all of the data in the cube. Similarly, the best approach to this task is to create a job in PBCS/EPBCS to clear the data in the cube. In the illustration below, I want a level zero export from Plan Type 1.

The job in PBCS/EPBCS will clear all blocks and all of the Essbase data as indicated by the options selected below.

After successful creation of this job, I can now reference this job to clear all of the data from Plan Type 1.

Consider the updated script below:

REM Step 2 – Execute Clear Data in Cube

Call epmautomate clearcube Plan1Clear

Step Three – Execute Refresh Database

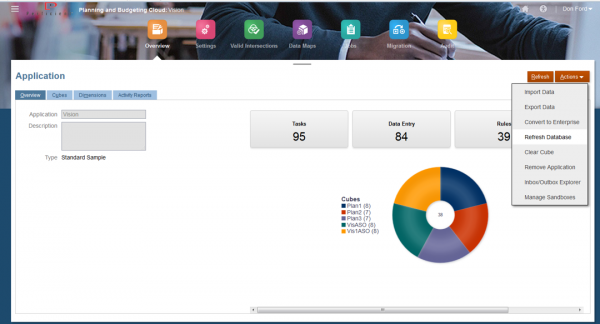

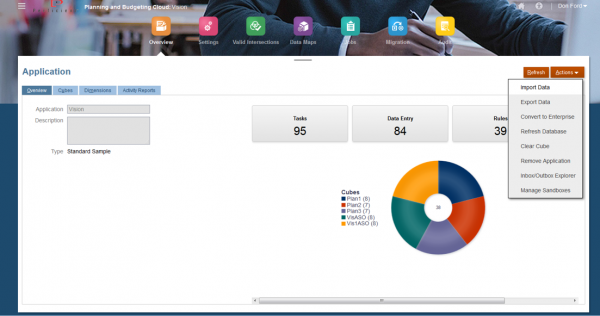

With the data export completed and the data cleared, we can now refresh the cube. Just as we have done previously, the best approach is to create a job in PBCS/EPBCS to refresh the database.

The new refresh database job is setup in PBCS/EPBCS and given the name ‘RefreshVision.’

The next step is to reference this new job in the EPM Automate script.

REM Step 3 – Execute Refresh Database

Call epmautomate refreshcube RefreshVision

The efficiency of this step is due to the clear cube job (Step 2). The efficiency of this step is evident in large (from a data standpoint) applications because a restructure or refresh of the database is completed in a shorter amount of time because the cube is empty.

Step Four – Execute Level 0 Data Import from the Inbox/Outbox Explorer

With the database refresh complete, the next step is to restore or import the data back into the cube.

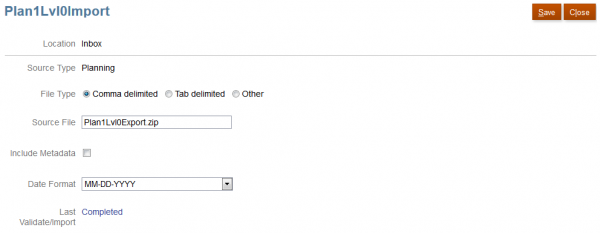

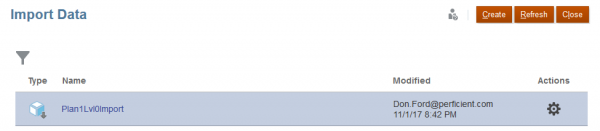

As you can see from the pattern we have established, the best approach is to create a job in PBCS/EPBCS to import the data. This job will reference the export completed (Step 1).

As you can see from the image below, the File Type is comma delimited and the Source File is the name of the exported file (Step 1). The only modification we make here is to add the file extension ‘zip’ to the end of the file name.

Lastly, we give this import a name – Plan1Lvl0Import.

Just as we have done before, we reference this job in our EPM Automate script. The format for import step is slightly different in that we reference both the name of the job and the name of the data file to be imported.

REM Step 4 – Execute Level 0 Data Import from the Inbox/Outbox Explorer

Call epmautomate importdata Plan1Lvl0Import Plan1Lvl0Export.zip

Conclusion

After, breaking down each of the four steps – we are able to put it all together in a complete EPM Automate script.

@echo on

REM This is a demo script for discussing basic strategies to leverage EPM Automate

SET url=https://fastforward-perficient.pbcs.us2.oraclecloud.com

SET username=Don.Ford@perficient.com

SET password=[password]

SET identity_domain=perficient

Call epmautomate login %username% %password% %url% %identity_domain%

if %ERRORLEVEL% NEQ 0 goto :ERROR

REM Step 1 – Execute Lev0 Data Exports

Call epmautomate exportdata Plan1Lvl0Export

REM Step 2 – Execute Clear Data in Cube

Call epmautomate clearcube Plan1Clear

REM Step 3 – Execute Refresh Database

Call epmautomate refreshcube RefreshVision

REM Step 4 – Execute Level 0 Data Import from the Inbox/Outbox Explorer

Call epmautomate importdata Plan1Lvl0Import Plan1Lvl0Export.zip

REM Step 5 – Aggregate Data

Call epmautomate runbusinessrule “Aggregate Strategic Plan Versions”

REM Logout

Call epmautomate logout

if %ERRORLEVEL% NEQ 0 goto :ERROR

:EOF

echo Script Completed Successfully

exit /b %ERRORLEVEL%

:ERROR

echo Failed with error #%ERRORLEVEL%.

exit /b %ERRORLEVEL%

Note: The password is intentionally removed from this script. If you would like to learn how to encrypt your password when using EPM Automate, please see this blog post from my colleague Eddie Cisneros.

I did include one additional step in the above script – an aggregation step (Step 5). This step is included to ensure we roll-up our data to non-level zero sparse dimensions. This is necessary because our clear script cleared all data.

In summary, the EPM Automate script now contains each of the four steps we identified in the introduction:

- Export

- Clear

- Refresh

- Import

You can leverage this script in your PBCS/EPBCS Implementations where long database refresh times have become commonplace.