Performance testing a TM1 model begins with understanding performance testing in general.

Performance testing is an iterative process, and the expected outcome (acceptable performance) depends on both the TM1 application and the environment in which it is deployed. The goal of performance testing is to identify the response times, throughput, and resource utilization of the overall application. (In general, response time is a user concern, throughput is a business concern, and resource utilization is a system concern.)
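To make the first two of these measures concrete, here is a minimal sketch of how response time and throughput might be summarized from one test window. The function name `summarize` and its inputs are my own illustrative assumptions, not part of TM1 or any TM1 tooling; resource utilization is left out because it depends on whatever monitoring the environment provides.

```python
from statistics import mean, quantiles


def summarize(durations_s, window_s):
    """Summarize one test window (hypothetical helper, for illustration only).

    durations_s: response time of each completed request, in seconds.
    window_s:    wall-clock length of the test window, in seconds.
    """
    if not durations_s or window_s <= 0:
        raise ValueError("need at least one sample and a positive window")

    if len(durations_s) > 1:
        # Splitting into 20 groups gives 19 cut points; the last is the 95th percentile.
        p95 = quantiles(durations_s, n=20, method="inclusive")[-1]
    else:
        p95 = durations_s[0]

    return {
        "mean_response_s": mean(durations_s),               # user concern
        "p95_response_s": p95,                              # user concern (slow tail)
        "throughput_per_s": len(durations_s) / window_s,    # business concern
    }


summary = summarize([1.0, 2.0, 3.0, 4.0], window_s=8.0)
print(summary)
```

Reporting a high percentile alongside the mean is a design choice: a mean can look acceptable while a noticeable share of users still wait too long.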

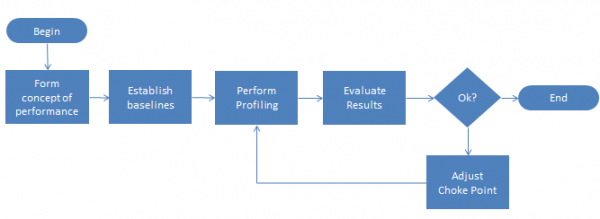

The image above outlines the flow of the performance testing process.

Performance & Performance Testing

The first step in performance testing is always to formulate a clear understanding of exactly what performance and performance testing really are. An application's performance plays a key role in its overall value, and a performance testing strategy is critical in establishing and maintaining acceptable application performance levels.

Performance

- Is Specific and Measurable (multiple performance KPIs should therefore be established for the application, and these KPIs should reflect the effect of time zones, the time of day or day of the month, the type of user, etc.).

- Includes intangibles (like the level of effort required to maintain the application being tested and the general usability of the application).

- Will change over time due to system maturity and other factors (and therefore requires regular (re)profiling and review of all established performance KPIs).

- Is Comparable (to similar applications or to older versions of itself; this is key to ensuring that performance expectations continue to be met over time).

- Is Multidimensional (application performance can be measured as the time, in specific units, needed to complete specific application tasks, as overall application memory usage during normal operations, etc.).
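As a rough illustration of the "specific, measurable, multidimensional" points above, the sketch below profiles a single task on two dimensions at once: elapsed time and peak memory. Everything here is an assumption for illustration. `profile_task` and `build_rows` are hypothetical names, and `tracemalloc` only sees memory allocated by the Python test process itself, so it stands in for (and does not replace) measuring memory on the TM1 server.

```python
import time
import tracemalloc


def profile_task(task, *args, **kwargs):
    """Run task once and return (result, elapsed_seconds, peak_bytes)."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = task(*args, **kwargs)
        elapsed = time.perf_counter() - start
        # get_traced_memory() returns (current, peak) in bytes.
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak


def build_rows(n):
    # Hypothetical stand-in for an application task, such as refreshing a view.
    return [i * 2 for i in range(n)]


result, elapsed, peak = profile_task(build_rows, 100_000)
print(f"{elapsed:.4f} s, peak {peak / 1024:.0f} KiB")
```

Running the same wrapper around each task of interest keeps the units and the method identical from one profiling session to the next, which is what makes the numbers comparable over time.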

Performance Testing

- Is an iterative process (profile, evaluate, adjust, repeat!) and an ongoing process (to be re-measured over time).

- Requires controlled, consistent and meaningful profiling methods (the same explicit process must be used for each profiling session).

- Is dependent upon established baselines (baselines are used to determine the degree of success or failure).

- Must be recorded and kept in a consistent manner (always use a standardized collection method and save everything in the same, safe repository, preferably a searchable database).

- Must be presented in a consistent, readable format (the format should summarize the data that has been collected to enable its analysis, and should allow a knowledgeable reader to understand what was reported and easily draw a conclusion).
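The points above about baselines, a searchable repository and a consistent format can be sketched in a few lines. This is only one possible shape, under my own assumptions: the table layout, the function names, the KPI name `view_refresh_s`, the 10% tolerance, and the rule that lower values are better are all hypothetical, and the sketch expects exactly one baseline row per KPI.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) the results repository; use a file path to persist it."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        " run_id TEXT, kpi TEXT, value REAL, is_baseline INTEGER DEFAULT 0)"
    )
    return conn


def record(conn, run_id, kpi, value, is_baseline=False):
    """Store one measurement using the same collection method every time."""
    conn.execute(
        "INSERT INTO results (run_id, kpi, value, is_baseline) VALUES (?, ?, ?, ?)",
        (run_id, kpi, value, int(is_baseline)),
    )
    conn.commit()


def compare_to_baseline(conn, run_id, tolerance=0.10):
    """Return {kpi: (baseline, measured, passed)} for one run.

    Assumes lower is better and one baseline row per KPI (illustrative only).
    """
    rows = conn.execute(
        "SELECT r.kpi, b.value, r.value FROM results r"
        " JOIN results b ON b.kpi = r.kpi AND b.is_baseline = 1"
        " WHERE r.run_id = ? AND r.is_baseline = 0",
        (run_id,),
    ).fetchall()
    return {kpi: (base, val, val <= base * (1 + tolerance)) for kpi, base, val in rows}


conn = open_store()
record(conn, "baseline", "view_refresh_s", 2.0, is_baseline=True)
record(conn, "run-001", "view_refresh_s", 2.1)
record(conn, "run-002", "view_refresh_s", 2.5)

report_1 = compare_to_baseline(conn, "run-001")
report_2 = compare_to_baseline(conn, "run-002")
for run_id, report in (("run-001", report_1), ("run-002", report_2)):
    for kpi, (base, val, passed) in report.items():
        print(f"{run_id} {kpi}: baseline={base} measured={val} {'PASS' if passed else 'FAIL'}")
```

Because every run lands in the same table and is reported in the same layout, a reader can compare any two sessions without first learning how each one was collected.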

Now that we are aligned on what is really meant by performance (and performance testing), we can proceed with establishing our baselines (which I will explain in my next post).

Until next time.