This is my second blog in a two-part series to introduce Data Quality Services (DQS) in SQL Server 2012. Please refer to my first blog which discusses the interactive client for DQS – Introduction to Data Quality Services (DQS) – Part I. In this blog, I will introduce the SQL Server Integration Services (SSIS) component which you can use for automating the data cleansing process versus using the client tool.

Note: this blog assumes that you have a basic understanding of SSIS and its basic functionality. Additionally, the DQS client install must be complete in order to use DQS in SSIS.

The DQS, SSIS component requires that a public Knowledge Base be created in order to cleanse the source data. I will be using the same Knowledge Base that I created in Part I. The component does not need to be installed on the same machine as the DQS Server.

(Click on each image to enlarge it.)

Identify the Data to be Cleansed

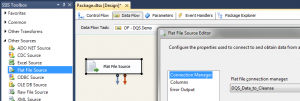

The DQS Cleansing Component can be found in the Data Flow Task SSIS Toolbox – not the Control Flow. So, don’t waste 10 minutes looking for it there like I did. Create an SSIS package and drag the Data Flow component onto the work area. Double-click the Data Flow Task to open the tab.

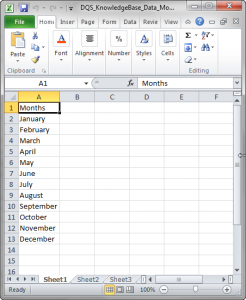

First, I need to identify the data source which needs to be cleansed. I will be using the same Excel file that was used in my first blog – below. However, please note that I have saved the file as a CSV. I have highlighted the rows which are incorrect and need cleansing.

Before I begin the DQS process, I need to create a new Connection Manager and a Flat File Source for the data which needs to be cleansed. I will be creating a new Connection Manager as indicated below. This will point to the CSV in the above screenshot. NOTE: the connection manager name – DQS_Data_to_Cleanse – this will be used in the next step.

Next, drag a Flat File Source from the SSIS Toolbox to the work area. Double-click on the component and select the Flat file connection manager from the drop-down that you previously created. In my example, I selected “DQS_Data_to_Cleanse.”

Using the DQS Cleansing Component in SSIS

The DQS Cleansing Component ingests input records, sends them to the DQS server, and returns the processed records. This component can output the corrected data, as well as additional columns that may be useful. For example, each mapped record and field contains a status. This status can be used to identify records which need further processing. According to MSDN,

it is recommended to use a Conditional Split component below the DQS Cleansing component, and configure it to split the records to groups based on the record status (or based on other columns such as specific field status).

For the Knowledge Base to cleanse the data, I will be using the same one (DQS_Example) that I created in my previous blog – below. This Knowledge Base contains the Months domain.

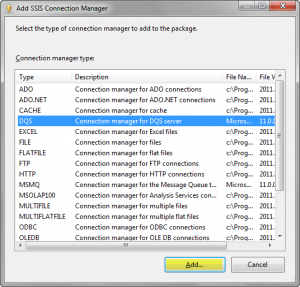

First, I will create a new DQS Connection Manager. Right-click in the connection managers tab and select “New Connection.” Select “DQS” for the Type and click “Add.”

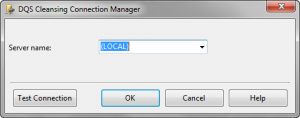

Type the Server Name and click “OK.” In this example, the DQS Server is on my LOCAL machine.

I have renamed the DQS connection manager to “DQS_LOCAL.” This will be used in the subsequent step.

In the SSIS Toolbox in the Data Flow Task, you will see the new DQS Cleansing component. Drag the component onto the work area and connect the Flat File Source Data Flow Path to the DQS Cleansing component.

Now, double-click the new component to edit it. I will now set the appropriate properties to use our Knowledge Base to cleanse the data. I have set the Data quality connection manager to “DQS_LOCAL” and the Data Quality Knowledge Base to “DQS_Example”; which was created in my previous blog.

The Mapping tab allows you to select the columns you want to cleanse, and map them to domains in your Knowledge Base. I have mapped the Flat File Source input column Months to the Months Domain in our Knowledge Base. NOTE: you can also change the names in the Alias columns. Additionally, the input data type must match the data type of the Knowledge Base in order to be considered for DQS processing.

The Advanced tab has different options which control which columns will be included in the output.

The below tables were taken from Matt Masson – MSFT blog, “Overview of the DQS Cleansing Transform.” The second table will be useful for understanding the appropriate split logic used in the Conditional Split component.

| Option | Description |

| Standardize output | Enables to standardize output according to domain settings (that can be defined in the DQS client application). There are two kinds of standardization. First is formatting output to Upper/Lower/Capitalize. Second is correcting to leading value (this is relevant in case of synonyms). You can see how this is defined in the client, Domain Management | Domain Properties tab. |

| Confidence | A score that is given to any correction or suggestion. This score reflects to what extent the DQS server (or the relevant Reference Data Source) has confidence in the correction/suggestion. |

| Reason | In case that the output is different than the original value, this field explains why. For example, it can be Invalid because of domain rule. Or, it can be Corrected because of utilizing DQS Cleansing algorithm, standardized to leading value, etc. |

| Appended Data (additional data received from the reference data provider) | This setting is used when there are domains attached to a Reference Data Source (RDS). In this case, sometimes the RDS returns additional information – not only values associated with the mapped domains. For example, when sending address, it can return also Lat/Long. The Appended Data field includes this additional information. |

| Appended Data Schema | If the RDS returned additional information, the schema field consists of the schema of how to interpret this data. |

| Encrypt connection | This determines whether the connection to SQL Server will be encrypted (using SSL). (Note, this setting will most likely be moved to the Connection Manager in the future) |

NOTE: The original values presented in his blog for the below table were not correct. For example, he had Corrected, but my value was Auto suggest.

| Status | Description |

| Correct | The value was already correct, and was not modified |

| Invalid | The value was marked as invalid for this domain |

| Auto Suggest | The value was incorrect, but DQS was able to correct it. The Corrected column will contain the modified value. |

| Unknown | The value wasn’t in the current domain, and did not match any domain rules. DQS is unsure whether or not it is valid. |

| Suggestion | The value wasn’t an exact match, but DQS has provided a suggestion. If you include the Confidence field, you could automatically accept rows above a certain confidence level, and redirect others to a separate table for later review. |

Next, I will setup a Conditional Split component to handle the Record Status values. As you can see in the below screenshot, I have 5 separate outputs based on the Record Status values.

Next, I will handle the outputs differently depending on their status. In my example, I will UNION ALL records with Record Status values of “Correct” and “Auto suggest” and UNION ALL records with Record Status values of “Invalid,” “Unknown,” and “Suggestion” separately. I will be outputting these records to separate flat files. This will demonstrate the ability to process the Record Statuses separately. For example, “Invalid,” “Unknown,” and “Suggestion” values may need manual intervention.

Upon execution of the package, you will see that out of the 12 records in the Source csv file, 3 records had the status of “Auto Suggest” and were therefore, corrected by the Knowledge Base.

Below are the 12 records that can be found in the “DQS_Destination_Correct_Corrected.txt” file which was created in the “Flat File Destination – Correct and Auto Suggest” component.

What’s Next?

This concludes my blog series on this topic. What additional topics related to SSIS and/or DQS are you interested in learning?

Great post Brett! I noticed Matt Masson is missing a couple record statuses in his table, New and Corrected.