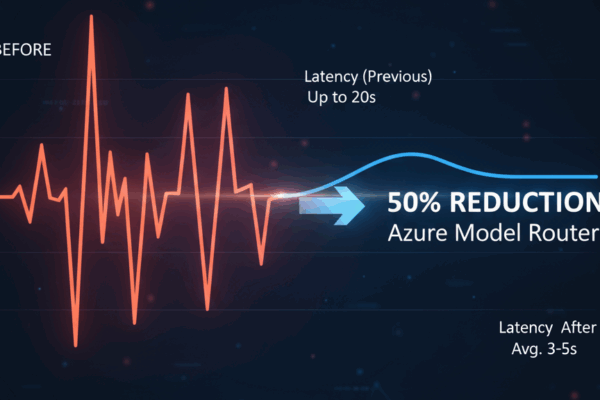

In any long-term enterprise AI deployment, you eventually hit a critical trade-off between model capability and operational performance. This was precisely the challenge we faced with Scarlett, Perficient’s internal chatbot that has been operating 24×7 for the better part of a decade. While leveraging a powerful model like GPT-5-mini provided the quality of responses we needed, the associated inference latency (peaking at nearly 20 seconds) was becoming a significant friction point for our users.

When you’re architecting a solution meant to increase productivity, a 20-second delay is more than an inconvenience; it’s a barrier to adoption. We needed a solution that could maintain response quality while drastically improving performance. This led us to test the preview of Microsoft Azure’s AI Foundry Model Router. Our goal was to see if this new orchestration layer could intelligently route traffic to mitigate our latency problem.

The Architectural Shift: From Single Endpoint to Intelligent Orchestration

Our previous architecture was straightforward: Scarlett’s application logic made calls directly to a provisioned Azure OpenAI gpt-5-mini endpoint. This is a standard pattern, but it means every single query, simple or complex, bears the full performance and cost overhead of that one large model.

The Model Router introduces a new abstraction layer. Instead of calling a specific model, we now direct all traffic to the Model Router’s single endpoint. Under the hood, the router analyzes the incoming prompt and routes it to the most appropriate model from a predefined pool.
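To make the shift concrete, here is a minimal sketch of what changes at the request level, assuming the standard Azure OpenAI chat completions URL pattern. The resource name, the deployment names (`gpt-5-mini`, `model-router`), and the API version string are placeholders for illustration, not Scarlett's actual configuration. The code only builds the request and makes no network call.

```python
import json
from urllib.parse import quote


def build_chat_request(endpoint, deployment, api_version, user_text):
    """Return (url, body) for an Azure OpenAI chat completions request."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{quote(deployment)}"
        f"/chat/completions?api-version={quote(api_version)}"
    )
    body = json.dumps({"messages": [{"role": "user", "content": user_text}]})
    return url, body


# Hypothetical values: substitute your own resource and deployment names.
ENDPOINT = "https://example-resource.openai.azure.com"
API_VERSION = "2024-10-21"  # placeholder; use the version your deployment requires

# Before: every query goes to one fixed model deployment.
before_url, _ = build_chat_request(ENDPOINT, "gpt-5-mini", API_VERSION, "Hello")

# After: the same request shape, pointed at the router deployment instead.
after_url, after_body = build_chat_request(ENDPOINT, "model-router", API_VERSION, "Hello")

print(before_url)
print(after_url)
```

The request body is identical in both cases; only the deployment segment of the URL differs, which is why the application logic does not need to change.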

The implementation was a simple change of endpoint, but the result was a fundamental shift in how our requests were processed. The data from the first few days of operation was compelling. We saw a dramatic and immediate reduction in end-user latency, from a volatile 10-20 seconds down to a stable 3-5 seconds.

This wasn’t just a marginal improvement; it was a greater than 50% reduction in average response time, which directly translates to a better user experience.
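As a quick sanity check on that figure, the arithmetic can be worked from the ranges quoted above (10-20 seconds before, 3-5 seconds after). The post reports ranges rather than measured averages, so using the midpoint of each range as a stand-in for the average is an assumption made here for illustration.

```python
def reduction_pct(before_s, after_s):
    """Percentage reduction in latency going from before_s to after_s."""
    return (before_s - after_s) / before_s * 100


before_range = (10, 20)  # seconds, range quoted in the text
after_range = (3, 5)     # seconds, range quoted in the text

# Assumption: treat the midpoint of each range as a rough average.
before_mid = sum(before_range) / 2
after_mid = sum(after_range) / 2

midpoint = reduction_pct(before_mid, after_mid)
# Most conservative pairing: best "before" against worst "after".
conservative = reduction_pct(before_range[0], after_range[1])
# Most optimistic pairing: worst "before" against best "after".
optimistic = reduction_pct(before_range[1], after_range[0])

print(f"midpoint: {midpoint:.1f}%")          # midpoint: 73.3%
print(f"conservative: {conservative:.1f}%")  # conservative: 50.0%
print(f"optimistic: {optimistic:.1f}%")      # optimistic: 85.0%
```

Even the most conservative pairing of the two ranges gives a 50% reduction, so "greater than 50%" reads as a floor rather than a typical value.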

Analyzing the Routing Logic and Business Impact

Digging into the metrics, we could see the “how” behind this performance gain. Across thousands of requests, the router distributed the load as follows:

- 85% of requests were routed to gpt-5-mini, confirming that most of our user queries still required a capable model.
- 15% of requests were offloaded to gpt-5-nano, a smaller and faster model, for simpler tasks that didn’t require the overhead of its larger counterpart.
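A split like this can be tallied from request logs. The sketch below assumes each chat completion response reports the underlying model the router selected in its `model` field, and that those values were logged; check the current service documentation to confirm this for your deployment. The sample log is synthetic, shaped to mirror the percentages above, and the version suffixes are illustrative.

```python
from collections import Counter


def routing_share(model_names, families):
    """Return the percentage of requests per model family, matched by prefix."""
    # Check longer family names first so overlapping prefixes resolve correctly.
    ordered = sorted(families, key=len, reverse=True)
    counts = Counter()
    for name in model_names:
        family = next((f for f in ordered if name.startswith(f)), "other")
        counts[family] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {family: 100 * n / total for family, n in counts.items()}


# Synthetic log of logged `model` values (not real production data).
logged_models = ["gpt-5-mini-2025-08-07"] * 85 + ["gpt-5-nano-2025-08-07"] * 15

shares = routing_share(logged_models, ["gpt-5-mini", "gpt-5-nano"])
print(shares)  # {'gpt-5-mini': 85.0, 'gpt-5-nano': 15.0}
```

Grouping by prefix keeps the tally stable if the service appends a version suffix to the model name.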

This dynamic allocation is the key. By intelligently offloading 15% of the queries, the router frees up resources and reduces the overall system latency. This technical efficiency produced a tangible business outcome. We track a user satisfaction score for Scarlett, and in the days following the implementation, we saw a clear uptick in satisfaction and overall message volume. Employees were not only happier with the tool, they were using it more.

An Architect’s Perspective and Next Steps

The most significant takeaway from this test was that the primary benefit of the Model Router, in our case, was the dramatic improvement in performance, even more so than the potential for cost optimization. It effectively solved our latency problem without requiring complex, custom-built orchestration logic in our application.

However, its “black box” nature presents new questions for architects. While the results are excellent, we lack direct control over, or detailed documentation of, the internal routing logic. Looking ahead, I am particularly interested in stress-testing its behavior with more complex scenarios, such as prompts containing very large system messages (2,000+ tokens) or those with extensive conversation histories (10,000+ tokens). Understanding how context size impacts the router’s model selection will be crucial for architecting the next generation of complex AI agents.
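One way such a stress test could be structured is a small matrix of system-message and history sizes, recording which model handles each combination. The sketch below is hypothetical: `send` is a caller-supplied function that would wrap the real router call and return the reported model name, token counts are approximated at four characters per token (a rough heuristic, not a tokenizer), and the `dry_run_send` stub makes no network call and says nothing about how the router actually behaves.

```python
from itertools import product

CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not an exact tokenizer


def filler(n_tokens):
    """Return filler text of approximately n_tokens tokens."""
    word = "lorem "
    n_chars = n_tokens * CHARS_PER_TOKEN
    return (word * (n_chars // len(word) + 1))[:n_chars]


def build_messages(system_tokens, history_tokens, question):
    """Build a chat message list with a padded system message and history."""
    messages = [{"role": "system", "content": filler(system_tokens)}]
    if history_tokens:
        half = history_tokens // 2
        messages.append({"role": "user", "content": filler(half)})
        messages.append({"role": "assistant", "content": filler(history_tokens - half)})
    messages.append({"role": "user", "content": question})
    return messages


def run_matrix(send, system_sizes, history_sizes, question="Summarize the conversation so far."):
    """Call send(messages) for every size combination and record the model it reports."""
    results = []
    for sys_t, hist_t in product(system_sizes, history_sizes):
        model = send(build_messages(sys_t, hist_t, question))
        results.append({"system_tokens": sys_t, "history_tokens": hist_t, "model": model})
    return results


def dry_run_send(messages):
    """Stand-in sender: makes no network call and returns a placeholder label."""
    return "not-called"


results = run_matrix(dry_run_send, system_sizes=[200, 2000], history_sizes=[0, 10000])
for row in results:
    print(row)
```

Replacing `dry_run_send` with a function that calls the router and returns the response's model name would turn this into a live experiment; repeating each cell several times would help separate context-size effects from run-to-run variation.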

This implementation has proven to be a highly effective solution for a common enterprise challenge.