Introduction

Latency, the time it takes for data to travel from one point to another in a network or system, is a critical factor in the performance and user experience of many applications and technologies. In this post, we’ll look at what latency is, the role it plays in testing, and strategies for reducing it to improve performance.

Understanding Latency

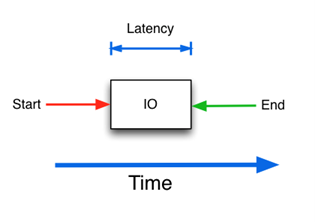

Latency is essentially a measure of delay. It’s the time it takes for a data packet, a signal, or any form of information to traverse a medium or network and reach its destination. In the context of computing and networking, latency can be caused by various factors, and it can significantly impact the overall performance and responsiveness of systems. Here are some common types of latency:

Pic courtesy: cisco.com

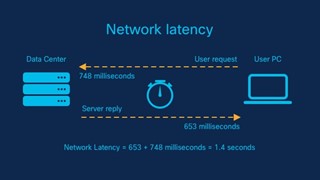

Network Latency

Network latency is perhaps the most familiar type of latency. It’s the delay that occurs when data packets travel across a network. Network latency can be influenced by several factors, including:

- Distance: The physical distance between the sender and receiver adds propagation delay. Signals in optical fiber travel at roughly two-thirds the speed of light in a vacuum, so even before any other delay is counted, crossing long distances, as in global networks, can add tens of milliseconds.

- Network Congestion: When multiple data packets compete for the same network resources, such as bandwidth, routers, or switches, congestion can occur. This congestion leads to delays as packets wait their turn to be processed.

- Network Infrastructure Quality: The quality and efficiency of the network infrastructure, including cables, routers, and switches, can impact latency. Every router or switch on the path adds some processing time, so faster, well-maintained equipment typically results in lower latency.
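To put the distance factor in numbers, here is a minimal Python sketch that estimates one-way propagation delay over a fiber path. It assumes a velocity factor of about 0.68 (light in glass travels at roughly two-thirds of its vacuum speed) and a cable run equal to the given distance; real routes are longer than the straight-line distance, and queuing and processing delays come on top, so treat the result as a lower bound. The 5,600 km distance is a hypothetical example.

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458

# Assumed velocity factor for optical fiber: light in glass travels at
# roughly two-thirds of its vacuum speed (refractive index of about 1.47).
FIBER_VELOCITY_FACTOR = 0.68


def propagation_delay_ms(distance_km: float,
                         velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """One-way propagation delay in milliseconds over a cable of the given length."""
    speed_m_per_s = SPEED_OF_LIGHT_M_PER_S * velocity_factor
    return (distance_km * 1_000) / speed_m_per_s * 1_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time: there and back, ignoring every other delay."""
    return 2 * propagation_delay_ms(distance_km)


if __name__ == "__main__":
    # Hypothetical path length, roughly comparable to a transatlantic link.
    distance_km = 5_600
    print(f"one-way:    {propagation_delay_ms(distance_km):.1f} ms")
    print(f"round trip: {min_round_trip_ms(distance_km):.1f} ms")
```

For 5,600 km this works out to roughly 27.5 ms one way and about 55 ms round trip, before any congestion or processing delay is added.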

Storage Latency

Pic courtesy: Louwrentius.com

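Storage latency refers to the time it takes to read from or write to storage devices, such as hard drives (HDDs) or solid-state drives (SSDs). In HDDs it is dominated by mechanical delays: seek time (moving the read/write head to the right track) and rotational delay (waiting for the platter to spin the data under the head). SSDs have no moving parts, so their latency depends mainly on the controller, the flash memory, and the speed of data transfer.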
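The rotational part of HDD latency is easy to estimate: on average the platter must turn half a revolution before the requested sector reaches the head. The sketch below applies that rule. The 8.5 ms seek time is a hypothetical figure standing in for a value you would take from a drive's datasheet, and the model ignores caching and command queuing.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational delay: the time for half a revolution, in milliseconds."""
    ms_per_revolution = 60_000 / rpm  # 60,000 ms per minute / revolutions per minute
    return ms_per_revolution / 2


def estimated_random_access_ms(rpm: float, avg_seek_ms: float,
                               transfer_ms: float = 0.0) -> float:
    """Rough HDD random-access time: seek + average rotational delay + transfer."""
    return avg_seek_ms + avg_rotational_latency_ms(rpm) + transfer_ms


if __name__ == "__main__":
    for rpm in (5_400, 7_200, 15_000):
        print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms average rotational delay")
    # Hypothetical 8.5 ms average seek time for a 7,200 RPM drive.
    print(f"estimated access: {estimated_random_access_ms(7_200, 8.5):.2f} ms")
```

At 7,200 RPM one revolution takes about 8.33 ms, so the average rotational delay is about 4.17 ms; adding the hypothetical 8.5 ms seek gives roughly 12.7 ms per random access, which is why random I/O on HDDs is much slower than on SSDs.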

Application Latency

Application latency encompasses delays that occur within an application. This can include the time it takes to process requests, execute code, or retrieve data from a database. Application latency can vary widely depending on the complexity and efficiency of the application’s code.
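A first step in tracking down application latency is simply timing the code path in question. This minimal sketch wraps a function in a decorator that records each call's wall-clock duration with `time.perf_counter()`. The `timed` decorator and `handle_request` are hypothetical names; `handle_request` is a stand-in for real request handling.

```python
import functools
import time


def timed(func):
    """Decorator that records the wall-clock duration of each call in milliseconds."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Record the duration even if the call raises an exception.
            wrapper.durations_ms.append((time.perf_counter() - start) * 1_000)
    wrapper.durations_ms = []
    return wrapper


@timed
def handle_request(n: int) -> int:
    # Hypothetical stand-in for request processing: a small CPU-bound loop.
    return sum(i * i for i in range(n))


if __name__ == "__main__":
    for _ in range(5):
        handle_request(100_000)
    print([f"{d:.2f} ms" for d in handle_request.durations_ms])
```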

The Role of Latency in Testing

Latency plays a crucial role in testing, particularly in the context of evaluating the performance and reliability of systems and applications. Here’s how latency factors into testing:

1. Performance Testing

Performance testing aims to assess how a system or application performs under various conditions. Latency is a key metric in performance testing as it directly impacts the responsiveness of the system. Common types of performance testing include:

- Load Testing: This involves subjecting a system to its expected load and measuring its response times. Latency that climbs as load increases is a sign that some component is becoming a bottleneck.

- Stress Testing: Stress testing pushes a system beyond its limits to see how it behaves under extreme conditions. Latency is a critical factor to monitor during stress testing.

- Latency Testing: Specific tests can be designed to evaluate the impact of latency on system performance. This helps identify how delays affect user experience and system behavior.
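Performance tests usually report latency as percentiles rather than a single average, because a handful of slow requests can hide behind a healthy-looking mean. The sketch below uses the nearest-rank method to compute p50, p95, and p99 from a list of measured response times. The sample values are hypothetical, and other percentile definitions (for example, interpolating between ranks) give slightly different numbers.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(samples_ms: Sequence[float]) -> dict:
    """Latency summary of the kind commonly reported by load and stress tests."""
    return {
        "p50": percentile(samples_ms, 50),
        "p95": percentile(samples_ms, 95),
        "p99": percentile(samples_ms, 99),
        "max": max(samples_ms),
    }


if __name__ == "__main__":
    # Hypothetical response times in ms: mostly fast, with two slow outliers.
    samples = [12, 14, 13, 15, 11, 12, 13, 250, 14, 12,
               13, 16, 12, 11, 480, 13, 14, 12, 15, 13]
    print(f"mean = {sum(samples) / len(samples):.1f} ms")
    print(summarize(samples))
```

Here the two outliers pull the mean up to about 48 ms while p50 stays at 13 ms and p95 jumps to 250 ms, which is exactly the kind of tail a load or stress test is meant to expose.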

2. User Experience Testing

For applications that require real-time interactions, such as online gaming, video conferencing, or trading platforms, latency can directly impact the user experience. Testing latency in these scenarios is essential to ensure a smooth and responsive user interface.

3. Network Performance Testing

In networking, latency testing is vital to assess the quality of network connections. High latency can result in poor voice and video quality in communication applications and can hinder the performance of online services.

Measuring and Simulating Latency

To effectively test and manage latency, various tools and techniques are employed:

1. Ping

Ping is a simple command-line tool used to measure network latency. It sends ICMP echo request packets to a target host and reports the round-trip time for each reply. Ping is useful for quick latency assessments.
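Sending real ICMP echo requests from a program usually requires raw-socket privileges, so a common unprivileged alternative is to time a TCP handshake: `connect()` returns once the server's SYN-ACK arrives, which takes about one network round trip. The sketch below does that in Python. It approximates ping rather than replacing it, since it needs an open TCP port on the target, and firewalls may treat ICMP and TCP traffic differently. The function name `tcp_connect_rtt_ms` is hypothetical.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, count: int = 4,
                       timeout: float = 2.0) -> list[float]:
    """Time `count` TCP handshakes to host:port and return each duration in ms."""
    # Resolve the address once up front so DNS lookup time is not counted.
    family, socktype, proto, _, sockaddr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    results = []
    for _ in range(count):
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            start = time.perf_counter()
            sock.connect(sockaddr)  # returns after SYN -> SYN-ACK, roughly one round trip
            results.append((time.perf_counter() - start) * 1_000)
    return results
```

For example, `tcp_connect_rtt_ms("example.com", 443)` returns four handshake times in milliseconds, which can be summarized with `min()`, `max()`, and an average much as ping does.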

2. Load Generators

Load generators are tools that simulate user traffic to measure system response times under load. They help assess how latency affects user interactions and system performance when subjected to varying levels of traffic.
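Dedicated load generators handle ramp-up, reporting, and distributed traffic, but the core idea fits in a few lines. This minimal Python sketch fires a fixed number of calls from a pool of threads and records each call's latency. `run_load` and `fake_service` are hypothetical; `fake_service` models a backend that serves one request at a time, so adding concurrent callers makes requests queue and latency rise, mimicking a congested resource.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

_backend_lock = threading.Lock()


def fake_service() -> None:
    # Hypothetical service whose backend handles one request at a time:
    # concurrent callers queue on the lock, so observed latency grows with load.
    with _backend_lock:
        time.sleep(0.005)


def run_load(task: Callable[[], object], total_requests: int,
             concurrency: int) -> list[float]:
    """Issue total_requests calls to task from `concurrency` threads; return latencies in ms."""
    def timed_call(_: int) -> float:
        start = time.perf_counter()
        task()
        return (time.perf_counter() - start) * 1_000

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed_call, range(total_requests)))


if __name__ == "__main__":
    for workers in (1, 4, 16):
        latencies = run_load(fake_service, total_requests=64, concurrency=workers)
        mean = sum(latencies) / len(latencies)
        print(f"{workers:>2} concurrent users: mean latency {mean:.1f} ms")
```

With one worker each call should take about 5 ms; with more workers the mean climbs because callers wait for the lock, the same queuing effect described under network congestion.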

3. Latency Simulation Tools

Some tools are designed to artificially introduce latency into a system. These tools help assess how a system behaves under adverse conditions, such as high network latency or slow storage access. They are valuable for identifying performance bottlenecks.
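At the network level, the Linux `tc` command with the netem queueing discipline is one real option for adding delay to an interface. Inside an application, a lightweight alternative is to wrap a dependency so every call is delayed. The decorator below is a minimal sketch of that idea: it adds a fixed base delay plus uniform random jitter. `inject_latency` and `fetch_profile` are hypothetical names used only for illustration.

```python
import functools
import random
import time


def inject_latency(base_ms: float, jitter_ms: float = 0.0, seed=None):
    """Decorator factory: delay each call by base_ms plus uniform jitter in [0, jitter_ms]."""
    rng = random.Random(seed)  # seeded for reproducible test runs

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            delay_ms = base_ms + rng.uniform(0, jitter_ms)
            time.sleep(delay_ms / 1_000)  # simulate a slow network hop or disk
            return func(*args, **kwargs)
        return wrapper
    return decorator


@inject_latency(base_ms=50, jitter_ms=20, seed=42)
def fetch_profile(user_id: int) -> dict:
    # Hypothetical dependency; in a real test this would call a service or database.
    return {"id": user_id}


if __name__ == "__main__":
    start = time.perf_counter()
    profile = fetch_profile(7)
    print(profile, f"took {(time.perf_counter() - start) * 1_000:.0f} ms")
```

Because the delay is configurable, the same test can be rerun at several latency levels to find where timeouts or user-visible slowdowns begin.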

4. Profiling and Monitoring Tools

Profiling and monitoring tools help identify and analyze latency bottlenecks in applications or systems. They provide insights into where delays are occurring, enabling developers to make targeted optimizations.
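Python ships with a deterministic profiler, `cProfile`, whose results can be sorted with `pstats`. The sketch below profiles a hypothetical request handler in which one helper, `slow_match`, performs a linear membership scan on a list; sorting by cumulative time shows which call dominates. The function names and data are illustrative assumptions, not part of any real application.

```python
import cProfile
import io
import pstats


def parse_ids(lines):
    # Cheap step: convert each text line to an integer.
    return [int(line) for line in lines]


def slow_match(ids, allowed):
    # Expensive step: `allowed` is a list, so each `in` check scans it linearly.
    return [i for i in ids if i in allowed]


def handle_request(lines, allowed):
    return slow_match(parse_ids(lines), allowed)


def profile_call(func, *args, top: int = 5) -> str:
    """Run func(*args) under cProfile and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


if __name__ == "__main__":
    lines = [str(i) for i in range(5_000)]
    allowed = list(range(0, 5_000, 3))
    print(profile_call(handle_request, lines, allowed))
```

In a run like this, `slow_match` should account for most of the cumulative time; converting `allowed` to a `set` is the kind of targeted fix such a profile points to.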

Impact on Different Applications

The impact of latency varies depending on the type of application or technology:

Low-Latency Applications

Low latency is crucial for real-time applications where responsiveness is paramount. Examples include:

- Online Gaming: In multiplayer games, high latency can lead to lag and disrupt gameplay.

- Video Conferencing: Low latency ensures smooth and synchronized video and audio communication.

- Financial Trading: In trading platforms, even milliseconds of delay can result in significant financial losses.

Tolerable Latency Applications

For some applications, higher latency may be tolerable, but it can still impact the user experience to some extent. These include:

- Web Browsing: While web browsing can tolerate some latency, excessively slow page load times can frustrate users.

- Email: Email applications can handle moderate latency without significant issues.

Reducing Latency

To optimize performance and provide a seamless user experience, various strategies are employed to reduce latency:

1. Edge Computing

Edge computing brings processing closer to the data source, reducing the need for data to travel over long distances. This approach is particularly beneficial for real-time applications.

2. Code and Infrastructure Optimization

Efficient coding practices and optimized infrastructure can help reduce application and network latency. This includes minimizing database queries, optimizing algorithms, and using high-performance hardware.
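As an illustration of minimizing database queries, the sketch below uses Python's built-in `sqlite3` module to fetch the same names in two ways: one query per id (often called the N+1 pattern) and a single query with a parameterized `IN (...)` list. The table, data, and function names are hypothetical. An in-memory SQLite database has no network hop, so the gap here is modest; against a client-server database, each avoided query also saves a network round trip.

```python
import sqlite3


def create_sample_db() -> sqlite3.Connection:
    # Hypothetical schema and data for illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)",
                     [(i, f"user{i}") for i in range(1, 1_001)])
    return conn


def names_one_query_each(conn, ids):
    # One query per id: the number of round trips grows with len(ids).
    names = []
    for user_id in ids:
        row = conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchone()
        if row is not None:
            names.append(row[0])
    return names


def names_single_query(conn, ids):
    # A single query for all ids; results are re-ordered to match the input.
    ids = list(ids)
    if not ids:
        return []
    placeholders = ",".join("?" * len(ids))
    rows = conn.execute(
        f"SELECT id, name FROM users WHERE id IN ({placeholders})", ids).fetchall()
    by_id = dict(rows)
    return [by_id[i] for i in ids if i in by_id]
```

Very long id lists can exceed SQLite's limit on bound parameters, so production code typically splits them into chunks.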

Conclusion

Whether you’re testing the performance of a system, troubleshooting network issues, or optimizing an application, latency will always be a key factor to consider. By measuring, simulating, and addressing latency effectively, you can ensure that your systems and applications provide the best possible user experience and performance.
