Amazon Elastic Container Service (ECS): A highly scalable, high-performance container management service that supports Docker containers and lets you run applications on a managed cluster of Amazon EC2 instances.

Cluster: An Amazon ECS cluster is a logical grouping of tasks or services. These tasks and services run on infrastructure that is registered to the cluster.

Fargate: The infrastructure capacity for a cluster can come from AWS Fargate, which is serverless infrastructure that AWS administers, from Amazon EC2 instances that you control, or from on-premises servers or virtual machines (VMs) that you manage remotely.

How to Deploy Tomcat App using AWS ECS Fargate with Load Balancer

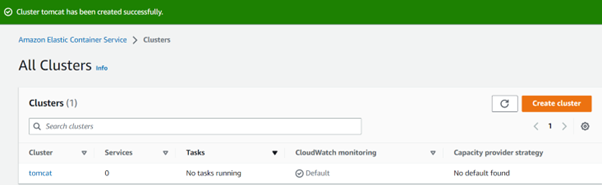

Let’s go to the Amazon Elastic Container Service dashboard and create a cluster with the cluster name “tomcat”.

The cluster is automatically configured for AWS Fargate (serverless) with two capacity providers. We can also add the ‘Amazon EC2 instances’ option, which suits large workloads with consistent resource demands, or the ‘External instances using ECS Anywhere’ option, which adds data center compute if needed.
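The same cluster could also be created programmatically. The sketch below only builds the request parameters for the ECS `CreateCluster` call. It assumes the two capacity providers are `FARGATE` and `FARGATE_SPOT`, and the helper name `build_cluster_request` is hypothetical.

```python
def build_cluster_request(cluster_name: str) -> dict:
    """Build keyword arguments for the ECS CreateCluster API call."""
    return {
        "clusterName": cluster_name,
        # Assumption: the two Fargate capacity providers attached to the cluster.
        "capacityProviders": ["FARGATE", "FARGATE_SPOT"],
    }


cluster_request = build_cluster_request("tomcat")
print(cluster_request)

# With boto3 installed and AWS credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("ecs").create_cluster(**cluster_request)
```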

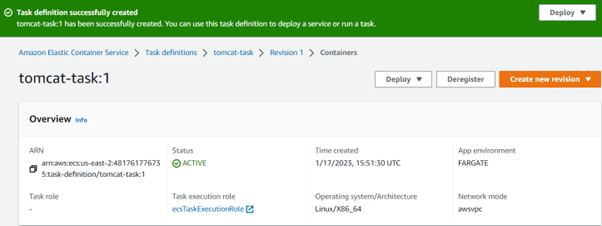

Now from the Amazon Elastic Container Service let’s create a new task definition:
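As a rough illustration of what a Fargate task definition for Tomcat might contain, here is a sketch that builds the parameters for the ECS `RegisterTaskDefinition` call. The family name `tomcat-task`, the image `tomcat:latest`, and the CPU and memory sizes are assumptions chosen for the example. Only port 8080 comes from this walkthrough.

```python
def build_task_definition(family: str, image: str, container_port: int,
                          cpu: str = "256", memory: str = "512") -> dict:
    """Build keyword arguments for the ECS RegisterTaskDefinition API call."""
    return {
        "family": family,
        # Fargate tasks must use the awsvpc network mode.
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        # Task-level CPU units and memory (MiB); assumed values for this sketch.
        "cpu": cpu,
        "memory": memory,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


# Hypothetical names: "tomcat-task" and "tomcat:latest" are not from the walkthrough.
task_definition = build_task_definition("tomcat-task", "tomcat:latest", 8080)
print(task_definition["containerDefinitions"][0]["portMappings"])

# With boto3: boto3.client("ecs").register_task_definition(**task_definition)
```

A real task definition often also needs an execution role (for example, to pull images from a private registry or to ship logs), which this sketch leaves out.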

Now, we will have to create a service to connect the task. Before that, let’s create a load balancer by performing the following steps.

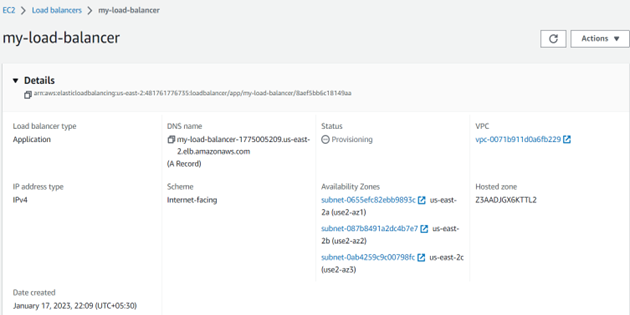

Step 1: On the EC2 dashboard, let’s go to Load Balancers and select ‘Application Load Balancer’ as the load balancer type, with the name “my-load-balancer”.

Step 2: For the Security Group, we can choose the ‘Create new security group’ option and name it “my-security-group-lb”.

Step 3: For the Target Group, we can choose the ‘Create target group’ option and name it “my-target-group-lb”.

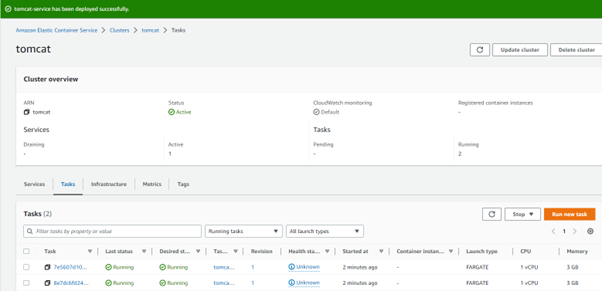
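The load balancer steps above can be sketched as request parameters for the Elastic Load Balancing v2 API (`CreateLoadBalancer`, `CreateTargetGroup`, `CreateListener`). The subnet, security group, and VPC IDs and the ARNs below are placeholders, the helper names are hypothetical, and the `ip` target type is an assumption based on Fargate tasks using the `awsvpc` network mode.

```python
def build_load_balancer_requests(subnet_ids: list, security_group_id: str,
                                 vpc_id: str) -> dict:
    """Build keyword arguments for CreateLoadBalancer and CreateTargetGroup."""
    return {
        "load_balancer": {
            "Name": "my-load-balancer",
            "Type": "application",
            "Scheme": "internet-facing",
            "Subnets": subnet_ids,
            "SecurityGroups": [security_group_id],
        },
        "target_group": {
            "Name": "my-target-group-lb",
            "Protocol": "HTTP",
            "Port": 8080,
            "VpcId": vpc_id,
            # Assumption: Fargate tasks are registered by IP address.
            "TargetType": "ip",
        },
    }


def build_listener_request(load_balancer_arn: str, target_group_arn: str) -> dict:
    """Build keyword arguments for CreateListener (HTTP on port 8080)."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "HTTP",
        "Port": 8080,
        "DefaultActions": [
            {"Type": "forward", "TargetGroupArn": target_group_arn}
        ],
    }


# Placeholder IDs and ARNs; real values come from your account and API responses.
lb_requests = build_load_balancer_requests(
    ["subnet-aaaa1111", "subnet-bbbb2222"], "sg-cccc3333", "vpc-dddd4444"
)
listener_request = build_listener_request("example-lb-arn", "example-tg-arn")
print(lb_requests["target_group"])
print(listener_request)

# With boto3, each dict could be passed to the matching method of
# boto3.client("elbv2"): create_load_balancer, create_target_group, create_listener.
```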

Step 4: From the Amazon Elastic Container Service dashboard, let’s select the ‘tomcat’ cluster and create a service with the capacity provider type Fargate and the service name “tomcat-service”.

Step 5: Desired tasks, which specifies the number of tasks to launch, can be set to 2.

Step 6: For Deployment options, ‘Min running tasks’, which specifies the minimum percentage of running tasks allowed during a service deployment, can be set to 2, and ‘Max running tasks’, which specifies the maximum percentage of running tasks allowed during a service deployment, can be set to 200.
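To make the two percentages concrete, the following sketch converts them into task counts for a given number of desired tasks. It assumes the rounding that ECS documents for these settings: the minimum is rounded up and the maximum is rounded down. The function name `deployment_task_bounds` is hypothetical.

```python
def deployment_task_bounds(desired_count: int, min_percent: int,
                           max_percent: int) -> tuple:
    """Return (minimum, maximum) running tasks allowed during a deployment."""
    # Ceiling division: the minimum percentage is rounded up to a whole task.
    min_running = -((-desired_count * min_percent) // 100)
    # Floor division: the maximum percentage is rounded down to a whole task.
    max_running = (desired_count * max_percent) // 100
    return min_running, max_running


# Values from the steps above: 2 desired tasks, min 2 percent, max 200 percent.
bounds = deployment_task_bounds(2, 2, 200)
print(bounds)  # (1, 4)
```

With these settings, a deployment may keep as few as 1 task running and start up to 4 tasks in total while old tasks are being replaced.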

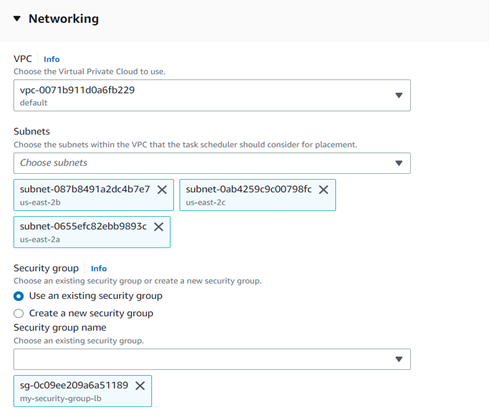

In terms of networking, we can use the default VPC with all the available subnets and the existing security group, i.e., “my-security-group-lb”.

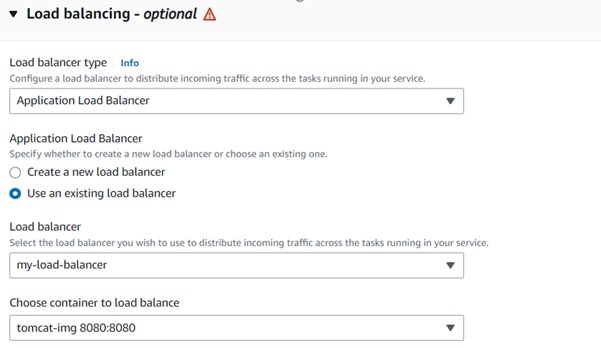

For the Load Balancer, we must select the existing Application Load Balancer and a container to load balance.

For the Listener option, we can use a listener with port 8080 and protocol HTTP, together with the existing target group named “my-target-group-lb”.
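Putting the service settings together, a sketch of the parameters for the ECS `CreateService` call might look like the following. The task definition and container name `tomcat-task`, the subnet and security group IDs, the target group ARN, and the `assignPublicIp` setting are assumptions for illustration. The cluster name, service name, task count, percentages, and port are taken from the steps above.

```python
def build_service_request(target_group_arn: str, subnet_ids: list,
                          security_group_id: str) -> dict:
    """Build keyword arguments for the ECS CreateService API call."""
    return {
        "cluster": "tomcat",
        "serviceName": "tomcat-service",
        # Hypothetical task definition family; use the one you registered.
        "taskDefinition": "tomcat-task",
        "desiredCount": 2,
        "capacityProviderStrategy": [
            {"capacityProvider": "FARGATE", "weight": 1}
        ],
        "deploymentConfiguration": {
            "minimumHealthyPercent": 2,
            "maximumPercent": 200,
        },
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnet_ids,
                "securityGroups": [security_group_id],
                # Assumption: tasks in public subnets need a public IP to pull images.
                "assignPublicIp": "ENABLED",
            }
        },
        "loadBalancers": [
            {
                "targetGroupArn": target_group_arn,
                # Must match the container name in the task definition.
                "containerName": "tomcat-task",
                "containerPort": 8080,
            }
        ],
    }


# Placeholder values; real IDs and ARNs come from your account.
service_request = build_service_request(
    "example-tg-arn", ["subnet-aaaa1111", "subnet-bbbb2222"], "sg-cccc3333"
)
print(service_request["loadBalancers"])

# With boto3: boto3.client("ecs").create_service(**service_request)
```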

Finally, we have 2 tasks running with active status, each with limited CPU and memory, and our traffic is routed to them through the load balancer, as seen below.

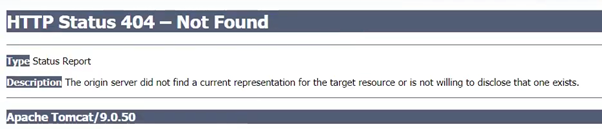

Now, if we copy the DNS name of the load balancer and open it in a browser on port 8080, we can see the application served through the load balancer.
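The address to open can be assembled from the load balancer's DNS name and the listener port. This is a small sketch; the DNS name shown is a made-up placeholder and the helper name `load_balancer_url` is hypothetical.

```python
from urllib.parse import urlunsplit


def load_balancer_url(dns_name: str, port: int = 8080) -> str:
    """Build the HTTP URL for the load balancer listener."""
    return urlunsplit(("http", f"{dns_name}:{port}", "/", "", ""))


# Placeholder DNS name; copy the real one from the load balancer details page.
url = load_balancer_url("my-load-balancer-1234567890.us-east-1.elb.amazonaws.com")
print(url)

# To check the endpoint from a script instead of a browser:
#   from urllib.request import urlopen
#   print(urlopen(url, timeout=10).status)
```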
