Google announced a major algorithm update, BERT, on October 25, 2019. The update will affect 10% of searches and make the search landscape better for everyone. How will it make search better, and what does this update mean for you? Watch as Eric Enge, Digital Marketing Manager at Perficient Digital, and Jessica Peck, Marketing Technology Associate at Perficient Digital, discuss what BERT is, how it will affect SEO, and what you can do to optimize for it.

Transcript

Eric: Hi, everybody. Eric Enge here from Perficient Digital. With me today is our resident exBERT, Jess. Wait a minute, Jess. Were you ever named BERT?

Jess: I would say I’m less an exBERT and more a BERT enthusiast.

Eric: Got it.

Jess: We’ve had some hands-on experience with BERT through our internal work, so I’m really excited to talk about it today.

Eric: Excellent. What’s interesting about today is that Google announced it’s going to apply BERT models to search. BERT models might be one of the biggest changes to the natural language processing landscape in the last few years. Google expects that applying BERT models to search, which it’s doing now, will affect about 10 percent of searches and make the search landscape better for everyone. But what exactly is BERT?

Jess: It’s not just a “Sesame Street” character, and I know I’m probably the three-thousandth person to make that joke. BERT stands for Bidirectional Encoder Representations from Transformers, which is a bit of a mouthful, but we’ll try to explain it here.

Eric: Here’s a brief natural language history lesson for you. Historically, natural language processing (NLP) has been a way for machines to process language more accurately, treating words as things rather than strings. But NLP had trouble differentiating words based on context, especially homonyms. For example, the difference between “the applicant was addressed kindly” and “he addressed the envelope” is obvious to you and me, but not to a machine. BERT changes that.

Jess: Yes. BERT is deeply bidirectional. This means BERT takes context information from both the left and the right side of a token, or word, during training. Traditional NLP models are unidirectional, so they take context either from before the word or from after it. In your “addressed” example, more traditional NLP algorithms would take into account either “the applicant” or “the envelope,” depending on the direction they were reading in. BERT also looks at both composition and parsing to understand sentences.
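To make the difference concrete, here is a toy Python sketch of which neighboring words a model can draw on when it represents “addressed” in the two example sentences. The helper name `visible_context` and the direction labels are hypothetical, chosen only for illustration; the sketch models context visibility, not BERT’s actual training.

```python
def visible_context(tokens, i, direction):
    """Return the tokens a model may condition on when representing tokens[i].

    Toy illustration only (hypothetical helper, not part of any library).
    direction: "left-to-right", "right-to-left", or "bidirectional".
    """
    if direction == "left-to-right":
        return tokens[:i]  # only the words already read
    if direction == "right-to-left":
        return tokens[i + 1:]  # only the words after the target
    if direction == "bidirectional":
        return tokens[:i] + tokens[i + 1:]  # both sides at once
    raise ValueError(f"unknown direction: {direction!r}")


sentences = [
    "the applicant was addressed kindly".split(),
    "he addressed the envelope".split(),
]

for tokens in sentences:
    i = tokens.index("addressed")
    for direction in ("left-to-right", "right-to-left", "bidirectional"):
        print(f"{direction:>14}: {visible_context(tokens, i, direction)}")
```

In the second sentence, a left-to-right reader sees only “he” when it reaches “addressed” and misses “the envelope,” the words that settle the meaning; the bidirectional view includes both sides.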

For that, it uses a transformer, which is a set of encoders chained together. Each encoder processes its input vectors to generate encodings, which contain information about which parts of the input are relevant to each other. This is all fairly architectural and technical, but it’s important for understanding exactly how cool BERT is. BERT has an attention mechanism that lets each token, or word, in an input sequence (sentences made of words) focus on any other token. This vastly improves how BERT handles both composition and parsing.
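A minimal sketch of the attention idea, assuming scaled dot-product self-attention as defined in the original Transformer paper. To keep it short, the query, key, and value vectors are simply the input vectors; real BERT encoders first apply learned projections and use several attention heads. The two-dimensional embeddings are made-up numbers for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention where Q = K = V = the input vectors.

    Returns (outputs, weights): weights[i][j] is how much position i attends
    to position j, for every j on both the left and the right of i.
    Simplification: no learned projections, single head.
    """
    d = len(vectors[0])
    scale = math.sqrt(d)
    outputs, weights = [], []
    for query in vectors:
        # Similarity of this token's query to every token's key.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted average of all value vectors.
        outputs.append(
            [sum(w[j] * vectors[j][c] for j in range(len(vectors))) for c in range(d)]
        )
    return outputs, weights


# Made-up 2-D embeddings, for illustration only.
tokens = ["he", "addressed", "the", "envelope"]
embeddings = [[0.9, 0.1], [0.5, 0.5], [0.1, 0.2], [0.2, 0.9]]

_, attn = self_attention(embeddings)
for tok, weight in zip(tokens, attn[tokens.index("addressed")]):
    print(f"addressed -> {tok}: {weight:.3f}")
```

Each row of weights is a probability distribution over every token in the sentence, on both sides of the word, which is what lets each token focus on any other token.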

Eric: So basically, BERT is the first deeply bidirectional, unsupervised language representation, pre-trained using only a plain text corpus such as Wikipedia.
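Pre-training on plain text works because the training labels come from the text itself. Below is a simplified Python sketch of the masked-language-model recipe described in the BERT paper: roughly 15% of tokens are selected as prediction targets, and of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. This sketch splits on whitespace and selects each token independently, whereas BERT works on WordPiece subword tokens; the function name `make_mlm_example` is hypothetical.

```python
import random

MASK = "[MASK]"


def make_mlm_example(tokens, vocab, mask_prob=0.15, rng=None):
    """Turn unlabeled text into a (masked input, targets) training pair.

    Hypothetical helper; a simplification of BERT's masking procedure.
    targets maps each selected position to its original token.
    """
    if rng is None:
        rng = random.Random()
    inputs = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction target at this position
        targets[i] = tok  # the label is the original word from the plain text
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)
        # else (remaining 10%): leave the original token in place
    return inputs, targets


text = "bert models consider the words that come before and after each word"
words = text.split()
masked, labels = make_mlm_example(words, vocab=words, rng=random.Random(42))
print(" ".join(masked))
print(labels)
```

To predict a masked word, the model has to use the words on both sides of it, which is how the bidirectional representation is learned without any hand-labeled data.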

Jess: Yes. So, why bring BERT into search now? It’s about intent, right? From the Google post: “BERT models can therefore consider the full context of a word by looking at the words that come before and after it, which is particularly useful for understanding the intent behind search queries.”

Eric: Right. Giving Google more context means that the words in more conversational, loosely structured searches will be given more meaning. For example, results for “2019 best wines exported from France to USA” will be more accurate because Google can take into account the “from” and the “to” in that particular search phrase. This will also help Google parse the intent of long-tail queries that contain homonyms. For example, “Jaguar car scoring” will be more likely to get you results about comparing cars rather than the latest from the Jaguars football team.
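Why do “from” and “to” matter? A toy Python comparison, assuming a simple keyword (bag-of-words) representation as the baseline: it cannot tell the two directions of the wine query apart, because it discards word order. This is not how Google ranks pages; it only illustrates the information an order-aware model like BERT, which adds a position embedding to every token, has available. The stopword list is a hypothetical minimal example.

```python
from collections import Counter

# Hypothetical, minimal stopword list for illustration only.
STOPWORDS = {"from", "to", "the", "a", "of"}


def bag_of_words(query, drop_stopwords=True):
    """Order-insensitive keyword counts, the way simple keyword matching sees a query."""
    words = query.lower().split()
    if drop_stopwords:
        words = [w for w in words if w not in STOPWORDS]
    return Counter(words)


def ordered_tokens(query):
    """Order-preserving token sequence: the direction of the query survives."""
    return query.lower().split()


q1 = "2019 best wines exported from France to USA"
q2 = "2019 best wines exported from USA to France"

print(bag_of_words(q1) == bag_of_words(q2))  # True
print(bag_of_words(q1, drop_stopwords=False) == bag_of_words(q2, drop_stopwords=False))  # True
print(ordered_tokens(q1) == ordered_tokens(q2))  # False
```

Even when “from” and “to” are kept, a bag of words cannot say which country is the source and which is the destination; only a representation that keeps word order can.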

Jess: Yes.

Eric: Basically, it comes back to search becoming less of a question and more of a conversation. Google doesn’t just want to scan strings and send back a best guess; it wants to make search a conversation between user and algorithm. BERT’s deeper understanding of long-tail queries really helps that effort.

Jess: Yes. I think it’s interesting to compare this to our DPA study. I would say this kind of tokenization was probably the biggest challenge among all the different digital assistants. The voice apps we’ve built in-house have relied a lot on drawing direct synonyms between different sentences and words and more traditional tokenization. It will be really interesting to see how BERT can be used for more conversational actions and skills in the future. So, what do you think is the effect on SEO?

Eric: User intent is about to get a lot more important. For example, sites might see pages drop out of the rankings for long-tail keywords where they previously ranked highly.

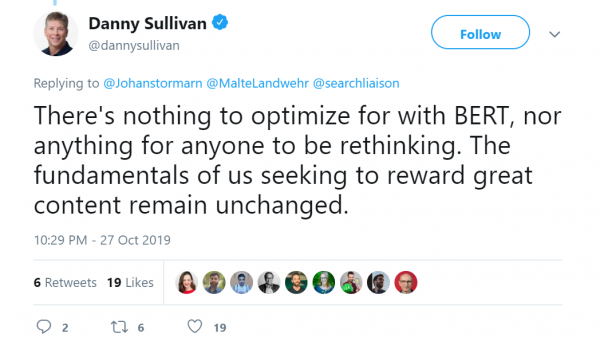

Jess: How would you say you can best optimize for BERT?

Eric: To quote John Mueller, “with awesomeness.” Optimizing for BERT isn’t necessarily the point, but experiments and advances in machine learning and NLP from Google show us that the search engine wants to align with users as much as possible. They want user happiness more than anything else. So, optimizing for BERT is optimizing for users.

Jess: One way to do that is to align your content more closely with what people are actually searching for.

Eric: Again, search is conversational now. If you suspect that BERT has knocked you out of a ranking for a query, your page probably isn’t answering the user’s question quite as well as some other pages. As Google improves its machine learning for language understanding, it’s going to be more and more about serving users comprehensive, conversational content, and doing it quickly.

Jess: Machines are getting better at understanding us. So, understanding them is getting us closer to understanding our users as well.

Eric: Exactly. Thank you, everybody.
