Kafka is a stable, distributed system for real-time communication and message delivery. An enterprise Kafka deployment usually needs several servers. For a proof of concept or other special circumstances, though, there is a simpler way to run Kafka. Below, I will show you how to install a Kafka cluster on Docker.

1. System requirements.

Before you start installing a Kafka cluster on Docker, you will need the following environment and software.

- Server: One PC or server with 8 GB of memory is enough.

- OS: Ubuntu 14.04 LTS or later (other Docker-compatible systems also work)

- Docker

- ZooKeeper: a 3-node ZooKeeper ensemble, running either on Docker or directly on a server.

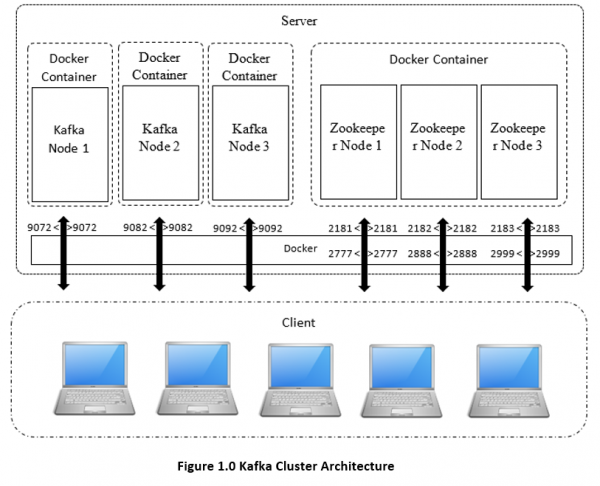

Tip: if you run ZooKeeper on Docker, make sure the ZooKeeper ports are mapped from the Docker container to the server.

2. Kafka cluster architecture.

As shown in Figure 1.0, I am going to set up a 3-node Kafka cluster on Docker. ZooKeeper will also be installed on Docker.

3. Kafka cluster configuration and installation.

First, I will show you how to install a Kafka node in a Docker container. The container has to be created with specific settings, most importantly the port mapping. This matters because clients outside the container can only reach the Kafka node through a mapped port. It is best to use the same port number inside and outside the Docker container.
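As a rough illustration of the port-mapping rule, the Python sketch below assembles a `docker run` command that publishes the broker port under the same number on the host and in the container. The container name `kafka-node-1`, the image `ubuntu:14.04`, the port `9092`, and the helper name `docker_run_command` are all assumptions made for this example, not the exact command used in this article.

```python
import shlex


def docker_run_command(name, port, image="ubuntu:14.04"):
    """Build a `docker run` argument list that publishes `port` on the same host port."""
    return [
        "docker", "run",
        "-d", "-it",               # start detached, with an interactive TTY kept open
        "--name", name,            # hypothetical container name
        "-p", f"{port}:{port}",    # host_port:container_port, kept identical
        image,                     # assumed base image
        "/bin/bash",
    ]


if __name__ == "__main__":
    # Print the command so it can be reviewed before running it by hand.
    print(shlex.join(docker_run_command("kafka-node-1", 9092)))
```

Printing the command instead of executing it keeps the sketch safe to run on a machine without Docker; paste the printed line into a shell once the values match your environment.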

Running the container creation command gives you a Docker container with an identifier, in this example ‘077493067ec7’.

After creating the empty Docker container, you need to install the JDK first (version 7 or later is required). There are two ways to set up the JDK in the Docker container. One way is to install it with the apt command. The other is to install it manually: download the JDK tar package from the official site, copy it into the Docker container, and set the environment variables for the JDK runtime.

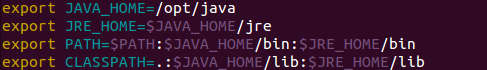

After uncompressing the JDK tar package into a local folder (/opt is recommended), you can configure the environment variables for the JDK. Before you start, I recommend installing vim in the Docker container, which makes editing files easier.

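Open the ~/.bashrc file, add the script that sets the JDK environment variables (typically JAVA_HOME and an updated PATH), and save the file.

Execute the script to activate the environment variables, for example by running source ~/.bashrc.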

After installing the JDK in the Docker container, you can start installing the Kafka node. First, copy the Kafka tar package into the Docker container and decompress it into a folder.

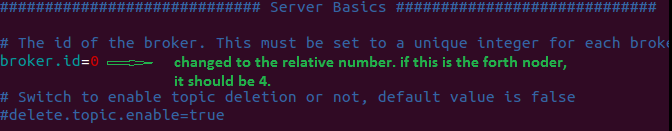

Open the uncompressed Kafka folder and edit the server.properties file under the config folder.

- Update the Kafka broker id.

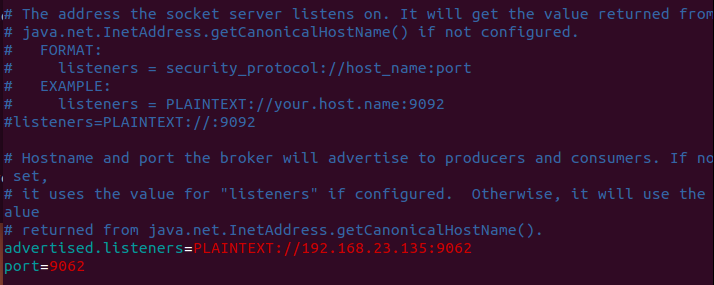

- Configure the port for the Kafka broker node.

Pay attention to the IP address and port. The port number must match the port that was mapped when the Docker container was created. The IP address used in advertised.listeners must be the host server's IP address, not the Docker container's IP address.

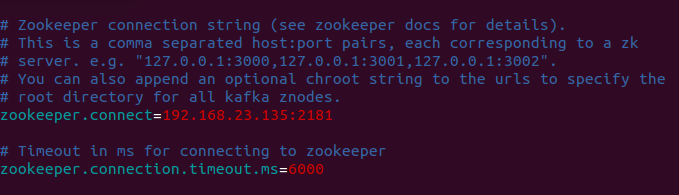

- Configure the ZooKeeper address for the Kafka broker node.
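The three settings listed above can be sketched as a small Python helper that renders the matching server.properties lines. The property names `broker.id`, `listeners`, `advertised.listeners`, and `zookeeper.connect` are standard Kafka broker settings; the helper name `broker_properties`, the IP address `192.168.1.10`, and the port numbers are hypothetical values chosen only for illustration.

```python
def broker_properties(broker_id, host_ip, port, zookeeper_nodes):
    """Return the server.properties lines discussed above for one broker."""
    settings = {
        "broker.id": str(broker_id),
        # Bind on all interfaces inside the container.
        "listeners": f"PLAINTEXT://0.0.0.0:{port}",
        # Address handed to clients: the host server IP, not the container IP.
        "advertised.listeners": f"PLAINTEXT://{host_ip}:{port}",
        # Comma-separated host:port list of the ZooKeeper nodes.
        "zookeeper.connect": ",".join(zookeeper_nodes),
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items())


if __name__ == "__main__":
    # Hypothetical host IP and ZooKeeper ports; replace with your own values.
    print(broker_properties(
        broker_id=1,
        host_ip="192.168.1.10",
        port=9092,
        zookeeper_nodes=["192.168.1.10:2181", "192.168.1.10:2182", "192.168.1.10:2183"],
    ))
```

The printed lines are meant to be compared against, or pasted into, the config/server.properties file of the node being configured.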

After saving the file, you can start the Kafka broker node, for example by running the bin/kafka-server-start.sh script with config/server.properties as its argument.
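Because outside clients reach the broker only through the mapped port, a quick TCP check from another machine can confirm that the mapping works once the broker is running. The sketch below is one minimal way to do that in Python; the host IP `192.168.1.10` and port `9092` are hypothetical, and `port_is_open` is a helper name invented for this example.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical host server IP and mapped broker port.
    print(port_is_open("192.168.1.10", 9092))
```

A successful connection only shows that the port is published and something is listening on it; it does not prove that the broker is healthy or that advertised.listeners is set correctly.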

To create a Kafka cluster on a local server with Docker, repeat the steps above for each additional node, giving every node its own broker id and its own mapped port. It should be easy to have your own Kafka cluster ready in a couple of hours.
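When the steps are repeated on a single host, each node needs a distinct broker id and a distinct host port. A small sketch of such a plan for three nodes follows; the naming scheme `kafka-node-N`, the starting port `9092`, and the helper name `plan_brokers` are assumptions for illustration.

```python
def plan_brokers(count, first_port=9092):
    """Give each broker a unique id and a unique host port."""
    return [
        {"name": f"kafka-node-{i}", "broker_id": i, "port": first_port + i - 1}
        for i in range(1, count + 1)
    ]


if __name__ == "__main__":
    # One line per node: container name, broker.id, and mapped port.
    for broker in plan_brokers(3):
        print(broker)
```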

Hey Iven, I appreciate your effort, but I would suggest you remove this article. It shows probably one of the worst ways to use Docker.

Thank you so much. Your article saved my idea 🙂

Thanks, Petar. I know that a Dockerfile and a Docker image would be a better way to use Docker. However, I just wanted to focus on the Kafka setup, because it needs some special settings. So I listed the steps in this naive way, which I find clearer and more reproducible. To be honest, I am not good at Docker. Can you give me some suggestions for learning Docker?