The Google Search Appliance (GSA) has historically required Connectors to index non-HTTP content repositories. A Connector is a program that sits between the GSA and a repository and pushes to the GSA everything it needs to index the repository (including the content, metadata, and security information). A Connector can use any technique to interrogate the repository (APIs, database queries, etc.), and it is wholly responsible for deciding what to send, and when.

But a new methodology is emerging. Google is transitioning from Connectors to Adaptors. You can read Google’s description of an Adaptor here. I’ll admit, I have read this a few times, and talked to Google engineers and my team members about it, but I didn’t immediately grasp the value. I am comfortable developing Connectors, so why change?

Last week I was speaking to a group of prospective GSA customers at Google HQ, and during the Q&A it clicked. Someone asked how Connectors keep track of new, updated, or deleted items to ensure the GSA stays in sync with a repository. I explained that our Connectors either rely on an API that streams those events to us, or maintain a snapshot of the repository’s current state and periodically compare it against the live repository to figure out what has changed.
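To make the snapshot approach concrete, here is a minimal Python sketch of the comparison step. It assumes a snapshot is simply a dictionary mapping each document ID to a version marker such as a last-modified timestamp; the function name `diff_snapshots` and the data shapes are my own illustration, not code from any Google Connector.

```python
from typing import Dict, List, Tuple


def diff_snapshots(previous: Dict[str, str],
                   current: Dict[str, str]) -> Tuple[List[str], List[str], List[str]]:
    """Compare two repository snapshots (hypothetical shape: doc ID -> version marker).

    Returns (added, updated, deleted) as sorted lists of document IDs.
    """
    # IDs present now but not in the last snapshot are new documents.
    added = sorted(current.keys() - previous.keys())
    # IDs that vanished since the last snapshot were deleted from the repository.
    deleted = sorted(previous.keys() - current.keys())
    # IDs present in both whose version marker changed were updated.
    updated = sorted(
        doc_id
        for doc_id in current.keys() & previous.keys()
        if current[doc_id] != previous[doc_id]
    )
    return added, updated, deleted
```

Everything this comparison finds, the Connector must then push to the GSA itself, and it has to store the new snapshot for the next run. That bookkeeping is the burden the rest of this post is about.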

I mentioned that this is a problem unique to Connectors. Google’s web spider (Googlebot) has crawled the web for almost two decades. It periodically revisits pages to check for changes, and if a page disappears, it removes that page from the search index. To be fair, that process can lag, and webmasters have been given tools to make it snappier, but from the site owner’s perspective it is very simple.

That’s when I had my light-bulb moment: Adaptors are the webification of non-HTTP content repositories. An Adaptor can make any content repository look and feel like a website, and the GSA’s crawler takes over most of the burden of maintaining state, checking for changes, and removing pages that are no longer in the repository.

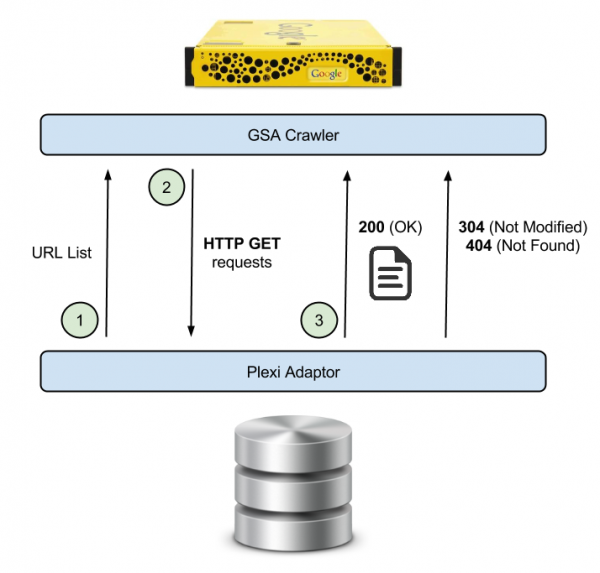

You can read through the Adaptor documentation to learn more about how this is accomplished, but the short version is that an Adaptor is a cap that sits on top of a content repository and exposes its content through standard HTTP conventions. The GSA makes GET requests to URLs exposed by the Adaptor. A 200 (OK) response returns the content to be indexed, including binary documents such as PDFs or Word files. Metadata and ACLs are conveyed through special HTTP headers in the response. A 404 (Not Found) response means the content is gone and should be removed from the index. The GSA crawler can also send If-Modified-Since request headers, giving the Adaptor the opportunity to return a 304 (Not Modified) response when the document has not changed.
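The request/response contract can be sketched as a single handler function. This is a hypothetical illustration in plain Python, not the Adaptor framework’s API: the `Document` and `Response` classes, the in-memory `repository` dictionary, and the `X-Example-Metadata` header name (with its `key=value` encoding) are all assumptions standing in for the real metadata and ACL headers described in Google’s documentation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Optional

# Hypothetical header name; the real Adaptor framework defines its own
# metadata and ACL headers.
METADATA_HEADER = "X-Example-Metadata"


@dataclass
class Document:
    content: bytes
    last_modified: datetime  # must be timezone-aware
    metadata: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    headers: Dict[str, str]
    body: bytes = b""


def handle_get(doc_id: str, repository: Dict[str, Document],
               if_modified_since: Optional[str] = None) -> Response:
    """Answer one crawler GET for one document using plain HTTP conventions."""
    doc = repository.get(doc_id)
    if doc is None:
        # Gone from the repository: the crawler will drop it from the index.
        return Response(404, {})

    # HTTP dates are UTC with one-second resolution, so compare at that precision.
    last_modified = doc.last_modified.astimezone(timezone.utc).replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}

    if if_modified_since is not None:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            since = None  # unparseable header: ignore it and send the full document
        if since is not None:
            if since.tzinfo is None:
                since = since.replace(tzinfo=timezone.utc)
            if last_modified <= since:
                # Unchanged since the crawler's last visit: no body needed.
                return Response(304, headers)

    # Hypothetical metadata encoding: sorted "key=value" pairs.
    headers[METADATA_HEADER] = ", ".join(
        f"{key}={value}" for key, value in sorted(doc.metadata.items()))
    return Response(200, headers, doc.content)
```

A real Adaptor would also emit ACL headers and stream large files, but the status-code logic is the heart of the design: the Adaptor answers questions about one document at a time, and the crawler decides when to ask.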

The idea is elegant and simple because it matches the web-crawling paradigm we have had for decades. And Adaptor performance can easily exceed that of a traditional Connector: Connectors are single-threaded pipes that push a serial stream of documents to the GSA, whereas Adaptors can take advantage of the full multi-threaded capability of the GSA’s web crawler, which can fetch many documents at a time.
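To illustrate why the pull model scales, here is a small sketch of a crawler fetching many Adaptor URLs concurrently with Python’s standard `concurrent.futures` thread pool. The `crawl` function, the `fetch` callable, and the `max_workers` default are placeholders of my own; this only illustrates the idea and makes no claim about how the GSA crawler is actually built.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def crawl(urls: Iterable[str], fetch: Callable[[str], int],
          max_workers: int = 8) -> Dict[str, int]:
    """Fetch every URL using a pool of worker threads.

    `fetch` takes a URL and returns the HTTP status it received; in a real
    crawler it would issue the GET request against the Adaptor.
    """
    urls = list(urls)  # materialize so the URLs can be zipped with the results
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() runs fetch() on several threads at once but yields results
        # in the same order as the input URLs.
        statuses = list(pool.map(fetch, urls))
    return dict(zip(urls, statuses))
```

Because each request is independent, adding workers raises throughput without any coordination between them, which a single serial push stream cannot do.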

Adaptors also have a few extra capabilities that address the lag typically associated with web spiders. An Adaptor can push to the GSA a list of URLs that need to be (re)crawled right away; the GSA moves those URLs to the top of its queue and processes them as quickly as possible. If an Adaptor notices that a document has been deleted from a repository, it can use this mechanism to have the GSA recrawl that URL immediately; the request yields a 404 (Not Found) response, and the document is removed from the index. Adaptors are not required to do this, though. The GSA crawler periodically revisits all URLs and will find the ones that return 404 in due time. Without any extra coding, Adaptors offer the same self-healing behavior we have come to expect from website crawling.
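The “move to the top of the queue” behavior can be modeled with a priority queue. In this hypothetical sketch, `CrawlQueue`, `push_recrawl`, and `apply_result` are illustrative names rather than GSA APIs, and the index is just a Python set of URLs: URLs an Adaptor pushes are served before routine revisits, and a 404 drops the URL from the index.

```python
import heapq
import itertools
from typing import List, Optional, Set, Tuple

URGENT, ROUTINE = 0, 1  # lower number is served first


class CrawlQueue:
    """Hypothetical crawl queue in which pushed URLs jump ahead of routine revisits."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        # Monotonic counter breaks ties so URLs of equal priority stay FIFO.
        self._counter = itertools.count()

    def schedule(self, url: str) -> None:
        """Queue a periodic revisit."""
        heapq.heappush(self._heap, (ROUTINE, next(self._counter), url))

    def push_recrawl(self, url: str) -> None:
        """Queue a URL that an Adaptor asked to have recrawled right away."""
        heapq.heappush(self._heap, (URGENT, next(self._counter), url))

    def next_url(self) -> Optional[str]:
        """Return the next URL to fetch, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


def apply_result(index: Set[str], url: str, status: int) -> None:
    """Drop a URL from the index when the Adaptor answers 404 (Not Found)."""
    if status == 404:
        index.discard(url)
```

This sketch does not deduplicate, so a URL that is both scheduled and pushed will be fetched twice; a real crawler would coalesce those entries.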