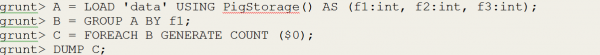

Much has been touted about Big Data being “schema-less,” thus minimizing data modeling, especially Physical Data Modeling. At a low level (e.g., the MapReduce level), there is some truth to this, as MapReduce works with key-value pairs: in the Map phase of a MapReduce job, the input data is read in and converted to key-value pairs. However, the higher-level tools and databases built on top of HDFS and MapReduce (the core of Hadoop), which we mere mortals who don’t care to write a Java program for every query will primarily use, all represent the data using a schema. For example, in Pig the first step is typically to define the columns and datatypes for the input data, as you can see from this simple query from the Apache website:

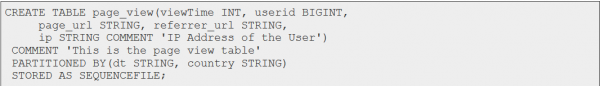
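
To make the contrast concrete, here is a minimal Python sketch (not Hadoop code) of the two views of the same kind of input: a Map step that only emits key-value pairs, and a schema-on-read step that names and types the columns in the spirit of a Pig `LOAD ... AS (...)` clause. The function names and the `name`/`age`/`gpa` schema are assumptions made up for illustration.

```python
from collections import defaultdict

def map_phase(lines):
    """Emit (word, 1) pairs; the mapper sees raw text and knows no schema."""
    for line in lines:
        for word in line.split():
            yield (word.lower(), 1)

def reduce_phase(pairs):
    """Sum the values per key, as a reducer would after the shuffle."""
    totals = defaultdict(int)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)

# Hypothetical schema: column names paired with the type used to parse them.
SCHEMA = [("name", str), ("age", int), ("gpa", float)]

def apply_schema(line, schema=SCHEMA, sep="\t"):
    """Turn one delimited line into a typed record using the declared schema."""
    fields = line.split(sep)
    return {name: cast(raw) for (name, cast), raw in zip(schema, fields)}

word_counts = reduce_phase(map_phase(["big data", "Big schema"]))
student = apply_schema("John\t18\t4.0")
```

The first half never needs to know what the data means; the second half cannot run until someone has decided what the columns are, which is exactly the modeling step the higher-level tools bring back.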

In Hive, you create tables – here’s an example again from the Apache website:

HBase is a wide column store and has column families, which are roughly equivalent to tables. The column names can be completely variable and the number of columns can vary by row, so you could have a table with billions of rows in which some rows have 5 columns and others have 5 million. With HBase you can’t do table joins, so it is definitely more “schema-less” in nature.
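
As a rough mental model only (this is not the HBase client API), a wide column store can be pictured as a map from row key to a map of `family:qualifier` columns, where each row carries only the columns it actually has. The `put` and `columns` helpers and the row and column names below are hypothetical.

```python
# Row key -> {"family:qualifier": value}; every row holds only its own columns.
table = {}

def put(table, row_key, family, qualifier, value):
    """Store one cell; a new column name needs no prior declaration."""
    table.setdefault(row_key, {})[f"{family}:{qualifier}"] = value

def columns(table, row_key):
    """List the column names present on a single row."""
    return sorted(table.get(row_key, {}))

# Two rows in the same column family with completely different columns.
put(table, "row1", "cf", "color", "red")
put(table, "row1", "cf", "size", "L")
put(table, "row2", "cf", "weight_kg", "2.5")

row1_columns = columns(table, "row1")
row2_columns = columns(table, "row2")
```

Nothing in the structure itself stops the two rows from sharing no columns at all, which is why agreeing on key design and naming conventions up front still matters.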

HCatalog is a recent addition to the Hadoop stack that serves as a metadata repository for all the data stored in Pig and Hive tables and HBase column families. It roughly equates to a relational database schema as expressed in the system catalog.

Even in the case of HBase, Data Modeling is still a valuable undertaking in order to design, rationalize, and communicate about data in Hadoop, even though database DDL would not be generated. (I’m not aware of any data modeling tools which can generate table/column family structures in Hadoop, and I’d be interested in hearing if this is on any modeling tool’s radar.) With HBase, the data needs to be 100% denormalized, so a logical star schema could be used, as it is easier for business people and data managers to comprehend, knowing that the star schema would, at the physical level, be converted into a single column family. Even if the names of columns are going to be wildly different, a data model would still be beneficial. For example, key columns can be identified, columns which would require indexing can be identified, and the granularity and relationships of the data can be understood.
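
The conversion from a logical star schema to one fully denormalized row can be sketched in a few lines of Python. This is an illustration under assumed names (the `denormalize` helper, the sales fact, and the product and store dimensions are all hypothetical), not a description of any tool's output.

```python
def denormalize(fact, dimensions):
    """Fold each dimension's attributes into the fact row, giving one wide row."""
    wide = {}
    for column, value in fact.items():
        wide[column] = value                # keep the fact column itself
        lookup = dimensions.get(column)     # is this column a dimension key?
        if lookup is not None:
            wide.update(lookup[value])      # copy that dimension's attributes in
    return wide

# Hypothetical dimensions, indexed by the fact column that references them.
dimensions = {
    "product_id": {10: {"product_name": "Widget", "category": "Tools"}},
    "store_id": {7: {"store_city": "Austin"}},
}

# One hypothetical fact row from the logical star schema.
fact = {"sale_id": 1, "product_id": 10, "store_id": 7, "amount": 19.99}

wide_row = denormalize(fact, dimensions)
```

The logical model still records which columns are keys and where each attribute came from, even though the physical row no longer shows it.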

In summary, “schema-less” doesn’t mean the data doesn’t have structure, and data models can still help us to design, rationalize, and communicate about that data.

Are you interested in learning more about Big Data and its applications in Healthcare? Join us May 30th at 12pm EST for the webinar “Using Big Data for improved Healthcare Operations and Analytics.”