It is clear that there is still a misunderstanding of what a “robots.txt” file is used for and what meta name="robots" is and does. So I thought I would break it down in layman's terms, hopefully helping you decide which to use and when.

Robots.txt

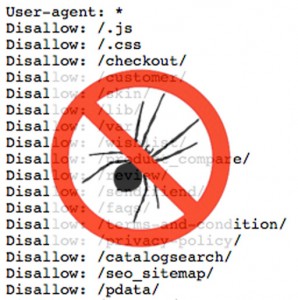

The robots.txt file is a simple text file that resides in the root directory of a website. Its purpose is to tell search engine crawlers which pages they may crawl (and so draw on for the search engine result pages, or SERPs) and which pages they should ignore. Google and Bing pay very close attention to this file because they do not want to surface content that webmasters have specifically asked them to stay out of.
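
Because crawlers only look for the file at the root of a host, the robots.txt that governs any page can be worked out from the page's URL alone (each host, scheme, and port gets its own file). A minimal sketch using only Python's standard library; the function name `robots_url` and the example URLs are hypothetical illustrations, not part of any crawler's API:

```python
from urllib.parse import urljoin


def robots_url(page_url: str) -> str:
    """Hypothetical helper: return the robots.txt URL that governs page_url.

    Joining with an absolute path ("/robots.txt") keeps the scheme, host,
    and port but drops the page's own path and query string.
    """
    return urljoin(page_url, "/robots.txt")


if __name__ == "__main__":
    for url in ["https://example.com/blog/post?id=7",
                "http://shop.example.com:8080/cart"]:
        print(url, "->", robots_url(url))
```

A robots.txt placed in a subdirectory (say, /blog/robots.txt) is simply never requested by this logic, which is why the file has to live at the root.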

Example: You are about to enter a building, and a sign on the front door reads, “Do not enter the first door on the right upon entry: this area is strictly forbidden.” Since you only want to reach the top of the building for a brilliant view and a quick shot with your camera, you respect the request. Google's spider does the same thing when it enters a site to crawl it.

Crawlers look for two critical files: sitemap.xml and robots.txt. The sitemap.xml file lists all or most of the pages on your site, along with each page's last-modified date, giving Google a quick path to the majority of the content you want visible to search users. Before running through the links in sitemap.xml, however, the crawler checks whether there is a robots.txt file and which pages (or rooms of your building) it is not allowed to enter.
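
To make the “list of pages plus last-modified dates” idea concrete, here is a minimal sketch that builds a sitemap.xml with Python's standard `xml.etree.ElementTree`. It follows the sitemaps.org `<urlset>`/`<url>`/`<loc>`/`<lastmod>` layout; the function name `build_sitemap` and the example URLs are hypothetical, and a real site would pull the page list from its CMS rather than hard-coding it.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Hypothetical helper: build sitemap.xml text from (url, last_modified) pairs.

    last_modified is a W3C date string such as "2014-03-01".
    ElementTree escapes characters like & in URLs, as the protocol requires.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2014-03-01"),
        ("https://example.com/products/", "2014-02-20"),
    ]))
```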

This file is extremely powerful, and search engines respect the directions given in it. However, many people make a few mistakes that often prove extremely costly.

To allow Googlebot and other search engine crawlers to crawl your entire site, a robots.txt file could contain either of the following:

User-agent: *
Allow: /

or

User-agent: *
Disallow:

This, in essence, tells all robots or crawlers that the whole building is fair game: crawl away and take any content you find back to your indices to share with your users.
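
You can check that both files really mean “crawl everything” with Python's standard `urllib.robotparser`. Treat this as a sketch: the stdlib parser is simpler than Google's own (it applies the first matching rule in the file rather than the most specific one), but for files this small the two should agree. `can_crawl` and the example URL are hypothetical names.

```python
from urllib.robotparser import RobotFileParser

ALLOW_WITH_ALLOW = """\
User-agent: *
Allow: /
"""

ALLOW_WITH_EMPTY_DISALLOW = """\
User-agent: *
Disallow:
"""


def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Hypothetical helper: ask the stdlib parser whether agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for name, rules in [("Allow: /", ALLOW_WITH_ALLOW),
                        ("Disallow: (empty)", ALLOW_WITH_EMPTY_DISALLOW)]:
        # An empty Disallow value blocks nothing, so both print True.
        print(name, can_crawl(rules, "https://example.com/any/page"))
```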

On the other hand, if someone includes:

User-agent: *
Disallow: /

This tells robots that you do not want them to enter the building at all. You are basically hanging a “no entry” sign on the front door with a “Trespassers will be prosecuted… private property” warning. So why would you ever use this? When developing a new site, most webmasters set up a pre-production environment for testing, for experiencing the site on the web, or for sharing the new design and functionality with a client before it goes live. Naturally, they do not want search engines viewing this test site or sharing it with the world yet, hence the “disallow everything” directive in its robots.txt.
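
Run through the same stdlib parser, the staging file blocks every path, because every URL path begins with “/”. A short sketch; `staging.example.com` and the sample paths are placeholders:

```python
from urllib.robotparser import RobotFileParser

# The "disallow everything" file typically used on a staging site.
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(STAGING_ROBOTS.splitlines())

# Every URL path starts with "/", so the single rule matches every page.
for path in ["/", "/products/", "/blog/launch-announcement"]:
    allowed = parser.can_fetch("Googlebot", "https://staging.example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```

Each path prints “blocked”, which is exactly what you want on staging and exactly what you do not want after launch.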

This causes problems for many site owners and developers because robots.txt is a simple text file in the root directory, not something dynamically managed by a content management system (CMS) or eCommerce platform. Developers often unknowingly push every pre-production file live, and that is when the staging robots.txt (Disallow: /) makes its way to the root of the new live site. I have seen this on numerous occasions: clients come running to us convinced that their domain has received a serious Google penalty and that we need to help them immediately, because they cannot find their new site in Google. As SEOs, each of us has developed a quick checklist to run through when analyzing files like these, to verify that nothing has been implemented incorrectly.
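
One item on such a checklist can be automated: before launch, confirm that the robots.txt headed for production does not block your most important pages. The sketch below assumes you already have the file's text; the crawler names, key paths, and the function `launch_blockers` are illustrative choices, not a standard tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical checklist inputs: crawlers you care about and pages that must stay crawlable.
CRAWLERS = ["Googlebot", "Bingbot"]
KEY_PATHS = ["/", "/products/", "/blog/"]


def launch_blockers(robots_txt: str, site: str) -> list[str]:
    """Hypothetical check: return a warning for every (crawler, key page) pair robots.txt blocks."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    warnings = []
    for agent in CRAWLERS:
        for path in KEY_PATHS:
            if not parser.can_fetch(agent, site + path):
                warnings.append(f"{agent} is blocked from {site}{path}")
    return warnings


if __name__ == "__main__":
    leftover_staging_file = "User-agent: *\nDisallow: /\n"
    for warning in launch_blockers(leftover_staging_file, "https://www.example.com"):
        print("WARNING:", warning)
```

An empty result means none of the listed pages is blocked; it says nothing about pages you did not list, so the key paths should reflect what actually matters on your site.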

Unfortunately, that is not the only way to block a search engine from serving up your content in the SERPs. You could also have the wrong value in the meta name="robots" tag in your page source.

Meta Name="Robots"

The meta robots tag in the <head> of your page's source code serves a similar purpose to the robots.txt file, with a few exceptions. Unlike robots.txt, this tag does not act as a “no entry” sign. Search engines can still crawl the page; you are instead telling them whether or not to index it. The exact values you use determine what they do with the page.

For example, if you wanted to tell Google that you did not want a page in its search results, you would simply add:

<meta name="robots" content="noindex" />

This tells Google: whatever you think of the content on this page (which obviously was not blocked in the robots.txt file, or the crawler could never have read this tag), do not show it in your SERPs. The difference between this route and robots.txt is that it is not a blockage: the crawler still fetches the page and simply leaves it out of the index, and Google and Bing obey noindex. The inbound-link caveat actually belongs to robots.txt: a URL that is disallowed there can still appear in the SERPs, typically without a description, if it has received significant inbound links, because the crawler never gets to read a noindex on the page.
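
The interplay between the two mechanisms can be sketched as a simplified model: a crawler checks robots.txt first and only reads the meta robots tag if it is allowed to fetch the page. This Python sketch uses the stdlib `urllib.robotparser` and `html.parser`; `MetaRobotsFinder`, `crawler_view`, and its return strings are hypothetical, and real search engines weigh many more signals before indexing a page.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsFinder(HTMLParser):
    """Hypothetical helper: collect directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def crawler_view(robots_txt: str, url: str, html: str, agent: str = "Googlebot") -> str:
    """Hypothetical, simplified model of what a crawler learns about one URL."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch(agent, url):
        # The page is never downloaded, so a noindex inside it is never seen.
        return "blocked: meta robots never read"
    finder = MetaRobotsFinder()
    finder.feed(html)
    if "noindex" in finder.directives:
        return "crawled, kept out of the index"
    return "crawled, eligible for the index"


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex" /></head>'
    print(crawler_view("User-agent: *\nDisallow:\n", "https://example.com/private", page))
    print(crawler_view("User-agent: *\nDisallow: /\n", "https://example.com/private", page))
```

The first call reports the page as crawled but kept out of the index; the second reports it as blocked, because the noindex is sitting on a page the crawler was told never to open.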

Two other values webmasters use in meta robots are “follow” and “nofollow.” What do these mean? They tell Google and Bing whether to pass authority or relevance through the links on the page. A common use is to stop “link juice” from flowing out of your site to spammers who try to exploit your comments section to plant exact-match anchor text links pointing at their sites. Adding “nofollow” to the page (or to the template code behind your comments) tells robots that you do not want them to pass any votes from this page to the links on it.

There are four combinations of these meta robots values:

<meta name="robots" content="index,follow">

<meta name="robots" content="noindex,follow">

<meta name="robots" content="index,nofollow">

<meta name="robots" content="noindex,nofollow">

“Index” refers to whether or not you want the robot to include your page in the search engine's index. “Follow” refers to whether or not you want the relevance, or “vote,” of the links on the page to pass to their destinations.
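
The four combinations boil down to two independent yes/no switches. A minimal sketch of how a crawler might read the content value, assuming comma-separated, case-insensitive tokens with index and follow as the defaults when a value is absent; `parse_meta_robots` is an illustrative name, not a library function.

```python
def parse_meta_robots(content: str) -> tuple[bool, bool]:
    """Hypothetical helper: turn a meta robots content value into (index, follow) flags.

    Absent values fall back to the defaults: index, follow.
    If both "index" and "noindex" appear, this sketch lets the restrictive one win.
    """
    tokens = {token.strip().lower() for token in content.split(",")}
    index = "noindex" not in tokens
    follow = "nofollow" not in tokens
    return index, follow


if __name__ == "__main__":
    for value in ["index,follow", "noindex,follow", "index,nofollow", "noindex,nofollow"]:
        index, follow = parse_meta_robots(value)
        print(f"{value:18} index={index} follow={follow}")
```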

Why would you ever use “noindex, follow”? You may have a resource that you want to keep out of public search results but have shared with a few people for the valuable links it contains. Here you are telling search engines not to list this page in the SERPs, but to pass the trust and relevance (“link juice”) of the page on to the pages it links to.

Other commonly used meta name="robots" values include:

<meta name="robots" content="noodp,noydir">

ODP and YDIR are both comprehensive site directories, and search engines often use the description of your site from these directories in place of the meta description on a page. The noodp and noydir values tell them not to.

ODP – Open Directory Project (www.dmoz.org)

YDIR – Yahoo Directory (dir.yahoo.com)