Dixon Jones is the Marketing Director of Majestic12 LTD, owners of a web-based technology used by the world’s leading SEOs to analyze how webpages on the internet connect between domains. It is the largest database of its kind that can be analyzed publicly in this way, with well over a trillion backlinks indexed.

Dixon Jones is also a founding director of an Internet Marketing company and has a decade of experience marketing online, primarily above the line, building Receptional up from a start-up in my front room to a reasonable size. From near the start, I did this with David to build a team of 15 Internet Marketing Consultants last time I counted and little sign of a slow down. The office has now become so tight that the landlord has agreed to build a considerable extension that would more than double our floor space. I don’t think he wants us to leave.

Dixon Jones’s other accolades (or chains, depending on your point of view) in the world of Internet Marketing include being a moderator on Webmasterworld, which most webmasters have heard of. If you haven’t, I guess you are not a webmaster. To be fair, nor am I these days. I am an Internet marketer, but I don’t know how an Internet marketer can really understand the nuances of the Internet Marketing world without at least some understanding of web servers and CMS systems.

Interview Transcript

Eric Enge: One of the landmark deals of the industry in 2009 and 2010 was the search agreement between Microsoft/Bing and Yahoo. I am sure one of the things that they consider to be a minor side effect was the announcement that this would result in Yahoo Site Explorer becoming obsolete. That leaves us with a situation where the SEO industry has lost its ability to analyze link structures and get access to link data, as links continue to play a huge role in rankings.

That means that other tools are required. I would say that Linkscape and Majestic-SEO are the two major contenders to benefit from everything that has happened. Can you tell us anything regarding how you go about collecting your data?

Dixon Jones: Majestic-SEO was born out of an attempt to build a distributed search engine. By distributed I mean that instead of getting a massive data center the size of Google’s to try and crawl everything on the web, what we did about four years ago was get people to contribute their unused CPU cycles to our crawling efforts. We now have more than 1,000 people who have downloaded a crawler onto their PCs. When they have spare bandwidth, the crawler crawls the web from their PCs and servers. We have been able to crawl incredibly quickly, but it took a couple of years to get the crawl right and optimized.

As we built it up, we started to crawl the web from hundreds of websites and machines every single day. We didn’t try and collect all of the data about the Internet, because we realized early on just how much memory that would require. What we started doing was looking at the links, not the internal links, but simply those links between domains and sub-domains. We are looking at the link data that we think people would find difficult to analyze any other way. For internal links, you can use something like Xenu’s Link Sleuth to analyze the link structure of a particular website.
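To make the internal versus external distinction concrete, here is a minimal sketch of how a crawler might keep only the links that cross from one host to another. This is an illustration only, not Majestic's code: the function names are hypothetical, and comparing whole hostnames is an assumed simplification of "links between domains and sub-domains".

```python
from urllib.parse import urljoin, urlsplit


def host_of(url: str) -> str:
    """Return the lower-cased hostname of a URL, or '' if it has none."""
    return (urlsplit(url).hostname or "").lower()


def external_links(page_url: str, hrefs: list[str]) -> list[str]:
    """Keep only links whose host differs from the host of the linking page.

    Hosts are compared as whole strings (an assumption), so a link from one
    sub-domain to another counts as external, while links that stay on the
    same host (internal links) are dropped.
    """
    source_host = host_of(page_url)
    kept = []
    for href in hrefs:
        target = urljoin(page_url, href)  # resolve relative links
        target_host = host_of(target)
        if target_host and target_host != source_host:
            kept.append(target)
    return kept
```

Relative links and `mailto:` links are dropped here because they either resolve to the same host or have no host at all.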

A few years ago, the only data available that was giving us any kind of backlink information was Yahoo Site Explorer. We think that we overtook Yahoo Site Explorer a couple of years ago in terms of volume of links indexed, and we are now at 1.8 trillion URLs as of May 2010.

So there is an awful lot of data that we have collected over that time, and with the way that we are giving it back to people, it’s really easy to go and analyze everything from the ground up. Even the links that have been deleted remain in our database, so deleted links begin to show up over time as well. Then, of course, we are recording the anchor text, whether it’s Nofollow, an image link or a redirect. We pretty much have all the data that we need, and we provide a web-based interface into the data that SEOs can use.
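As a rough illustration of the kind of per-link data described here, the sketch below pulls anchor text, NoFollow and image-link flags out of a page with Python's standard `html.parser`. The `LinkRecord` fields are a hypothetical shape chosen for this example, not Majestic's actual schema; the `redirect` and `deleted` flags cannot be read from the HTML itself and are only placeholders.

```python
from dataclasses import dataclass
from html.parser import HTMLParser


@dataclass
class LinkRecord:
    """Hypothetical record for one backlink (not Majestic's real schema)."""
    target_url: str
    anchor_text: str = ""
    nofollow: bool = False
    image_link: bool = False
    redirect: bool = False  # placeholder: would be set from the HTTP response
    deleted: bool = False   # placeholder: set when a recrawl no longer finds the link


class LinkExtractor(HTMLParser):
    """Collect a LinkRecord for every <a href=...> element on a page."""

    def __init__(self):
        super().__init__()
        self.records = []
        self._current = None  # the link being read while inside <a>...</a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            rel = (attrs.get("rel") or "").lower().split()
            self._current = LinkRecord(attrs["href"], nofollow="nofollow" in rel)
        elif tag == "img" and self._current is not None:
            self._current.image_link = True
            # Use the alt text as anchor text when the link wraps an image.
            self._current.anchor_text += attrs.get("alt") or ""

    def handle_data(self, data):
        if self._current is not None:
            self._current.anchor_text += data

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            # Collapse runs of whitespace in the collected anchor text.
            self._current.anchor_text = " ".join(self._current.anchor_text.split())
            self.records.append(self._current)
            self._current = None
```

Feeding a page's HTML to `LinkExtractor().feed(...)` leaves one record per link in `records`, which could then be filtered with something like the external-link test before storage.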

Eric Enge: What is a typical capacity of one of these computers?

Dixon Jones: I can actually show you part of this, an example taken from our crawlers on this specific day. Someone in Malaysia is our top crawler today; he has crawled 35 million URLs. Magnus has done 18 million, and you can see all the different people that are coming in. We have 113 people crawling at the moment, and 214 million URLs crawled today.

The green line shows how we have been crawling over time, and you can see that in 2007 we figured out how to crawl better. We found a fairly steep increase in the number of URLs that we were crawling in any given day. There is also a little competition going on between the people who are crawling. We have someone who has done close to 30 million URLs and 809 megabytes of data overall.

Different PCs are obviously going to do different levels of crawling. If you find me somewhere down in that list, I am right near the bottom with my little local broadband connection at home, but you know there are all sorts of people doing the crawling. There are some pretty hefty servers out there doing some of the crawling, and of course, we are doing some crawling directly as well, so it’s not all distributed. Nevertheless, the back of this beast was broken through distributed crawling, and it continues to be a major part of Majestic’s technology.

I think it’s probably best to go through how I use Majestic with my SEO hat on, and how we do it that way. There are different ways of using it and there are different people doing different things. On the Majestic-SEO homepage, there is a Flash video that gives a two-minute introduction on how to use the system, which I suggest that people watch.

It will require that you register, but it’s free. Some of the services do cost money to access, but it’s worth registering just to get access to some of these free tools. One of the first things that I’ll look at is the backlink history tool, which is just underneath the search box.

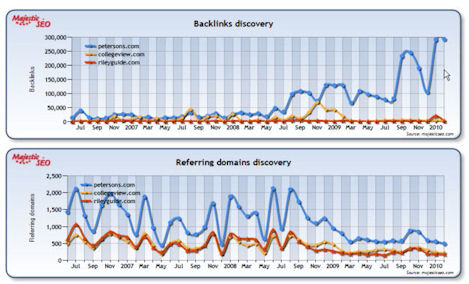

When an SEO receives a call from a client requesting links for their website, the SEO needs to very quickly ascertain whether the client’s website has any chance of getting to the top of a search engine. Once they are registered, one of these free tools allows SEOs to start comparing websites.

You can see the number of backlinks that we found each month, and then the number of referring domains that we found each month as well. The difference is quite extreme: as you can see, petersons.com has lots and lots of backlinks coming in, but when we actually look at the referring domains, there is a lot more parity between the three sites. Petersons.com is still collecting links from more domains than anybody else on this bottom graph, but it is on a similar sort of scale. When Petersons gets a site-wide link, that dramatically increases the backlink discovery rate. A site-wide link gives a huge number of links, but still from only one domain.
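The gap between the two graphs can be reproduced with a few lines of code. This is a minimal sketch, assuming a hypothetical list of source URLs and treating each distinct hostname as one referring domain, which is a simplification of however the real tool groups hosts.

```python
from collections import Counter
from urllib.parse import urlsplit


def summarize(backlink_source_urls):
    """Count total backlinks and distinct referring hosts.

    Each distinct hostname is treated as one referring domain
    (an assumed simplification for this example).
    """
    hosts = Counter(
        (urlsplit(url).hostname or "").lower() for url in backlink_source_urls
    )
    return {"backlinks": sum(hosts.values()), "referring_domains": len(hosts)}


# One site-wide link repeated on 500 pages of a single (made-up) site,
# plus three individual links from three other sites.
sitewide = [f"http://bigsite.example/page{i}" for i in range(500)]
individual = ["http://a.example/x", "http://b.example/y", "http://c.example/z"]
print(summarize(sitewide + individual))
```

The site-wide link adds 500 to the backlink total but only 1 to the referring-domain count, which is why the second number is the steadier barometer.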

Eric Enge: Right, of course, one of the things that is interesting about that is, what really is the added value of getting a whole pile of site-wide links?

Dixon Jones: I usually use this domain discovery as a barometer of how good a link-building campaign is because this top one could be extremely confusing. It’s not to say that those links don’t necessarily all produce some kind of benefit, but you would assume that with this kind of difference, Google is working hard to work out the difference in value between site-wide menu links and an individual link. It’s not to say that either one is valueless, but I imagine that they are both interpreted slightly differently by Google.

On the left-hand side, there is a cumulative graph that I like to look at. In the second graph, we can see how the links are being built up over time. We can see that Collegeview.com and Rileyguide.com are very, very closely matched on the number of domains that are linking to them. This screenshot here is a good, quick way of having a look at how far you have to go with a customer to try and get realistic rankings.

You are going to have to take a view on whether you are interested in getting backlinks from web pages or from websites, because those can mean two very different things.

We also have another tool that does the same sort of thing, but we can do about a hundred URLs at a time, and it’s still free. The bulk backlink checker link on the homepage will allow you to enter all the sites as a list. You can also get a CSV file, which is always useful as well. There is a list of at least 100 of them, and you very quickly get an idea of how many external backlinks and referring domains we see for each of these domains. Let’s use Hitwise, which lists the websites within a given industry, as an example. You can enter all of those sites and see the referring domains by industry segment, or you could use a DMOZ directory category, a Yahoo Directory category, or business.com, and that would give you some idea of all the sites in a particular vertical. You would very quickly be able to get an idea of a market using this bulk backlink checker.

I recommend that people register just so they can find all their own data for free. This way they won’t have to pay to get a full report on their own website. All they have to do is put up a verification file, or use Google Analytics or anything else that would verify to us that they own the site, and from there they can get a report of all the sites that they control. When they want to start seeing sites that they don’t control, that’s when a subscription becomes required, which costs about 10 dollars a month. I would say that you only have to be a small B-to-B business to afford it, and at that level you can start doing some reasonably serious analyses, so it’s not that big a cost.

On the homepage, one of the other things that you can do is set up all sorts of folders and things to help organize your reports.

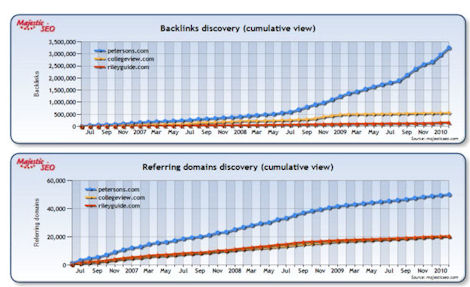

I have started by putting in one advanced report and one standard report. For the standard report, I analyzed this deep link:

One of the things that you can do with Majestic is analyze deep links rather than just domains. This is what we have with this particular deep link of education-portal.com/pages/Computer_Science_Academic_Scholarship.html. It comes out with an overview, which allows you to see the number of links coming into the domain itself, or to the subdomain, which is http://www.education-portal.com. What’s interesting to point out here is that the root domain has a lot more links coming into it than the www.

Eric Enge: That’s pretty rare.

Dixon Jones: Yes. It has lots of other subdomains, and there seemed to be an issue with some of the domains. With the advanced report, we can check backlinks to the domain itself.

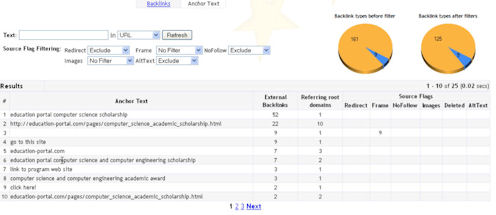

These are the main backlinks coming into the domain. What I’m going to do is take it right down to the URL. It obviously gives us fewer links, but I think this stands out because you can’t go to Yahoo very easily and find the backlink text to an internal URL. You can find some domains and things, but here we can start seeing the best links as ordered by AC Rank. AC Rank goes from 0 to 15 and it’s a very, very loose quality score, but it’s extremely transparent. It’s purely based on the number of links going into the page that is linking to your site.

It’s not trying to find the PageRank or the quality of the link in any highly developed metric. We found that our clients really want to make that judgment for themselves. At this stage, we do intend to improve AC Rank, and we will also likely come up with another quality metric. We do not intend to try and emulate PageRank in any way; we just haven’t sat down and looked at the way in which it was copyrighted. We want to come up with our own methodology for the quality of a page, but at this stage, we have a very vague but transparent quality score with AC Rank.

On the backlinks view for this URL, we can start seeing the source URLs, the best links. If you click on anchor text, then we can start seeing things sorted completely differently. You start seeing the anchor text coming into this deep page. You would expect to have a wide variety of anchor text and, of course, you would expect the URLs coming in there as well. Again, you can export this as a CSV file, which is very useful.

Another tool, that I have written myself, goes and pings every one of these links. I can put them all in one particular URL and go validate all of those links and check them. Majestic crawls an awful lot of links, but it’s optimized to collect data, collect new links; we are less focused on verifying those links are still there after time. We do flag where the links are, and when they disappear all of these links that we have marked as deleted will get filtered out.

Nevertheless, it may be that there are plenty of links in there that have been deleted but that we haven’t got back to recheck. So I go and set up another little routine, or you can do it manually, to go and visually check that these links still exist. At that stage, I can pull out some kind of quality score, whether it’s compete.com, PageRank, or any other metric that I want to use to judge the quality of the page and the incoming link as well. It’s not something that everyone can get their hands on, but it’s not a difficult script to do. We basically take all of these links in the CSV, then we plug them into another web-based system that we’ve built that just goes and physically checks whether the link still exists. Then we also can check for a quality metric on the website.
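The script itself is not shown in the interview, but a link checker of this kind could look roughly like the sketch below. It is an assumption-laden illustration using only Python's standard library: `link_present` and `check_live` are hypothetical names, and trailing slashes are ignored when comparing URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class _HrefCollector(HTMLParser):
    """Collect the href of every <a> element on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def link_present(html: str, source_url: str, target_url: str) -> bool:
    """Return True if the page's HTML still contains a link to target_url."""
    collector = _HrefCollector()
    collector.feed(html)
    wanted = target_url.rstrip("/")
    return any(
        urljoin(source_url, href).rstrip("/") == wanted
        for href in collector.hrefs
    )


def check_live(source_url: str, target_url: str, timeout: float = 10.0) -> bool:
    """Fetch source_url and report whether it still links to target_url.

    Any network or HTTP error is treated as "link not found".
    """
    try:
        with urlopen(source_url, timeout=timeout) as response:
            html = response.read().decode("utf-8", errors="replace")
    except OSError:  # URLError, HTTPError and socket timeouts are OSErrors
        return False
    return link_present(html, source_url, target_url)
```

In practice you would loop `check_live` over the source URLs in the exported CSV, ideally with a polite delay between requests, and then look up whatever quality metric you prefer for the links that survive.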

Going back to what we have here, you can very quickly start filtering out all the things that you don’t want. If an SEO decides that they are not really interested in NoFollow links, they can very quickly exclude them, and that changes the results. The number of links might go down, but now we are going to download a much more accurate representation of the links that are going to carry juice. You can then sort by anchor text or source URL. We are going to have a look at those, and now we can start seeing them in alphabetical order of URL and things like that as well, so you can sort them in any way that you want.

We have some easy filters here with this standard report. For example, say I am only interested in ones that have “computer” in the anchor text. If we refresh our data, we can see all the links that have the word “computer” in the anchor text, and you can export that as CSV. You will very quickly start seeing a lot of information about a deep URL, and if you start doing that with the whole domain, you get a lot more links as well. So please, if you compare us with Linkscape, make sure that you are comparing at the domain level as opposed to the URL level, because we have so much data here.
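The same kind of filtering can be repeated offline on an exported CSV. The sketch below is illustrative only: the column names `SourceURL`, `AnchorText` and `NoFollow` are assumptions made for this example, not the real export headers, so they would need adjusting to match an actual file.

```python
import csv
import io


def filter_backlinks(csv_file, anchor_contains="", include_nofollow=False):
    """Filter exported backlink rows and sort them by source URL.

    Column names (SourceURL, AnchorText, NoFollow) are hypothetical.
    """
    needle = anchor_contains.lower()
    rows = []
    for row in csv.DictReader(csv_file):
        if not include_nofollow and row["NoFollow"] == "1":
            continue  # drop NoFollow links unless asked to keep them
        if needle and needle not in row["AnchorText"].lower():
            continue  # keep only anchor text containing the search word
        rows.append(row)
    return sorted(rows, key=lambda r: r["SourceURL"])


# Made-up sample data standing in for a downloaded report.
SAMPLE = (
    "SourceURL,AnchorText,NoFollow\n"
    "http://b.example/1,Computer Science Scholarship,0\n"
    "http://a.example/2,computer degrees,0\n"
    "http://c.example/3,Computer forum,1\n"
    "http://d.example/4,click here,0\n"
)

for row in filter_backlinks(io.StringIO(SAMPLE), anchor_contains="computer"):
    print(row["SourceURL"], "|", row["AnchorText"])
```

With a real file you would pass `open("report.csv", newline="")` instead of the `io.StringIO` sample.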

If you were to export that into CSV, the CSV file that comes down is very easy to start manipulating and will allow people to see all the anchor text, external backlinks, root domains, and then flags as to whether they have redirections or no-follows on them. Of course, we have filtered all of those out from this section here, but if we did include those you’d have a much longer list. When you want to compare, you’ve got to make sure that you compare like with like.

It depends on the level of a customer’s subscription, but for something like the standard report, you would get at least 5,000 URLs. I think that Yahoo stops at 2,000, and we go up to 5,000. On higher levels, you can get 7,500. We have a lot more usually, but on the standard report, we limit it to the top URLs because it is a much, much easier thing for us to take as a subset of the main database. This way, it’s quicker for us to analyze, so it’s easier on our servers. For advanced reports, the amount that you can take depends on your usage level.

I can get 17 reports in this subscription this month, but if I tried to start getting Amazon’s backlinks, these would get extremely expensive because we have limits on the number of URLs within reports as well. This is why we like to do standard reports, because you can use these for sites like Amazon and eBay and get some good information without breaking the bank. If you really, really want to get all of the backlinks for eBay, that’s going to require quite a lot of effort.

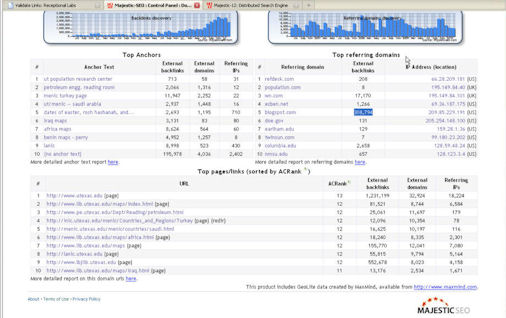

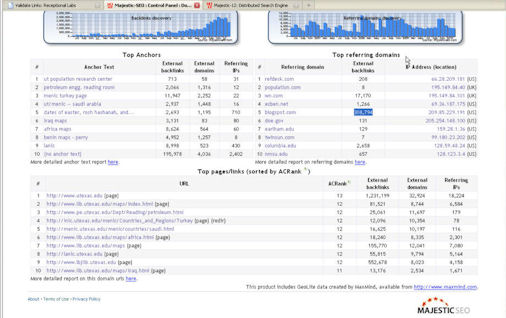

Here is a look at the advanced report for utexas.edu:

This is the default report that you get back for an advanced report, and this is for times when you really want to analyze in a lot more depth than you could with the standard report. This is laid out a bit differently, and the first thing I should talk about is that we have decided that SEOs probably don’t want a number of the links that we have. If I click on options, I can redefine the search parameters within this report, and it doesn’t cost more to do that; you just have to decide what you want. In this case, the default settings say that we don’t want any links that have NoFollow on them.

Perhaps, we also don’t want any that are deleted because they aren’t useful for current SEO purposes. We keep the deleted links in our database because the information is extremely useful and valuable to see where somebody has been buying links or had an alliance with another website that’s now gone to dust. We also take out the mentions as well.

Eric Enge: To clarify, a mention essentially is when the URL is there, but it’s not actually a link?

Dixon Jones: Right. Somebody may be referencing StoneTemple Consulting but they only put PerficientDigital.com without making it an anchor statement (a href= “”). They have the domain, but they didn’t include an actual link, so it’s a mention. They are useful because if somebody has mentioned a site, but didn’t link to it, you might want to phone them up and ask them to add the link to provide proper credit.
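The difference between a link and a mention can be checked mechanically. Below is a minimal sketch using Python's standard `html.parser`; the function name is hypothetical and the plain substring match on the domain is a deliberate simplification (it would also match a longer domain that merely ends with the same text).

```python
from html.parser import HTMLParser


class _TextAndHrefs(HTMLParser):
    """Collect link targets and visible text separately."""

    def __init__(self):
        super().__init__()
        self.hrefs = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href.lower())

    def handle_data(self, data):
        self.text_parts.append(data)


def classify_reference(html: str, domain: str) -> str:
    """Return 'link', 'mention' or 'none' for how a page refers to a domain."""
    parser = _TextAndHrefs()
    parser.feed(html)
    domain = domain.lower()
    if any(domain in href for href in parser.hrefs):
        return "link"     # the domain appears inside an <a href="...">
    if domain in " ".join(parser.text_parts).lower():
        return "mention"  # the domain appears only as plain text
    return "none"
```

Pages that come back as `"mention"` are the ones worth a phone call asking for a proper link.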

To refine the report, you could target specific URLs. This becomes a useful tool to start dicing the data because there is an awful lot of it. If I go back to the control panel, we can see the extent that the filtering rules have already trimmed the data. We started with 33,000,000 backlinks to utexas.edu and we’ve filtered out about 3,000,000 of them. That leaves 30,000,000 coming from 314,000 domains.

The backlinks total can be misleading, which is why I look at the referring domains as much as anything. By using the analysis option, which is the same as the options button, there are essentially three different reports that are created, to analyze the three things that people want to look at.

The first thing is the top anchor text that people are using to link to you. Second is the referring domains that are coming into them, and third is the top pages on your website based on the number of external links coming into them. The homepage is almost always the most important page.
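These three summaries are all simple counts over the same backlink list. As a rough sketch, assuming each backlink is available as a hypothetical `(source_url, anchor_text, target_url)` tuple, they could be computed like this:

```python
from collections import Counter
from urllib.parse import urlsplit


def top_reports(backlinks, n=3):
    """Build the three summaries from (source_url, anchor_text, target_url) tuples.

    The tuple layout is an assumption made for this example.
    """
    anchors, domains, pages = Counter(), Counter(), Counter()
    for source_url, anchor_text, target_url in backlinks:
        anchors[anchor_text.strip().lower()] += 1            # top anchor text
        domains[(urlsplit(source_url).hostname or "").lower()] += 1  # referring hosts
        pages[target_url] += 1                               # most-linked pages
    return {
        "top_anchor_text": anchors.most_common(n),
        "top_referring_domains": domains.most_common(n),
        "top_pages": pages.most_common(n),
    }
```

Anchor text is lower-cased before counting so that "Home" and "home" are treated as the same phrase; whether that is desirable depends on the analysis.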

If you take www.utexas.edu, you can see some of the sub-domains that people are using on these sites. They’ve got www.lib.utexas.edu.p.utexas.edu; it seems that utexas.edu has sub-domains for all of its department areas.

These sub-domains can each build links independently, whether on purpose or naturally. We can see that all these pages are bringing in links. Would you like to have a look at the anchor text, referring domain, or the strongest pages on utexas.edu?

Eric Enge: Let’s look at anchor text.

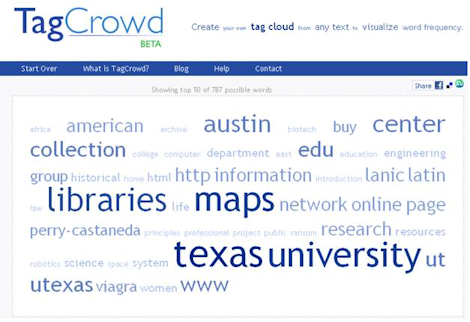

Dixon Jones: By clicking on the anchor text, more information is available and using a CSV file to extract this data is the most sensible tactic. You can either click explore by CSV or download all; explore by CSV takes the top 500, and download all takes everything.

With the anchor text, let’s take a look at Petroleum Eng Reading Room. There are 2,000 external backlinks here. A lot of these may be coming from other sub-domains within the utexas.edu site. We only record links from other domains, but a lot of these other domains can be sub-domains, I don’t know. Looking for the phrase Petroleum Engineering Reading Room, we can see where the links are coming from such as archive.wn, nag.com, meratime.com, electricity.com, energyproduction.com, globalwarm.com.

Then we can look at these links for Petroleum Engineering Reading Room, and see which are related sites that link through to utexas.edu. On this anchor text, we can start seeing the spread of any one anchor text specifically. We can see that Petroleum Engineering Reading Room has 1,300 domains but only 12 IP numbers. This is significant because although they are coming from a lot of domains, the majority are related because they come from the same IP number.
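A quick way to see this kind of clustering is to group the referring domains by the IP address they resolve to. The sketch below assumes you already have a hypothetical `domain -> IP` mapping (for example from the export, or from a DNS lookup such as `socket.gethostbyname`); the domains and addresses used are made-up documentation values.

```python
from collections import defaultdict


def group_by_ip(domain_to_ip):
    """Group referring domains by the IP address they are hosted on."""
    groups = defaultdict(set)
    for domain, ip in domain_to_ip.items():
        groups[ip].add(domain)
    return dict(groups)


def spread(domain_to_ip):
    """Summarize how many domains sit on how many distinct IPs."""
    groups = group_by_ip(domain_to_ip)
    return {"domains": len(domain_to_ip), "ips": len(groups)}


# Made-up example: four domains that share only two IP addresses.
example = {
    "energy-news.example": "192.0.2.10",
    "power-news.example": "192.0.2.10",
    "oil-news.example": "192.0.2.10",
    "independent.example": "198.51.100.7",
}
print(spread(example))
```

A large number of domains on a small number of IPs, as with 1,300 domains on 12 IPs, suggests the links come from a handful of related networks rather than from independent sites.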

Eric Enge: Right. Multiple sub-domains are counted separately.

Dixon Jones: Although these look like different URLs, they are completely different domains. By exporting and opening the CSV, we see that these aren’t sub-domains of utexas.edu; these actually are different web domains. That’s even better information really.

The major takeaway with this advanced report is that you can customize it with what you want to see and how much you want. If you really wanted to bring down 36,000,000 backlinks into a CSV file, if your software could handle it, you could analyze all those in spreadsheets. That’s Majestic SEO for you.

Eric Enge: Thank you Dixon!

Dixon Jones: Thanks Eric!