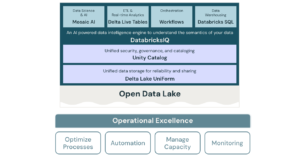

I have written about the importance of migrating to Unity Catalog as an essential component of your Data Management Platform. Any migration exercise implies movement from a current to a future state. A migration from the Hive Metastore to Unity Catalog will require planning around workspaces, catalogs and user access. This is also an opportunity to realign some of your current practices that may be less than optimal with newer, better practices. In fact, some of these improvements might be easier to fund than a straight governance play. One comprehensive model to use for guidance is the Databricks well-architected lakehouse framework. I have discussed the seven pillars of the well-architected lakehouse framework in general and now I want to focus on operational excellence.

Operational Excellence

I like Ops. DevOps. MLOps. DevSecGovOps. I am a fan of statistical process control in general and the Theory of Constraints in particular.

I like Ops. DevOps. MLOps. DevSecGovOps. I am a fan of statistical process control in general and the Theory of Constraints in particular.

“Any improvements made anywhere besides the bottleneck are an illusion.”

I prefer the following order of operations to implement ops. Step one, introduce monitoring. Nothing means anything without measurement. Step two, introduce capacity limits and quotas for each service to define boundaries. Step three, automate everything. Step four, break bottlenecks. There will never, ever be a step five.

Monitoring

Monitoring without alerting is not particularly helpful. Alerting without a chain of responsibility is also not particularly helpful. Enabling Unity Catalog gives your data the opportunity to granularly tag and manage your data platform. I recommend to evaluate your monitoring platform with the ultimate goal of self-service data. Monitor your lakehouse. Monitor your SQL Warehouse. Monitor your jobs. Thinking through this process, from what metrics you will monitor to who will be alerted to the responses you expect may infoirm how you set up your catalogs, workspace and tagging in Unity Catalog. Monitoring is not the only data point in setting up Unity Catalog, but I think its more important than many IT shops do before we start the migration.

Capacity Limits and Quotas

This is a real wake-up call in my opinion. Remember how we discussed cost optimization? Capacity limits and qotas are more about aligning costs with business value. The closer you can align your monitoring with distinct units of business values depending on your corporate environment. Is it business units? Lines of business? Unity Catalog resource quotas can change your perspective from a simple lift-and-shift from the Hive Metastore to a migration informed by practical business value. Attaching capacity limits and quotas on resources will drive this kind of thinking.

Automation

Terraform. Databricks Jobs. The tools don’t matter as much as just a commitment to Infrastructure-as-Code and Configuration-as-Code attitude. Just automate shell scripts with the Databricks CLI; its better than using the UI. I actually don’t want to spend much time on this bvecause I don’t want anyone to get bogged down in tool-wars.

Bottlenecks

Bottleneck identification becomes very easy when you have capacity limits and quotas aligned with the business metrics that the executive team uses if you are communicating clearly. You’re bottlenecks will be communicated very loudly and very clearly. You’ll be pretty happy you put in the automation work to do A/B testing and get feedback from your monitoring. Your team will get very good at different cost optimization techniques.

Conclusion

This topic isn’t technically difficult but its a heavy political lift. When I talk about operational excellence, people expect a deep dive into a tools comparison. That isn’t the difficult part in my opinion. Automate with Terraform. Monitoring isn’t even that hard; use the monitoring tools provided by Databricks and your cloud provider. The challenging part is taking advantage of your Unity Catalog migration to more closely align the business, technology and executive teams around your data, machine learning and artifical intelligence assets. On its face, this is the goals of governance. Data scientists, analysts and engineers need to be able to securely discover, access and collaborate on trusted data and AI assets to unlock the full potential of the lakehouse environment. In theory, this is everyone’s responsibility. In practice, it all falls to IT. Operational excellence is table stakes.