In this blog post I demonstrate how to import Oracle Database schemas from dump files into Autonomous Data Warehouse (ADW). In this post, I use SQL Developer to run the Data Import Wizard.

Quick links to other posts in this series:

- Export Data into Dump Files

- Option 1: Export DB Schemas Using SQL Developer

- Option 2: Export DB Schemas Using Data Pump Command

- Transfer Data Dump Files over to Oracle Cloud Object Storage

- Import Data Dump Files into ADW Using SQL Developer (this post)

A couple of pre-requisites before we run the SQL Developer Data Pump Wizard:

- Dump files have already been transferred to Oracle Cloud Object Storage. Refer to this blog post on how to do this for large files that would time out in an internet browser.

- Create ADW Credentials to connect to the Object Store locker:

- Create an Authentication Token in Object Storage in order to be able to access Object Storage from ADW.

The Authentication Token is specific to an Oracle Cloud User. So in the Infrastructure Dashboard upper left menu, navigate to Identity and then Users.

Select the user. In my case it is: oracleidentitycloudservice/mazen.manasseh@perficient.com

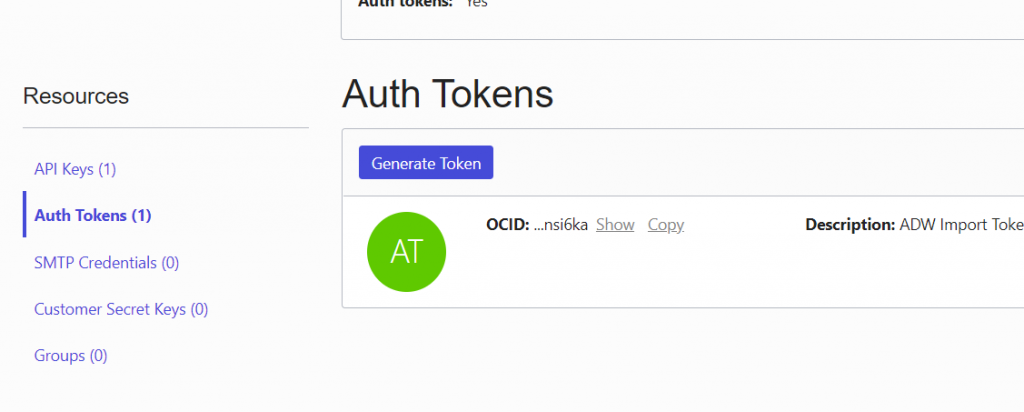

Under Resources, select Auth Tokens and click the Generate Token button.

Make sure to copy the generated token once it’s displayed because this won’t be accessible again. We will use this generated token as the Authentication token in the next step to create the ADW Credential to the Object Store.

- Run the following script against ADW to create the Credential to Object Store.

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => ‘ADW_CREDENTIAL’,

username => ‘oracleidentitycloudservice/mazen.manasseh@perficient.com’,

password => ‘<Enter the Authentication token here>’ );

END;

/

SQL Developer ADW Data Pump Import Wizard

In order to access the Data Pump Import Wizard in SQL Developer, first, display the DBA window from the View menu. Add the connection to the ADW database to import into. Then under that connection, navigate down to Data Pump -> Import Jobs. Right-click and select the Run Data Import Wizard.

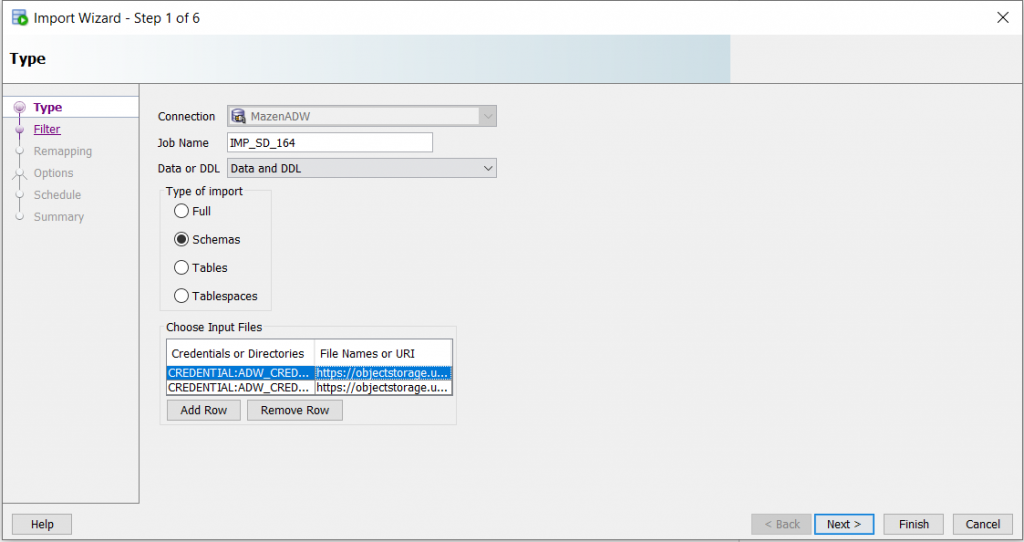

There are a few different types of data pump imports. In this example, I chose to do a Schema import. This allows me to merge several schemas from different source databases into the same target ADW.

We need to have the ADW Credentials created in ADW in order to access the Oracle Object Storage. This is the same as the second pre-requisite mentioned earlier in this blog.

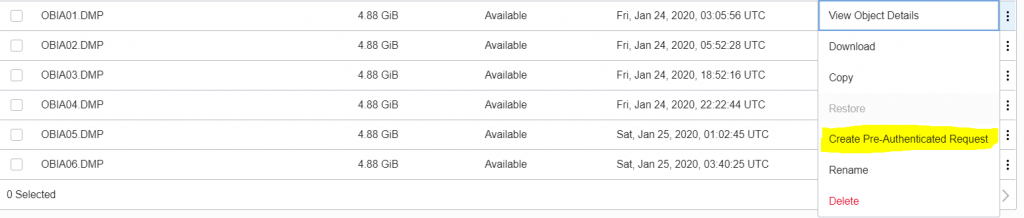

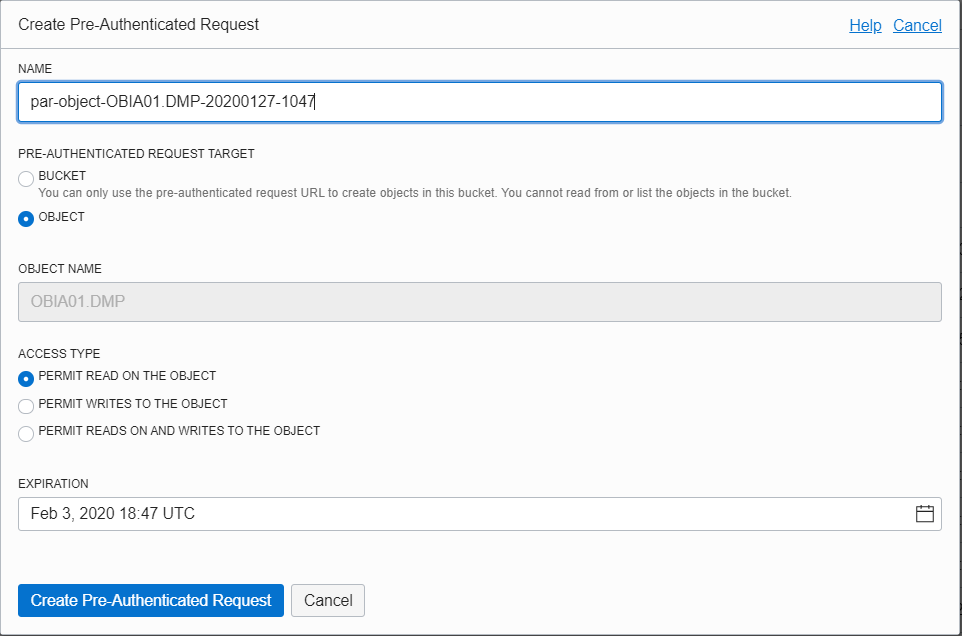

In addition, it is assumed that at this point, the DMP file(s) that will be imported into ADW are already available on Oracle Cloud Object Storage. In order for SQL Developer to access the DMP files from Object Storage, we need to create Pre-authenticated Requests for each file that will be used for the import. When we create a Pre-authenticated Request for an Object in Oracle Object Storage, we get the URI to the object that we can then use in the SQL Developer Import Wizard under “Choose Input Files”. To create a Pre-authenticated Request for an object, in this case a DMP file, navigate to Oracle Object Storage, and click on the bucket that contains the files. Next to the DMP file that will be imported, click on the menu and select: “Create Pre-authenticated Request”. Make sure to copy the URI generated so you can use it in SQL Developer. If you are importing from multiple DMP files simultaneously, repeat the create Pre-authenticated Request process for each DMP file and note down the URI for each of the DMP files.

Back to the SQL Developer Import Wizard, set the location of the DMP file(s) by selecting the Credential you created from the drop-down list and providing the pre-authenticated URI to the dump file(s). If the DB dump consists of multiple files, you will need to add a separate row for each of the dmp files under “Choose Input Files” and enter the corresponding pre-authenticated URI of each file.

Example of a pre-authenticated object URI:

https://objectstorage.us-ashburn-1.oraclecloud.com/p/P_62_yrQywjq94aCBcKxJ6OwyWTE/n/perficientbi/b/PerficientBucket/o/OBIA01.DMP

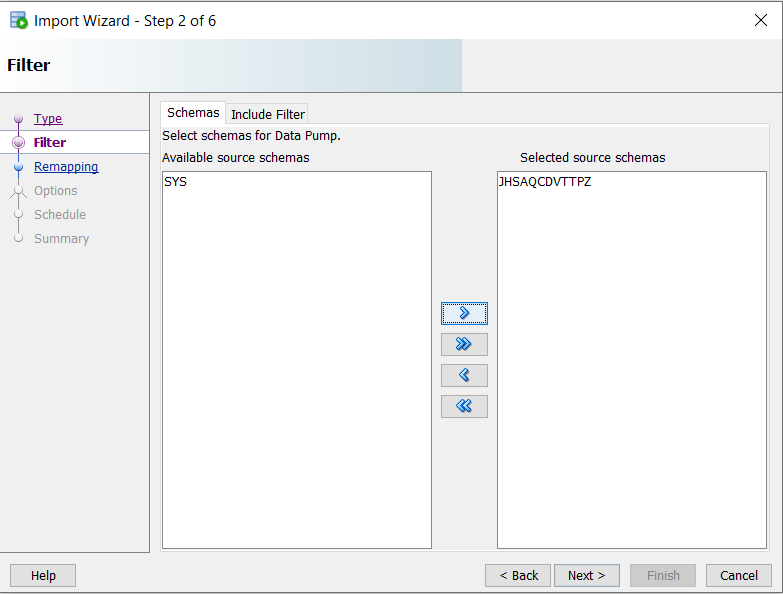

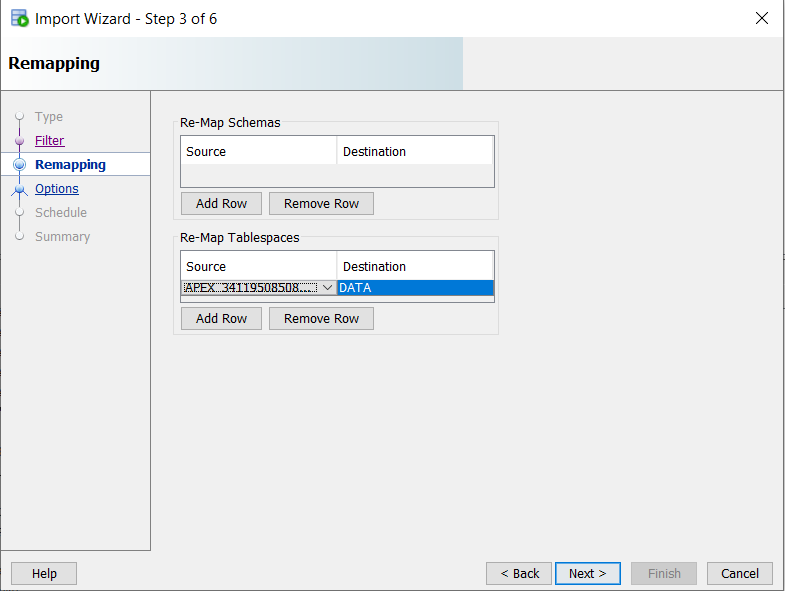

The credential connection is successful if you see the DMP schema(s) listed on the following screen. Select the source schema(s) that you would like to import into ADW.

In the following screen, I didn’t choose to re-map schema because I wanted the target schema name to be created in the same way as the source schema name. Note that it’s better not to create the schema manually before running the Import Wizard, and have the Import job create it. I mapped the source tablespace to the tablespace I want to use in ADW, and in this case, it’s called “DATA”.

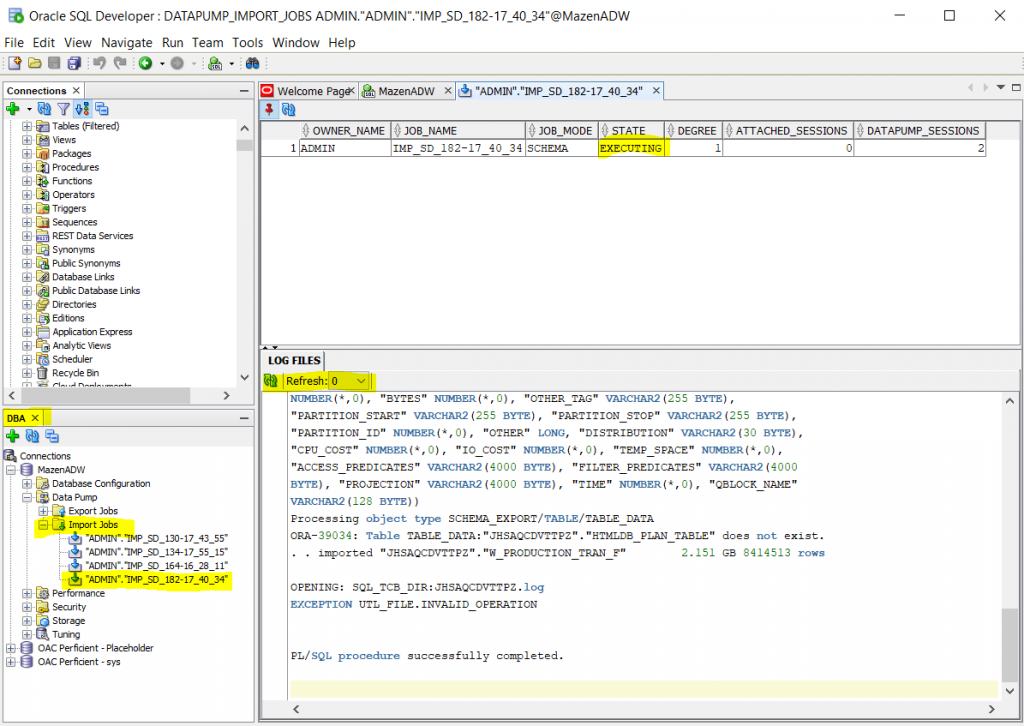

While the import job is running, we can easily monitor the log by clicking on the import job name from the DBA Import Jobs list. You can set a refresh interval on the Log File window and see any errors and which tables are being loaded with how much data.

The import job duration depends on how large the dump file(s) are. SQL Developer doesn’t have to remain open for the import to continue as the job is already executing on ADW itself and sourcing data from the Object Store.

Hi Mazen Manasseh,

Hope you are doing good and safe. Thank you so much for wonderful write. This really help me as beginner to understand the source data load to ADW database very briefly.Can I too have sample data to perform the test on my free ADW database? Or please suggest from where can get the same.

Thank you!

Thanks Raj. If you check out the schema called SSB on ADW, you will see a few sales tables with a large volume data set. This has sample data you can work with.

Hi Mazen Manasseh,

I trust you are dong good..

A million thanks for this great post. It is extremely helpful-

My use case is a bit different. I Have about 500 Dump files that should be imported to the ADW. These dumps are already available in an object store’S bucket.

My question is….. How can i orchestrate a bulk Data Pump import without having to create pre-Authenticate requests for each of the 500 dumpfiles Files.

something like using a wildcard MY_Dmp*:*

The pre-requisites are all in place i.e.

1) Dump Files already uploaded to the object store

2) ADW Credentials to connect to the Object Store

3) Authentication Token is created

Thanks for your response in Advance

You may want to look for a substitution variable to use as a filename suffix. For example, I know %U provides a 2-digit suffix. So if the filenames end with 01, 02, 03, etc. It reads them all. Ofcourse the pre-authenticated URI won’t work because each file has a different URI. So you want to try using object store credentials with the generic uri of the dump files (not the pre-authenticated ones).

Hello Mazen,

Thanks for such a succinct article.

Do you also have a post where you have used SQL Dev Web instead of locally installed SQL Dev?

The problem i am facing is that although i have gone 100% as per your article and other Oracle articles, i do not see my dump file (or for that matter any file), in the Bucket, when i go to Import the Dump using the Web interface.

Hi Mazen,

After selecting the credential directory and respected urls of each dump file getting an error like unable to process import, these dump files are encrypted.

How can I overcome this issue?

Is there any alternate way to decrypt the dump files in sqldeveloper?

can you please suggest a better approach?

I stored those dump files in an Azure storage container.

Thanks,

Anil.