This post is part of a series of blog posts on migrating schemas from an Oracle Database (Cloud DBaaS) to Autonomous Data Warehouse (ADW). It focuses on uploading the large Data Pump dump files from the DBaaS compute machine to Oracle Cloud Object Storage using the Swift REST interface.

Quick links to other posts in this series:

- Summary Blog Post
- Export Data into Dump Files
  - Option 1: Export DB Schemas Using SQL Developer
  - Option 2: Export DB Schemas Using Data Pump Command
- Transfer Data Dump Files over to Oracle Cloud Object Storage
  - Option 1: Swift REST Interface to Upload Files to Oracle Object Storage (this post)
  - Option 2: OCI CLI Utility to Upload Files to Oracle Object Storage
- Import Data Dump Files into ADW Using SQL Developer

The other post on this step uses the OCI CLI utility. This post looks at an alternative, the Swift REST interface, which is my preferred way to transfer files from Oracle Compute (for DBaaS) to Oracle Cloud Object Storage.

An alternative to installing, configuring and using the OCI CLI is to call the REST API with a Swift password (auth token). With a single curl command, we can upload files to Object Storage. On Windows this requires installing curl; on Oracle Cloud Compute Linux machines it is already available. This approach works well if you want to SSH into an Oracle DBaaS compute instance and upload the DB dump files directly to Cloud Object Storage, without first downloading the large DMP files to a local machine. Because the upload goes directly from Oracle Cloud Compute to Oracle Object Storage, it is much faster than running the OCI CLI from a local machine.

The trade-off is that, while this approach avoids the hassle of installing the CLI, more sophisticated file management (such as splitting large files and uploading the pieces in parallel) has to be scripted on top of REST, whereas the CLI utility handles it for you.

To do a file upload using REST:

- Create a Bucket on Oracle Object Storage

This bucket belongs to a compartment and holds the files that will be uploaded to Object Storage. When uploading the DB DMP files, you will specify the namespace and bucket name to upload into, so take note of both for later.

- Create an Authorization Token (also referred to as a Swift password) for your user, following the instructions under “To create an auth token”: https://docs.cloud.oracle.com/iaas/Content/Identity/Tasks/managingcredentials.htm#create_swift_password

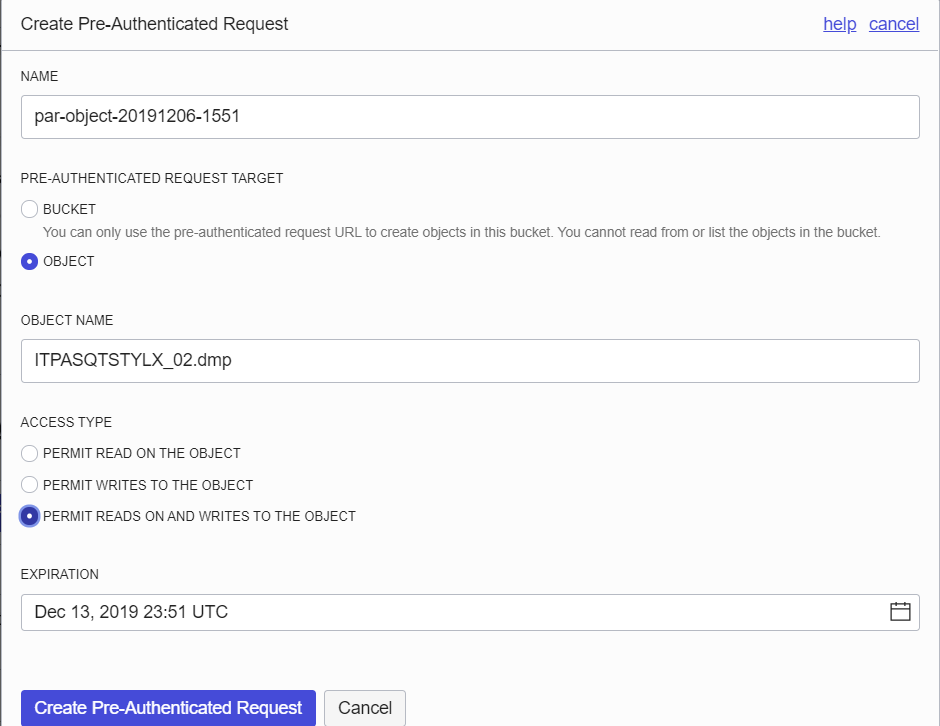

Take note of the token string to use in the curl command. The console displays it only once, so copy it before closing the dialog.

- Create a Pre-Authenticated Request on Object Storage.

Navigate to the Bucket created in step 1.

Click the Create Pre-Authenticated Request button.

Select Object and enter the name of the dump file as you want it to appear on Object Storage. (If you are uploading multiple files together, select Bucket as the target instead of Object.)

Select the access type that permits reads and writes, and click Create.

Take note of the pre-authenticated request URL. It is shown only once, and you will use it in the next step as the destination of the curl PUT command.

- Open a command line (for example, an SSH session on the DBaaS compute machine) and run the following curl command:

curl -v -X PUT -u '<user>:<token string from step 2>' --upload-file <local file location> <Pre-authenticated Request URL from step 3>

For example:

curl -v -X PUT -u 'mazen.manasseh@perficient.com:reFdjuFa)krQ6{<{q' --upload-file /u01/app/oracle/admin/ORCL/dpdump/from_onprem/ITPASQTSTYLX_02.dmp https://objectstorage.us-ashburn-1.oraclecloud.com/p/4sS3kULX33GK9kL0fOwvHYcdYYmHUKE/n/idjuyvnx1qqz/b/MazenADWComp/o/ITPASQTSTYLX_02.dmp
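If curl is not convenient, the same PUT can be scripted. The following is a minimal sketch using only the Python standard library, assuming an object-level pre-authenticated request URL like the one above; the function names `build_put_request` and `upload_via_par` are hypothetical. Because a PAR URL carries its own authorization, the sketch sends no user or token (the `-u` option is not needed with a PAR URL either). The file is passed as an open file object so it is streamed rather than read into memory.

```python
import os
import urllib.request


def build_put_request(local_path: str, par_url: str, body) -> urllib.request.Request:
    """Build (but do not send) a PUT that streams `body` to a pre-authenticated request URL."""
    return urllib.request.Request(
        par_url,
        data=body,  # an open binary file: http.client sends it in blocks, not all at once
        method="PUT",
        headers={
            # An explicit length avoids chunked transfer encoding for file bodies.
            "Content-Length": str(os.path.getsize(local_path)),
            # Without this, urllib would default to application/x-www-form-urlencoded.
            "Content-Type": "application/octet-stream",
        },
    )


def upload_via_par(local_path: str, par_url: str, timeout: float = 3600.0) -> int:
    """Upload one file to an object-level PAR URL and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses, for example when
    the file exceeds the single-upload size limit.
    `timeout` applies to each blocking socket operation, not to the whole upload.
    """
    with open(local_path, "rb") as body:
        req = build_put_request(local_path, par_url, body)
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
```

Usage, with the placeholders from the curl command: `upload_via_par("/u01/app/oracle/admin/ORCL/dpdump/from_onprem/ITPASQTSTYLX_02.dmp", "<Pre-authenticated Request URL from step 3>")`.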

Once the upload completes, delete the pre-authenticated request: it is no longer needed, and anyone holding its URL can access the object or bucket until it expires.
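The pre-authenticated request URLs used in these steps follow a fixed layout, `/p/<PAR id>/n/<namespace>/b/<bucket>/o/<object>`, as the example URL shows. For a bucket-level PAR (the option for uploading multiple files), the URL ends in `/o/` and the object name is appended to it. The sketch below assumes that layout; `parse_par_url` and `object_url_from_bucket_par` are hypothetical helpers.

```python
from urllib.parse import quote, unquote, urlsplit


def parse_par_url(par_url: str) -> dict:
    """Split a PAR URL of the form .../p/<id>/n/<namespace>/b/<bucket>/o/<object>."""
    parts = urlsplit(par_url).path.strip("/").split("/")
    if len(parts) < 7 or [parts[0], parts[2], parts[4], parts[6]] != ["p", "n", "b", "o"]:
        raise ValueError(f"not a recognised PAR URL: {par_url}")
    return {
        "namespace": parts[3],
        "bucket": parts[5],
        # Empty for a bucket-level PAR; object names may themselves contain '/'.
        "object": unquote("/".join(parts[7:])),
    }


def object_url_from_bucket_par(bucket_par_url: str, object_name: str) -> str:
    """Append an object name to a bucket-level PAR URL (one ending in /o/)."""
    if parse_par_url(bucket_par_url)["object"]:
        raise ValueError("expected a bucket-level PAR URL ending in /o/")
    base = bucket_par_url if bucket_par_url.endswith("/") else bucket_par_url + "/"
    # Percent-encode characters such as spaces; '/' is left as-is.
    return base + quote(object_name)
```

With curl specifically, `curl -T <file> <bucket PAR URL ending in /o/>` also works, because curl appends the local file name when the URL has no file part.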

Is there a file size limit when uploading with REST? I got this error; my dump file is 380 GB.

    {"code":"RequestEntityTooLarge","message":"The Content-Length must be less than 53687091200 bytes (it was '397539438592')"}* Closing connection 0
    * schannel: shutting down SSL/TLS connection with objectstorage.us-ashburn-1.oraclecloud.com port 443
    * schannel: clear security context handle

That’s a good point. A single upload (one PUT request) to Oracle Object Storage (OOS) is limited to 50 GiB, which is the 53687091200 bytes in the error message. If your file is larger than 50 GiB, break it into smaller segments of no more than 50 GiB each and use a multipart upload; the segments that make up one object can total up to 10 TiB. The following page has more information: https://docs.cloud.oracle.com/en-us/iaas/Content/Object/Tasks/usingmultipartuploads.htm
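To make these limits concrete, here is a small sketch that plans the byte ranges of a multipart upload. It assumes a part size of at most 50 GiB and a 10 TiB object maximum, as stated above; `plan_parts` is a hypothetical helper that only computes offsets and does not call any Object Storage API. Numbering parts from 1 is an assumption about how the multipart API counts parts. For the 397539438592-byte file from the error message, it yields 8 parts: seven of 50 GiB and a final part of 21729800192 bytes.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4
MAX_SINGLE_PUT = 50 * GIB  # 53687091200 bytes, the figure in the error message
MAX_OBJECT = 10 * TIB      # maximum total size of one multipart object


def plan_parts(total_size: int, part_size: int = MAX_SINGLE_PUT) -> list:
    """Return (part_number, offset, length) tuples covering total_size bytes."""
    if not 0 < part_size <= MAX_SINGLE_PUT:
        raise ValueError("part_size must be between 1 byte and 50 GiB")
    if total_size > MAX_OBJECT:
        raise ValueError("file exceeds the 10 TiB object limit")
    parts = []
    offset = 0
    while offset < total_size:
        length = min(part_size, total_size - offset)  # last part may be shorter
        parts.append((len(parts) + 1, offset, length))
        offset += length
    return parts
```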

In your case, you can either regenerate the DMP files, specifying during the export that each dump file has a maximum size of 50 GiB (the Data Pump FILESIZE parameter, with a %U substitution variable in the DUMPFILE name so that each file gets a unique name); the export then automatically writes separate, smaller files. Or, if you don’t want to rerun the export, you can segment the existing 380 GB file into smaller pieces, for example with the Linux split command. Note that the pieces produced by split are byte ranges of a single dump file, not standalone dump files, so they have to be recombined into one object, for example as the parts of a multipart upload.
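For reference, splitting an existing file into fixed-size pieces can be sketched as follows, similar in spirit to `split -b`. The naming scheme `<file>.part001`, `<file>.part002`, … and the function name `split_file` are hypothetical; the file is copied in bounded buffers so a 380 GB dump never has to fit in memory. Concatenating the pieces in order reproduces the original bytes.

```python
def split_file(path: str, chunk_size: int, buf_size: int = 64 * 1024 * 1024) -> list:
    """Write path.part001, path.part002, ... of at most chunk_size bytes; return their paths."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    part_paths = []
    with open(path, "rb") as src:
        while True:
            first = src.read(min(buf_size, chunk_size))
            if not first:
                break  # end of input: no empty trailing part is created
            part_path = f"{path}.part{len(part_paths) + 1:03d}"
            with open(part_path, "wb") as dst:
                dst.write(first)
                remaining = chunk_size - len(first)
                # Keep copying in buffer-sized blocks until this part is full.
                while remaining > 0:
                    block = src.read(min(buf_size, remaining))
                    if not block:
                        break
                    dst.write(block)
                    remaining -= len(block)
            part_paths.append(part_path)
    return part_paths
```

For the case above, `split_file(dump_path, 50 * 1024 ** 3)` would produce pieces that fit the single-upload limit.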

If you create a pre-authenticated request URL, you don’t need the auth token. After creating it, the following curl command is all that is needed to upload a file:

curl -T /path/anyfile.txt PRE-AUTHENTICATED-REQUEST-URL

I would like to see a working example using the auth token that doesn’t use the pre-authenticated URL, as that’s really meant to be used temporarily.

Thanks

Is it possible to upload a file to Object Storage without a pre-authenticated request URL, using the auth token in the curl command instead?

I am getting an error while uploading a file:

curl -T selected_tables_11102019.dmp https://objectstorage.uk-london-1.oraclecloud.com/p………/DB-Dumps/o/

curl: (35) SSL connect error

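Sounds like an issue with the provided user and authentication token. Also note that curl error 35 (SSL connect error) is reported when the TLS handshake with the endpoint fails, so the SSL/TLS setup of curl on that machine is worth checking too.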

Yes, that is correct: a pre-authenticated request is not required when you authenticate with the auth token.
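As a sketch of what that looks like: without a PAR, the request goes to the Swift endpoint and carries the user name and auth token as HTTP Basic credentials, the same `-u '<user>:<token>'` pair used in the curl command in the post. The endpoint layout `https://swiftobjectstorage.<region>.oraclecloud.com/v1/<namespace>/<bucket>/<object>` is an assumption based on the OCI Swift API documentation and should be checked for your tenancy; federated users may need a prefixed user name (also an assumption). All function names below are hypothetical.

```python
import base64
import os
import urllib.parse
import urllib.request


def swift_object_url(region: str, namespace: str, bucket: str, object_name: str) -> str:
    # Assumed OCI Swift endpoint layout: /v1/<namespace>/<bucket>/<object>
    return (f"https://swiftobjectstorage.{region}.oraclecloud.com/v1/"
            f"{namespace}/{bucket}/{urllib.parse.quote(object_name)}")


def basic_auth_header(user: str, auth_token: str) -> str:
    # The same credentials curl sends for -u '<user>:<token>'.
    raw = f"{user}:{auth_token}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_swift_put_request(local_path, body, region, namespace, bucket,
                            object_name, user, auth_token) -> urllib.request.Request:
    """Build (but do not send) an authenticated PUT that streams `body`."""
    return urllib.request.Request(
        swift_object_url(region, namespace, bucket, object_name),
        data=body,  # open binary file, streamed in blocks
        method="PUT",
        headers={
            "Authorization": basic_auth_header(user, auth_token),
            "Content-Length": str(os.path.getsize(local_path)),
            "Content-Type": "application/octet-stream",
        },
    )


def upload_with_auth_token(local_path, region, namespace, bucket, object_name,
                           user, auth_token, timeout: float = 3600.0) -> int:
    """Upload one file (at most 50 GiB) and return the HTTP status code."""
    with open(local_path, "rb") as body:
        req = build_swift_put_request(local_path, body, region, namespace, bucket,
                                      object_name, user, auth_token)
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
```

The equivalent curl call would be `curl -u '<user>:<token>' --upload-file <file>` against that same Swift URL; unlike a PAR URL, the auth token stays valid until you delete it, so it is better suited to repeated or scripted uploads.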