This is part of a blog series on migrating data from Oracle Databases into Oracle Autonomous Data Warehouse (ADW). This blog discusses exporting Oracle Database Schemas using the Data Pump Command.

Quick links to other posts in this series:

Summary Blog Post

- Export Data into Dump Files

- Option 1: Export DB Schemas Using SQL Developer

- Option 2: Export DB Schemas Using Data Pump Command (this post)

- Transfer Data Dump Files over to Oracle Cloud Object Storage

- Import Data Dump Files into ADW Using SQL Developer

In this blog, I will describe the Data Pump command to export schemas from an Oracle Database using the command line. This is something that can be done from SQL Developer as well, instead of connecting to the Database machine to run the export command. Check my other post on how to export using SQL Developer.

- Before connecting through SSH to run the export, make sure there is a directory available in the DB to export the Dump files into. This directory needs to be created before you continue, in case you don’t already have a directory set up with the proper permissions to export files to. Use the following SQL command to create a directory if you don’t already have one. You can run this using SQL Developer or SQL Plus.

In the following example, the name of the DIRECTORY is DP_FROM_ONPREM.

CREATE DIRECTORY DP_FROM_ONPREM AS ‘/u01/app/oracle/admin/ORCL/dpdump/files’; - Connect to the DB machine with the command line. In my case, I am exporting schemas from DBaaS Cloud DB. So I use PUTTY using SSH to connect using oracle user and the DBaaS Cloud Compute private key.

- Run the following command:

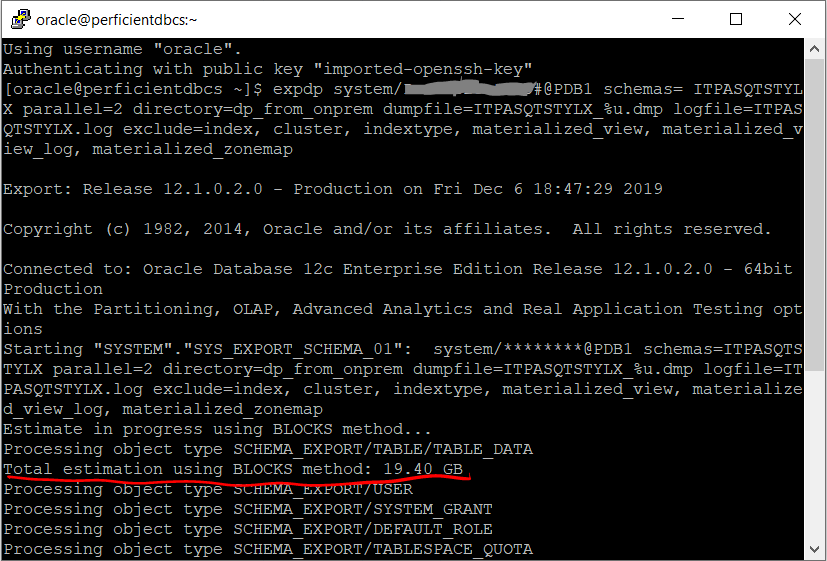

expdp system/******** schemas= ITPASQTSTYLX parallel=2 directory=dp_from_onprem dumpfile=ITPASQTSTYLX_%u.dmp logfile=ITPASQTSTYLX.log exclude=index, cluster, indextype, materialized_view, materialized_view_log, materialized_zonemap, db_link

In my example, the schema name I am exporting is ITPASQTSTYLX.

The directory is the same one created in step 1.

dumpfile is the parametrized name of the exported DMP files, since the export may consist of multiple files if the schema is large.

If your aim is to import this data dump into Autonomous Data Warehouse (ADW), it is recommended to use the exclude filter. Excluding certain object types like indexes is recommended because ADW is optimized to function without them.

As the export progresses, you will see the estimated size of the export among other information. The export duration depends on the size of the schema that is being exported. Notice that the command prompt shows the progress of the export and provides an estimate of the size of the data being exported.