Whenever I hear the word “container,” the first thing that comes to mind is the shipping containers on large cargo ships. The same idea applies to containerized applications in IT. Cargo containers are packed with goods and shipped to their destinations. Similarly, in IT, we can use containers to package, deliver, and manage our applications much more efficiently.

Container technology has existed for a long time, but it used to be hard to use. That has changed in the last couple of years thanks to Docker. Docker provides a simple, straightforward way to work with the Linux kernel’s container features (such as namespaces and cgroups), and it has changed both IT operations and application deployment. With Docker, things are improving rapidly in application development, testing, deployment, management, performance, security, and scaling. You can adopt and integrate it at whatever level you need.

Well, “container” sounds cool and powerful, but who can use it? Will it work for a small client with just a single server, a mid-sized client with several servers, or an enterprise client with 500+ servers? We will explore this further, but first we need to understand what a container is in technical terms and how it differs from virtualization.

What is a Docker container?

Many people compare containers with virtualization, which is a useful way to understand how containers are structured. But does that comparison explain how containers fit into our existing setup, deployment, and other processes? Let’s have a look.

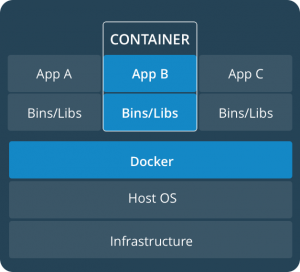

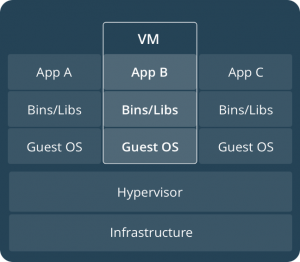

Containerization vs. virtualization (source: Docker.com)

As the figures show, virtualization requires a hypervisor on which each virtual machine runs its own full guest OS along with its applications. A container, by contrast, needs only the Docker Engine layer, and all containers share the host OS kernel. This saves system resources while still isolating applications that need different versions of runtimes and dependencies.

As an example of the traditional approach, let’s say you want to host multiple Magento applications with different PHP versions, web servers (Nginx, Apache), and caching components. Virtualization makes this possible, but you need a separate virtual machine for each combination, with its own OS and its own installed software. If you need five different PHP versions, you would need to set up five virtual machines, each with its own OS and components.

Now, with Docker, you only need a single host machine with one OS and the Docker Engine. That’s it. You then use Docker images with different PHP versions to build your application containers. The best part is that you can move, migrate, or deploy these container images seamlessly to any environment, such as Dev, QA, UAT, staging, or production. Because each image carries the application together with its dependencies, it behaves the same way in every environment, regardless of what else is installed on the host.
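To make the single-host setup concrete, here is a minimal Python sketch that builds `docker run` commands for several application containers, each on a different PHP version. The application names and host ports are hypothetical; the tags follow the `php:<version>-fpm` naming used by the official PHP images on Docker Hub, and port 9000 is PHP-FPM’s default listening port. A real Magento setup would add web server and cache containers alongside these.

```python
import shlex

# Hypothetical applications mapped to the PHP version each one needs.
APPS = {
    "store-a": "7.4",
    "store-b": "8.1",
    "store-c": "8.2",
}


def run_commands(apps: dict, base_port: int = 9001) -> list:
    """Build one `docker run` argument list per application container."""
    commands = []
    # Sort so host ports are assigned in a deterministic order.
    for offset, (name, php_version) in enumerate(sorted(apps.items())):
        commands.append([
            "docker", "run", "--detach",
            "--name", f"{name}-php",
            # Publish a distinct host port for PHP-FPM's default port 9000.
            "--publish", f"{base_port + offset}:9000",
            # Tag naming follows the official "php" images, e.g. php:8.2-fpm.
            f"php:{php_version}-fpm",
        ])
    return commands


if __name__ == "__main__":
    for cmd in run_commands(APPS):
        print(shlex.join(cmd))
```

Each printed command runs on the same Docker host; no extra virtual machines or guest operating systems are involved.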

How will this fit into your existing deployment process?

In the traditional deployment process, developers store their code in a repository such as Git and set up a local development environment using small virtual machines, Vagrant-like virtualization tools, etc. A developer builds the code, tests it, and pushes it to Git for QA. The QA team has its own QA setup, either on their own machines or hosted in a central location. QA testing might fail because the developer tested on a local machine whose underlying packages, such as the OS, PHP, and web server, don’t match the QA instance. Then what? The developer has to rework the build and cannot focus on anything else until it moves successfully to production.
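This kind of mismatch is easy to overlook when nobody writes it down. The small, hypothetical Python sketch below compares the component versions recorded for two environments and reports every difference; the component names and versions are made up for illustration.

```python
def diff_environments(dev: dict, qa: dict) -> dict:
    """Return {component: (dev_version, qa_version)} for every mismatch.

    A component present in only one environment is reported with None
    for the side where it is missing.
    """
    mismatches = {}
    # Union of keys so components missing on either side are caught too.
    for component in sorted(dev.keys() | qa.keys()):
        dev_version, qa_version = dev.get(component), qa.get(component)
        if dev_version != qa_version:
            mismatches[component] = (dev_version, qa_version)
    return mismatches


# Illustrative inventories of a developer laptop and the QA server.
dev_env = {"os": "ubuntu-22.04", "php": "8.2", "nginx": "1.24"}
qa_env = {"os": "ubuntu-20.04", "php": "8.1", "nginx": "1.24", "redis": "7.0"}

for component, (dev_v, qa_v) in diff_environments(dev_env, qa_env).items():
    print(f"{component}: dev={dev_v} qa={qa_v}")
```

When both environments run the same container images, a diff like this comes back empty by construction.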

Docker containerization solves this problem. You create Docker container images for the application and its dependencies, such as PHP, the web server, and caching. You then push those images to a Docker registry (Docker Hub or your own private registry). Developers pull the same image versions that match the production setup and develop the application on their local machines or in the dev environment.
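One practical way to make sure developers and QA pull exactly what production runs is to reject unpinned image references. The sketch below assumes a team rule that every reference must carry either a digest or an explicit tag other than `latest`; `registry.example.com` is a placeholder registry. It is a simplified parser, not Docker’s full image reference grammar.

```python
from typing import Optional, Tuple


def split_reference(ref: str) -> Tuple[str, Optional[str], Optional[str]]:
    """Split 'repository[:tag][@digest]' into (repository, tag, digest)."""
    name, _, digest = ref.partition("@")
    last_segment = name.rsplit("/", 1)[-1]
    if ":" in last_segment:
        # A colon in the last path segment separates the tag. A colon in an
        # earlier segment (e.g. "host:5000/app") is a registry port instead.
        repository, _, tag = name.rpartition(":")
        return repository, tag, digest or None
    return name, None, digest or None


def is_pinned(ref: str) -> bool:
    """True if the reference has a digest, or a tag other than 'latest'."""
    _, tag, digest = split_reference(ref)
    if digest:
        return True
    # No tag means Docker falls back to 'latest', which can change over time.
    return tag is not None and tag != "latest"


if __name__ == "__main__":
    for ref in [
        "registry.example.com/shop/php:8.2-fpm",
        "registry.example.com:5000/shop/php",
        "php:latest",
    ]:
        print(ref, "->", "pinned" if is_pinned(ref) else "NOT pinned")
```

A digest (`@sha256:...`) is the strictest pin: a tag can be moved to point at a different image, while a digest always identifies the same content.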

Once the code is ready, the development team can test the application’s functionality on their local systems. They will see the same results QA is going to see, because QA pulls the same versions of the application component images for testing. This saves a lot of developer time and lets developers focus on the next task. With modern continuous integration and continuous delivery (CI/CD) tools, you can automate this process and let each build move through your defined pipelines. There is far less risk of unexpected issues surfacing during QA, UAT, or staging review. This automation, combined with Docker containerization, saves development hours, helps you meet your go-live timeline, and ultimately saves money for the customer.
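A common CI/CD pattern behind this is “build once, promote everywhere”: the image is built a single time, and each stage deploys that same image, identified by its digest, only after the previous stage passes. The following Python sketch models that rule. The `Pipeline` class is hypothetical and not tied to any CI tool; the stage names come from this article.

```python
from typing import List, Optional

# Stage order taken from the environments named in this article.
STAGES = ["dev", "qa", "uat", "staging", "production"]


class Pipeline:
    """Hypothetical model of promoting one built image through all stages."""

    def __init__(self, image_digest: str):
        # Digest recorded when CI built the image; it never changes afterwards.
        self.image_digest = image_digest
        self.passed: List[str] = []

    def next_stage(self) -> Optional[str]:
        """Return the next stage to deploy to, or None once production is done."""
        if len(self.passed) < len(STAGES):
            return STAGES[len(self.passed)]
        return None

    def promote(self, digest: str, tests_passed: bool) -> str:
        """Mark the next stage as passed if the digest matches and its tests passed."""
        stage = self.next_stage()
        if stage is None:
            raise RuntimeError("image is already in production")
        if digest != self.image_digest:
            # A rebuilt image is a different artifact and must start from dev.
            raise ValueError(f"{stage}: digest does not match the image built by CI")
        if not tests_passed:
            raise RuntimeError(f"{stage}: tests failed, promotion stopped")
        self.passed.append(stage)
        return stage
```

Because every stage checks the same digest, QA, UAT, staging, and production all run byte-for-byte the image the developer tested.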

Similarly, containerization helps operations teams keep container images up to date and apply patches and upgrades more efficiently. You can build the containerized application as a package and release it into your environments. Together, these practices reduce production uncertainty, application failures, downtime, and errors, and they give customers a better experience.
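For patching, the operations team needs to know which running containers still use an image built before the latest patch. This hypothetical sketch compares each container’s image digest with the newest digest built for that repository; the container names, repositories, and digests are illustrative.

```python
def containers_to_redeploy(running: dict, latest: dict) -> list:
    """Return names of containers whose image is older than the latest build.

    running: {container_name: (repository, image_digest)}
    latest:  {repository: digest_of_newest_patched_build}
    Containers whose repository has no entry in `latest` are left alone.
    """
    stale = []
    for name, (repository, digest) in sorted(running.items()):
        newest = latest.get(repository)
        if newest is not None and digest != newest:
            stale.append(name)
    return stale


# Illustrative data: the nginx and php images were just rebuilt with patches.
running = {
    "web-1": ("shop/nginx", "sha256:aaa"),
    "web-2": ("shop/nginx", "sha256:bbb"),
    "php-1": ("shop/php", "sha256:ccc"),
    "cache-1": ("redis", "sha256:ddd"),
}
latest = {"shop/nginx": "sha256:bbb", "shop/php": "sha256:eee"}

print(containers_to_redeploy(running, latest))  # ['php-1', 'web-1']
```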

Is it that simple? Yes. If you build your containerized environment strategically, there will be fewer challenges.

What should you consider when creating a containerized environment and build process?

Architectural design

Because this will be at the heart of your deployment release cycle, choose robust, high-availability (HA) CI/CD automation that gives everyone visibility, clear responsibilities, and security.

Select the right tool

Many tools can perform similar tasks. Review your requirements, the associated costs, and the underlying hosting platform before choosing one.

Select secure Docker images

Choose official Docker images, or build your own images to match your requirements. Follow established best practices when doing so: for example, plan volumes, port exposure, image size, resource utilization, and persistent data storage. Also, store container images in your own Docker registry, or anywhere else you can control and secure access to your images.
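These considerations can be turned into an automated pre-deployment review. In the following sketch, the `ContainerSpec` format, the trusted registry prefix, and the 500 MB size budget are all assumptions chosen for illustration; this is not a Docker API, just a checklist expressed in Python.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumptions for illustration: your private registry and an image size budget.
TRUSTED_REGISTRY = "registry.example.com/"
MAX_IMAGE_SIZE_MB = 500


@dataclass
class ContainerSpec:
    """Hypothetical description of a container to deploy (not a Docker format)."""
    image: str
    image_size_mb: int
    volumes: List[str] = field(default_factory=list)
    stores_persistent_data: bool = False
    memory_limit_mb: Optional[int] = None


def review(spec: ContainerSpec) -> List[str]:
    """Return a list of findings; an empty list means the spec passes."""
    findings = []
    if not spec.image.startswith(TRUSTED_REGISTRY):
        findings.append("image is not stored in the trusted registry")
    if spec.image_size_mb > MAX_IMAGE_SIZE_MB:
        findings.append(f"image is {spec.image_size_mb} MB, budget is {MAX_IMAGE_SIZE_MB} MB")
    if spec.stores_persistent_data and not spec.volumes:
        # Data written only inside the container is lost when it is removed.
        findings.append("persistent data but no volume declared")
    if spec.memory_limit_mb is None:
        findings.append("no memory limit to bound resource usage")
    return findings
```

Running a check like this in the CI pipeline keeps the rules enforced consistently instead of relying on manual review.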

Select the right hosting platform

Choose a platform over which you have full control, whether it’s on-premises or with an IaaS provider. Make sure it provides high availability, on-demand scalability, and data security.

As with a lot of software, containerized applications also require some kind of management, especially for large enterprise customers whose applications run on hundreds or thousands of containers. Managing and monitoring them manually isn’t feasible at that scale, but there are very good tools to solve this problem. One of these is Kubernetes, which handles container cluster management, monitoring, scaling, and rollouts.
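As one concrete example of automated scaling, the Kubernetes Horizontal Pod Autoscaler documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that formula, skips changes within a tolerance (0.1, matching the controller’s default), and clamps the result to minimum and maximum replica counts. It is a simplification: the real controller also accounts for missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured minimum and maximum replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6.
print(desired_replicas(4, 90, 60))
```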

I hope this will help you to make a more robust, controlled, and secure CI/CD deployment process.