On March 8, 2017, I published the results of our study of which was smarter, the Google Assistant on Google Home or Alexa on the Amazon Echo. As it happens, we tested Microsoft’s Cortana and Apple’s Siri at the same time. We also tested Google search for reference, since it has historically been the leading place to get direct answers to questions via its Knowledge Panel and Featured Snippets.

Here, then, are our results for all of those digital personal assistants (also known as personal digital assistants).

Structure of the Test

We collected a set of 5,000 questions about everyday factual knowledge to ask each personal assistant and Google search. This is the same set of queries we used in the Google Home vs. Amazon Echo test referenced above. We asked each contestant the same 5,000 questions and recorded several categories of answers, including:

- Whether the assistant answered verbally

- Whether the answer came from a database (like the Knowledge Graph)

- Whether the answer was sourced from a third party (“According to Wikipedia …”)

- How often the assistant did not understand the query

- When the assistant attempted a response but simply got it wrong

All four personal assistants can also take actions on your behalf (such as booking a restaurant reservation, ordering flowers, or booking a flight), but we did not test those capabilities in this study. We focused solely on which of them was the smartest from a knowledge perspective.

Do People Ask Questions to Digital Personal Assistants?

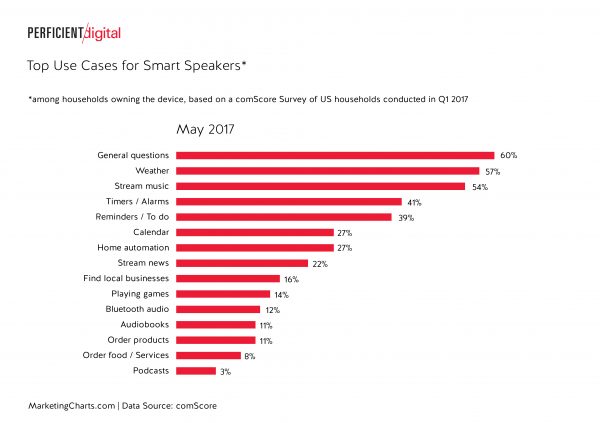

Before we dig into our results, it’s fair to ask whether answering questions is a primary use for devices like Google Home and Amazon Echo. As it turns out, a recent Comscore survey found that asking general questions is the number one use for such devices!

Chart from MarketingCharts

Which Personal Assistant is the Smartest?

Here are the results of our research:

| Personal Assistant | % Questions Answered | 100% Complete & Correct |
|---|---|---|
| The Google Assistant on Google Home | 68.1% | 90.6% |
| Cortana | 56.5% | 81.9% |
| Siri | 21.7% | 62.2% |
| Alexa on the Amazon Echo | 20.7% | 87.0% |

| Google search (for comparison purposes) | % Questions Answered | 100% Complete & Correct |
|---|---|---|
| Google Search | 74.3% | 97.4% |

Note that the 100% Complete & Correct column counts only answers that address the question fully and directly. Very few queries were answered in an overtly wrong way by any of the personal assistants; you can see more details on this below.
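If the 100% Complete & Correct rate is measured only over the questions each assistant answered (as the detailed results below describe), multiplying the two columns gives a rough estimate of the share of all 5,000 questions that each contestant answered fully and correctly. The sketch below is a minimal illustration under that assumption; the names `RESULTS` and `overall_rate` are hypothetical, and the inputs are simply the percentages from the tables above.

```python
# Estimate the share of ALL questions answered fully and correctly.
# Assumption: the "100% Complete & Correct" rate is conditional on the
# question having been answered, so
#   P(answered and correct) = P(answered) * P(correct | answered).
RESULTS = {
    "Google Assistant (Google Home)": (68.1, 90.6),
    "Cortana": (56.5, 81.9),
    "Siri": (21.7, 62.2),
    "Alexa (Amazon Echo)": (20.7, 87.0),
    "Google search": (74.3, 97.4),
}


def overall_rate(pct_answered: float, pct_correct_of_answered: float) -> float:
    """Combine two percentages into one percentage of all questions asked."""
    return pct_answered * pct_correct_of_answered / 100


if __name__ == "__main__":
    for name, (answered, correct) in RESULTS.items():
        print(f"{name}: {overall_rate(answered, correct):.1f}%")
```

Run as-is, this prints 61.7% for the Google Assistant, 46.3% for Cortana, 13.5% for Siri, 18.0% for Alexa, and 72.4% for Google search. Under this reading, Alexa edges ahead of Siri on fully correct answers despite answering slightly fewer questions, because more of its answers were complete and correct.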



Detailed Personal Assistant Test Results

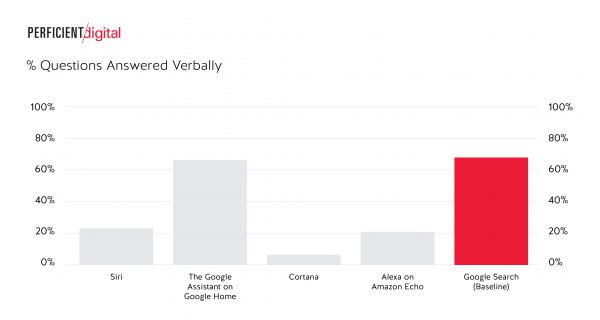

Let’s start by comparing how often each contestant responded verbally to the questions asked:

As you can see, the Google Assistant on Google Home provided verbal responses to the most questions. That does not mean it gave the most answers, however: the phone-based services (Google search, Cortana, Siri) all have the option of responding on screen only, and each of them did so a number of times. It’s also interesting to see how rarely Cortana responded verbally in this test.

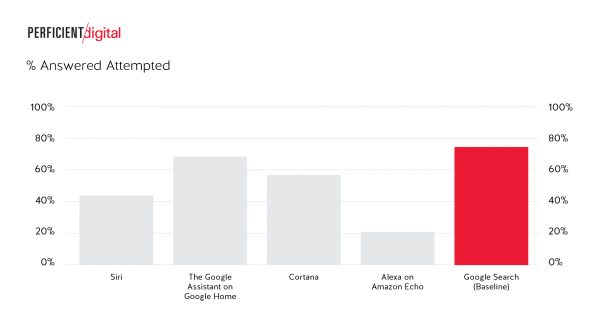

Here’s a look at the number of questions each attempted to answer with something other than a “Regular Search Result”:

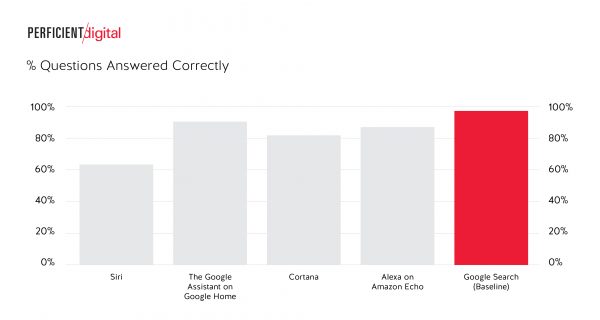

When the personal assistant (or Google search) responded with something other than a regular search result, how often did they respond to the question 100% correctly and completely? The data follows:


As it turns out, there are many ways for a response to fall short of 100% correct and complete:

- The query might have multiple possible answers, such as “how fast does a jaguar go” (the animal or the car?).

- Instead of ignoring a query that it does not understand, the personal assistant may map it to something it considers “close” to what the user asked for.

- The assistant may have provided a partially correct response.

- The assistant may have responded with a joke.

- Or, it may simply get the answer flat out wrong.

An example of the second item in the list above is the way Siri handles a query such as “awards for Louis Armstrong,” returning a link to a movie about Louis Armstrong. Scenarios like this accounted for a large share of Siri’s “not 100% correct” responses.


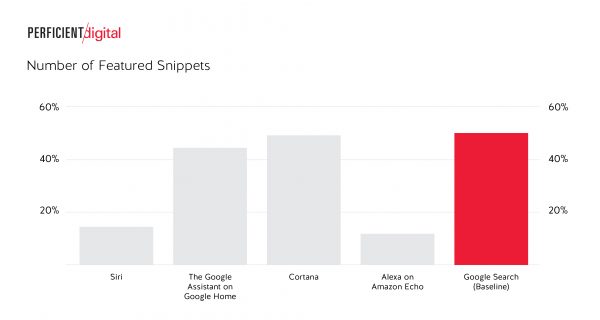

Featured Snippets

One of the big differentiators is the degree to which each personal assistant supports featured snippets. Let’s take a look at the data:


A couple of major observations from this data:

- Cortana has become very aggressive about serving featured snippets. When we last ran this test with Cortana in 2014, it had essentially none. Now, in 2017, it has almost as many as Google search or the Google Assistant.

- The personal assistants that can’t leverage a crawl of the web (Siri and Alexa) lag far behind in this type of answer.

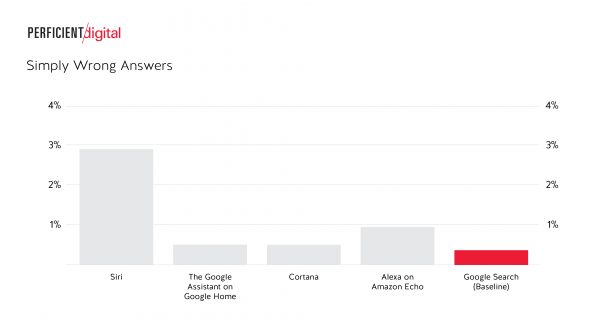

Examples of Wrong Answers

First, let’s look at the percentage of answered questions that were simply wrong:

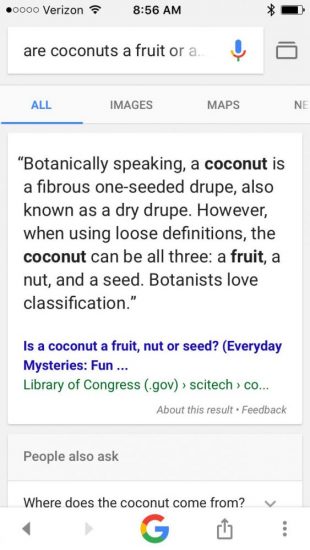

Note that in our test, we did not use oddball queries designed to trick the personal assistants into giving grossly incorrect facts, so these errors may be less spectacular than some shown in other articles (some of which I’ve contributed to). We also tested our non-assistant benchmark, Google search, and here is an example of an error from it:

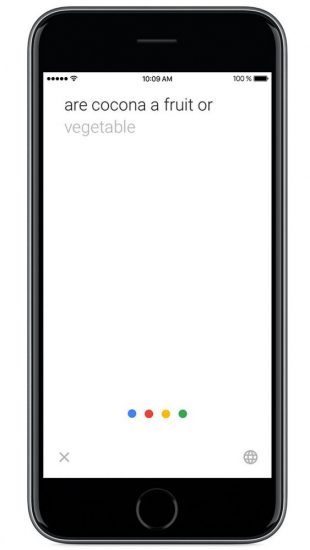

The actual question was “are cocona a fruit or a vegetable.” I checked this query myself and confirmed that Google search correctly recognized what I said (specifically, it transcribed “cocona” in the query), as you can see here:

Cocona is actually a rare Peruvian fruit. Google search nonetheless insisted on interpreting the word as “coconut,” so I did not get the answer I wanted.

Now to look at our personal assistants, here’s an example of an error from Cortana:

In this case, the response lists the highest-paid actors, which is likely to frustrate anyone who enters that query! Now, let’s look at one from Siri:

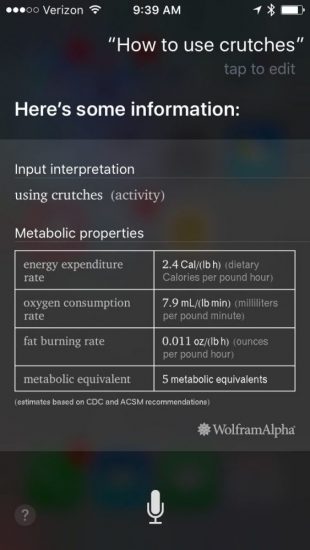

Siri draws some of its answers from Wolfram Alpha, and this appears to be one of them. Here, though, it went off the deep end and returned a largely nonsensical response. Next up, here’s one from the Google Assistant on Google Home:

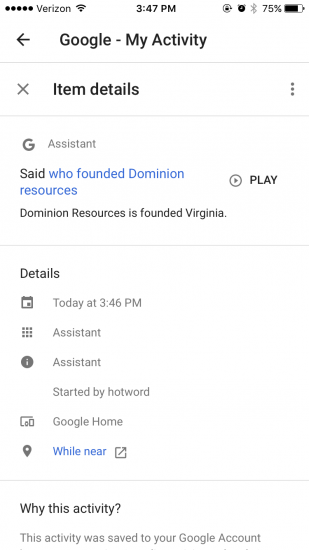

As you can see, the answer actually provides the location of Dominion Resources instead. Last, but not least, here’s one from Alexa on the Amazon Echo:

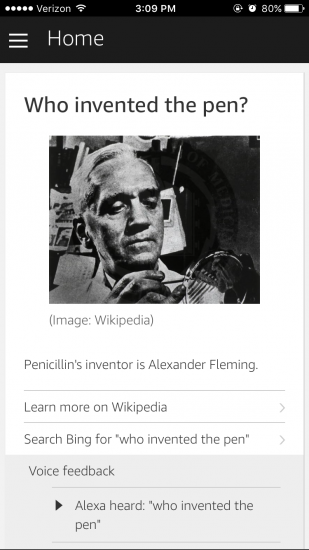

The answer given is for penicillin, not for the pen.

Which Personal Assistant is the Funniest?

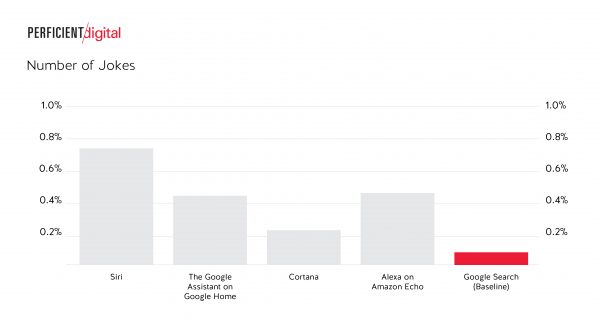

All of the personal assistants tell jokes in response to some questions. Here’s a summary of how many we encountered in our 5,000 query test:

Siri is definitely the leader here, but I find it interesting that the Google Assistant on Google Home is quite a bit funnier than Google search. For example, “do I look fat?” with Google search simply gives me a set of web search results, yet with the Google Assistant on Google Home the answer is, “I like you the way you are.”

There are a few jokes in Google search, though. For example, if I search “make me a sandwich,” it gives me a set of regular search results, but its verbal response is “Ha, make it yourself.” With Siri, if you ask “what is love?”, the response varies. One time you might get “I’m not going there,” but if you ask again you may get a different answer. Interestingly, if you ask a third time, it seems to give you a serious answer, on the off chance that this is what you actually want.

If you ask Cortana “What’s the meaning of life,” it may say “we all shine on, my friend.” With Alexa, a query like “Who is the best rapper?” will net you the answer: “Eminem. Wait! I forgot about Dre.”

Summary

Google still has a clear lead in terms of overall smarts with both Google search and the Google Assistant on Google Home. Cortana is pressing quite hard to close the gap, and has made great strides in the last three years. Alexa and Siri both face the limitation of not being able to leverage a full crawl of the web to supplement their knowledge bases. It will be interesting to see how they both address that challenge.

One major area not covered in this test is the overall connectivity of each personal assistant with other apps and services. This is an incredibly important part of rating a personal assistant as well. You can expect all four companies to be pressing hard to connect to as many quality apps and service providers as possible, as this will have a major bearing on how effective they all are.


Did you know that you can “Ask Perficient Digital” SEO & digital marketing questions on Google Assistant and Amazon Alexa devices? Here’s how to do it!

Is Google Assistant better now? And what about the Google Allo app? How might it affect SEO in the future?

The Allo app is an adaptation of Google Assistant. Your other questions are answered in our study, I believe.

Great post, Eric. I rely heavily on Google Assistant since I use Android as my main phone, but my second pick would be Siri. Cortana simply doesn’t cut it for me: it’s sluggish and the voice recognition is still kind of bad. It does offer more accurate results when I use it on my Windows laptop.

I’d be really interested to see what results sequential questioning would produce. I recall seeing a demonstration of Cortana versus Siri a couple of years ago, and Cortana was very impressive. Its understanding of pronouns was what really made it stand out when chaining questions together and interpreting them in the context of previous ones. Definitely good to see this research, though. Nice work 🙂

It is unrealistic to expect a general assistant to be able to do everything.

You didn’t ask any of these assistants to read your email, probably because you already guessed it is a bit too involved for them. They are generally best for single-answer questions rather than engaging in an activity.

We have produced an app called Speaking Email that addresses this shortcoming. It is a dedicated app for doing just email reading. Its smarts are around detecting email signatures, disclaimers, reply threads you have seen, and other clutter, and surfacing the important content. It has voice commands to archive, flag, trash, reply by dictation and forward.

These kinds of dedicated voice apps could be the way of the future, with consumers wanting to use voice technology but the general assistants lacking the smarts to engage in such involved activities.

After all, email is the number one activity smartphones are used for.

Hi Mike. If you read our study introduction carefully I think you’ll find that it was never our intention to ask these assistants to “do everything.” We focused specifically on their ability to answer general knowledge questions, and that is all we measured.

Hi Dawn. Google voice search and the Google-based assistants are also very good at understanding sequential questions, as has often been demonstrated. However, you are right, testing which is best at such sequential questions could be an interesting followup study!

Hi Mark,

I’d be very interested to see how they fare when you start to add pronouns such as he, she, and they into sequential questions.

Yes, good point. Even when you ask quite sensible questions, you get this abysmal response rate. My point was less about your questions than about the assistants being designed to handle anything; it’s not surprising that this sets them up for disappointment. But perversely, it’s such a pleasant surprise when they work that we almost forgive them!

Very interesting research!

Could you please share a link to the set of 5,000 questions?

It would be really interesting to look through it.

Thanks in advance. 🙂

Could you please try same 5000 questions on a random human with no internet connection or books?

Unfortunately, that’s not something that we’re sharing at this time.

OK, I understand. Thanks for your response, and thanks for this research! 🙂

Thanks for sharing such an interesting post. So funny the questions about weather!

I got similar questions from people. Some of them think digital personal assistants are magic behind everything. That’s fun! Anyway, Google is visiting my office next week. I guess I’ll have a lot to ask them.

I use an iPhone and have used an Android phone as well. Based on all my experience, I think Siri is the best digital personal assistant on a cellphone. As a food blogger, I find that Siri gives me exactly what I’m looking for about food and health care. I think it’s based on the search engine algorithm.

This is a great article. It gave me a lot of helpful information. Thank you.

I didn’t fully understand digital personal assistants, but after reading this post I’ve learned much more about the topic, so I really want to thank Eric Enge.

Hi Eric, I would love to know if there are plans to make your database open source in the future, as it would benefit a lot of people.

And if you are planning to open source the data, may I ask how long it will take you to make it publicly available?

We don’t plan to make it public at this time. Sorry about that!

It’s been almost a year since your original post Eric and Siri is still struggling to catch up.

I’m a diehard iPhone fan so Siri is an important aspect for me.

Google still beats the crap out of the competition with no legit competitor in sight.

Then stay tuned…Eric will be releasing an update to this study in the next two weeks…and it includes some interesting news about the progress of Siri.

I think if you use a personal assistant, make sure you train them well. Otherwise, they will do what they think you want.

My biggest gripe about VAs is how long it takes to get them trained. Once they are trained, though, it is amazing.

Hi, great content, but I’d like to know more about free, open-source digital assistants that can be trained programmatically. I’d be glad if you wrote some articles about that too.

Thanks for sharing.