In search engine optimization, auditing a website is a critical first step to understanding where the site stands today and how to make critical improvements to it.

In this post I’m going to walk you through many of the most critical elements of a basic audit. Note that there is much more that you can do, so don’t treat these 15 items as a hard limit on how far you choose to go with your audits!

When we start an audit with a client website, I’m fond of telling them that I hope their site is in horrible shape. It may be counterintuitive, but the worse shape the site is currently in, the better off they are.

After all, it means that the audit will offer more upside to their business. At Perficient Digital, we’ve done audits that have led to more than double the traffic of a client’s site.


An SEO audit can happen at any time in the lifecycle of a website. Many choose to do one during critical phases, like prior to a new website launch or when they’re planning to redesign or migrate an existing website.

However, audits are often an overlooked part of a website’s strategy, and many people don’t realize just how much the technical back end of a website affects their SEO efforts.

What Are the Fundamental Components of an SEO Audit?

In a nutshell, here are the basic elements of any SEO audit:

- Discoverability

- Basic Health Checks

- Keyword Health Checks

- Content Review

- URL Names

- URL Redirects

- Meta Tags Review

- Sitemaps and Robots.txt

- Image Alt Attributes

- Mobile Friendliness

- Site Speed

- Links

- Subdomains

- Geolocation

- Code Quality

The SEO Audit – in Detail

Now, let’s look at these crucial elements of auditing a website from an SEO perspective in a lot more detail.

1. Discoverability

You want to make sure your site is accessible to search engine crawlers. This means that the site’s content is available in HTML form, or in JavaScript that is relatively easy to interpret. For example, Google has difficulty extracting information from Adobe Flash files, though it has said that it can extract some information.

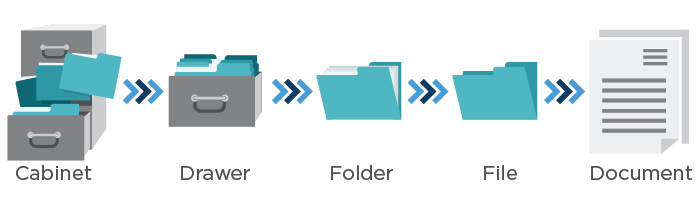
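One quick way to check whether important content is available in HTML form is to look for it in the page source as the server sends it, before any JavaScript runs. The sketch below is a rough illustration using only Python’s standard library, assuming you have already fetched the page’s raw HTML; the sample markup is made up. It only tells you what is in the raw HTML, not what Google sees after rendering.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text from raw HTML, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def phrase_in_raw_html(html: str, phrase: str) -> bool:
    """True if `phrase` appears in the text of the HTML as delivered by the
    server, i.e. without running any JavaScript. Case and whitespace are ignored."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return " ".join(phrase.split()).lower() in text


raw = '<h1>Winter Hats</h1><div id="app"></div><script>render("Wool beanie")</script>'
print(phrase_in_raw_html(raw, "winter hats"))  # in the HTML itself
print(phrase_in_raw_html(raw, "Wool beanie"))  # only produced by the script
```

If a key phrase only shows up after scripts run, that content depends on the search engine rendering JavaScript successfully.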

Part of having an accessible website for search engines and users is the information architecture on a site—how the content and “files” are organized. This helps search engines make connections between concepts and helps users find what they are looking for with ease.

To think about how to do this well, it’s helpful to compare it to how you organize paper files in your office.

A well-organized site hierarchy also helps the search engines better understand the semantic relationships between the sections of the site. This gets reinforced by other key site elements like XML Sitemaps, HTML site maps, and breadcrumbs, all of which can help neatly tie the overall site structure together.


2. Basic Health Checks

Basic health checks can provide quick red flags when a problem emerges, so it’s good to do these on a regular basis (even more often than you do a full audit). Here are four steps you can take to get a diagnosis of how a website is doing in the search engine results:

- Ensure Google Search Console and Bing Webmaster Tools accounts have been verified for the domain (and any subdomains, for mobile or other content areas). This site owner validation lets you see how the search engines view a site. Then, check these accounts on a regular basis to see if you’ve received any messages from the search engines. If the site has been hit by a penalty from Google, you’ll see a message, and you’ll want to address it as soon as possible. The search engines will also let you know if the site has been hacked.

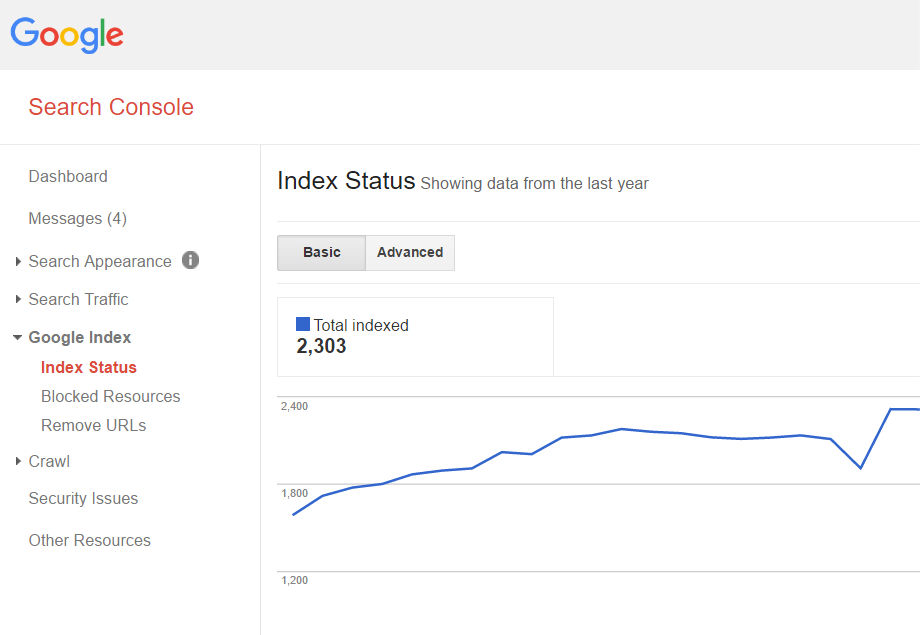

- Find out how many of a website’s pages appear to be in the search index. You can do this by going to Google Search Console as follows:

Has this number changed in an unexpected way since you last saw it? Sudden changes could indicate a problem. Also, does it seem like it matches up approximately with the number of pages you think exist?I wouldn’t worry about it being 20 percent smaller or larger than you think, but if it’s double, triple or more, or only about 20 percent of the site, you probably want to understand why.

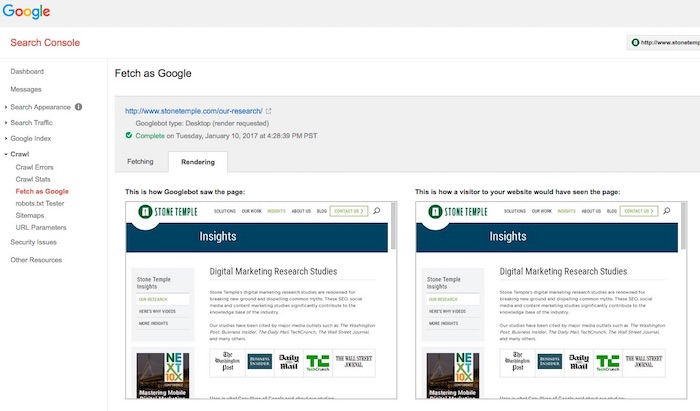

Has this number changed in an unexpected way since you last saw it? Sudden changes could indicate a problem. Also, does it seem like it matches up approximately with the number of pages you think exist?I wouldn’t worry about it being 20 percent smaller or larger than you think, but if it’s double, triple or more, or only about 20 percent of the site, you probably want to understand why. - Go into Google Search Console to make sure the cached versions of a website’s pages look the same as the live versions. Below you can see an example of this using a page on the Perficient Digital web site.

- Test searches of the website’s branded terms to make sure the site is ranking for them. If not, it could indicate a penalty. Check the Google Search Console/Bing Webmaster Tools accounts to see if there are any identifiable penalties.
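The rule of thumb for the indexed-page count can be expressed as a small check. The 20 percent band and the “double or more, or only about 20 percent” triggers come from the guidance above; labeling everything in between as “watch” is an assumption of this sketch, as is the function name. The indexed count would come from Search Console and the expected count from your own page inventory.

```python
def classify_index_count(indexed: int, expected: int) -> str:
    """Compare the number of indexed pages with the number you believe exist.

    - within about 20 percent either way: "normal"
    - double or more, or about 20 percent or less: "investigate"
    - anything in between (an assumed middle band): "watch"
    """
    if expected <= 0:
        raise ValueError("expected page count must be positive")
    ratio = indexed / expected
    if 0.8 <= ratio <= 1.2:
        return "normal"
    if ratio >= 2.0 or ratio <= 0.2:
        return "investigate"
    return "watch"


print(classify_index_count(indexed=2500, expected=1000))  # far more pages indexed than expected
```

Comparing the result with the previous audit’s figure also helps catch the sudden changes mentioned above.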


3. Keyword Health Checks

You’ll want to analyze the keywords you’re targeting on the site; many of the available SEO tools can help with this. One thing to look for in general is whether more than one page is targeting, or showing up in the search results for, the same keyword (aka “keyword cannibalization”).
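As a minimal sketch of the cannibalization check, assuming you can export (query, ranking URL) pairs from a rank-tracking tool or from Search Console’s performance report, you can group the rows by query and keep any query served by more than one URL. The export format and the function name are assumptions for illustration.

```python
from collections import defaultdict


def find_cannibalization(rows):
    """Return {query: [urls]} for queries where more than one URL on the
    site shows up. `rows` is an iterable of (query, url) pairs."""
    urls_by_query = defaultdict(set)
    for query, url in rows:
        urls_by_query[query.strip().lower()].add(url)
    return {q: sorted(urls) for q, urls in urls_by_query.items() if len(urls) > 1}


rows = [
    ("mens hats", "https://site.com/hats"),
    ("mens hats", "https://site.com/blog/best-hats"),
    ("wool scarf", "https://site.com/scarves"),
]
print(find_cannibalization(rows))
```

A hit is a prompt to review the pages, not proof of a problem: sometimes two URLs ranking for one query is fine.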

You can also use Search Console to see which keywords are driving traffic to the site. If critical keywords that used to receive traffic no longer do (because their rankings dropped), that could be a sign of a problem.

On the positive side of the ledger, look for “striking distance” keywords, those that rank in positions from five to 20 or so. These might be keywords where some level of optimization could move them up in the rankings. If you can move from position five to three, or from 15 to eight, on a major keyword, that could result in valuable extra traffic and provide reasonably high ROI for the effort involved.
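Finding striking distance keywords is essentially a filter and a sort. The sketch below assumes rows with a query, an average position, and an impression count (roughly the shape of a Search Console performance export); the `KeywordRow` type is hypothetical. The five-to-20 range comes from the text, and sorting by impressions is one reasonable way to rank the opportunities.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    query: str
    position: float  # average ranking position
    impressions: int


def striking_distance(rows, low=5, high=20):
    """Keywords ranking between `low` and `high` (inclusive), with the
    most-searched ones first."""
    candidates = [r for r in rows if low <= r.position <= high]
    return sorted(candidates, key=lambda r: r.impressions, reverse=True)


rows = [
    KeywordRow("wool hats", 3.2, 900),
    KeywordRow("mens beanies", 7.5, 4000),
    KeywordRow("winter caps", 14.0, 12000),
    KeywordRow("felt fedora", 31.0, 500),
]
print([r.query for r in striking_distance(rows)])
```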


4. Content Review

Here, we’re looking for a few things:

- Content depth, quality, and optimization: Do the pages have enough quality information to satisfy a searcher? You want the number of pages with little or “thin” content to be small compared to those with substantial content. There are many ways to generate thin content. One example is a site that has image galleries with separate URLs for each image. Another is a site with city pages for hundreds, or thousands, of locations where the business doesn’t operate, and where there is really no local aspect to the products or services it offers. Google has no interest in indexing all those versions, so you shouldn’t be asking it to do so! Content quality is often one of the most underappreciated aspects of SEO. At Perficient Digital, we’ve rewritten existing content on pages and seen substantial traffic lifts. In more than one case, we’ve done this on more than 100 pages of a site and seen traffic gains of more than 150 percent!

- Duplicate content: A lot of websites have duplicate content without even realizing it. One of the first things to check is that the “www” version of the site and the “non-www” version do not exist at the same time (do they both resolve?). This can also happen with “http” and “https” versions of a site. Pick one version and 301 redirect the other to it. You can also set the preferred domain in Google Search Console (but still do the redirects even if you do this).

- Ad density: Review the pages of your site to assess whether you’re overdoing it with advertising. Google doesn’t like sites that have too many ads above the fold. A best practice to keep in mind is that users should be able to see a substantial amount of the content they were looking for above the fold.
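For the www/non-www and http/https duplicate check, the idea is to request all four versions of the home page without following redirects and confirm that three of them return a 301 pointing at the one you chose. This is a sketch using Python’s standard `http.client`; the helper names are made up, and some servers answer HEAD requests differently from GET, so treat a surprising result as a reason to look closer.

```python
import http.client
from urllib.parse import urlsplit


def host_variants(domain: str):
    """The four scheme/host combinations that should collapse to one version."""
    bare = domain.removeprefix("www.")
    return [f"{scheme}://{host}/"
            for scheme in ("http", "https")
            for host in (bare, "www." + bare)]


def first_hop(url: str, timeout: float = 10.0):
    """Request `url` without following redirects; return (status, Location header)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        conn.request("HEAD", parts.path or "/")
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


# Usage (makes network requests):
# for url in host_variants("site.com"):
#     print(url, first_hop(url))
```

Ideally one variant returns 200 and the other three return 301 with a Location pointing at it.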


5. URL Names

Website URLs should be “clean,” short, and descriptive of the main idea of the page, and should indicate where a person is within the website, so make sure they are part of the SEO audit. Well-constructed URLs help both website users and search engines orient themselves.

For example: www.site.com/outerwear/mens/hats


It’s a good idea to include the main keyword for the web page in the URL, but never try to keyword-stuff (for example, www.site.com/outerwear/mens/hat-hats-hats-for-men).

Another consideration is URLs that have tracking parameters on them. Please don’t ever do this on a website! There are many ways to implement tracking on a site, and using parameters in the URLs is the worst of them.

If a website is doing this today, you’ll want to go through a project to remove the tracking parameters from the URLs, and switch to some other method for tracking.
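When auditing a crawl export, a simple per-URL check can flag both tracking parameters and paths that repeat the same word, a possible sign of keyword stuffing. This is a heuristic sketch: the `utm_` prefix is the common campaign-tagging convention, but the extra `TRACKING_PARAMS` names are assumptions you should replace with whatever your site actually appends, and a repeated word is only a prompt for review (a path like `/hats/wool-hats` would also be flagged).

```python
import re
from urllib.parse import parse_qs, urlsplit

# Assumed tracking parameter names; adjust for your site.
TRACKING_PARAMS = {"gclid", "fbclid", "sessionid", "ref"}


def audit_url(url: str):
    """Return a list of issues found in a single URL."""
    issues = []
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    tracking = sorted(p for p in params
                      if p.lower().startswith("utm_") or p.lower() in TRACKING_PARAMS)
    if tracking:
        issues.append("tracking parameters: " + ", ".join(tracking))
    # Split the path into words on slashes, hyphens, and underscores.
    words = [w for w in re.split(r"[/\-_]+", parts.path.lower()) if w]
    repeated = sorted({w for w in words if words.count(w) > 1})
    if repeated:
        issues.append("repeated words in path: " + ", ".join(repeated))
    return issues


print(audit_url("https://www.site.com/outerwear/mens/hat-hats-hats-for-men"))
```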

On the other hand, perhaps the URLs are only moderately suboptimal, such as this one:

http://www.site.com?category=428&product=80328

In cases like this, I don’t think changing the URLs is that urgent. I’d wait until you take on a larger site project, such as a redesign, and make the change then.

6. URL Redirects

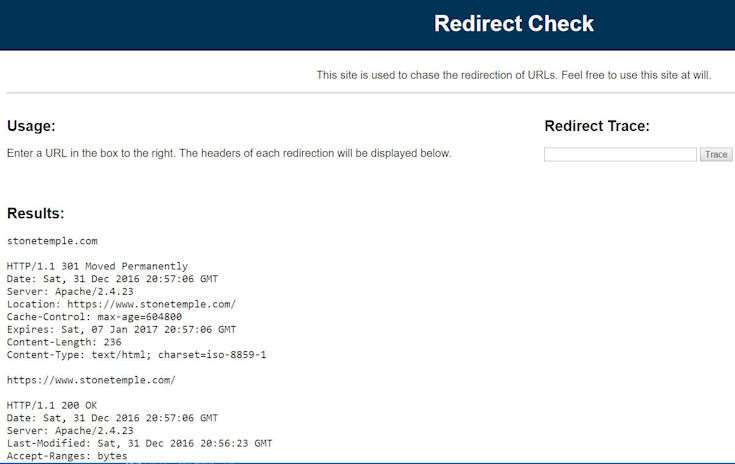

It’s a common best practice to redirect a web page that no longer needs to exist on a website to the next most relevant live page, using a 301 redirect. Other redirect types exist as well, so be sure to understand how each one functions before using it.


Google recommends 301 redirects for pages that have moved permanently, because a 301 indicates that a page has permanently moved from one location to another; other redirects, such as a 302, signal that the relocation is only temporary. If you use the wrong type of redirect, Google may keep the wrong page in its index.

It used to be the case that much less than 100 percent of the PageRank transferred to the new page through a redirect. In 2016, however, Google came out with a statement that there would be no PageRank value lost using any of the 3XX redirects.

To help check redirects, you can use tools like Redirect Check or RedirectChecker.org.
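If you can export your redirect rules (from server configuration or a crawler) into a mapping of URL to (status, target), a short script can flag temporary redirects, chains, and loops in bulk. The mapping format and the function are hypothetical; this is a sketch, not a replacement for the tools above. The temporary status codes listed are the standard 302, 303, and 307.

```python
# Status codes that signal a temporary move.
TEMPORARY = {302, 303, 307}


def trace_redirects(start, redirects, max_hops=10):
    """Follow `start` through `redirects` (dict: URL -> (status, target)).
    Returns (hops, warnings); hops lists the (url, status) pairs visited."""
    hops, warnings, seen = [], [], set()
    url, stopped = start, False
    while url in redirects:
        if url in seen:
            warnings.append(f"redirect loop at {url}")
            stopped = True
            break
        if len(hops) >= max_hops:
            warnings.append(f"more than {max_hops} hops")
            stopped = True
            break
        seen.add(url)
        status, target = redirects[url]
        hops.append((url, status))
        if status in TEMPORARY:
            warnings.append(f"{url} returns {status}; use a 301 if the move is permanent")
        url = target
    if len(hops) > 1 and not stopped:
        warnings.append(f"chain of {len(hops)} redirects; point {start} straight at {url}")
    return hops, warnings


redirects = {"/old-hats": (301, "/hats-2015"), "/hats-2015": (302, "/hats")}
print(trace_redirects("/old-hats", redirects))
```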

7. Meta Tags Review

Every page on a site should have a unique title tag and meta description tag. These tags make up the meta information that helps the search engines understand what the page is about.


Unique meta descriptions let the website suggest to the search engines what text to use as the description of its pages in the search results (rather than leaving search engines like Google to generate an “autosnippet,” which may not be as good).

Unique tags may also help keep some of the website’s pages from being filtered out of the search results, if search engines use the meta information to help detect duplicate content.

You’ll also want to take this opportunity to check for a robots meta tag on the pages of the site. If you find one, there could be trouble. For example, an unintentional “noindex” or “nofollow” value could adversely affect your SEO efforts.
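The three checks in this section (missing or duplicate titles, missing or duplicate meta descriptions, and restrictive robots meta tags) can be run over a set of crawled pages with Python’s built-in HTML parser. This sketch assumes you already have a dict of URL to HTML; the names are illustrative, and a real crawler would also need to handle `X-Robots-Tag` headers, which are not covered here.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadParser(HTMLParser):
    """Extracts the <title>, meta description, and robots meta tag."""

    def __init__(self):
        super().__init__()
        self.title, self.description, self.robots = "", None, None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = (a.get("name") or "").lower()
            if name == "description":
                self.description = a.get("content")
            elif name == "robots":
                self.robots = (a.get("content") or "").lower()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_meta(pages):
    """pages: dict of URL -> HTML. Returns a list of readable issues."""
    issues = []
    seen = {"title": defaultdict(list), "description": defaultdict(list)}
    for url, html in pages.items():
        p = HeadParser()
        p.feed(html)
        title = p.title.strip()
        if not title:
            issues.append(f"{url}: missing title")
        else:
            seen["title"][title].append(url)
        if not p.description:
            issues.append(f"{url}: missing meta description")
        else:
            seen["description"][p.description.strip()].append(url)
        if p.robots and ("noindex" in p.robots or "nofollow" in p.robots):
            issues.append(f"{url}: robots meta tag says '{p.robots}'")
    for kind, groups in seen.items():
        for value, urls in groups.items():
            if len(urls) > 1:
                issues.append(f"duplicate {kind} on {', '.join(urls)}")
    return issues
```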

8. Sitemaps and robots.txt Verification

It’s important to check the XML Sitemap and robots.txt files to make sure they are in good order. Is the XML Sitemap up to date? Is the robots.txt file blocking crawlers from sections of the site that you want crawled? You can use a feature in Google Search Console to test the robots.txt file, and you can test and add a Sitemap file there as well.
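To spot-check many important URLs against robots.txt at once, Python’s standard `urllib.robotparser` can parse the file’s text and answer “may this user agent fetch this URL?” The sample rules and URLs below are made up. Note that this parser follows the original robots.txt conventions (first matching rule wins, no wildcard support), which can differ from how Google resolves `*`, `$`, and Allow/Disallow conflicts, so confirm anything surprising with Google’s own tester.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that `robots_txt` disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


robots = "User-agent: *\nDisallow: /checkout/\nDisallow: /products/\n"
important = [
    "https://www.site.com/checkout/cart",
    "https://www.site.com/products/hats",  # probably should be crawlable
    "https://www.site.com/blog/",
]
print(blocked_urls(robots, important))
```

Any page you want in the search results that shows up in this list points to a rule that needs fixing.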

9. Image Alt Attributes

Alt attributes for the images on a website help describe what the image is about. This is helpful for two reasons:

- Search engines cannot “see” image files the way a human would, so they need extra data to understand the content of the image.

- Web users with disabilities, such as those who are blind, often use screen-reading software that describes the elements on a web page, including images, and these programs rely on the alt attributes.

It doesn’t hurt to use keyword-rich descriptions in the attributes and file names when it’s relevant to the actual image, but you should never keyword-stuff.
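Finding images without alt attributes is easy to automate. This sketch reports images with no `alt` at all separately from images with an empty `alt=""`, since an empty value is the usual convention for purely decorative images and may be intentional. The function name and sample markup are illustrative.

```python
from html.parser import HTMLParser


class ImgAltParser(HTMLParser):
    """Records <img> tags whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing, self.empty = [], []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or "(no src)"
        if "alt" not in a:
            self.missing.append(src)
        elif not (a["alt"] or "").strip():
            self.empty.append(src)  # fine for decorative images; review the rest


def audit_alts(html: str):
    parser = ImgAltParser()
    parser.feed(html)
    return parser.missing, parser.empty


html = '<img src="hat.jpg" alt="Red wool hat"><img src="logo.png"><img src="spacer.gif" alt="">'
print(audit_alts(html))
```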

10. Mobile Friendliness

The number of people searching and purchasing on their mobile devices is growing each year. At Perficient Digital, we have clients who get more than 70 percent of their traffic from mobile devices. Google has seen this coming for a long time and has been pushing websites to become mobile friendly for years.

Because mobile devices are such key players in search today, Google has declared (at the time of writing) that it will have a mobile-first index. This means it will rank search results based on the mobile version of a website first, even for desktop users.

One key aspect of a mobile-first strategy from Google is that its primary crawl will be of the mobile version of a website, and that means Google will be using the mobile crawl to discover pages on a site.

Most companies have built their desktop site to aid Google in discovering content, and their mobile site purely from a UX perspective. As a result, the crawl of a mobile site might be quite poor from a content discovery perspective.

Make sure to include a crawl of the mobile site as a key part of any audit of a site. Then compare the mobile crawl results with the crawl of the desktop site.
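Once you have URL lists from a desktop crawl and a mobile crawl (most crawlers can export them), the comparison comes down to set differences. This sketch ignores the host so that `www.` and `m.` versions of a page compare equal, which assumes the mobile site mirrors the desktop paths; for a responsive site the hosts are the same anyway. Pages found only on desktop are the ones Google may have trouble discovering with a mobile-first crawl.

```python
from urllib.parse import urlsplit


def page_key(url: str) -> str:
    """Path plus query, ignoring scheme, host, and a trailing slash."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return path + ("?" + parts.query if parts.query else "")


def compare_crawls(desktop_urls, mobile_urls):
    desktop = {page_key(u) for u in desktop_urls}
    mobile = {page_key(u) for u in mobile_urls}
    return {
        "desktop_only": sorted(desktop - mobile),
        "mobile_only": sorted(mobile - desktop),
    }


desktop = ["https://www.site.com/", "https://www.site.com/hats/", "https://www.site.com/scarves"]
mobile = ["https://m.site.com/", "https://m.site.com/hats"]
print(compare_crawls(desktop, mobile))
```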


If a website doesn’t have a mobile version, Google has said it will still crawl and rank the desktop version; however, not having mobile-friendly content means a website may not rank as well in the search results.

While there are a few different technical approaches to creating a mobile-friendly website, Google has recommended that websites use responsive design. There’s plenty of documentation on how to do that coming directly from Google, as well as tools that can help gauge a website’s mobile experience.

It’s worth mentioning Google’s Accelerated Mobile Pages (AMP) here as well. This Google initiative gives website publishers a way to deliver their web content to users even faster.

At the time of writing, Google has said that AMP pages won’t receive a ranking boost; page speed, however, is a ranking signal. The complexity of implementing AMP pages is one of the reasons some may choose not to explore it.

Another way to create mobile experiences is via progressive web apps, an up-and-coming way to provide app-like experiences on the web through the browser (without having to download an app).

The main benefit is the ability to access specific parts of a website in much the same way traditional apps allow.

11. Site Speed

Site speed is one of the signals in Google’s ranking algorithm. Slow load times can cause the crawling and indexing of a site to be slower, and can increase bounce rates on a website.

Historically, site speed has only been a ranking factor when a site was very slow, but Google has signaled that it will become more important over time. Google’s John Mueller has also indicated that a site that is nominally mobile-friendly but too slow may now be deemed not mobile-friendly. However, at the time of writing, Google does not treat mobile page speed as a ranking factor.


In fact, site speed has become such an important element of the overall user experience, especially in mobile, that Google has said it wants above-the-fold content for mobile users to render in one second or less.

To help people get more visibility into site speed, Google offers tools such as the PageSpeed Insights tool and the site speed reports found in Google Analytics.
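PageSpeed Insights can also be queried programmatically, which helps when you want scores for many URLs during an audit. This sketch assumes the public PageSpeed Insights API v5 endpoint and that the Lighthouse performance score (0 to 1) sits at `lighthouseResult.categories.performance.score` in the JSON response; check Google’s current API reference before relying on either, and note that heavier use may require an API key.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint for the PageSpeed Insights API, version 5.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a request URL for one page; strategy is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(response: dict) -> float:
    """Pull the Lighthouse performance score (0 to 1) out of a parsed response."""
    return response["lighthouseResult"]["categories"]["performance"]["score"]


def fetch_score(page_url: str, strategy: str = "mobile") -> float:
    """Makes a network call; may be slow or rate-limited."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as resp:
        return performance_score(json.load(resp))


# Usage (network): print(fetch_score("https://www.site.com/hats"))
```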

12. Links

Here, we’re looking at links in two ways: internal links (those on the website itself) and external links (other sites linking to the website).

Internal Links

First, look for pages that have an excessive number of links; you may want to reduce those. Second, make sure the web pages use anchor text intelligently, without abusing it, or the site could look spammy to search engines. For example, if you have a link to the home page in the global navigation, call it “Home” instead of using your juiciest keyword.
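Counting links per page is a simple way to find candidates for trimming. This sketch counts `<a href>` links in a page’s HTML, splitting them into internal and external by comparing hosts, and flags pages above a threshold. The default of 100 is a soft, adjustable assumption rather than a rule, and the function names are illustrative.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCounter(HTMLParser):
    """Counts <a href> links on one page, split into internal and external."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlsplit(page_url).netloc.lower()
        self.internal = 0
        self.external = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-page schemes.
        if not href or href.startswith(("#", "mailto:", "tel:", "javascript:")):
            return
        target_host = urlsplit(urljoin(self.page_url, href)).netloc.lower()
        if target_host == self.host:
            self.internal += 1
        else:
            self.external += 1


def count_links(page_url, html):
    counter = LinkCounter(page_url)
    counter.feed(html)
    return counter.internal, counter.external


def pages_with_many_links(pages, limit=100):
    """pages: dict of URL -> HTML. Returns URLs with more than `limit` links."""
    return [url for url, html in pages.items() if sum(count_links(url, html)) > limit]
```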

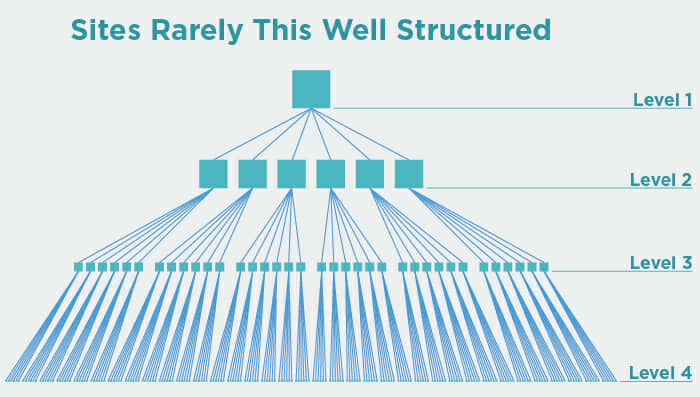

Internal links are what define the overall hierarchy of a site. The site might, for example, look like this:

The site above obviously has a well-defined structure, and that’s good. But in practice, sites rarely look like this, and some level of deviation from this is perfectly fine.

A home page may link directly to some of the company’s top products, as shown in Level 4 of the image, and that’s fine. However, it’s a problem if the site has a highly convoluted structure, in which many pages can only be reached after a large number of clicks from the home page, or in which each page links to too many other pages.

Look for these types of issues and try to resolve them to create something with a cleaner hierarchy.
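Click depth is one concrete way to measure how convoluted a structure is. Given the internal link graph from a crawl (each page mapped to the pages it links to), a breadth-first search from the home page gives the minimum number of clicks needed to reach every page; pages the search never reaches are orphans. The `max_depth=3` cutoff is an assumed threshold for illustration, not a Google rule.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search over the internal link graph.
    Returns {page: minimum clicks from home}; unreachable pages are absent."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def deep_or_orphaned(links, all_pages, home="/", max_depth=3):
    depth = click_depths(links, home)
    too_deep = sorted(p for p, n in depth.items() if n > max_depth)
    orphaned = sorted(set(all_pages) - set(depth))
    return too_deep, orphaned


links = {
    "/": ["/outerwear"],
    "/outerwear": ["/outerwear/mens"],
    "/outerwear/mens": ["/outerwear/mens/hats"],
    "/outerwear/mens/hats": ["/outerwear/mens/hats/wool"],
}
all_pages = list(links) + ["/outerwear/mens/hats/wool", "/old-promo"]
print(deep_or_orphaned(links, all_pages))
```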


External links

External links, also known as inbound links or backlinks, need to be analyzed to ensure there aren’t any problems, such as a history of purchased links, irrelevant links, or links that look spammy.

You can use tools like Open Site Explorer, Majestic SEO, Ahrefs Site Explorer, SEMRush, and the Google Search Console/Bing Webmaster Tools accounts to collect data about links.

Personally, I like to use all of these sources, collect all of their output data, dedupe it and build one master list. None of the tools provides a complete list, so using them all will get you the best possible picture.
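Merging several tools’ exports into one master list mostly comes down to normalizing URLs so the same link is recognized across sources. This sketch assumes each export has been reduced to (linking URL, target URL) pairs; the normalization rules (ignore scheme, `www.`, letter case in the host, trailing slashes, and fragments) are one reasonable choice, and the tool names are placeholders. Recording which tools reported each link also shows how much the sources overlap.

```python
from urllib.parse import urlsplit


def normalize_link(url: str) -> str:
    """Host (lowercase, without 'www.') + path (no trailing slash) + query."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower().removeprefix("www.")
    path = parts.path.rstrip("/")
    return host + path + ("?" + parts.query if parts.query else "")


def merge_backlinks(exports):
    """exports: dict of tool name -> iterable of (linking_url, target_url).
    Returns {(linking, target): set of tools that reported the link}."""
    master = {}
    for tool, rows in exports.items():
        for linking_url, target_url in rows:
            key = (normalize_link(linking_url), normalize_link(target_url))
            master.setdefault(key, set()).add(tool)
    return master


exports = {
    "tool_a": [("https://blog.example.org/post/", "https://www.site.com/hats")],
    "tool_b": [("http://blog.example.org/post", "https://site.com/hats/"),
               ("https://news.example.net/a", "https://www.site.com/")],
}
master = merge_backlinks(exports)
print(len(master))
```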

Look for patterns in the anchor text, such as too many of the links containing a critical keyword for the site. Unless that keyword also happens to be the name of the company, this is a sure sign of trouble.

Also, check that there are links to pages other than the home page. Too few of these is another sure sign of trouble in the backlink profile. Lastly, compare the site’s backlink profile with the backlink profiles of its major competitors.
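Both patterns described above can be measured from the merged link list. This sketch assumes you have (anchor text, target URL) pairs and reports the most common anchors with their share of all links, plus the share of links that point somewhere other than the home page. What counts as “too many” keyword anchors or “too few” deep links is a judgment call, best made against competitors’ numbers.

```python
from collections import Counter
from urllib.parse import urlsplit


def backlink_profile(links, top_n=5):
    """links: iterable of (anchor_text, target_url) pairs.
    Returns (top anchors with their share of all links, share of deep links)."""
    links = list(links)
    if not links:
        return [], 0.0
    total = len(links)
    anchors = Counter(anchor.strip().lower() for anchor, _ in links)
    top = [(anchor, n / total) for anchor, n in anchors.most_common(top_n)]
    # A "deep" link targets any path other than the root.
    deep = sum(1 for _, target in links if urlsplit(target).path.strip("/"))
    return top, deep / total


links = [
    ("Acme Hats", "https://acme.com/"),
    ("acme hats", "https://acme.com/"),
    ("cheap wool hats", "https://acme.com/"),
    ("cheap wool hats", "https://acme.com/"),
    ("winter guide", "https://acme.com/blog/winter"),
]
top, deep_share = backlink_profile(links)
print(top, deep_share)
```

Here a non-brand keyword (“cheap wool hats”) makes up 40 percent of the anchors, the kind of pattern the text warns about.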

Make sure that there are enough external links to the site, and that there are enough high-quality links in the mix.

13. Subdomains

Historically, it’s been believed that subdomains do not benefit from the primary domain’s full trust and link authority. This was largely because a subdomain could be under the control of a different party, so in the search engine’s eyes, it needed to be evaluated separately.

For an example of a domain that allows third parties to operate subdomains of their site, consider Blogger.com, which allows people to set up their own blogs and operate them as subdomains of Blogspot.com.

For the most part, this is not really true today, and search engines are extremely good at recognizing whether or not the subdomain really is a part of the main domain, or if it’s independently operated.

I still recommend using a subfolder rather than a subdomain as the default approach to adding new categories of content to a site. However, if you already have content on a subdomain, I would not move it to a subfolder unless you have clear evidence of a problem: there is a cost to site moves, and the upside of making the move is not enough to pay that cost.


For purposes of an audit, you need to make sure you include subdomains within the audit. As part of this, make sure your crawl covers them, and check analytics data for any clear evidence of a problem, such as a subdomain getting very little traffic or showing recent material traffic drops.
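A quick way to confirm that a crawl actually covered every subdomain is to count crawled URLs per host and compare that against the hosts you expect. This sketch assumes a plain list of crawled URLs and a hand-maintained list of expected hosts; both, and the function names, are illustrative.

```python
from collections import Counter
from urllib.parse import urlsplit


def crawl_hosts(crawled_urls, root_domain):
    """Count crawled URLs per host that belongs to `root_domain`."""
    counts = Counter()
    for url in crawled_urls:
        host = (urlsplit(url).hostname or "").lower()
        if host == root_domain or host.endswith("." + root_domain):
            counts[host] += 1
    return counts


def missing_subdomains(crawled_urls, root_domain, expected_hosts):
    """Expected hosts that the crawl never reached."""
    return sorted(set(expected_hosts) - set(crawl_hosts(crawled_urls, root_domain)))


urls = ["https://www.site.com/", "https://www.site.com/hats", "https://blog.site.com/post"]
print(missing_subdomains(urls, "site.com", ["www.site.com", "blog.site.com", "shop.site.com"]))
```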

For more on subdomains and their effect on SEO, see Everything You Need to Know About Subfolders, Subdomains, and Microsites for SEO.

14. Geolocation

For websites that aim to rank locally (for example, a chiropractor established in San Francisco who wants to be found for “San Francisco chiropractor”), you’ll want to consider things like making sure the business address is on every page of the site, and claiming the business’s Google Places listing and ensuring it is accurate.

Beyond local businesses, websites that target specific countries or multiple countries with multiple languages have a whole host of best practice considerations to contend with.

These include things like understanding how to use hreflang tags properly, and attracting attention (such as links) from within each country where products and services are sold by the business.
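As an illustration of hreflang markup, the sketch below generates the set of `<link rel="alternate" hreflang="...">` tags for one page from a mapping of language-region codes to URLs, plus an optional `x-default` for visitors who match none of them. Each language version should carry the full set, including a link to itself, and the versions should reference each other reciprocally. The codes and URLs are examples only.

```python
from html import escape


def hreflang_tags(alternates, x_default=None):
    """alternates maps a language(-region) code such as "en-us" to the URL of
    that version of the page. Returns the <link> tags as one string."""
    tags = [f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
            for code, url in sorted(alternates.items())]
    if x_default:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


print(hreflang_tags(
    {"en-us": "https://www.site.com/us/", "de-de": "https://www.site.com/de/"},
    x_default="https://www.site.com/",
))
```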

15. Code Quality

A website with clean code that allows the search engines to crawl it with ease enhances the experience for the crawlers. W3C validation is the “gold standard” for performing a checkup on the website’s code, but is not really required from an SEO perspective (if search engines punished sites for poor coding practices, there might not be much left to show in the search results). Nonetheless, clean coding improves the maintainability of a site, and reduces the chances of errors (including SEO errors) creeping into the site.

Conclusion

An SEO audit can occur at any stage of the lifecycle of a website, and can even be performed on a periodic basis, such as quarterly or annually, to ensure everything is in good shape.

While there are different approaches to performing an SEO audit, the steps listed in this article serve as a solid foundation for getting to know the site better and learning how it can improve, so your SEO efforts get the most ROI.

This article is adapted from the book The Art of SEO: Mastering Search Engine Optimization (3rd Edition), of which Eric Enge is lead co-author.

Art of SEO Series

This post is part of our Art of SEO series.

Comments

Eric – this is a great, comprehensive list. I’m glad you mentioned #8, robots.txt and XML Sitemaps. I think these two (or more) specific files are often overlooked, but so critically important. I find a lot of novice WordPress users in particular check ‘Discourage search engines’ without even knowing it. Google may say they don’t mind a ‘dirty’ XML Sitemap, but even a simple test of rebuilding clean Sitemaps and then checking the Search Console Crawl Stats, Sitemaps, and Index Status reports will beg to differ.

Really helpful Eric. Bookmarking this page right now. All the best in 2017 for you, Mark and the team.

Hi Eric – I do have a question, but before that I want to say that I appreciate your useful and practical (and well-organized!) approach to auditing a website for SEO, and while I’m doling out compliments, I do like your writing style in that you make things easy to understand.

What I was curious about is that the words “schema” and “markup” don’t appear here. Now, I do realize you started out saying there are other things that can and should be audited, and when I was reading the geolocation section, where your example is a San Francisco chiropractor, I thought for sure schema markup would be mentioned.

So my question is, did you leave that out on purpose? Maybe there’s a part 2 of this coming, or you feel that in an initial audit the above is enough “to chew on”?

Also: what are your thoughts on, or experience with, using the Google Search Console Data Highlighter tool to generate JSON-LD Schema markup? Do you think that tool is sufficient, or do you recommend using a different tool?

Thanks!

Hi David,

I do think there is value in Schema, and I would put it on the list if my list had 20 factors. We always include Schema in our audits, but we’re covering a lot more than the top 15!

As for the Google Search Console Data Highlighter tool, I haven’t tried it in a while. When I last used it, it was pretty limited in what it could do. Is it your experience that it does a complete job? I’d be interested to know.

Awesome! Thanks for putting this together.

I currently use Screaming Frog, Ahrefs, and SEMRush for my content audits.

What are your own favorite tools?

This was a great post about SEO! It reinforces what I learned recently at the SEO BootCamp Rebecca Gills taught.

Thank you for sharing all this great information! SEO seems very daunting to me, but I am starting to get a feel for the process and what to do.

Justine

Great post. Awesome. Thanks for sharing.

Thanks Eric. It’s very helpful for me.

Really interesting article. Is it necessary to redirect old URLs that now return 404 errors, are no longer needed, and do not have any backlinks? (The old URLs were not user friendly, so I created new URLs with the same content.)

For pages without backlinks, it’s not critical to redirect them. I do think that it speeds the process of Google understanding your site move if you do implement them, but Google will still eventually sort it out.

Indeed, a very good explanation. Many thanks for the subdomain part.

Hi, Eric. Great article. My SEO audit process wasn’t broken down to the level that yours is, but I’ll be making some changes to it. With regard to discoverability (Step 1), my first action is to head over to Google and search “site:[website domain]”. I’m sure you’re aware of this, but other readers might not know about this quick and easy way to see what and how Google has indexed your website.

Thanks for posting!

Thanks for the valuable advice. But having too many 301 redirects (even if they help Google better understand your site) can also slow down your website. My site has around 150 old URLs that are no longer useful, so I’m in a dilemma about whether to redirect them or not. I think I would give more priority to site speed than to Google understanding my site structure.

Apart from this, website navigation and UI matter a lot in an SEO audit, and so does social media: how it helps the site, and how users may trust a website because of its social media presence more than because of SSL. The security of the website and its flow matter too, as does what the site communicates to the customer.

Well, the above audits are very useful for an SEO company and its upcoming clients.

Good article Eric!

As for “It used to be the case that much less than 100 percent of the PageRank transferred to the new page through a redirect. In 2016, however, Google came out with a statement that there would be no PageRank value lost using any of the 3XX redirects.”

Are your experiences regarding the 3XX redirects in line with Google’s statement? In other words, do you believe them?

Good question. To be honest, we haven’t done a disciplined test to verify whether a 301 now loses PageRank or not. It would not be a bad thing for us to try! What’s your take on it?

Thanks for your response Eric!

I haven’t run an experiment on it either, so my take is largely based on experience and discussions with others. I’d say you lose some authority with a redirect, but the majority is passed on (provided the redirect is relevant).

Excellent article. There are so many variables that can affect a site’s SEO position, but I think you have covered and explained the most important ones in a simple way.

Nice job on the article, and thanks for a few reminders.

Hi Eric, thank you so much for sharing such an informative piece of work.

I am planning to relaunch my blog with a different theme. Many pages of the blog already have backlinks, and those pages will be removed. So, what do you think: should I 301 redirect all the old pages to the home page? Otherwise, there will be lots of 404 error pages. Or is there another option for handling this scenario?

I would redirect the blog posts on the old URLs to the same posts on the new theme (the new URLs). If for some reason you’re not keeping an old post (one with the links), then try redirecting it to the closest category page on the site after the relaunch.

Thank you, I appreciate it. Let me give it a try.

Hi Eric, this was a great article. I have a few questions about tracking parameters based on this “Another consideration are URLs that have tracking parameters on them. Please don’t ever do this on a website!”

Based on your experience, why are internal tracking parameters so toxic for the site? (Besides causing duplicate content if canonicals are not implemented or if these are not handled by the URL Parameter Tool in Search Console.)

Could you share a good way to track conversions or events?

Thanks in advance!

Hi Luis – you can use canonicals, but the search engine crawler will basically see different URLs every time they visit the site. I’d rather have them see the clean URLs every time they come to the site.

Part of the reason is that canonicals are a suggestion, and Google may ignore them, resulting in the wrong page being indexed.

The other reason is that they’re going to think that your URL space is different every time they visit it, and that’s not a good thing.

Lastly, use Google Analytics (or whatever analytics you use) instead, and you can get the same kind of data.

Great article. Now, it’s time to implement these SEO audit strategies on my website.

Thanks admin.

Hello Eric!

Thanks for the share, great article. One quick question for you: how often do you perform an SEO audit for your site?

Filip

Frequency should vary by site size and complexity. The Perficient Digital site is not that complicated, so twice a year is probably frequently enough. For other sites, you may want to do it quarterly.

Thanks Eric for this great post.

There’s one question I have though. At what point do you know that the links on a page are excessive?

Once you exceed 100 links, it starts to be a lot. But, that’s not necessarily a hard limit. It depends on the context of your page, the authority of your site, and what the user experience is like.

Thanks Eric


Quick question, Mark: do you think checking for schema markup can be part of an audit? It kinda helps too, especially with CTR, so can that be included?

I’ll answer, since I wrote the article. Schema is definitely something good to include in an audit, particularly in those cases where it results in changes in the way your pages are presented by Google in the SERPs.

This is an extremely helpful article and gave me an idea about the subdomain thing. There is little to no valuable information on how to deal with subdomains as far as SEO is concerned.

In fact, I have a question: do you think one should submit the subdomain’s sitemap separately from the main website’s sitemap?

Thanks for this informative post, Eric. I do ensure that I use analytics tools, sitemaps, and good SEO plugins for the websites I create. I would like to ask your opinion about Google Ads: is it advisable to continue paying Google to rank on the first page?

This is quite an interesting blog and is explained very well.

I have also started writing in the same niche. Could you please share your views here: Google Penalties and How to check


Great share! Thanks for the information. Keep posting!