This is Part IV in a multi-part series.

In Part I, we introduced the concept of analytically measuring the performance of delivery teams.

In Part II, we talked about how Agile practices enhance our ability to measure more accurately and more often.

In Part III, we introduced a system model for defining 3 dimensions of performance (Predictability, Quality and Productivity). We then got into the specifics of how to measure the first dimension: Predictability.

In this part, we talk about how to measure the second dimension: Quality.

There has probably been more written about quality metrics than about any other aspect of software development. In fact, most quality tools produce a plethora of statistics, graphs, dashboards and ‘eye candy’, all asking for your attention – as the following collage demonstrates.

The real key in quality metrics is knowing which metrics will give you the biggest bang for your attention: which ones require the least effort to capture, yet answer the questions you most want answered.

While all projects ‘ask’ different questions – and sometimes at different times depending on where in their lifecycle they currently are – some general ‘tried and true’ metrics are worth mentioning here.

- # Open Defects (weighted / normalized*) – Current number of open defects (operational and functional) weighted by severity.

- Defect Arrival Rate (weighted / normalized*) – Rate at which defects are being discovered (weighted by severity). Should trend downwards as QA completes.

- Defect Closure Rate (weighted / normalized*) – Measure of development team capacity to close defects as well as an indirect measure of code structural integrity, decoupling, cross-team training, etc.

- Total Defects Discovered (weighted / normalized*) – Number of defects opened during formal QA, weighted by severity. This is tracked independently by the QA team. All things being equal, a lower number indicates a higher level of quality. Note that this is closely linked to productivity and predictability metrics since ‘gaming’ those metrics will naturally result in a higher weighted defects metric.
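As a rough illustration of how the arrival rate, closure rate and open-defect count relate to one another, here is a minimal Python sketch. The defect log, its dates and the choice of ISO weeks as the reporting period are all hypothetical, and the counts are left unweighted for simplicity; most defect tracking tools will export something you can adapt to this shape.

```python
from collections import Counter
from datetime import date

# Hypothetical defect log: (opened_on, closed_on), with None for still-open defects.
defects = [
    (date(2024, 1, 2), date(2024, 1, 4)),
    (date(2024, 1, 3), None),
    (date(2024, 1, 9), date(2024, 1, 11)),
    (date(2024, 1, 10), date(2024, 1, 17)),
]


def week_key(d):
    """Return (ISO year, ISO week) so defects can be grouped by week."""
    iso = d.isocalendar()
    return (iso[0], iso[1])


def weekly_rates(defects):
    """Return rows of (week, arrivals, closures, open_at_end_of_week)."""
    arrivals = Counter(week_key(opened) for opened, _ in defects)
    closures = Counter(
        week_key(closed) for _, closed in defects if closed is not None
    )
    rows = []
    open_count = 0
    for week in sorted(set(arrivals) | set(closures)):
        # Open defects grow with arrivals and shrink with closures.
        open_count += arrivals[week] - closures[week]
        rows.append((week, arrivals[week], closures[week], open_count))
    return rows


for week, arrived, closed, still_open in weekly_rates(defects):
    print(week, "arrived:", arrived, "closed:", closed, "open:", still_open)
```

If arrivals stay above closures week after week, the open count climbs, which is the trend to watch for as QA nears completion.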

Note that in all of the above quality metrics, the key is to ‘weight and normalize’ each metric: weight it by the severity of the defects being measured, and normalize it against the complexity (amount) of the work produced. The second concept, normalization, is needed to compare multiple delivery channels or variations in the work being done over time, because more complex development or a larger number of development hours tends to produce a larger number of defects. One way to normalize is against the total ‘development hours’ that went into the code being tested, although in the next section on productivity we’ll establish a better metric of ‘work completed’ and even tie all of these metric dimensions (predictability, quality and productivity) together into a comprehensive dashboard.
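To make ‘weight and normalize’ concrete, here is a small Python sketch. The severity names, the weight values and the ‘per 1,000 development hours’ scale are assumptions chosen for illustration, not values prescribed by this series; substitute whatever reflects your own triage policy.

```python
from dataclasses import dataclass

# Hypothetical severity weights; tune these to your own triage policy.
SEVERITY_WEIGHTS = {"critical": 10, "major": 5, "minor": 2, "cosmetic": 1}


@dataclass
class Defect:
    severity: str
    is_open: bool


def weighted_count(defects):
    """Sum the severity weights of the given defects."""
    return sum(SEVERITY_WEIGHTS[d.severity] for d in defects)


def normalized_weighted_count(defects, dev_hours, per_hours=1000):
    """Weighted defects per `per_hours` development hours."""
    if dev_hours <= 0:
        raise ValueError("dev_hours must be positive")
    return weighted_count(defects) * per_hours / dev_hours


# Two hypothetical delivery teams with very different amounts of work.
team_a = [Defect("critical", True), Defect("minor", True), Defect("minor", False)]
team_b = [Defect("major", True), Defect("cosmetic", True)]

open_a = [d for d in team_a if d.is_open]
open_b = [d for d in team_b if d.is_open]

print(normalized_weighted_count(open_a, dev_hours=2000))  # 6.0
print(normalized_weighted_count(open_b, dev_hours=500))   # 12.0
```

In this made-up example, team A has the higher raw weighted score (12 versus 6), but once the scores are normalized against development hours, team B shows twice the defect density. That reversal is exactly why normalization matters when comparing delivery channels.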

For now, let’s assume you either normalize or you decide to simply weight and leave it at that. There are quite a number of useful graphs that can be produced just by having the statistics listed above. And the nice thing here is that most defect tracking tools will produce these graphs right out of the box. All you have to do is diligently capture the data.

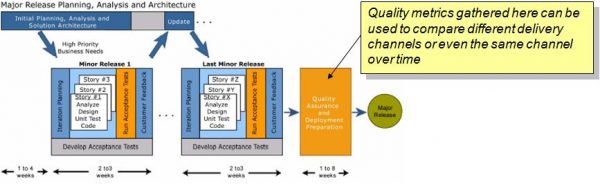

So where is the best place to capture testing metrics? Obviously in an Agile project, testing is something woven into the very fabric of the development iterations themselves. But to really measure the quality a delivery team is producing, you should be performing an independent quality check at the end of the iterations.

This final Quality Gate can be of relatively short duration and may even leverage the automated tests and tools produced during the development iterations. (The quality of what is produced can also be measured by how completely the team tested the code prior to this gate, and their testing scripts are a good measure of rigor in this department.) But an effective Quality / Validation Gate will also add its own testing, including ad-hoc testing and business-transaction-level testing. In this approach, Quality Gate testing follows a Quality Control model, popular in manufacturing, rather than serving as a dumping ground for poorly tested software.

Next week in Part V, we’ll cover the final (and often most controversial) dimension: Productivity. Then we’ll wrap it all up in the final Summary post.