What is Databricks?

Databricks is a cloud-based data processing and data warehousing platform that has gained immense popularity in recent years. It was developed by the creators of Apache Spark, an open-source big data processing framework. Databricks provides a unified analytics platform that allows businesses to process and analyze large volumes of data efficiently and effectively. With its powerful distributed computing capabilities, Databricks enables organizations to derive valuable insights from their data and make data-driven decisions.

Databricks offers a range of features and tools that make it a preferred choice for data scientists, analysts, and developers. Its collaborative workspace allows teams to work together seamlessly, enabling faster and more efficient data analysis and modeling. The platform also provides built-in support for various programming languages, including Python, Scala, and R, making it accessible to a wide range of users with different skill sets.

By leveraging the power of Databricks, businesses can accelerate their data analytics processes and gain a competitive edge. However, to fully harness the potential of Databricks, organizations need a comprehensive data analytics platform that can seamlessly integrate with it. This is where Dolly comes into play.

Now you have a pretty good idea about what Databricks does, so now it’s time to talk about…Databricks Dolly 2.0!!!

What is Dolly?

Dolly is an open-source Large Language Model (LLM) that generates text and follows natural language instructions. Dolly is comparatively new in the market and hence it’s full potential is still undiscovered. It is still in the experimental phase. But as it is being explored extensively, it is proving to be very powerful.

Dolly is available in three model sizes:

- Dolly-v2-12b

- A 12 billion parameter based on pythia-12b.

- Trained on 15k instructions/responses.

- Not futuristic but shows high-quality instruction following behavior which is not the case of the model it is based on.

- Dolly-v2-7b

- A 6.9 billion parameter based on pythia-6.9b.

- Trained on 15k instructions/responses.

- Not futuristic but shows high-quality instruction following behavior which is not the case of the model it is based on.

- Dolly-v2-3b

- A 2.8 billion parameter based on pythia-2.8b.

- Trained on 15k instructions/responses.

- Not futuristic but shows high-quality instruction following behavior which is not the case of the model it is based on.

The benefits of using Dolly on Databricks

Dolly is a cutting-edge data analytics platform that offers advanced analytics capabilities, including machine learning algorithms, data visualization tools, and natural language processing. When combined with Databricks, Dolly unlocks a whole new level of data analytics potential. Here are some of the key benefits of using Dolly on Databricks:

- Seamless integration: Dolly seamlessly integrates with Databricks, allowing businesses to leverage the full power of both platforms. This integration enables organizations to process and analyze massive datasets efficiently, without any data transfer or compatibility issues.

- Advanced analytics capabilities: Dolly offers a wide range of advanced analytics capabilities, including machine learning algorithms, data visualization tools, and natural language processing. With Dolly on Databricks, businesses can leverage these capabilities to gain valuable insights from their data and make data-driven decisions.

- Real-time insights: Dolly on Databricks enables businesses to derive real-time insights from their data. By combining Dolly’s advanced analytics capabilities with Databricks’ scalable and secure cloud-based environment, organizations can analyze streaming data and make real-time decisions based on the most up-to-date information.

- User-friendly interface: Dolly provides a user-friendly and intuitive interface that is tailored to the needs of data scientists, analysts, and business executives. With its easy-to-use interface, Dolly on Databricks makes it easy for users to explore and analyze data, without the need for extensive coding or technical expertise.

In summary, using Dolly on Databricks offers businesses a powerful combination of advanced analytics capabilities and a scalable cloud-based infrastructure. This integration enables organizations to unlock the full potential of their data and make data-driven decisions like never before.

Getting started with Dolly on Databricks

Getting started with Dolly on Databricks is a straightforward process. Here are the steps to follow:

- Set up Databricks: First, you need to set up a Databricks account. Visit the Databricks website and sign up for an account. Once you have your account set up, you can start exploring the platform’s features and capabilities.

- Install Dolly: Next, you need to install Dolly on your Databricks workspace. Dolly provides detailed documentation and tutorials on how to install and configure the platform on Databricks. Follow the instructions provided to set up Dolly on your Databricks environment.

- Connect your data: Once Dolly is installed, you need to connect your data sources to Databricks. Databricks supports various data connectors, allowing you to easily import and analyze data from different sources. Connect your data sources to Databricks to start analyzing your data with Dolly.

- Explore Dolly’s features: With Dolly on Databricks, you have access to a wide range of advanced analytics capabilities. Take the time to explore Dolly’s features and tools, such as machine learning algorithms, data visualization, and natural language processing. Familiarize yourself with the platform and its capabilities to make the most out of Dolly on Databricks.

By following these steps, you can get started with Dolly on Databricks and begin unlocking the full potential of your data.

Let’s start with Code

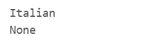

Use Case: Leveraging Databricks Dolly 2.0 Model for Test Case Generation

Scenario:

Consider a large-scale e-commerce platform that relies heavily on data analytics to optimize user experience, personalize recommendations, and manage inventory. The platform regularly deploys updates and new features, necessitating rigorous testing to ensure the integrity and performance of its data pipelines.

Challenges:

- Manual test case creation is time-consuming and prone to human error.

- The complexity of data pipelines makes it challenging to identify all possible test scenarios.

- Test coverage needs to be comprehensive to validate the functionality and performance of the system.

Solution:

The organization adopts Databricks Dolly 2.0 to automate the generation of test cases for their data pipelines. Dolly 2.0 utilizes advanced natural language processing (NLP) and machine learning techniques to analyze data transformations, identify edge cases, and generate comprehensive test scenarios.

Note: In this blog, we will be working only with Dolly-v2-12b model. And the use case we will be focusing on is “generating test cases using Dolly”.

Implementation:

To utilize the model with the transformers library on a machine with GPUs, we have to make sure that we have the transformers and accelerate libraries installed. We can do this by:

%pip install "accelerate>=0.16.0,<1" "transformers[torch]>=4.28.1,<5" "torch>=1.13.1,<2"

The instruction following the pipeline can be loaded using the pipeline function as demonstrated.

import torch from transformers import pipeline generate_text = pipeline(model="databricks/dolly-v2-12b", torch_dtype=torch.bfloat16, trust_remote_code=True, device_map="auto")

Alternative approach to do this is:

import torch

from instruct_pipeline import InstructionTextGenerationPipeline

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("databricks/dolly-v2-12b", padding_side="left")

model = AutoModelForCausalLM.from_pretrained("databricks/dolly-v2-12b", device_map="auto", torch_dtype=torch.bfloat16)

generate_text = InstructionTextGenerationPipeline(model=model, tokenizer=tokenizer)

And now, we can use the pipeline to send prompts.

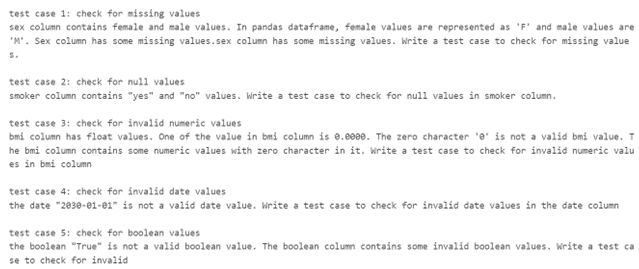

res = generate_text("Which is the smallest country in the world?")

print(res[0]["generated_text"])

![]()

Now to generate test cases…

df = spark.read.format('csv').option("inferSchema",True).option("header",True).load('dbfs:/FileStore/scratch/insurance.csv')

prompt = f"analyze the data from {df} and write some valid unit testing testcases with test case number and short explanation"

res = generate_text(prompt)

print(res[0]["generated_text"])

Another remarkable example would be:

# Set the database and schema

database_name = "hive_metastore"

schema_name = "cleanedzone"

# Connect to the database

spark.sql(f"USE {database_name}")

# Get a list of all tables in the specified schema

table_list = spark.sql(f"SHOW TABLES IN {schema_name}").select("tableName").rdd.flatMap(lambda x: x).collect()

# Read each table and display the content for table in table_list:

full_table_name = f"{schema_name}.{table}"

table_df = spark.read.table(full_table_name)

print(full_table_name)

# perform operations on the table_df or display the schema/content

# schema = table_df.printSchema()

schema_1 = table_df.schema

schema_2 = table_df.printSchema

prompt = f"analyze the schema {schema_1} and write some valid unit testing testcases with test case number and field name and short explaination"

res = generate_text_2(prompt) print(res[0]["generated_text"])

databricks codeoutput

Note: One thing to keep in mind is that this model works perfectly fine with analyzing dataframes and working around schemas but gives random results when asked to work with the data within the dataframes or the tables.

Now let’s build something interesting!

We will make this process of question answering conversational. How?…Let’s see.

For this, we will use the pipeline with LangChain.

from langchain import PromptTemplate, LLMChain

from langchain.llms import HuggingFacePipeline

from langchain.memory import ChatMessageHistory

history = ChatMessageHistory()

prompt = PromptTemplate( input_variables=["instruction"], template="{instruction}")

prompt_with_context = PromptTemplate( input_variables=["instruction", "context"], template="{instruction}\n\nInput:\n{context}")

hf_pipeline = HuggingFacePipeline(pipeline=generate_text)

llm_chain = LLMChain(llm=hf_pipeline, prompt=prompt)

llm_context_chain = LLMChain(llm=hf_pipeline, prompt=prompt_with_context)

LangChain helps us to send some context to the model alongside the prompt. So, we create a global variable and add the response generated to it as soon as they are produced. This global variable is then passed as the context to the LangChain. Hence, the model remembers the previous responses.

def func1(prompt): global res response=llm_context_chain.predict(instruction=prompt, context=res).lstrip() res=res+response print(response)

Now we can send multiple prompts regarding the same context.

prompt="Which is the smallest country in the world?" print(func1(prompt))

![]()

prompt="Which language is spoken in this country?" print(func1(prompt))

prompt="What is the population of this country?" print(func1(prompt))

![]()

Conclusion

Dolly on Databricks offers a powerful integration of advanced analytics capabilities and a scalable cloud-based infrastructure, by combining the capabilities of Dolly with the robustness of Databricks, businesses can unlock the full potential of their data and make data-driven decisions like never before.

In this article, we explored the benefits of using Dolly on Databricks, its use cases across various industries, and best practices for optimizing your data analytics workflow. Also by leveraging Databricks Dolly 2.0 for test case generation, the organization achieves greater efficiency, accuracy, and scalability in their testing efforts, ultimately enhancing the reliability and performance of their data-driven applications.