Caching is the practice of storing frequently used data in memory, on the file system, or in a database so that it can be retrieved quickly, which improves processing times. This strategy is most useful when data changes infrequently or is static. In general, the benefits of caching include improved responsiveness, increased performance, and reduced network costs.

The Cache scope in MuleSoft is used to store reusable, frequently used data. Different caching strategies are available, which are discussed later. We can use the caching mechanism to improve performance by shortening processing times and reducing the load on Mule instances.

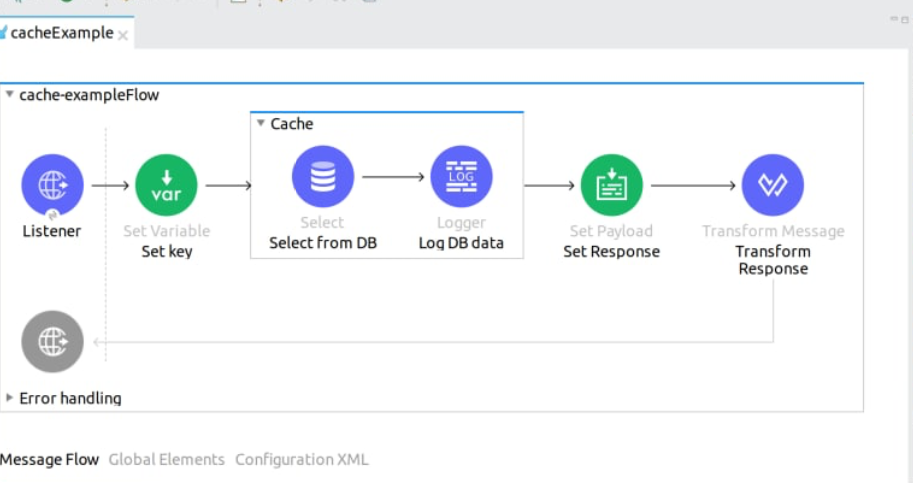

The snippet above shows a flow in which the result of a database call is stored in an internal in-memory cache through a caching strategy. When the same data is requested multiple times, subsequent calls retrieve it from the cache instead of querying the database again. This reduces the load on the back-end systems while still producing the desired output.
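The read-through behaviour described above can be illustrated with a minimal Python sketch. It is not Mule code: `query_customer_from_db` is a hypothetical stand-in for the database operation inside the Cache scope, and a plain dictionary plays the role of the in-memory cache.

```python
import time
from typing import Dict

db_calls = 0                      # counts how often the "database" is actually hit
cache: Dict[int, dict] = {}       # stands in for the in-memory cache

def query_customer_from_db(customer_id: int) -> dict:
    """Hypothetical stand-in for a slow database select."""
    global db_calls
    db_calls += 1
    time.sleep(0.05)              # simulate query latency
    return {"id": customer_id, "name": f"Customer {customer_id}"}

def get_customer(customer_id: int) -> dict:
    if customer_id in cache:      # later calls: served from the cache
        return cache[customer_id]
    result = query_customer_from_db(customer_id)  # first call: go to the database
    cache[customer_id] = result   # keep the result for subsequent calls
    return result

first = get_customer(42)
second = get_customer(42)
print(db_calls)  # 1
```

Only the first call reaches the simulated database; the second is answered from the dictionary, which is the effect the Cache scope has on the back-end system.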

The Caching Process

How exactly does the Cache scope work in Mule 4?

When part of a flow is enclosed in a Cache scope, the scope performs the following actions each time a message arrives:

- Checks whether the payload is repeatable, that is, whether it can be read more than once (for example, a repeatable stream). Only a repeatable payload goes through the caching logic; if the payload is not repeatable, the message goes through normal flow processing and nothing is cached.

- Generates a key that identifies the payload. By default, Mule uses a SHA-256 key generator, which takes the SHA-256 digest of the message payload as the key.

- Checks whether the key is already present in the cache. The cache can be local (in memory) or an Object Store, such as a persistent Object Store or Object Store v2. If the key is not found, it is a cache miss, and the processors inside the Cache scope are executed.

If the key is found, it is a cache hit: the scope returns the cached value, which is then used by the next processor, and the processors inside the Cache scope are not executed. On a cache miss, the scope stores the result as a key-value pair in the cache before exiting, so that subsequent requests with the same key are served from the cache.
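The steps above can be modelled in a short Python sketch. This is an illustration under simplifying assumptions, not Mule's implementation: `CacheScopeSketch` and `enrich_order` are hypothetical names, a `bytes` value stands in for a repeatable payload, and any other iterable stands in for a consumable stream.

```python
import hashlib
from typing import Callable, Dict, Iterable, Union

Payload = Union[bytes, Iterable[bytes]]

class CacheScopeSketch:
    """Illustrative model of the Cache scope steps (not Mule's code)."""

    def __init__(self) -> None:
        self.store: Dict[str, bytes] = {}  # stands in for the in-memory cache or Object Store
        self.hits = 0
        self.misses = 0

    @staticmethod
    def default_key(payload: bytes) -> str:
        # Default key: the SHA-256 digest of the payload.
        return hashlib.sha256(payload).hexdigest()

    def execute(self, payload: Payload, processors: Callable[[bytes], bytes]) -> bytes:
        # Step 1: only repeatable payloads are cached. Here a bytes object counts
        # as repeatable; any other iterable is treated as a consumable stream
        # that is read once and processed without caching.
        if not isinstance(payload, bytes):
            return processors(b"".join(payload))
        # Step 2: generate the key.
        key = self.default_key(payload)
        # Step 3: look the key up in the cache.
        if key in self.store:
            self.hits += 1           # cache hit: inner processors are skipped
            return self.store[key]
        self.misses += 1             # cache miss: run the inner processors
        result = processors(payload)
        self.store[key] = result     # store the key-value pair before leaving the scope
        return result

calls = []

def enrich_order(payload: bytes) -> bytes:
    calls.append(payload)            # records every real execution
    return payload.upper()

scope = CacheScopeSketch()
scope.execute(b"order-1", enrich_order)          # miss: processors run
scope.execute(b"order-1", enrich_order)          # hit: served from cache
scope.execute(iter([b"order-1"]), enrich_order)  # not repeatable: runs, not cached
print(scope.hits, scope.misses, len(calls))      # 1 1 2
```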

Caching Configurations

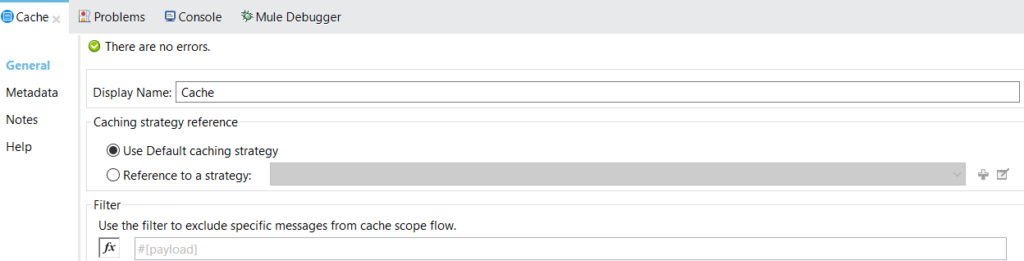

To use a caching strategy, configure it either in the Cache scope's properties panel or as a global element under Global Elements in Anypoint Studio. There are two main caching strategy options in Mule:

Default Caching: If you do not specify a caching strategy, Mule uses the default caching strategy, which provides a basic caching mechanism. Everything is cached in memory, which is volatile and non-persistent; that is, if you restart your application, the cached data is lost. If you need to cache large static payloads, use a custom caching strategy instead.

Reference to a Strategy: This option lets you create a custom caching strategy. With it, you can use an Object Store and define the cache size (maximum number of entries), the time to live of each entry, and other settings as your requirements dictate.
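As a rough illustration of what the cache size and time-to-live settings control, the following Python sketch implements a bounded store with a per-entry TTL. `BoundedTtlStore`, its parameters, and the injectable clock are hypothetical; evicting the oldest entry when the store is full and checking expiry only when an entry is read are assumptions of this sketch and may not match how a Mule Object Store evicts or expires entries.

```python
import time
from collections import OrderedDict
from typing import Callable, Hashable, Optional

class BoundedTtlStore:
    """Hypothetical object store with a maximum entry count and a per-entry TTL."""

    def __init__(self, max_entries: int, entry_ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_entries = max_entries
        self.entry_ttl = entry_ttl_seconds
        self.clock = clock                    # injectable so the example is deterministic
        self._data: OrderedDict = OrderedDict()  # key -> (stored_at, value)

    def get(self, key: Hashable) -> Optional[object]:
        item = self._data.get(key)
        if item is None:
            return None                       # never stored, or already evicted
        stored_at, value = item
        if self.clock() - stored_at > self.entry_ttl:
            del self._data[key]               # expired: drop it and report a miss
            return None
        return value

    def put(self, key: Hashable, value: object) -> None:
        if key in self._data:
            del self._data[key]               # overwrite: re-insert as newest
        elif len(self._data) >= self.max_entries:
            self._data.popitem(last=False)    # full: evict the oldest entry (FIFO)
        self._data[key] = (self.clock(), value)

    def __len__(self) -> int:
        return len(self._data)

now = [0.0]                                   # fake clock, in seconds
store = BoundedTtlStore(max_entries=2, entry_ttl_seconds=60, clock=lambda: now[0])
store.put("a", 1)
store.put("b", 2)
store.put("c", 3)                             # exceeds max_entries, so "a" is evicted
evicted = store.get("a")
fresh = store.get("c")
now[0] = 61.0                                 # advance past the 60-second TTL
expired = store.get("b")
print(evicted, fresh, expired, len(store))    # None 3 None 1
```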

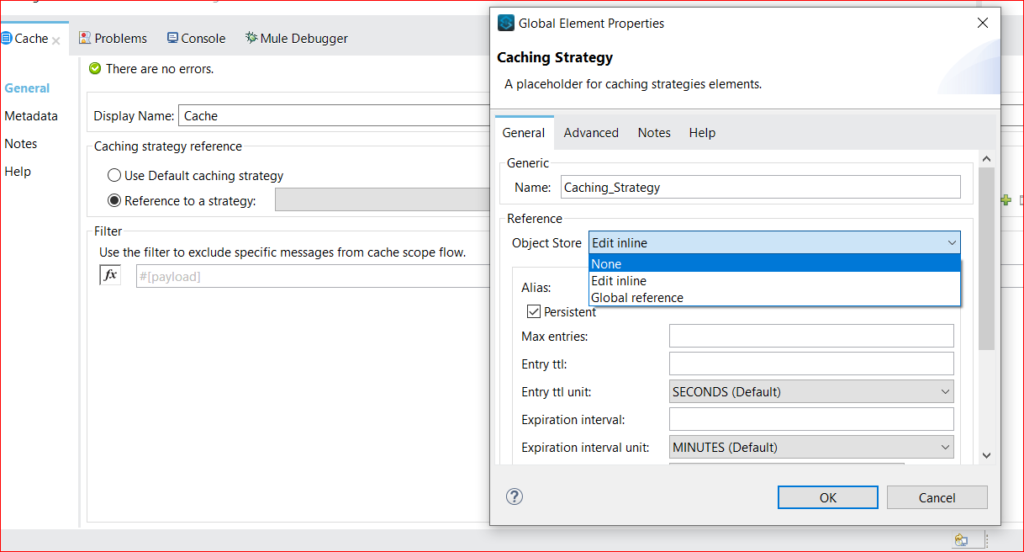

To configure a custom caching strategy:

- Open the Caching Strategy Configuration window.

- Define the name of the caching strategy.

- Define the Object Store, either inline (Edit inline) or as a reference to a globally configured Object Store (Global reference).

- Select how the key used to store events in the cache is produced.

- Open the Advanced tab in the properties window to configure advanced settings.
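The choice of key matters: a digest of the whole payload changes whenever any part of the payload changes, whereas a key built from a single field lets requests that differ only in irrelevant fields share one cache entry. The Python sketch below, which is not Mule configuration, contrasts the two; `default_key`, `field_key`, and the `customerId` and `requestedAt` fields are hypothetical, and serialising to JSON before hashing is an assumption made so that dictionaries can be digested.

```python
import hashlib
import json
from typing import Any, Callable

KeyFn = Callable[[Any], str]

def default_key(payload: Any) -> str:
    # SHA-256 digest of the whole payload; JSON with sorted keys is an
    # assumption of this sketch so that equal dictionaries hash equally.
    raw = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(raw).hexdigest()

def field_key(field: str) -> KeyFn:
    # Hypothetical custom key built from a single payload field.
    def key(payload: Any) -> str:
        return str(payload[field])
    return key

req_a = {"customerId": 7, "requestedAt": "2024-01-01T10:00:00Z"}
req_b = {"customerId": 7, "requestedAt": "2024-01-01T10:05:00Z"}

by_customer = field_key("customerId")
same_default = default_key(req_a) == default_key(req_b)  # whole payload differs
same_custom = by_customer(req_a) == by_customer(req_b)   # same customerId
print(same_default, same_custom)  # False True
```

The trade-off is that a narrower key must still capture everything that affects the response; otherwise, requests that should produce different results would share a cached value.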

There are two ways to store cached data. The default strategy is non-persistent, whereas a custom strategy can use either an in-memory Object Store or a persistent Object Store, which stores the data on the local file system.
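The practical difference between the two storage options is what survives a restart. In this Python sketch, `InMemoryStore` and `FileBackedStore` are hypothetical classes, and writing a JSON file to a temporary directory is an assumption chosen for simplicity; Mule's persistent Object Store manages its own storage. A restart is simulated by creating fresh instances.

```python
import json
import os
import tempfile
from typing import Optional

class InMemoryStore:
    """Non-persistent: contents live only as long as this object (the 'app')."""

    def __init__(self) -> None:
        self._data: dict = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

class FileBackedStore(InMemoryStore):
    """Persistent: every write is saved to a file and reloaded on start-up."""

    def __init__(self, path: str) -> None:
        super().__init__()
        self.path = path
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                self._data = json.load(f)

    def put(self, key: str, value: str) -> None:
        super().put(key, value)
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(self._data, f)

path = os.path.join(tempfile.mkdtemp(), "cache.json")

volatile = InMemoryStore()
volatile.put("rates", "cached-response")
persistent = FileBackedStore(path)
persistent.put("rates", "cached-response")

# Simulate an application restart by creating fresh instances.
volatile = InMemoryStore()
persistent = FileBackedStore(path)
print(volatile.get("rates"), persistent.get("rates"))  # None cached-response
```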

In addition, if another system or application needs to access the Object Store externally through a REST API, we can enable Object Store v2 when deploying to CloudHub. Object Store v2 is also useful when the cache must be kept consistent, or synchronized, across a cluster of nodes.

In conclusion, caching in MuleSoft helps process data faster. It’s effective for two types of tasks: 1) processing repeated requests for the same information, and 2) processing requests for information that involve large repeatable streams. For example, when the Cache scope receives a duplicate message payload, it can return the cached response instead of repeating the time-consuming processing.

To learn more, review MuleSoft’s Cache scope documentation or contact us to discuss your enterprise’s integration strategy.

Perficient + MuleSoft

At Perficient, we excel in tactical MuleSoft implementations by helping you address the full spectrum of challenges with lasting solutions, rather than relying on band-aid fixes. The end result is an intelligent, multifunctional resource that reduces costs over time and equips your organization to proactively prepare for future integration demands.

We’re a Premier MuleSoft partner with more than 15 years of integration expertise across industries including financial services, healthcare, retail, and more. After MuleSoft’s acquisition by Salesforce, our continued innovation in the integration space offers more customized experiences on software developed by MuleSoft. We combine the MuleSoft product suite with our connectivity expertise to provide comprehensive solutions both on-premises and in the cloud.

Contact us today to learn how we can help you implement MuleSoft to solve your enterprise’s integration challenges.

References

https://docs.mulesoft.com/mule-runtime/4.3/cache-scope