Transmission Control Protocol (TCP) is a fundamental networking protocol. It is part of the Internet protocol suite and operates at the transport layer. All network transmissions are broken up into packets. TCP provides reliable delivery: the data arrives complete, in order, and without errors. This requires a lot of back-and-forth communication (a three-way handshake to open the connection, then acknowledgements for the data received). A problem occurs when a packet does not arrive within the stipulated time, which can happen for many reasons. The network connection then becomes very slow and causes frustration for the end user. This article explains a real-world scenario of network latency and the experiments carried out to recover a Linux server from it.

LATENCY IN NETWORK:

RTT – ROUND TRIP TIME.

It is the time taken for a packet to travel from a specific source to a destination and back again.

It is an important measure for judging whether the acknowledgement for a packet came back in a reasonable time.

In practice, we run the ping command from the source against the destination IP to check whether the communication is alive or dead; ping also reports the RTT.

Latency is the delay in communication; we speak of a latency problem when the RTT is higher than normal.

Many other factors, such as the distance between the source and destination, the number of hops between them and the status of those hops, also affect latency.
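As a rough illustration of RTT, the time taken to open a TCP connection can be measured from Python, because connect() returns only after the SYN and SYN-ACK have crossed the network. This is only a sketch: the function names and the "twice the baseline" threshold are our own assumptions, not a standard definition of latency.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 3.0) -> float:
    """Return the time in milliseconds needed to open a TCP connection.

    connect() completes after the SYN / SYN-ACK exchange, so the elapsed
    time is roughly one round trip plus local overhead.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def is_latent(rtt_ms: float, baseline_ms: float, factor: float = 2.0) -> bool:
    """Flag a sample whose RTT exceeds the usual RTT by `factor`.

    The factor of 2 is an arbitrary, illustrative threshold.
    """
    return rtt_ms > baseline_ms * factor
```

Calling tcp_connect_rtt_ms with a reachable host and an open port returns one sample; taking several samples gives a baseline to compare against.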

LINUX SPECIFIC:

To inspect the hops and the packets between the source and destination, there are a few built-in tools available, such as traceroute, snoop tracing and tcpdump, to get these details on a patched Linux kernel.

TRACEROUTE COMMAND:

This command shows the status of each hop between source and destination, up to a maximum of 30 hops by default.

| Command: traceroute hostname |

It completes once the destination is reached or the maximum hop count is used up. A broken hop in between shows up as the point beyond which no further replies come back.
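If the trace has to be run from a script, a small Python wrapper like the one below can be used. It is a sketch under assumptions: the helper names are ours, and it relies on the traceroute binary being installed. The -m option sets the maximum number of hops (30 by default).

```python
import subprocess
from typing import List


def traceroute_cmd(host: str, max_hops: int = 30) -> List[str]:
    """Build the traceroute argument list; -m sets the maximum hop count."""
    if not 1 <= max_hops <= 255:
        raise ValueError("max_hops must be between 1 and 255")
    return ["traceroute", "-m", str(max_hops), host]


def run_traceroute(host: str, max_hops: int = 30) -> str:
    """Run traceroute and return its raw text output."""
    result = subprocess.run(
        traceroute_cmd(host, max_hops),
        capture_output=True,
        text=True,
        check=False,  # an unreachable host is a result here, not an error
    )
    return result.stdout
```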

SNOOP TRACING:

Snoop gives brief details about the packets in transmission. It is very useful for packet-level analysis and is commonly used for that purpose.

TCPDUMP:

Wireshark can be used to read the capture details produced by tcpdump. Socket-level information can be fetched using the 'ss' command.

Example:

ss -inet

Here i, n, e and t are options to the command, where:

| Option | Description |
| --- | --- |
| -n, --numeric | Do not try to resolve service names. Show exact bandwidth values, instead of human-readable. |
| -e, --extended | Show detailed socket information. |
| -i, --info | Show internal TCP information. |
| -t, --tcp | Display TCP sockets. |

All of the above is basic information for packet analysis. The TCP parameters that impact the flow of packets are:

1. CONGESTION CONTROL ALGORITHMS. There are many algorithms that determine how packets are sent and how acknowledgements are handled. They are all built around the congestion window, the amount of unacknowledged data the sender allows itself to have in flight.

A few examples:

1. CUBIC: A traditional algorithm and the default in the Linux kernel, designed mainly for high-bandwidth, high-latency networks.

2. RENO: Another traditional algorithm, whose main feature is fast recovery.

3. BBR (Bottleneck Bandwidth and Round-trip propagation time): The latest of the three, developed by Google. Google reported that it improved YouTube network throughput by about 4% on average around the globe and by more than 14% in some countries.

When the issue occurred, the congestion control algorithm in use on the Linux server was checked.

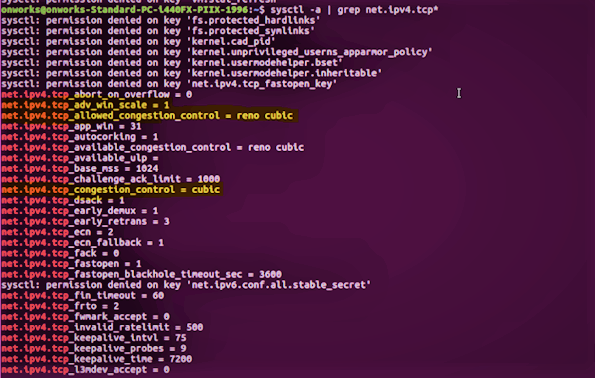

| sysctl -a | grep tcp_congestion_control |

This command displays the congestion control algorithm currently in use.

sysctl -a displays all system-level (kernel) configuration parameters.
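The same values can be read without sysctl, because the kernel exposes them as files under /proc/sys/net/ipv4. The Python sketch below reads the current and the available algorithms. The function names are our own, and the base directory is a parameter only so that the code can be tried against a copy of the files.

```python
from pathlib import Path
from typing import Dict, List, Union

PROC_IPV4 = Path("/proc/sys/net/ipv4")


def read_tcp_setting(name: str, base: Path = PROC_IPV4) -> str:
    """Read one kernel TCP setting, e.g. 'tcp_congestion_control'."""
    return (base / name).read_text().strip()


def congestion_control_status(
    base: Path = PROC_IPV4,
) -> Dict[str, Union[str, List[str]]]:
    """Return the algorithm in use and the algorithms the kernel offers."""
    current = read_tcp_setting("tcp_congestion_control", base)
    available = read_tcp_setting("tcp_available_congestion_control", base).split()
    return {"current": current, "available": available}
```

If bbr is missing from the "available" list, the kernel either predates BBR or does not have the module loaded.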

The traditional algorithms (CUBIC and RENO) run into many network issues, so Google came up with the BBR algorithm to reduce latency. It is available only in Linux kernel version 4.9 and above. Our existing servers ran kernels older than 4.9, and upgrading at such a critical moment was not feasible, so BBR was kept as a future fix.
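A quick way to check whether a server meets the 4.9 requirement is to parse the kernel release string. The sketch below does this in Python. The helper names are hypothetical, and passing the version check only means the kernel is new enough; the BBR module still has to be built and loaded.

```python
import platform
import re
from typing import Optional, Tuple


def kernel_version(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a release string like '4.4.0-142-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_can_offer_bbr(release: Optional[str] = None) -> bool:
    """True when the kernel is 4.9 or newer (defaults to the running kernel)."""
    if release is None:
        release = platform.release()
    return kernel_version(release) >= (4, 9)
```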

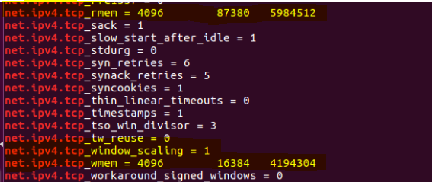

As an immediate fix, the TCP window scaling was changed. The 2nd factor which impacts network latency is the TCP receive window size. Tuning the TCP window size depends on the following four parameters.

- TCP window scaling factor

- Sender window size

- Receive window size

- TCP window size

Among them, the receive window size is the key one.
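To see why a scaling factor is needed at all: the window field in the TCP header is only 16 bits wide, so without scaling a window cannot exceed 65,535 bytes. With window scaling (RFC 7323) the advertised value is shifted left by a count of up to 14. The Python sketch below, with names of our own choosing, finds the smallest shift that can represent a desired window.

```python
MAX_UNSCALED_WINDOW = 65535  # largest value of the 16-bit TCP window field
MAX_SHIFT = 14               # largest shift count allowed by RFC 7323


def window_scale_shift(desired_window: int) -> int:
    """Return the smallest shift count able to advertise `desired_window` bytes."""
    if desired_window < 0:
        raise ValueError("window size cannot be negative")
    for shift in range(MAX_SHIFT + 1):
        if (MAX_UNSCALED_WINDOW << shift) >= desired_window:
            return shift
    raise ValueError("window is larger than TCP window scaling can express")
```

A window of 8k or 32k needs no scaling (shift 0), while a window of 2,000,000 bytes needs a shift of 5.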

TCP is well known for its reliability, which is achieved through acknowledgements (the three-way handshake only opens the connection).

- Sender sends the data.

- Receiver receives it.

- Receiver sends an acknowledgement back to the sender saying it has received the data.

The sender can only send as much data as fits within the receive window advertised by the receiver; it cannot send more even if it wants to. Moreover, once a full window of data is outstanding, the sender cannot send additional data until it receives an acknowledgement for the data already sent. If the receive window size is set to 0, the sender cannot send any data until it receives an acknowledgement from the receiver carrying a non-zero window size.
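The rule can be written as a small calculation. The Python sketch below is a simplified model with a hypothetical function name; it ignores the congestion window and looks only at the receive window.

```python
def sendable_bytes(receive_window: int, bytes_in_flight: int, bytes_pending: int) -> int:
    """Bytes the sender may transmit right now under receiver flow control.

    receive_window  : window size last advertised by the receiver
    bytes_in_flight : data sent but not yet acknowledged
    bytes_pending   : data the application still wants to send
    """
    room = max(0, receive_window - bytes_in_flight)
    return min(bytes_pending, room)
```

With a window of 8192 bytes and nothing in flight, a sender holding 20,000 bytes may send 8192 of them; once those are in flight, or if the window is 0, the result is 0 until an acknowledgement arrives.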

In our scenario, the receive size was set to a higher value appropriate for the network bandwidth, but the send size was small, just 8k. The TCP window size and the send size were both 8k.

On our servers, the Linux kernel by default set the receive size to twice the send size. This is done by the kernel itself.

So the receive size would have been 16k, but the individual parameters can also be tuned, so the receive size was set to 32k (the kernel takes the highest value as the final one).

The sender will only try to send 8k of data at a time even though the receiver has room to receive more. After a lot of experiments we tuned the sendbuf value to 32k. This reduced network latency drastically.
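For a single application, the buffer sizes can also be requested per socket. The Python sketch below uses the standard SO_SNDBUF and SO_RCVBUF socket options. It is an illustration only, with a helper name of our own, and not necessarily the way the buffers were tuned on our servers.

```python
import socket
from typing import Tuple


def set_buffers(sock: socket.socket, sndbuf: int, rcvbuf: int) -> Tuple[int, int]:
    """Request send/receive buffer sizes and return what the kernel reports."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, sndbuf)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, rcvbuf)
    effective_snd = sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
    effective_rcv = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    return effective_snd, effective_rcv
```

On Linux the reported values are usually double the requested ones, because the kernel reserves extra space for its own bookkeeping, and they are capped by the system-wide maximums.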

In layman's terms:

Sendbuf is the number of bytes the sender can send in one shot. Similarly, recvbuf is the number of bytes the receiver can receive at a single instance. The window size can be compared to a basket carrying data from sender to receiver. How much data it can carry depends on the basket size, which in turn depends on the bandwidth. That is why the size is calculated as the product of the bandwidth and the delay in sending data (the bandwidth-delay product).
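The basket calculation can be tried out with a few lines of Python. The functions below are a sketch with names of our own. The 100 Mbit/s link speed is an assumed figure for illustration, and the 160 ms delay is the value used in the test setup later in this article.

```python
def bdp_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> float:
    """Bandwidth-delay product: bytes needed in flight to keep the link full."""
    return bandwidth_bits_per_s * rtt_ms / 8000  # /1000 for ms, /8 for bits


def max_throughput_bytes_per_s(window_bytes: int, rtt_ms: int) -> float:
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 1000 / rtt_ms


ideal_window = bdp_bytes(100_000_000, 160)             # 2,000,000 bytes
with_8k = max_throughput_bytes_per_s(8 * 1024, 160)    # 51,200 bytes/s
with_32k = max_throughput_bytes_per_s(32 * 1024, 160)  # 204,800 bytes/s
```

Under these assumptions an 8k window caps the sender at about 51 KB/s however fast the link is, and moving to 32k raises that ceiling fourfold.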

A few details from the testing perspective:

We can manually add latency on an Ethernet interface using the network emulator (netem).

| tc qdisc add dev eth0 root netem delay 160ms |

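- tc is the traffic control utility (built into Linux; not available in Solaris).

- qdisc is the queueing discipline, the queue configuration that tc manages. Every device has one by default.

- add is the command for adding a qdisc.

- dev eth0 is the Ethernet device to add it to.

- root attaches it at the root of the device's outgoing queue, and netem selects the network emulator. The command itself has to be run with root permission.

- delay 160ms is the latency to introduce.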

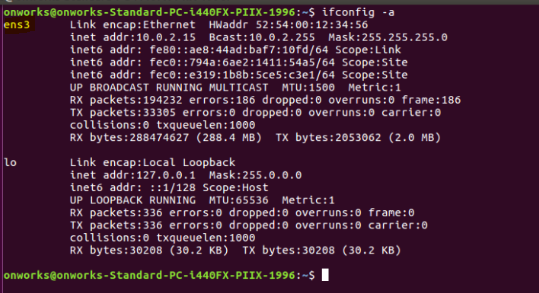

The image above shows how to find out which Ethernet interface is connected. Here, ens3 is to be used instead of eth0.

Similarly, to delete the delay:

| tc qdisc del dev eth0 root netem |
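When the delay has to be added and removed repeatedly during experiments, the two commands can be wrapped in a small Python helper. This is a sketch with hypothetical function names; it only builds and runs the same tc commands shown above, and it needs root permission to actually apply them.

```python
import subprocess
from typing import List


def netem_cmd(action: str, dev: str, delay_ms: int = 0) -> List[str]:
    """Build the tc command that adds or deletes a netem delay on `dev`."""
    if action not in ("add", "del"):
        raise ValueError("action must be 'add' or 'del'")
    cmd = ["tc", "qdisc", action, "dev", dev, "root", "netem"]
    if action == "add":
        if delay_ms <= 0:
            raise ValueError("delay_ms must be positive when adding a delay")
        cmd += ["delay", f"{delay_ms}ms"]
    return cmd


def apply_netem(action: str, dev: str, delay_ms: int = 0) -> None:
    """Run the tc command; raises CalledProcessError if tc reports a failure."""
    subprocess.run(netem_cmd(action, dev, delay_ms), check=True)
```

For example, apply_netem("add", "ens3", 160) adds the delay on ens3 and apply_netem("del", "ens3") removes it again.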

By setting latency and tuning the window parameters with sysctl, we can experiment and achieve the desired and appropriate values for our network.