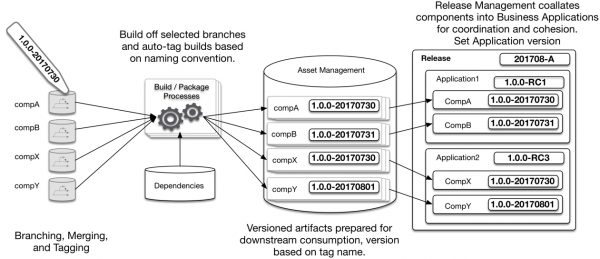

The diagram above is a generic representation of release management for a development organization. Typically, different development teams build individual, standalone pieces that go into a release; the diagram refers to each of these as a component. Each component has its own development lifecycle, source code repository, and (probably) its own versioning scheme. Each built component version lives in asset management as a possible release candidate. At release time, someone needs to know which version of each component is the correct one to push to the production environment for that release.

What’s the best way to deal with all this complexity?

Since Jenkins is by far the most popular build tool on the market and is used by almost all our customers, this article will reference Jenkins. We’re big proponents of using the right tool for the job at hand. While Jenkins is a really great build/continuous integration tool, it falls down very quickly as an enterprise release solution.

A typical organization has the following requirements for application deployments:

- Most important is an audit trail of when and what components were deployed to production (audit of lower environments must be kept as well)

- Component dependencies must be kept for a given release, i.e. which versions of which components must be deployed together

- You must also understand which component versions are the right ones to deploy for a given release, i.e. which versions have been completely tested and signed off for deployment to production

Forrester defines release management as “the definition, support, and enforcement of processes for transferring software to production”. Code is continually propagated through various environments in order to pass different levels of quality scrutiny, and typically only code that has passed every level is deemed worthy of deploying to production. It is therefore important for a release management solution to have an environment construct so that deployed artifacts can be tracked from an environment perspective. “Which versions of which components were deployed to DEV, QA, UAT, etc., and when?” and “What is currently deployed to PROD?” are simple questions to ask about release management, and they should be easy to answer. Also, when testing has been completed and passed for a given environment, there must be a mechanism to mark a component version accordingly (i.e. a metadata tag or property).
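To make the idea of an environment construct concrete, here is a minimal sketch in Python. It is not how any particular release management product works; the `Deployment` and `DeploymentLog` names, the component names, and the dates are all hypothetical and chosen only for illustration. It shows an append-only audit trail that can answer both questions: what was deployed to an environment over time, and what is there now.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Deployment:
    """One audit record: which version of which component went where, and when."""
    component: str
    version: str
    environment: str
    deployed_at: datetime


class DeploymentLog:
    """Hypothetical append-only audit trail, queryable by environment."""

    def __init__(self):
        self._events = []

    def record(self, component, version, environment, deployed_at=None):
        # Default to "now" in UTC when no timestamp is supplied.
        event = Deployment(component, version, environment,
                           deployed_at or datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def history(self, environment):
        """Every deployment to one environment, oldest first."""
        return sorted((e for e in self._events if e.environment == environment),
                      key=lambda e: e.deployed_at)

    def current(self, environment):
        """Latest deployed version of each component in one environment."""
        latest = {}
        for event in self.history(environment):
            latest[event.component] = event.version  # later events overwrite earlier ones
        return latest


# Illustrative data only.
log = DeploymentLog()
log.record("billing", "1.4.0", "QA", datetime(2024, 1, 8, tzinfo=timezone.utc))
log.record("billing", "1.4.1", "QA", datetime(2024, 1, 9, tzinfo=timezone.utc))
log.record("billing", "1.4.0", "PROD", datetime(2024, 1, 10, tzinfo=timezone.utc))
log.record("search", "2.0.3", "PROD", datetime(2024, 1, 10, tzinfo=timezone.utc))

print(log.current("PROD"))  # {'billing': '1.4.0', 'search': '2.0.3'}
print(log.current("QA"))    # {'billing': '1.4.1'}
```

The point of the sketch is that the environment is a first-class field on every record, so the answer comes from a query rather than from reading build logs.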

If you’re using a build tool like Jenkins to automate deployments, you can typically run the automation easily; however, the environment target is usually buried in a log file. One approach in Jenkins is to run a Jenkins build node on the deployment target, which makes it a little easier to look at the logs for that node for environment information. However, if you’re using Jenkins build pipelines, Jenkins does not currently display pipeline execution results within the node history, so it is still difficult to get an environment perspective of deployed code. Build tools were created to build application packages. If you follow the best practice of building once and then deploying that build to any target, you are not considering deployment targets at build time. Thus, most build tools do not effectively audit or manage deployment target environments.

Metadata is also king in release management. How do you keep track of which component versions go together for which application and which release, which versions of components have been successfully tested, which versions have stakeholder approval, and so on? In release management tools this is typically done with tags and some type of bill of materials (BoM) construct (sometimes called a “snapshot” or “dependency package”).
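As a rough illustration of tags plus a BoM, the Python sketch below models a release as a list of component versions, each carrying a set of tags, and checks whether every item has the tags required to ship. The class names, the tag names (`qa-passed`, `uat-passed`, `approved`), and the sample data are assumptions made for this example, not the schema of any specific tool.

```python
from dataclasses import dataclass, field


@dataclass
class ComponentVersion:
    component: str
    version: str
    tags: set = field(default_factory=set)  # e.g. test results, approvals


@dataclass
class BillOfMaterials:
    """Hypothetical BoM: the component versions that ship together in a release."""
    release: str
    items: list = field(default_factory=list)

    def missing_tags(self, required):
        """Map each component version to the required tags it still lacks."""
        gaps = {}
        for item in self.items:
            missing = set(required) - item.tags
            if missing:
                gaps[f"{item.component}:{item.version}"] = sorted(missing)
        return gaps

    def is_releasable(self, required):
        """True only when every item carries every required tag."""
        return not self.missing_tags(required)


REQUIRED = {"qa-passed", "uat-passed", "approved"}  # hypothetical tag names

bom = BillOfMaterials("2024.02", [
    ComponentVersion("billing", "1.4.1", {"qa-passed", "uat-passed", "approved"}),
    ComponentVersion("search", "2.0.3", {"qa-passed"}),
])

print(bom.is_releasable(REQUIRED))  # False
print(bom.missing_tags(REQUIRED))   # {'search:2.0.3': ['approved', 'uat-passed']}
```

With the metadata attached to the versions themselves, "what is ready to release?" becomes a lookup against the BoM instead of a manual reconciliation.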

An approach I’ve seen with Jenkins is to use an asset management tool like JFrog Artifactory to store artifact metadata, then use different Jenkins masters to control different environments, i.e. have a build Jenkins master, then have separate Jenkins masters for PROD deployment and/or UAT deployment and/or SIT deployment, etc. This is the only true way within Jenkins to have isolation and role-based security access on deployment environments. Here I’d also recommend a CloudBees version of Jenkins instead of open source Jenkins, as managing multiple open source Jenkins instances independently becomes tedious and resource-consuming.

If you’re using a build tool to run deployment automation scripts as opposed to a release management tool, the information that answers the previously mentioned questions about passed tests, approvals, which component versions were tested together, etc. is typically kept in obscure log files. For a small example, imagine that you have 5 applications for a release and each application has 3 components. Your teams have build automation where each component is built once an hour during work hours, and you have 2-week sprints. Those builds include tests and deployment scripts. If a release is just 1 sprint, that’s 1,200 build log files. If you’re not continuously and manually tracking your build/deploy jobs to record which versions passed which level of tests and which versions were tested together, then you’ll have a lot of log files to comb through on release day to figure out what should be released.
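The 1,200 figure can be reproduced with a few lines of Python. The article does not state the length of a workday or the number of working days in a sprint, so the values of 8 hours and 10 days below are assumptions; they are the ones that yield the quoted total.

```python
applications = 5
components_per_application = 3
builds_per_workday = 8    # assumption: one build per hour over an 8-hour workday
workdays_per_sprint = 10  # assumption: 2-week sprint with 5 workdays per week

components = applications * components_per_application
build_logs = components * builds_per_workday * workdays_per_sprint

print(components, build_logs)  # 15 1200
```

Changing any one input shows how quickly the log count scales with the number of components or the length of a release.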

Let me play devil’s advocate for a quick second. What if you’re developing microservices, where container version dependencies are decoupled from each other? You’d probably have a fairly straightforward build and deployment process for each microservice that could be easily automated in a Jenkins pipeline or a similar build/deploy solution. The system as a whole would be highly fault tolerant and horizontally scalable, which would eliminate the need for high-touch release management processes. Technical debt would quickly pass through this system with highly mature, automated deployment and validation. In an organization that practices true microservices application architecture, the need for a release management solution is greatly reduced or eliminated. For a multi-component/application/system architecture that is highly interdependent and requires any amount of coordination or orchestration across deployment, validation, and release-time activities, a CI tool alone will not cut it. Again, the reason is simply the number of hands-on activities that are still commonplace during a release.

Build automation tools are usually great at what they were created to do, i.e. BUILD. While a build tool could run automation scripts at release time, that doesn’t mean the tool is a good release management solution. Running automation scripts with a tool will ease some release pain and will provide you with a level of audit. However, as complexity and scale grow and as you must meet your organization’s release requirements, the need for a true release management solution becomes apparent.