On a recent project, I was faced with a requirement to scan the contents of a folder and load all the files into their respective staging tables. There were multiple file types – Customer file, Store file, Products file, Sales file, etc. Every day, we received zero to many files for each type of file. The information known at design time is the base file name for each file type and the format and frequency in which the files will arrive. The solution needed to be flexible so that it can handle multiple files in different formats arriving at any given frequency (daily/monthly/quarterly/yearly).

With that in mind, I created a master and detail table to store the file names and other information.

The FILE_MSTR table stores the metadata about the file type and has the following fields:

| FILE_MSTR_ID | FILE_BASE_NAME | FILE_EXT | FILE_FOLDER | FILE_FREQ | FILE_FORMAT | FILE_TGT_NAME | CREATE_DT | UPDATE_DT | FILE_ORDER | FILE_SERVER | FTP_USER_ID | FTP_PWD |

| 1 | data | .txt | /home/oracle/Desktop/aroy/files | Daily | Text | SRC_DATA.txt | 7/30/2014 | 7/30/2014 | 1 | ftp.***.com | ftpuser | **** |

Every new file type to be processed needs to be entered into the above table. As an example I have added one file type in the above table. See below guidelines for entering a new record in this table:

- FILE_MSTR_ID: Next available ID. The master Ids need to be in sequence.

- FILE_BASE_NAME: Base name of file (without the trailing datestamp). The ETL program will do a wildcard search with base name (E.g. BASE_FILE_NAME*.txt) and log the files in the FILE_DTL table

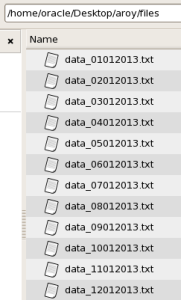

- FILE_FOLDER: This is the folder where the incoming files are expected to be dropped.

- FILE_FREQ: Frequency of the files

- FILE_FORMAT: File format

- FILE_TGT_NAME: This is the name of the file as defined under Models in ODI.

- CREATE_DT: Date when record was created

- UPDATE_DT: Date when record was last updated

- FILE_ORDER: Order in which the files need to be processed.

- FILE_SERVER: The server in which the file will be placed

- FTP_USER_ID: User ID

- FTP_PWD: Password

The FILE_DTL table is created empty to begin with:

| FILE_MSTR_ID | FILE_DTL_ID | DATE_SKEY | FILE_NAME | ODI_SESS_ID | CREATE_DT | UPDATE_DT |

| … | … | … | … | … | … | … |

I created a bunch of files with some test data in them:

Each file has three records and looks like this:

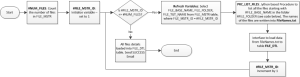

Once the files were ready, I created a package that loops through the FILE_MSTR table, and for each record, it lists all the files and loads the file name and other details into the FILE_DTL table. The looping is done using variables. The package follows the below logic:

The Jython code for the procedure PRC_LIST_FILES is:

import os

import fnmatch

ftrg = open('#FILE_FOLDER/FileNames.txt', 'w')

try:

mydir = '#FILE_FOLDER'

mylist = os.listdir(mydir)

for dirOrFile in mylist:

if fnmatch.fnmatch(dirOrFile, '#FILE_BASE_NAME'):

print >> ftrg, dirOrFile

finally:

ftrg.close()

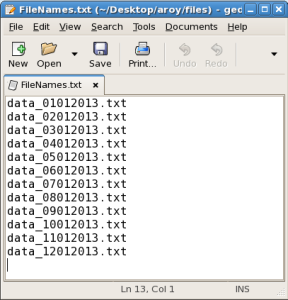

The PRC_LIST_FILES procedure creates a FileNames.txt, which looks like this:

After running the package, the FILE_DTL table gets loaded and looks like this:

| FILE_MSTR_ID | FILE_DTL_ID | DATE_SKEY | FILE_NAME | ODI_SESS_ID | CREATE_DT | UPDATE_DT |

| 1 | 1 | 20140730 | data_01012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 2 | 20140730 | data_02012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 3 | 20140730 | data_03012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 4 | 20140730 | data_04012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 5 | 20140730 | data_05012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 6 | 20140730 | data_06012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 7 | 20140730 | data_07012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 8 | 20140730 | data_08012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 9 | 20140730 | data_09012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 10 | 20140730 | data_10012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 11 | 20140730 | data_11012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

| 1 | 12 | 20140730 | data_12012013.txt | 1000001 | 7/30/2014 | 7/30/2014 |

Next step was to create another package that will loop through all the records in the FILE_DTL table, and load the data in the files into the target staging table:

Upon running the above package, data was loaded from all the files into the target staging table: