In Part 1, we had a brief introduction to social bookmarking sites and to the task. Let us now look at the approach we use here to predict the spammers.

THE APPROACH

Data Extraction & Data Cleaning

The dataset provided consists of bookmarks, tags and user ids, and comes in the form of a SQL dump; tools such as SQLyog and Toad can be used to create the final database. Because the dataset is skewed (25000 spammers to 2000 non-spammers) and large (close to 3.5 GB, or 3 million rows), it makes sense to run the data analysis on a random sample of 250 spammers and non-spammers. This reduces the data and gives an equal number of spammers and non-spammers.
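The balanced sampling step could be sketched as below. This is only an illustration: the `(user_id, is_spammer)` layout, the function name `balanced_sample`, and the reading of "250" as 250 users per class are assumptions, not details given by the dataset.

```python
import random


def balanced_sample(users, per_class=250, seed=42):
    """Draw the same number of spammers and non-spammers at random.

    `users` is a list of (user_id, is_spammer) pairs. The layout and the
    per-class size of 250 are assumptions made for this sketch.
    """
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    spammers = [u for u in users if u[1]]
    non_spammers = [u for u in users if not u[1]]
    # Never ask for more rows than the smaller class can supply.
    k = min(per_class, len(spammers), len(non_spammers))
    return rng.sample(spammers, k) + rng.sample(non_spammers, k)


# Toy population with the same 25000 : 2000 skew as the dataset.
users = [(i, True) for i in range(25000)]
users += [(i, False) for i in range(25000, 27000)]
sample = balanced_sample(users)
print(len(sample), "users sampled")
```

Sampling each class separately, rather than sampling the whole table at once, is what removes the skew: a plain random sample would still contain roughly twelve spammers for every non-spammer.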

Derived Attributes

Apart from the attributes provided in the dataset, a few derived attributes can be created to facilitate the process of data analysis.

- distance from the root – how far the bookmarked URL lies from the root directory of its site

- number of tags – the number of tags a bookmark has, and the number of times a user tries to associate a bookmark with a tag

- domain – shows how bookmarks are distributed across various domains / geographies.
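A minimal sketch of how these three attributes might be derived from a bookmark is given below. The exact definitions are assumptions: distance from the root is taken as the number of path segments in the URL, the domain as the last label of the host name, and the tag count as the number of distinct tags. The function names are hypothetical.

```python
from urllib.parse import urlparse


def distance_from_root(url):
    """Number of non-empty path segments after the host (assumed definition)."""
    path = urlparse(url).path
    return len([segment for segment in path.split("/") if segment])


def top_level_domain(url):
    """Last label of the host name, e.g. 'cn' or 'com'; empty if there is none."""
    host = urlparse(url).hostname or ""
    return host.rsplit(".", 1)[-1] if "." in host else ""


def tag_count(tags):
    """Number of distinct tags on a bookmark, ignoring case and blanks."""
    return len({t.strip().lower() for t in tags if t.strip()})


url = "http://www.example.cn/shop/deals/page.html"
print(distance_from_root(url), top_level_domain(url))
print(tag_count(["Python", "python ", "web"]))
```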

Text Mining

Text mining is growing as an essential method of knowledge discovery from general and business documents. The primary task here is to analyze the tags posted by the users. To perform the actual text mining, we can use a tool such as RapidMiner, or SimStat with QDA Miner and WordStat. The dataset is given as input and, using the categories of a dictionary such as WordNet, each tag from the dataset is assigned to a particular category. An important statistical measure obtained here is TF-IDF, which evaluates how important a word is to a document in a collection.
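To make the TF-IDF measure concrete, here is a small pure-Python sketch using one common formulation (term frequency normalised by document length, and an unsmoothed logarithmic inverse document frequency). Treating each user's tags as one "document" is an assumption for illustration, and the tools named above may use a different variant of the formula.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    tf  = occurrences of the term in the document / document length
    idf = log(number of documents / number of documents containing the term)
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each term once per document
    weights = []
    for doc in documents:
        counts = Counter(doc)
        total = len(doc)
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical tags, one list per user.
user_tags = [
    ["python", "programming", "tutorial"],
    ["cheap", "pills", "cheap", "buy"],
    ["python", "buy"],
]
for weights in tf_idf(user_tags):
    print(weights)
```

A tag that appears in every document gets a weight of zero under this formulation, so only tags that distinguish one user from the others carry weight.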

Modeling

Various models can be trained, such as Classification Trees, Logistic Regression and Neural Networks, but Neural Networks would be the preferred modeling approach in this case, as they learn the model iteratively over many passes through the training data. When partitioning the dataset, it is beneficial to use at least 60% of the data to train the model, as this should improve the accuracy of the model when predicting on the test data. Clementine can be used to perform the modeling task.
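The partitioning step can be sketched as follows. This is a generic shuffle-and-cut split with 60% of the rows used for training; the function name `partition_rows` is hypothetical and this is not how Clementine itself is driven.

```python
import random


def partition_rows(rows, train_fraction=0.6, seed=0):
    """Shuffle the rows and cut them into a training and a test partition."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    shuffled = list(rows)      # copy, so the caller's list is not reordered
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


rows = list(range(500))  # stand-in for 500 sampled users
train, test = partition_rows(rows)
print(len(train), "training rows,", len(test), "test rows")
```

Shuffling before cutting matters when the rows are ordered by class, as they would be if spammers and non-spammers were sampled separately and then concatenated.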

While training the model, it is important to emphasize that “a non-spammer should not be wrongly predicted as a spammer”, even if this means that some spammers are wrongly predicted as non-spammers.
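One simple way to express this preference, shown here only as an assumption and not necessarily what was done for this task, is to tune the decision threshold after training: take the model's spam scores and pick the lowest threshold that keeps the number of wrongly flagged non-spammers within a cap. The scores and labels below are made up for illustration.

```python
def confusion_counts(scores, labels, threshold):
    """Count outcomes when users scoring >= threshold are flagged as spammers."""
    tp = fp = tn = fn = 0
    for score, is_spammer in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_spammer:
            tp += 1
        elif flagged:
            fp += 1  # a non-spammer wrongly predicted as a spammer
        elif is_spammer:
            fn += 1  # a spammer that slipped through
        else:
            tn += 1
    return tp, fp, tn, fn


def pick_threshold(scores, labels, max_false_positives=0):
    """Lowest observed score usable as a threshold within the false-positive cap."""
    for threshold in sorted(set(scores)):
        _, fp, _, _ = confusion_counts(scores, labels, threshold)
        if fp <= max_false_positives:
            return threshold
    return float("inf")  # no threshold qualifies, so flag nobody


# Hypothetical model scores (higher means more spam-like) and true labels.
scores = [0.95, 0.9, 0.8, 0.6, 0.4, 0.2]
labels = [True, True, False, True, False, False]
threshold = pick_threshold(scores, labels, max_false_positives=0)
print(threshold, confusion_counts(scores, labels, threshold))
```

Raising the threshold can only reduce the number of false positives, so the first threshold that satisfies the cap is also the one that catches the most spammers under that cap.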

FINDINGS & RESULTS

The idea of eyeballing the data at the highest level is to see whether any interesting features really stand out. Some of them include:

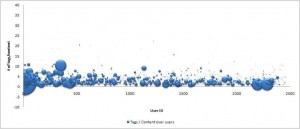

- A spammer is more likely to post a large number of spam bookmarks than a non-spammer is to bookmark a URL repeatedly. The accompanying image shows that the number of bookmarks posted by non-spammers is around 10 per content.

- From the distribution of domains across spammers, an important observation is that the .cn domain accounted for 7.9% of bookmarks for spammers, in contrast to 0.12% for non-spammers.

When this model was applied to the test data (consisting of 2000 users), an overall accuracy of 70% was obtained in predicting a spammer. But what is more important is that only 4 non-spammers were wrongly predicted as spammers!

In Part 3 of this series, we will look at some of the possible alternatives and newer trends to the approach discussed here.