Meet Erin Zapata, a Practice Director based in Chicago, Illinois, whose empowering leadership and commitment to professional development have made a difference for Perficient’s Microsoft Business Unit (BU). As a member of Perficient’s Women in Technology (WiT) Employee Resource Group (ERG), Erin is dedicated to creating a supportive and inclusive workplace that fosters meaningful connections. With her exceptional skills in team management and project delivery, she champions client success and encourages a collaborative spirit that inspires those around her.

In this People of Perficient profile, we’ll explore Erin’s expertise, passion for continuous learning, and remarkable career journey over the past 13 years at Perficient.

What is your role? Describe a typical day in the life.

I began my career with Perficient as a Senior Technical Consultant after a decade at a large consulting firm. Over the years, I progressed through various levels. About four years ago, I was promoted to Delivery Director. Most recently, I have taken on the role of Practice Director of Modern Work and Security. At each stage, I felt prepared and empowered to embrace new responsibilities, thanks to the invaluable lessons learned along the way. Every engagement, pursuit, and customer interaction has imparted knowledge that I carry forward into each new initiative.

As a Practice Director, I have a handful of responsibilities in various areas. I work with my team to develop, modify, and execute the strategic vision for our Microsoft Modern Work and Security practices. I help align that vision with business goals and market trends, while monitoring revenue and utilization. I oversee the project delivery of solutions, acting as a point of escalation for both internal and customer issues. From a team management perspective, I provide guidance to a team of skilled directors, consultants, architects, and developers. Lastly, I support pre-sales activities, collaborate with sales and marketing teams, and help pursuit teams through the sales cycle.

How do you explain your job to family, friends, or children?

When asked, “What do you do?” I typically say, “I help our sales organization sell Microsoft solutions, and our team helps our customers roll out Microsoft capabilities like Teams and SharePoint to their organizations.”

Whether big or small, how do you make a difference for our clients, colleagues, communities, or teams?

One of the most rewarding aspects of my role is fostering an environment where my team members can thrive. I am committed to ensuring that they are engaged in meaningful and enjoyable work, feel valued for their contributions, and receive the support they need to succeed. Their well-being and professional satisfaction are paramount, and I am dedicated to being their advocate.

What are your proudest accomplishments, personally and professionally? Any milestone moments at Perficient?

Personally, my greatest achievement is undoubtedly my children, of whom I am immensely proud. Family is always my top priority, and their well-being and happiness are essential.

Professionally, my most significant achievement was the successful delivery of an application for an exceptionally challenging project involving 86 consultants across 11 different BUs. I am incredibly proud of our consultants for going live with this application. It was a remarkable demonstration of Perficient’s collaboration and dedication, which showcased our commitment to excellence even in the face of daunting circumstances.

With Perficient’s mission statement in mind, why do we obsess over outcomes?

Our mission to obsess over client outcomes is rooted in our commitment to excellence and our belief that our clients’ success is our success.


What motivates you in your daily work?

I am inspired by the talent of our team and the collaborative environment we foster. The challenge of solving our customers’ complex problems keeps me engaged and passionate about what we’re accomplishing together. Knowing that our efforts make a meaningful difference for our clients drives my commitment each day.


What has your experience at Perficient taught you?

My experience at Perficient has taught me many valuable lessons, but two stand out. First, the importance of fostering a collaborative work environment where every team member feels valued and empowered, creating a positive workplace experience. Second, navigating complex challenges has taught me the significance of adaptability and maintaining a positive mindset.

What advice would you give to colleagues who are starting their career with Perficient?

My advice for new colleagues is to embrace continuous learning, stay curious, and ask questions. No one knows everything, and there are so many wonderful, bright people to learn from at Perficient who will help you grow in your career.

Why are you #ProudlyPerficient?

I am #ProudlyPerficient because of our commitment to excellence and innovation, as well as the amazing people I work with who keep me motivated and positive. I stay here because I feel supported by my leadership, and my colleagues are invested in my growth.


What’s something surprising people might not know about you or your background?

Many years ago, I was a nationally ranked figure skater.

What are you passionate about outside of work?

Outside of work, I am passionate about family and community. Right now, our family focus revolves around academics and sports. My sons play multiple sports, and my husband dedicates his time to serving on the board of a community youth sports organization and coaching our boys’ football teams. I love watching them play, be part of a team, thrive in school, and actively participate in our community. I make it a priority to never miss my kids’ sporting or school events. Being there for them is critical to achieving and feeling that work-life balance.

It’s no secret our success is because of our people. No matter the technology or time zone, our colleagues are committed to delivering innovative, end-to-end digital solutions for the world’s biggest brands, and we bring a collaborative spirit to every interaction. We’re always seeking the best and brightest to work with us. Join our team and experience a culture that challenges, champions, and celebrates our people.

Learn more about what it’s like to work at Perficient at our Careers page. See open jobs or join our talent community for career tips, job openings, company updates, and more!

Go inside Life at Perficient and connect with us on LinkedIn, YouTube, X, Facebook, and Instagram.

Abstract

We live in a time when automating processes is no longer a luxury, but a necessity for any team that wants to remain competitive. But automation has evolved. It is no longer just about executing repetitive tasks, but about creating solutions that understand context, learn over time, and make smarter decisions. In this blog, I want to show you how n8n (a visual and open-source automation tool) can become the foundation for building intelligent agents powered by AI.

We will explore what truly makes an agent “intelligent,” including how modern AI techniques allow agents to retrieve contextual information, classify tickets, or automatically respond based on prior knowledge.

I will also show you how to connect AI services and APIs from within a workflow in n8n, without the need to write thousands of lines of code. Everything will be illustrated with concrete examples and real-world applications that you can adapt to your own projects.

This blog is an invitation to go beyond basic bots and start building agents that truly add value. If you are exploring how to take automation to the next level, this journey will be of great interest to you.

Introduction

Automation has moved from being a trend to becoming a foundational pillar for development, operations, and business teams. But amid the rise of tools that promise to do more with less, a key question emerges: how can we build truly intelligent workflows that not only execute tasks but also understand context and act with purpose? This is where AI agents begin to stand out.

This blog was born from that very need. Over the past few months, I’ve been exploring how to take automation to the next level by combining two powerful elements: n8n (a visual automation platform) and the latest advances in artificial intelligence. This combination enables the design of agents capable of understanding, relating, and acting based on the content they receive—with practical applications in classification, search, personalized assistance, and more.

In the following sections, I’ll walk you through how these concepts work, how they connect with each other, and most importantly, how you can apply them yourself (without needing to be an expert in machine learning or advanced development). With clear explanations and real-world examples built with n8n, this blog aims to be a practical, approachable guide for anyone looking to go beyond basic automation and start building truly intelligent solutions.

What are AI Agents?

An AI agent is an autonomous system (software or hardware) that perceives its environment, processes information, and makes decisions to achieve specific goals. It does not merely react to basic events; it can analyze context, query external sources, and select the most appropriate action. Unlike traditional bots, intelligent agents integrate reasoning and sometimes memory, allowing them to adapt and make decisions based on accumulated experience (Wooldridge & Jennings, 1995; Cheng et al., 2024).

In the context of n8n, an AI agent translates into workflows that not only execute tasks but also interpret data using language models and act according to the situation, enabling more intelligent and flexible processes.

From Predictable to Intelligent: Traditional Bot vs. Context-Aware AI Agent

A traditional bot operates based on a set of rigid rules and predefined responses, which limits its ability to adapt to unforeseen situations or understand nuances in conversation. Its interaction is purely reactive: it responds only to specific commands or keywords, without considering the conversation’s history or the context in which the interaction occurs. In contrast, a context-aware artificial intelligence agent uses advanced natural language processing techniques and conversational memory to adapt its responses according to the flow of the conversation and the previous information provided by the user. This allows it to offer a much more personalized, relevant, and coherent experience, overcoming the limitations of traditional bots. Context-aware agents significantly improve user satisfaction, as they can understand intent and dynamically adapt to different conversational scenarios (Chen, Xu, & Wang, 2022).

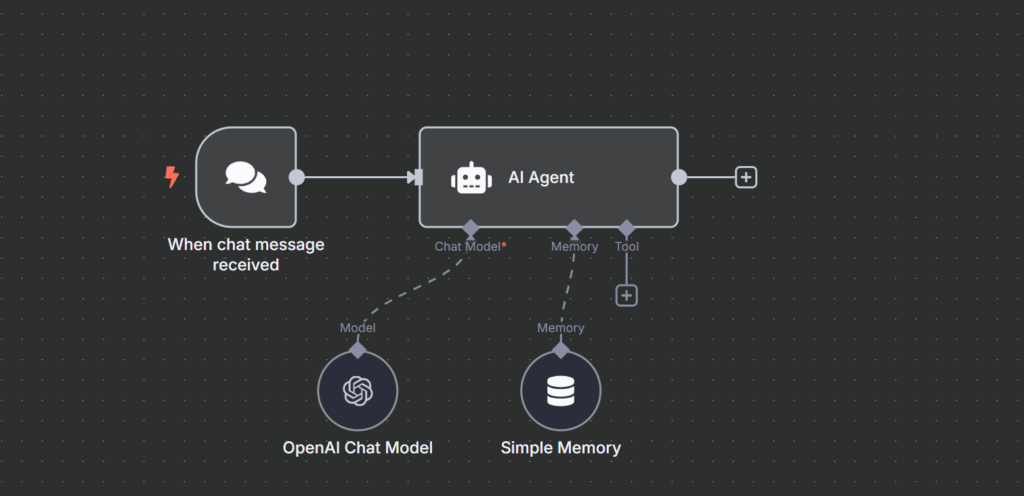

Figure 1: Architecture of an intelligent agent with hybrid memory in n8n (Dąbrowski, 2024).

How Does n8n Facilitate the Creation of Agents?

n8n is an open-source automation platform that enables users to design complex workflows visually, without the need to write large amounts of code. It simplifies the creation of intelligent agents by seamlessly integrating language models (such as OpenAI or Azure OpenAI), vector databases, conditional logic, and contextual memory storage.

With n8n, an agent can receive text input, process it using an AI model, retrieve relevant information from a vector store, and respond based on conversational history. All of this is configured through visual nodes within a workflow, making advanced solutions accessible even to those without a background in artificial intelligence.

Thanks to its modular and flexible design, n8n has become an ideal platform for building agents that not only automate tasks but also understand, learn, and act autonomously.

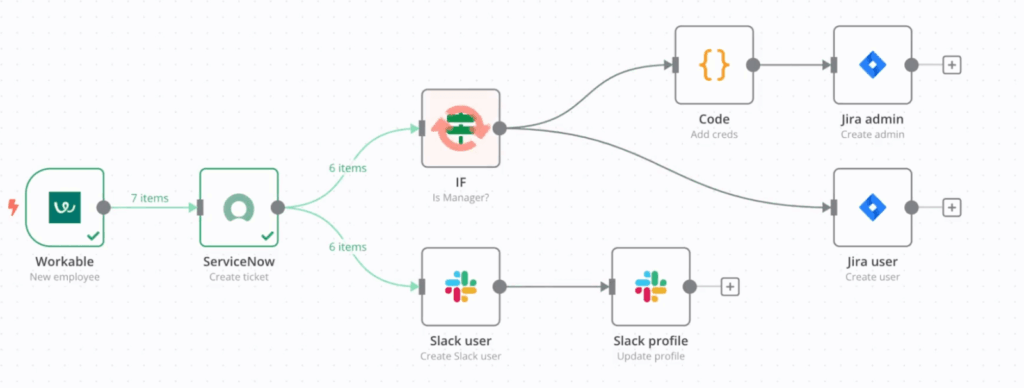

Figure 2: Automated workflow in n8n for onboarding and permission management using Slack, Jira, and ServiceNow (TextCortex, 2025).

Integrations with OpenAI, Python, External APIs, and Conditional Flows

One of n8n’s greatest strengths is its ability to connect with external tools and execute custom logic. Through native integrations, it can interact with OpenAI (or Azure OpenAI), enabling the inclusion of language models for tasks such as text generation, semantic classification, or automated responses.

Additionally, n8n supports custom code execution through Python or JavaScript nodes, expanding its capabilities and making it highly adaptable to different use cases. It can also communicate with any external service that provides a REST API, making it ideal for enterprise-level integrations.

Lastly, its conditional flow system allows for dynamic branching within workflows, evaluating logical conditions in real time and adjusting the agent’s behavior based on the context or incoming data.
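In n8n, these rules live in IF or Switch nodes and are configured visually rather than written as code. The Python function below is only a sketch of the equivalent decision logic, to make "branching on incoming data" concrete; the field names (`message`, `priority`) and branch names are hypothetical.

```python
def route(item: dict) -> str:
    """Return the branch an incoming item should follow, Switch-node style.

    Field names ("message", "priority") and branch names are hypothetical.
    """
    text = str(item.get("message", "")).lower()
    # Rules are evaluated in order; the first match wins.
    if item.get("priority") == "high":
        return "escalate_to_human"
    if any(word in text for word in ("invoice", "refund", "payment")):
        return "billing"
    # Everything else goes to the AI agent for a free-form answer.
    return "ai_agent"


print(route({"message": "I need a refund for my last invoice"}))  # billing
```

Keeping deterministic rules ahead of the agent means cheap, predictable cases never reach the language model.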

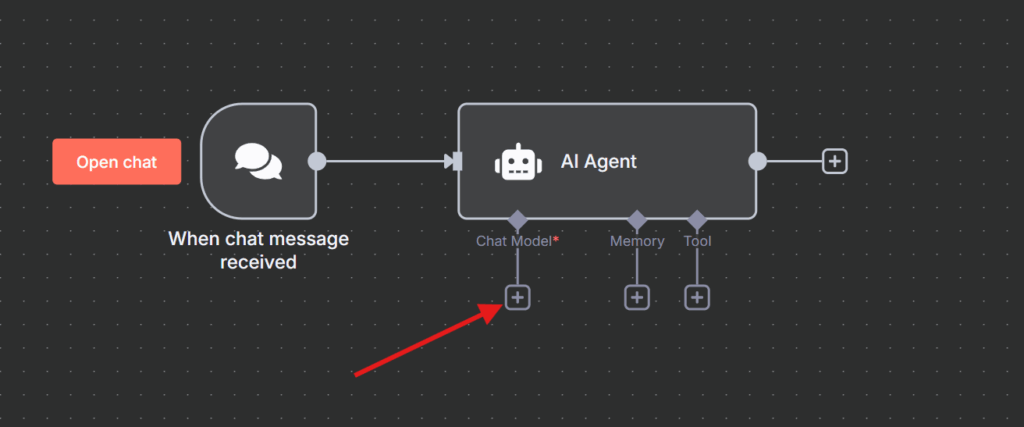

Figure 3: Basic conversational agent flow in n8n with language model and contextual memory.

This basic flow in n8n represents the core logic of a conversational intelligent agent. The process begins when a message is received through the “When chat message received” node. That message is then passed to the AI Agent node, which serves as the central component of the system.

The agent is connected to two key elements: a language model (OpenAI Chat Model) that interprets and generates responses, and a simple memory that allows it to retain the context or conversation history. This combination enables the agent not only to produce relevant responses but also to remember previous information and maintain coherence across interactions.

This type of architecture demonstrates how, with just a few nodes, it is possible to build agents with contextual behavior and basic reasoning capabilities—ideal for customer support flows, internal assistants, or automated conversational interfaces.
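The language model itself is stateless: "memory" works by replaying earlier turns alongside each new message. The minimal sketch below illustrates that idea, assuming the OpenAI-style `role`/`content` chat message format; `build_messages` is a hypothetical helper, not an n8n API.

```python
def build_messages(system_prompt: str, history: list, user_message: str, k: int = 5) -> list:
    """Assemble an OpenAI-style message list: system prompt, recent turns, new input.

    `history` is a list of (user_text, assistant_text) tuples from memory.
    """
    messages = [{"role": "system", "content": system_prompt}]
    # Replay only the last k exchanges; history[-0:] would return everything,
    # so k <= 0 is handled explicitly as "no history".
    recent = history[-k:] if k > 0 else []
    for user_turn, assistant_turn in recent:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    # The new message always goes last so the model answers it.
    messages.append({"role": "user", "content": user_message})
    return messages


history = [("My name is Ana.", "Nice to meet you, Ana!")]
for m in build_messages("You are a helpful support assistant.", history, "What is my name?"):
    print(m["role"], "->", m["content"])
```

Because the earlier exchange is resent, the model can answer "What is my name?" even though it retains nothing between calls.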

Before the agent can interact with users, it needs to be connected to a language model. The following shows how to configure this integration in n8n.

Configuring the Language Model in the AI Agent

As developers at Perficient, we have the advantage of accessing OpenAI services through the Azure platform. This integration allows us to leverage advanced language models in a secure, scalable manner, fully aligned with corporate policies, and facilitates the development of artificial intelligence solutions tailored to our needs.

One of the fundamental steps in building an AI agent in n8n is to define the language model that will be used to process and interpret user inputs. In this case, we use the OpenAI Chat Model node, which enables the agent to connect with advanced language models available through the Azure OpenAI API.

When configuring this node, n8n will require an access credential, which is essential for authenticating the connection between n8n and your Azure OpenAI service. If you do not have one yet, you can create it from the Azure portal by following these steps:

- Go to the Azure portal. If you do not yet have an Azure OpenAI resource, create one by selecting “Create a resource”, searching for “Azure OpenAI”, and following the setup wizard to configure the service with your subscription parameters. Then open the deployed resource.

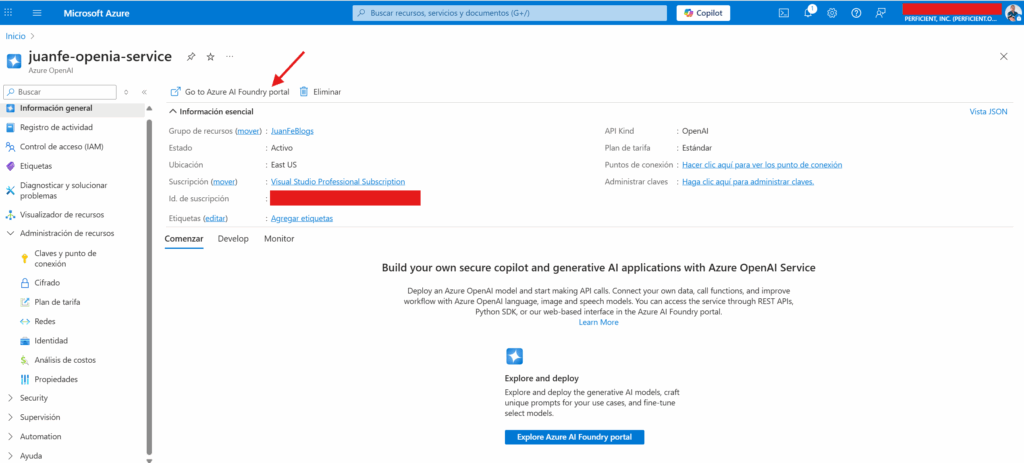

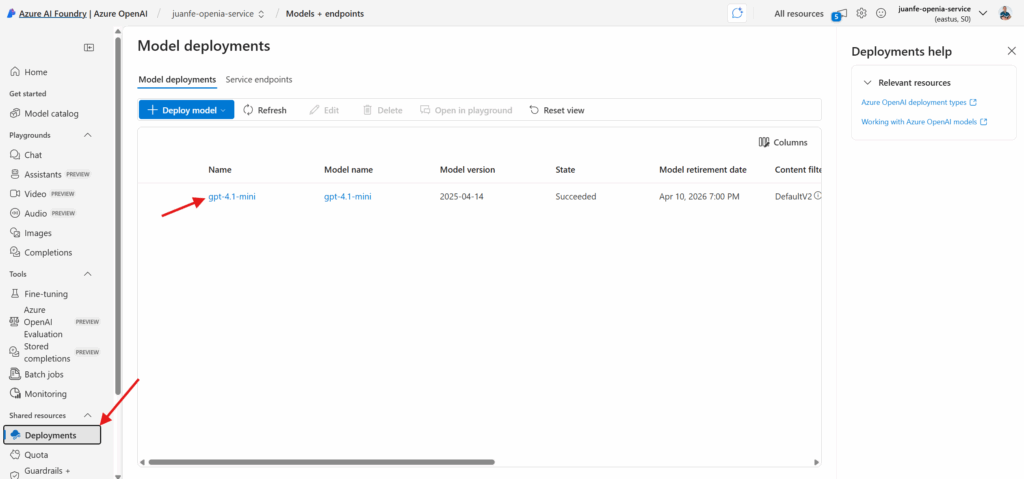

- Go to https://ai.azure.com and sign in with your Azure account. Select the Azure OpenAI resource you created and, from the side menu, navigate to the “Deployments” section. There, create a new deployment, selecting the language model you want to use (for example, GPT-3.5 or GPT-4) and assigning it a unique deployment name. Alternatively, click the “Go to Azure AI Foundry portal” option on the resource, as shown in the image.

Figure 4: Access to the Azure AI Foundry portal from the Azure OpenAI resource.

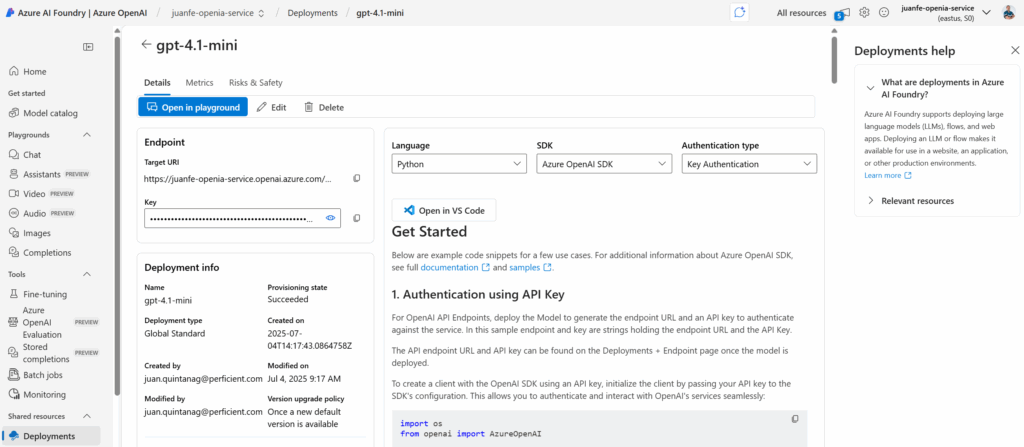

- Once the deployment is created, go to “API Keys & Endpoints” to copy the access key (API Key) and the endpoint corresponding to your resource.

Figure 5: Visualization of model deployments in Azure AI Foundry.

Once the model deployment has been created in Azure AI Foundry, it is essential to access the deployment details in order to obtain the necessary information for integrating and consuming the model from external applications. This view provides the API endpoint, the access key (API Key), as well as other relevant technical details of the deployment, such as the assigned name, status, creation date, and available authentication parameters.

This information is crucial for correctly configuring the connection from tools like n8n, ensuring a secure and efficient integration with the language model deployed in Azure OpenAI.

Figure 6: Azure AI Foundry deployment and credentialing details.


Step 1:

In n8n, you will select the “+ Create new credential” option in the model node’s configuration and enter the endpoint, the API key, and the deployment name you configured. First, however, create the AI Agent:

Figure 7: Chat with AI Agent.

Step 2:

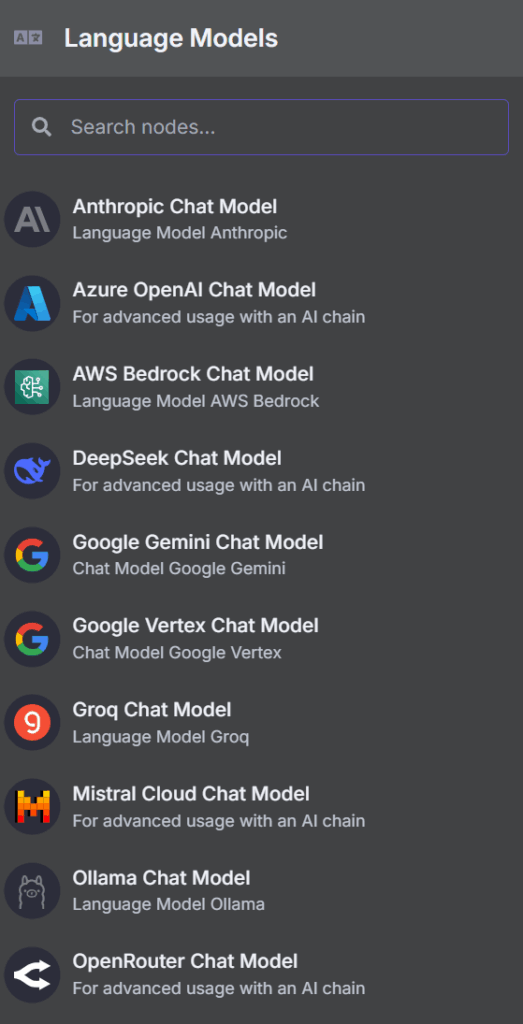

After the creation of the Agent, the model is added, as shown in the figure above. Within the n8n environment, integration with language models is accomplished through specialized nodes for each AI provider.

To connect our agent with Azure OpenAI services, it is necessary to select the Azure OpenAI Chat Model node in the Language Models section.

This node enables you to leverage the advanced capabilities of language models deployed in Azure, making it easy to build intelligent and customizable workflows for various corporate use cases. Its configuration is straightforward and, once properly authenticated, the agent will be ready to process requests using the selected model from Azure’s secure and scalable infrastructure.

Figure 8: Selection of the Azure OpenAI Chat Model node in n8n.

Step 3:

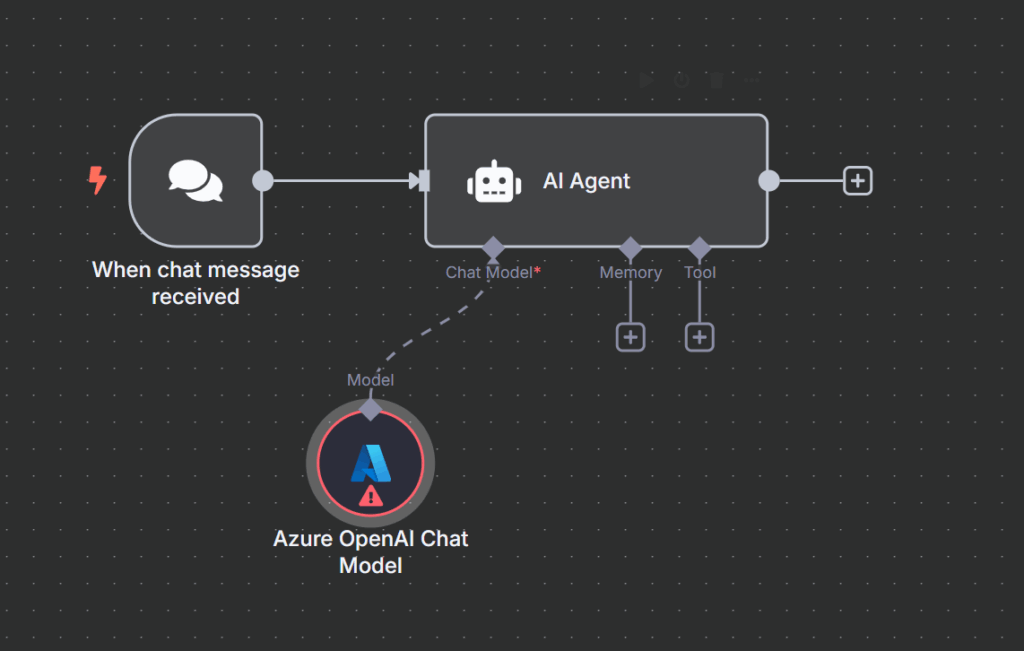

Once the Azure OpenAI Chat Model node has been selected, the next step is to integrate it into the n8n workflow as the primary language model for the AI agent.

The following image illustrates how this model is connected to the agent, allowing chat inputs to be processed intelligently by leveraging the capabilities of the model deployed in Azure. This integration forms the foundation for building more advanced and personalized conversational assistants in enterprise environments.

Figure 9: Selection of the Azure OpenAI Chat Model node in n8n.

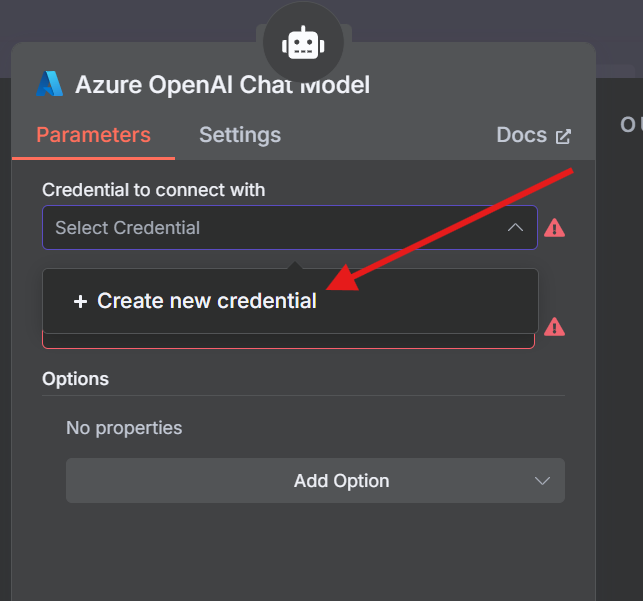

Step 4:

When configuring the Azure OpenAI Chat Model node in n8n, it is necessary to select the access credential that will allow the connection to the Azure service.

If a credential has not yet been created, you can do so directly from this panel by selecting the “Create new credential” option.

This step is essential to authenticate and authorize the use of language models deployed in Azure within your automation workflows.

Figure 10: Selection of the Azure OpenAI Chat Model node in n8n.

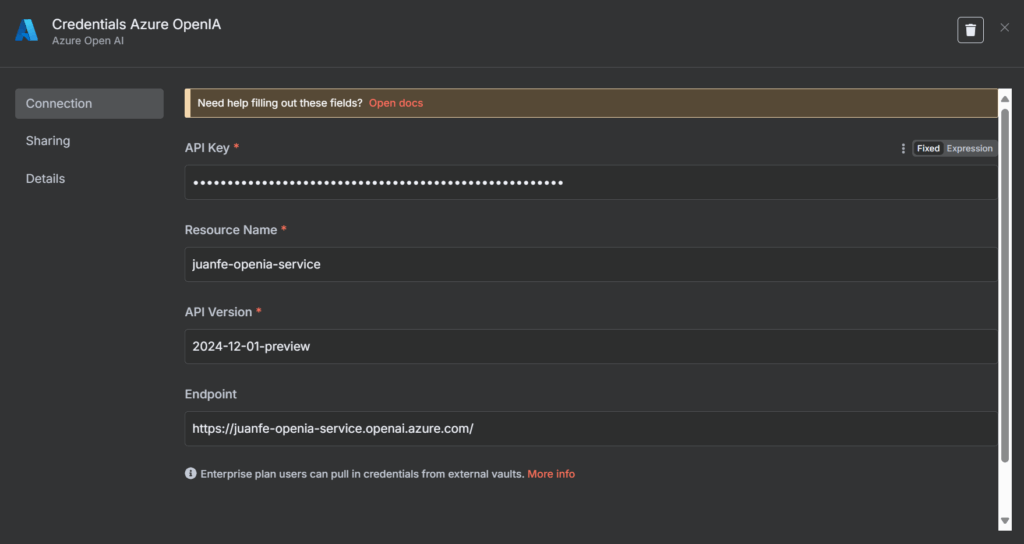

Step 5:

To complete the integration with Azure OpenAI in n8n, it is necessary to properly configure the access credentials.

The following screen shows the required fields, where you must enter the API Key, resource name, API version, and the corresponding endpoint.

This information ensures that the connection between n8n and Azure OpenAI is secure and functional, enabling the use of language models deployed in the Azure cloud.

Figure 11: Azure OpenAI credentials configuration in n8n.

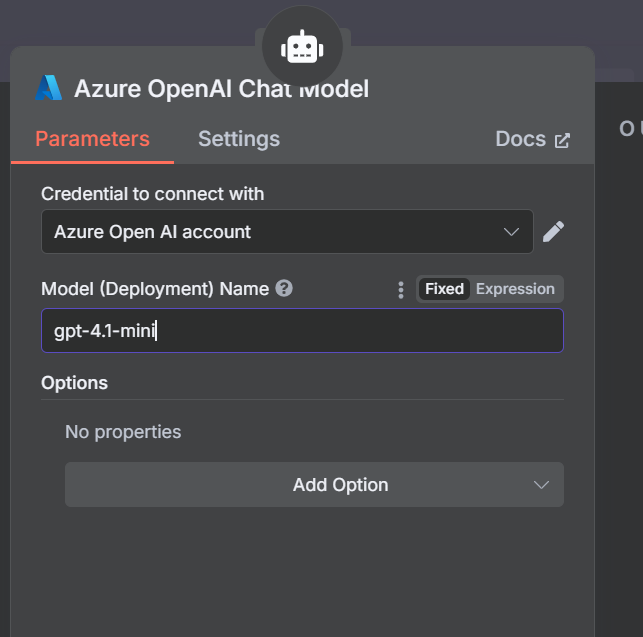

Step 6:

After creating and selecting the appropriate credentials, the next step is to configure the model deployment name in the Azure OpenAI Chat Model node.

In this field, enter exactly the name assigned to the model deployment in Azure, which allows n8n to use the deployed instance to process natural language requests. Remember to also select the model that matches your Azure OpenAI deployment, in this case gpt-4.1-mini:

Figure 12: Configuration of the deployment name in the Azure OpenAI Chat Model node.
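To see how the resource name, API version, API key, and deployment name fit together, here is a minimal sketch that assembles (but does not send) a chat-completions request against the Azure OpenAI REST API. It assumes the standard `https://<resource>.openai.azure.com` endpoint form and the `api-key` header; `my-resource`, `my-gpt-deployment`, and the API version are placeholders, not values from this walkthrough.

```python
import json
from urllib.request import Request


def build_chat_request(resource: str, deployment: str, api_version: str,
                       api_key: str, user_message: str) -> Request:
    """Build (without sending) an Azure OpenAI chat-completions request."""
    # The endpoint copied from the Azure portal usually has this form.
    endpoint = f"https://{resource}.openai.azure.com"
    # Azure routes requests by *deployment name*, not by model name.
    url = (f"{endpoint}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = {"messages": [{"role": "user", "content": user_message}]}
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values (hypothetical); substitute your own resource and deployment.
req = build_chat_request("my-resource", "my-gpt-deployment", "2024-02-01",
                         "<your-api-key>", "Hello!")
print(req.full_url)
```

This is also why the deployment name in n8n must match Azure exactly: it becomes part of the request path.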

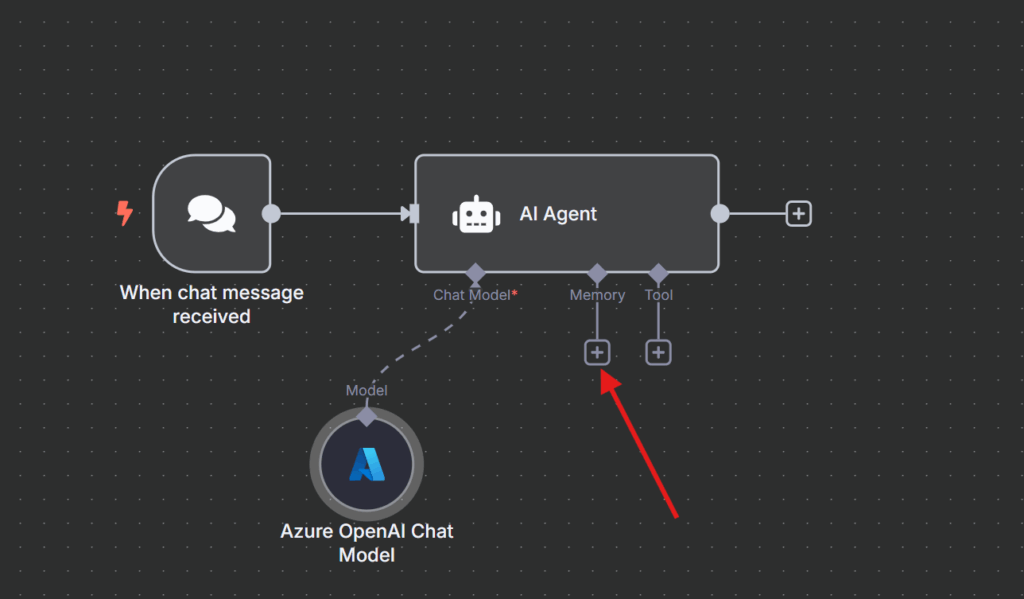

Step 7:

Once the language model is connected to the AI agent in n8n, you can enhance its capabilities by adding memory components.

Integrating a memory system allows the agent to retain relevant information from previous interactions, which is essential for building more intelligent and contextual conversational assistants.

In the following image, the highlighted area shows where a memory module can be added to enrich the agent’s behavior.

Figure 13: Connecting the memory component to the AI agent in n8n.

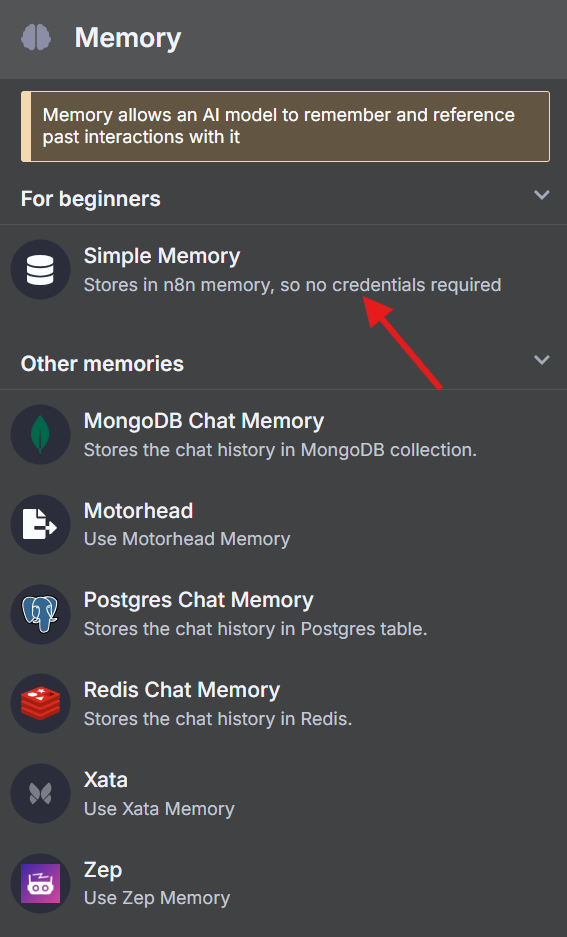

Step 8:

To start equipping the AI agent with memory capabilities, n8n offers different options for storing conversation history.

The simplest alternative is Simple Memory, which stores the data directly in n8n’s internal memory without requiring any additional credentials.

There are also more advanced options available, such as storing the history in external databases like MongoDB, Postgres, or Redis, which provide greater persistence and scalability depending on the project’s requirements.

Figure 14: Memory storage options for AI agents in n8n.

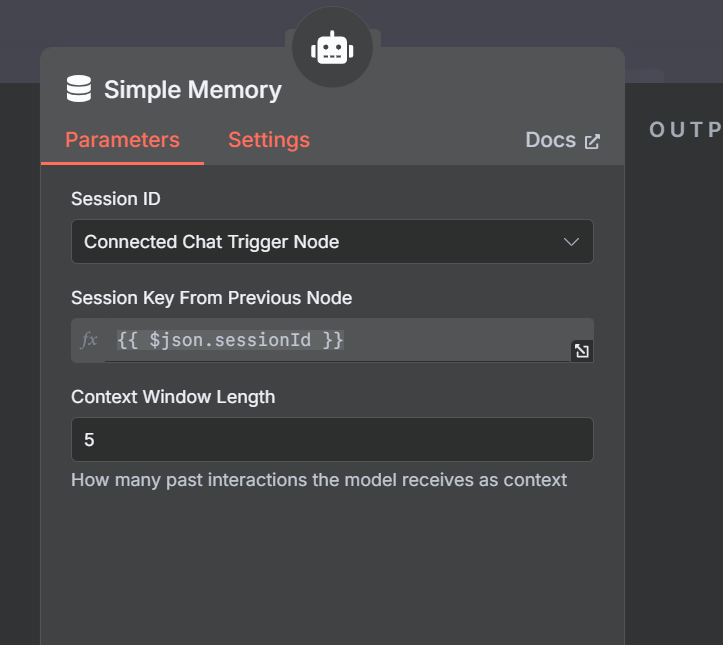

Step 9:

The configuration of the Simple Memory node in n8n allows you to easily define the parameters for managing the conversational memory of the AI agent.

In this interface, you can specify the session identifier, the field to be used as the conversation tracking key, and the number of previous interactions the model will consider as context.

These settings are essential for customizing information retention and improving continuity in the user’s conversational experience.

Figure 15: Memory storage options for AI agents in n8n.
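Conceptually, the session key and context window length behave like a dictionary of bounded queues: each session ID gets its own history, and only the most recent interactions are kept. The sketch below is an illustrative model of that behavior, assuming one "interaction" means one user message plus the agent’s reply; `WindowMemory` is a hypothetical name, not n8n’s implementation.

```python
from collections import defaultdict, deque


class WindowMemory:
    """Hypothetical stand-in for a sliding-window chat memory keyed by session ID."""

    def __init__(self, context_window: int = 5):
        if context_window < 1:
            raise ValueError("context_window must be at least 1")
        self.context_window = context_window
        # Each session gets its own deque; older turns fall off automatically.
        self._sessions = defaultdict(lambda: deque(maxlen=self.context_window))

    def save(self, session_id: str, user_msg: str, ai_msg: str) -> None:
        self._sessions[session_id].append((user_msg, ai_msg))

    def load(self, session_id: str) -> list:
        # Only the last `context_window` interactions are returned as context.
        return list(self._sessions[session_id])


memory = WindowMemory(context_window=2)
for i in range(3):
    memory.save("user-42", f"question {i}", f"answer {i}")
memory.save("user-7", "hi", "hello")
print(memory.load("user-42"))  # [('question 1', 'answer 1'), ('question 2', 'answer 2')]
```

Separate session IDs keep users from seeing each other’s context, and the window bounds how much history is sent to the model on every call.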

Step 10:

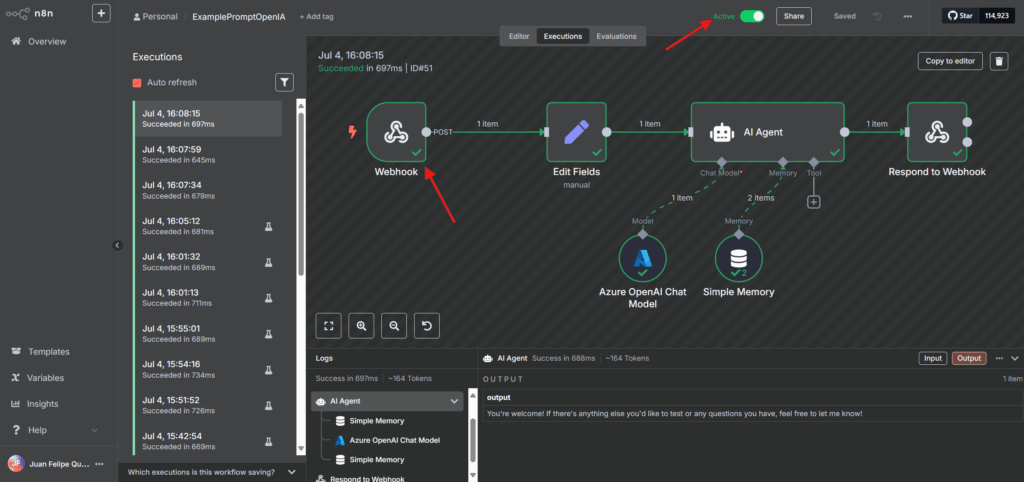

The following image shows the successful execution of a conversational workflow in n8n, where the AI agent responds to a chat message using a language model deployed on Azure and manages context through a memory component.

You can see how each node in the workflow performs its function and how the conversation history is stored, enabling the agent to provide more natural and contextual responses.

Figure 16: Execution of a conversational workflow with Azure OpenAI and memory in n8n.

Once a valid credential has been added and selected, the node will be ready to send requests to the chosen language model (such as GPT-3.5 or GPT-4) and receive natural language responses, allowing the agent to continue the conversation or execute actions automatically.

With this integration, n8n becomes a powerful automation tool, enabling use cases such as conversational assistants, support bots, intelligent classification, and much more.

Integrating the AI Agent into a Web Application Through a Webhook-Triggered n8n Workflow

Before integrating the AI agent into a web application, it is essential to have a ready-to-use n8n workflow that receives and responds to messages via a Webhook. Below is a typical workflow example where the main components for conversational processing are connected.

For the purposes of this blog, we will assume that both the Webhook node (which receives HTTP requests) and the Set/Edit Fields node (which prepares the data for the agent) have already been created. As shown in the following image, the workflow continues with the configuration of the language model (Azure OpenAI Chat Model), memory management (Simple Memory), processing via the AI Agent node, and finally, sending the response back to the user using the Respond to Webhook node.

Figure 17: n8n Workflow for AI Agent Integration with Webhook.
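The Set/Edit Fields step is essentially a mapping from the raw webhook payload to the fields the agent and memory nodes read. A minimal Python sketch of that mapping follows; it assumes the POST body arrives under a `body` key and carries `message` and `sessionId`, and the output name `chatInput` is likewise an assumption for illustration.

```python
def prepare_agent_input(webhook_item: dict) -> dict:
    """Flatten a webhook item into the fields the agent and memory read.

    Assumes the POST body sits under "body" with "message" and "sessionId";
    "chatInput" is a hypothetical output field name.
    """
    body = webhook_item.get("body") or {}
    message = str(body.get("message", "")).strip()
    session_id = str(body.get("sessionId", "")).strip()
    if not message or not session_id:
        # Rejecting early avoids mixing different users into one memory session.
        raise ValueError("Request body needs non-empty 'message' and 'sessionId'.")
    return {"chatInput": message, "sessionId": session_id}


print(prepare_agent_input({"headers": {}, "body": {"message": " Hi there ", "sessionId": "abc-123"}}))
```

Requiring a session ID, rather than defaulting to a shared one, keeps each caller’s conversation history separate.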

Before connecting the web interface to the AI agent deployed in n8n, it is essential to validate that the Webhook is working correctly. The following image shows how, using a tool like Postman, you can send an HTTP POST request to the Webhook endpoint, including the user’s message and the session identifier. As a result, the flow responds with the message generated by the agent, demonstrating that the end-to-end integration is functioning properly.

Figure 18: Testing the n8n Webhook with Postman.
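If you prefer a script to Postman, the same test can be sketched with Python’s standard library. This assumes a JSON body with `message` and `sessionId` fields and a hypothetical webhook path `chat` on n8n’s default local port 5678; adjust both to match your Webhook node.

```python
import json
import urllib.request


def build_webhook_request(url: str, message: str, session_id: str) -> urllib.request.Request:
    """Build a JSON POST like the Postman test (field names are assumptions)."""
    payload = {"message": message, "sessionId": session_id}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request; requires the n8n workflow to be listening at the URL."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # Assumes Respond to Webhook returns a JSON body.
        return json.loads(resp.read().decode("utf-8"))


req = build_webhook_request("http://localhost:5678/webhook/chat", "Hello, agent!", "session-001")
# reply = send(req)  # uncomment once the workflow is running
```

Reusing the same `sessionId` across calls is what lets the memory node link consecutive messages into one conversation.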


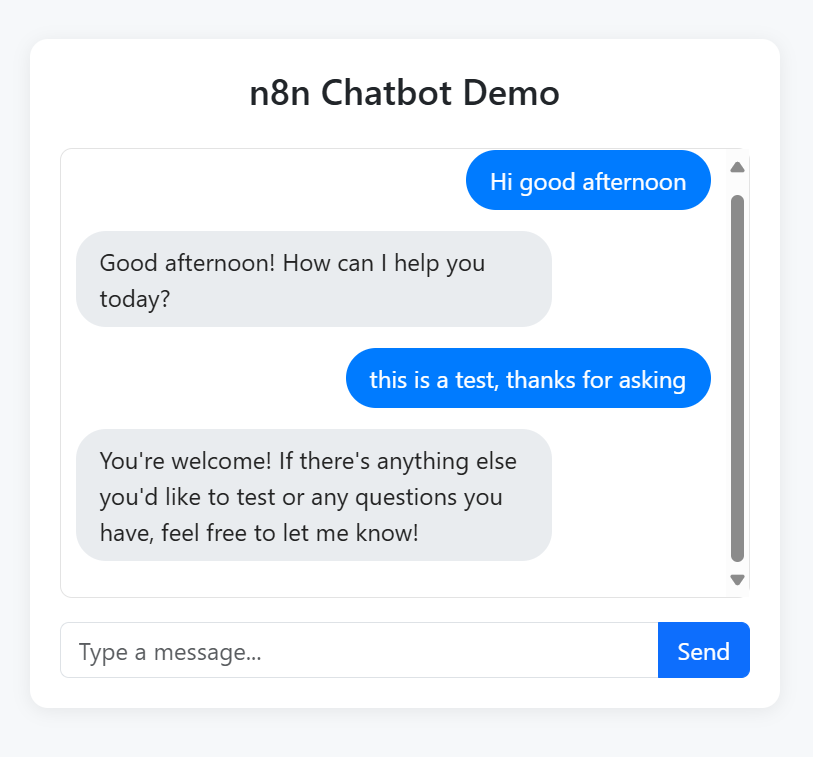

Successful test of the n8n chatbot in a web app: The following image shows the functional integration between a chatbot built in n8n and a custom web interface built with Bootstrap. Messages sent through the application receive responses from the AI agent deployed on Azure OpenAI in real time, enabling a seamless, fully automated conversational experience directly from the browser.

Figure 19: n8n Chatbot Web Interface Working with Azure OpenAI.


Before consuming the agent from a web page or an external application, make sure the flow in n8n is activated. As shown in the image, the “Active” button must be enabled (green) so that the webhook works continuously and can receive requests at any time. Additionally, when deploying to a production environment, you must replace “localhost” in the webhook URL with the appropriate public address so the flow can be reached externally.

Figure 20: Activation and Execution Tracking of the Flow in n8n.
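n8n exposes two URLs per Webhook node: a test URL under `/webhook-test/` that only responds while the editor is listening for a test event, and a production URL under `/webhook/` that responds once the workflow is active. A client can keep the base address configurable so that moving from `localhost` to a public address is a configuration change rather than a code change. This is a sketch; `N8N_BASE_URL` and `webhook_url` are hypothetical client-side names.

```python
import os
from typing import Optional


def webhook_url(path: str, production: bool = True, base_url: Optional[str] = None) -> str:
    """Build an n8n webhook URL; N8N_BASE_URL is a hypothetical client setting."""
    base = base_url or os.environ.get("N8N_BASE_URL", "http://localhost:5678")
    # Test URLs only respond while the editor is listening for a test event;
    # production URLs respond once the workflow is active.
    prefix = "webhook" if production else "webhook-test"
    return f"{base.rstrip('/')}/{prefix}/{path.lstrip('/')}"


print(webhook_url("chat", base_url="https://n8n.example.com/"))  # https://n8n.example.com/webhook/chat
```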


Conclusions

Intelligent automation is essential for today’s competitiveness

Automating tasks is no longer enough; integrating intelligent agents allows teams to go beyond simple repetition, adding the ability to understand context, learn from experience, and make informed decisions to deliver real value to business processes.

Intelligent agents surpass the limitations of traditional bots

Unlike classic bots that respond only to rigid rules, contextual agents can analyze the flow of conversation, retain memory, adapt to changing situations, and offer personalized and coherent responses, significantly improving user satisfaction.

n8n democratizes the creation of intelligent agents

Thanks to its low-code/no-code approach and more than 400 integrations, n8n enables both technical and non-technical users to design complex workflows with artificial intelligence, without needing to be experts in advanced programming or machine learning.

The integration of language models and memory in n8n enhances conversational workflows

Easy connection with advanced language models (such as Azure OpenAI) and the ability to add memory components make n8n a flexible and scalable platform for building sophisticated and customizable conversational agents.

Proper activation and deployment of workflows ensures the availability of AI agents

To consume agents from external applications, it is essential to activate workflows in n8n and use the appropriate production endpoints, thus ensuring continuous, secure, and scalable responses from intelligent agents in real-world scenarios.

References

- Wooldridge, M., & Jennings, N. R. (1995). Intelligent agents: Theory and practice. The Knowledge Engineering Review, 10(2), 115–152.

- Cheng, Y., Zhang, C., Zhang, Z., Meng, X., Hong, S., Li, W., Zhao, J. (2024). Exploring Large Language Model based Intelligent Agents: Definitions, Methods, and Prospects. arXiv.

- Chen, C., Xu, Y., & Wang, Z. (2022). Context-Aware Conversational Agents: A Review of Methods and Applications. IEEE Transactions on Artificial Intelligence, 3(4), 410–425.

- Zamani, H., Sadoughi, N., & Croft, W. B. (2023). Intelligent Workflow Automation: Integrating Memory-Augmented Agents in Business Processes. Journal of Artificial Intelligence Research, 76, 325–348.

- Dąbrowski, D. (2024). Day 67 of 100 Days Agentic Engineer Challenge: n8n Hybrid Long-Term Memory. Medium. https://damiandabrowski.medium.com/day-67-of-100-days-agentic-engineer-challenge-n8n-hybrid-long-term-memory-ce55694d8447

- n8n. (2024). Build your first AI Agent – powered by Google Gemini with memory. https://n8n.io/workflows/4941-build-your-first-ai-agent-powered-by-google-gemini-with-memory

- Luo, Y., Liang, P., Wang, C., Shahin, M., & Zhan, J. (2021). Characteristics and challenges of low code development: The practitioners’ perspective. arXiv. http://dx.doi.org/10.48550/arXiv.2107.07482

- TextCortex. (2025). N8N Review: Features, pricing & use cases. Cybernews. https://cybernews.com/ai-tools/n8n-review/

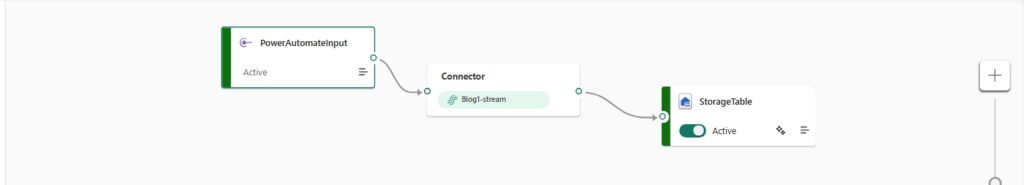

In this blog, we’ll walk through how to use Eventstream in Microsoft Fabric to capture events triggered by Power Automate and store them in a Lakehouse table. Whether you’re building dashboards, triggering insights, or analyzing user interactions, this integration provides a powerful way to bridge business logic with analytics.

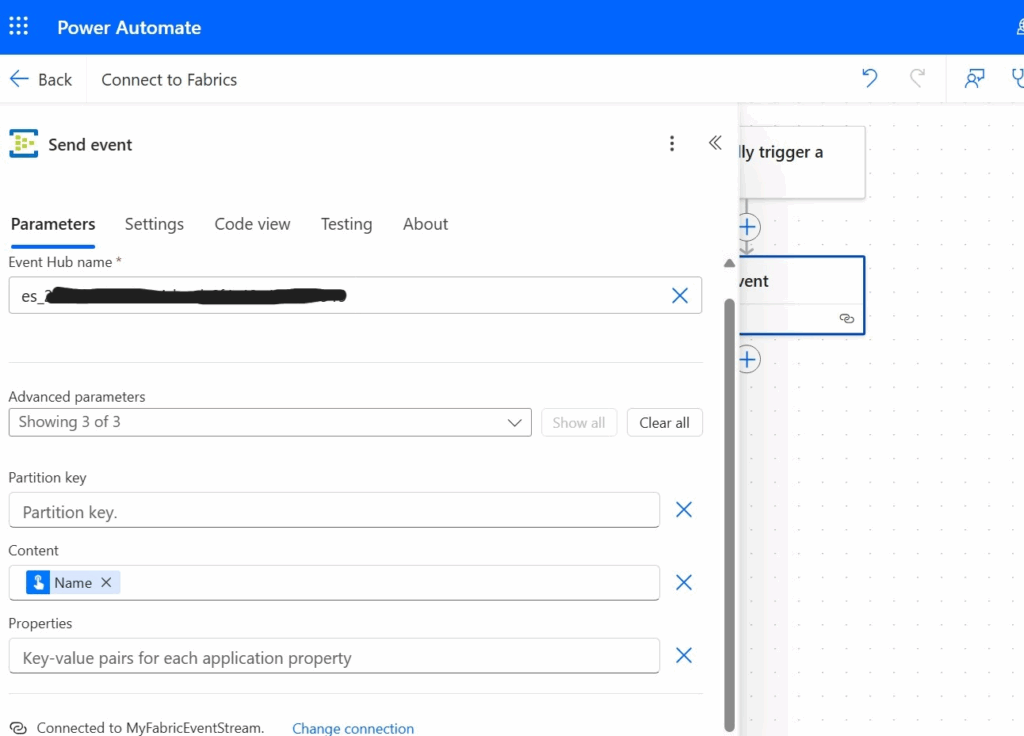

1. Create a Power Automate Flow to Post Data

Start by creating a Power Automate flow. Here’s what your flow should look like:

Input

You can choose any input you like. In this example, we’re using “Name”.

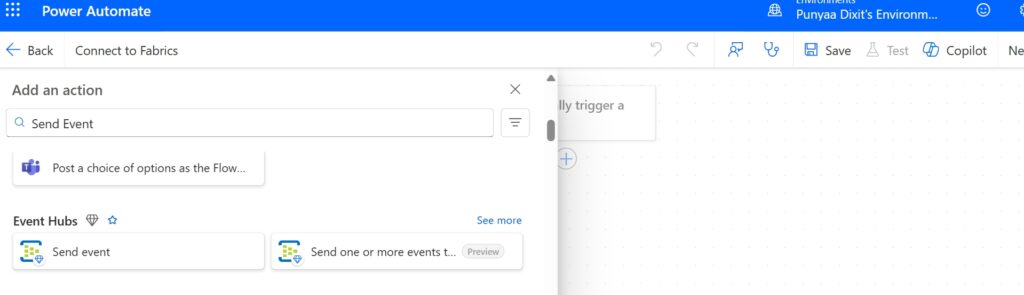

2. Choose the “Send Event” Action

Add the Azure Event Hubs “Send event” action to your flow.

Leave the flow as it is for now and move on to Microsoft Fabric.

3. Set Up Microsoft Fabric

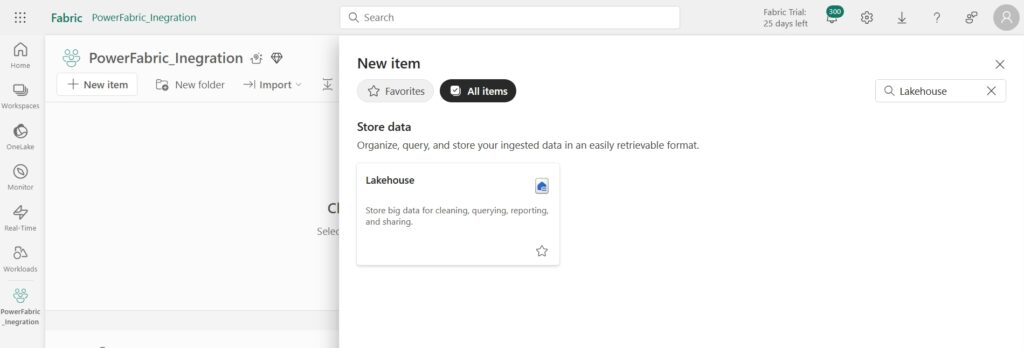

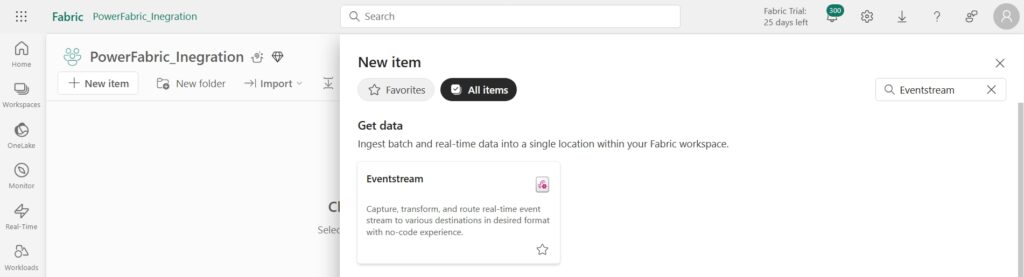

Go to https://app.fabric.microsoft.com, create a new Workspace (name it as you wish), then:


- Create a Lakehouse (using the + New Item button)

- Add an Eventstream (select Get Data)

In the Eventstream, choose Custom Endpoints.

4. Configure the Input and Publish

- Give your input a name of your choice.

- Click Publish.

After publishing, your input will be updated.

Go to Details, then to SAS Key Authentication, and copy the Event Hub Name and Primary Connection String.
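Because Power Automate will not fill in the Event Hub name dynamically, it can help to confirm it against the copied connection string. Event Hubs-style connection strings are `;`-separated `key=value` pairs, and an entity-scoped one typically ends with an `EntityPath` segment holding the event hub name. A minimal parser, assuming that format (the sample values below are fake):

```python
def parse_connection_string(conn_str: str) -> dict:
    """Split an Event Hubs-style connection string into its key/value parts."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        # Split on the first '=' only: base64 keys often end with '='.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"Malformed segment: {segment!r}")
        parts[key.strip()] = value.strip()
    return parts


sample = ("Endpoint=sb://example.servicebus.windows.net/;"
          "SharedAccessKeyName=key_demo;SharedAccessKey=abc123=;EntityPath=es_demo")
print(parse_connection_string(sample)["EntityPath"])  # es_demo
```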

5. Connect Power Automate to Microsoft Fabric

Return to Power Automate and:

- Use your Workspace Name to form a connection.

- Paste the Primary Connection String and click Create.

- Manually enter the Event Hub Name (it won’t appear dynamically).

Click Save to complete the connection.


Make sure to enter the data you want to pass (e.g., Name) in the Content field.
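If you want to check the Eventstream-to-Lakehouse path independently of Power Automate, a small Python producer can post the same kind of payload. This is a sketch assuming the `azure-eventhub` package (`pip install azure-eventhub`) and the connection string and Event Hub name copied earlier; the `Name` field mirrors this example’s flow input, and `build_event`/`send_events` are hypothetical names.

```python
import json


def build_event(name: str) -> str:
    """Serialize one event as JSON, mirroring the flow's "Name" input."""
    return json.dumps({"Name": name})


def send_events(conn_str: str, eventhub_name: str, names: list) -> None:
    """Send one batch of events to the Eventstream custom endpoint."""
    # Imported lazily so build_event works without the SDK installed.
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        conn_str, eventhub_name=eventhub_name)
    with producer:
        batch = producer.create_batch()
        for name in names:
            batch.add(EventData(build_event(name)))
        producer.send_batch(batch)


print(build_event("Ana"))
# send_events("<primary-connection-string>", "<event-hub-name>", ["Ana", "Luis"])
```

Sending well-formed JSON with stable keys makes it easier for the Lakehouse destination to map each property to a table column.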

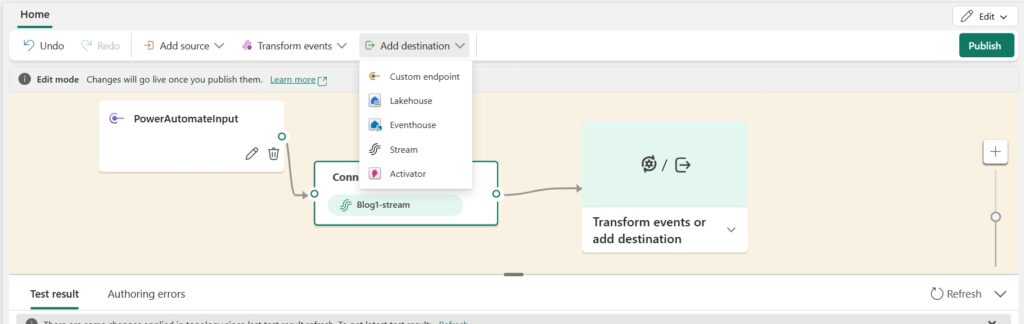

6. Set the Destination in Lakehouse

- Click on Lakehouse and connect it to your Workspace.

- For the table:

- Click Create New under the Delta Table option, or

- Create a table directly in the Lakehouse.

- Note: If data isn’t transferring, try creating the table in the Lakehouse first, then form the connection in Eventstream.

7. Finalize the Connection

- Form the connection.

- Click Publish.

And that’s it! Your data will now be stored in the Lakehouse.

Conclusion

Connecting Power Automate to Microsoft Fabric using Eventstream provides a robust and efficient solution for real-time data integration. Looking ahead, this setup can be extended to include:

- Advanced analytics with notebooks

- Real-time Power BI dashboards

- Integration with machine learning models

This unlocks deeper insights and intelligent automation across business processes.

AI this, AI that. It seems like everyone is trying to shoehorn AI into everything, even where it doesn’t make sense. Many of the use cases I come across online are either not a fit for AI or could easily be done without it. However, below I explore a use case that is not only a good fit but also greatly accelerated by AI.

The Use Case

In the retail world, sometimes you have products that don’t seem to sell well even though they might be very similar to another product that does. Being able to group these products and analyze them as a cohort is the first useful step in understanding why.

The Data and Toolset

For this particular exercise, I will be using a retail sales dataset from Zara that I found on Kaggle. It contains information about sales as well as descriptions of the items.

The tools I will be using are:

- Ollama (to run LLMs locally)

- Gemma3 LLM

- Python

- Pandas

- LangChain

High-level actions

I spend a lot of my time designing solutions, and one thing I’ve learned is that creating a high-level workflow is crucial in the early stages of solutioning. It allows for quick critique, communication, and change, if needed. This particular solution is not very complex; nevertheless, below are the high-level actions we will be performing.

- Load the CSV data into memory using Pandas.

- Create a vector store to store our embeddings.

- Embed the descriptions of the products.

- Modify the Pandas dataframe to accommodate the results we want to get.

- Create a template that will be sent to the LLM for analysis.

- Process each product on its own:

  - Get a list of comparable products based on the description. (This is where we leverage the LLM.)

  - Capture the comparable products.

  - Rank the comparable products based on sales volume.

- Output the data to a new CSV.

- Load the CSV into Power BI for visualization.

- Add thresholding and filters.
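The embed-and-compare steps above can be illustrated without any external services. The post uses Ollama, Gemma3, and LangChain; this sketch instead swaps in a toy bag-of-words "embedding" and cosine similarity so it runs with only the Python standard library. The class and function names are hypothetical, and the sketch covers retrieval only, not the LLM step that picks comparables.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a real embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal vector store keyed by product ID."""

    def __init__(self):
        self._items = []  # list of (product_id, vector)

    def add(self, product_id: int, description: str) -> None:
        self._items.append((product_id, embed(description)))

    def similar(self, description: str, k: int = 5, exclude=None) -> list:
        """Return up to k product IDs, most similar first, skipping `exclude`."""
        query = embed(description)
        scored = [(cosine(query, vec), pid) for pid, vec in self._items if pid != exclude]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [pid for _, pid in scored[:k]]


store = InMemoryVectorStore()
store.add(1, "Puffer jacket made of tear-resistant fabric")
store.add(2, "Lightweight puffer jacket with hood")
store.add(3, "Stretch overshirt with pockets")
print(store.similar("Puffer jacket made of tear-resistant fabric", k=2, exclude=1))  # [2, 3]
```

A real embedding model captures meaning rather than shared words, which is why the post relies on one; the store and search loop have the same shape either way.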

The Code

All of the code for this exercise can be found here

The Template

Creating a template to send to the LLM is crucial. You can play around with it to see what works best and modify it to fit your scenario. What I used was this:

template = """
You are an expert business analyst that specializes in retail sales analysis.
The data you need is provided below. It is in dictionary format including:
"Product Position": Where the product is positioned within the store,
"Sales Volume": How many units of a given product were sold,
"Product Category": The category for the product,
"Promotion": Whether or not the product was sold during a promotion.
There is additional information such as the name of the product, price, description, and more.
Here is all the data you need to answer questions: {data}
Here is the question to answer: {question}
When referencing products, add a list of the Product IDs at the end of your response in the following format: 'product_ids = [<id1>, <id2>, ... ]'.
"""

When we iterate, we will use the following as the question:

question = f"Look for 5 products that loosely match this description: {product['description']}?"

The output

Once Python iterates over all the products, we get something like this:

| Product ID | Product Name | Product Description | Sales Volume | Comparable Product 1 | Comparable Product 2 | … | Group Ranking |

|---|---|---|---|---|---|---|---|

| 185102 | BASIC PUFFER JACKET | Puffer jacket made of tear-resistant… | 2823 | 133100 | 128179 | … | 1 |

| 187234 | STRETCH POCKET OVERSHIRT | Overshirt made of stretchy fabric…. | 2575 | 134104 | 182306 | … | 0.75 |

| … | … | … | … | … | … | … | … |
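The template asks the model to end its answer with a product_ids = [...] line, so the IDs can be parsed back out of each response. The post does not say how Group Ranking is computed. The sketch below assumes a hypothetical scheme: the best seller in a group scores 1.0, the worst scores 0.0, and positions in between are evenly spaced. Function names are illustrative.

```python
import ast
import re

# Matches the trailing "product_ids = [...]" line the template asks for.
_IDS_PATTERN = re.compile(r"product_ids\s*=\s*(\[[^\]]*\])")


def extract_product_ids(response: str) -> list:
    """Return the IDs from the last 'product_ids = [...]' in the reply, or [] if unusable."""
    matches = _IDS_PATTERN.findall(response)
    if not matches:
        return []
    try:
        return [int(i) for i in ast.literal_eval(matches[-1])]
    except (ValueError, SyntaxError, TypeError):
        return []  # the model returned something that isn't a list of IDs


def group_ranking(sales_by_id: dict, product_id: int, comparable_ids: list) -> float:
    """Hypothetical score: 1.0 for the group's top seller, 0.0 for its lowest seller."""
    group = [product_id] + [c for c in comparable_ids if c in sales_by_id and c != product_id]
    if len(group) < 2:
        return 1.0  # nothing to compare against
    ordered = sorted(group, key=lambda pid: sales_by_id[pid], reverse=True)
    return 1 - ordered.index(product_id) / (len(group) - 1)


reply = "These items are similar...\nproduct_ids = [133100, 128179]"
print(extract_product_ids(reply))  # [133100, 128179]

sales = {1: 100, 2: 300, 3: 200, 4: 50, 5: 400}
print(group_ranking(sales, 2, [1, 3, 4, 5]))  # 0.75
```

Low scores then map directly to the "ranks low within their group" highlighting used in Power BI.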

Power BI

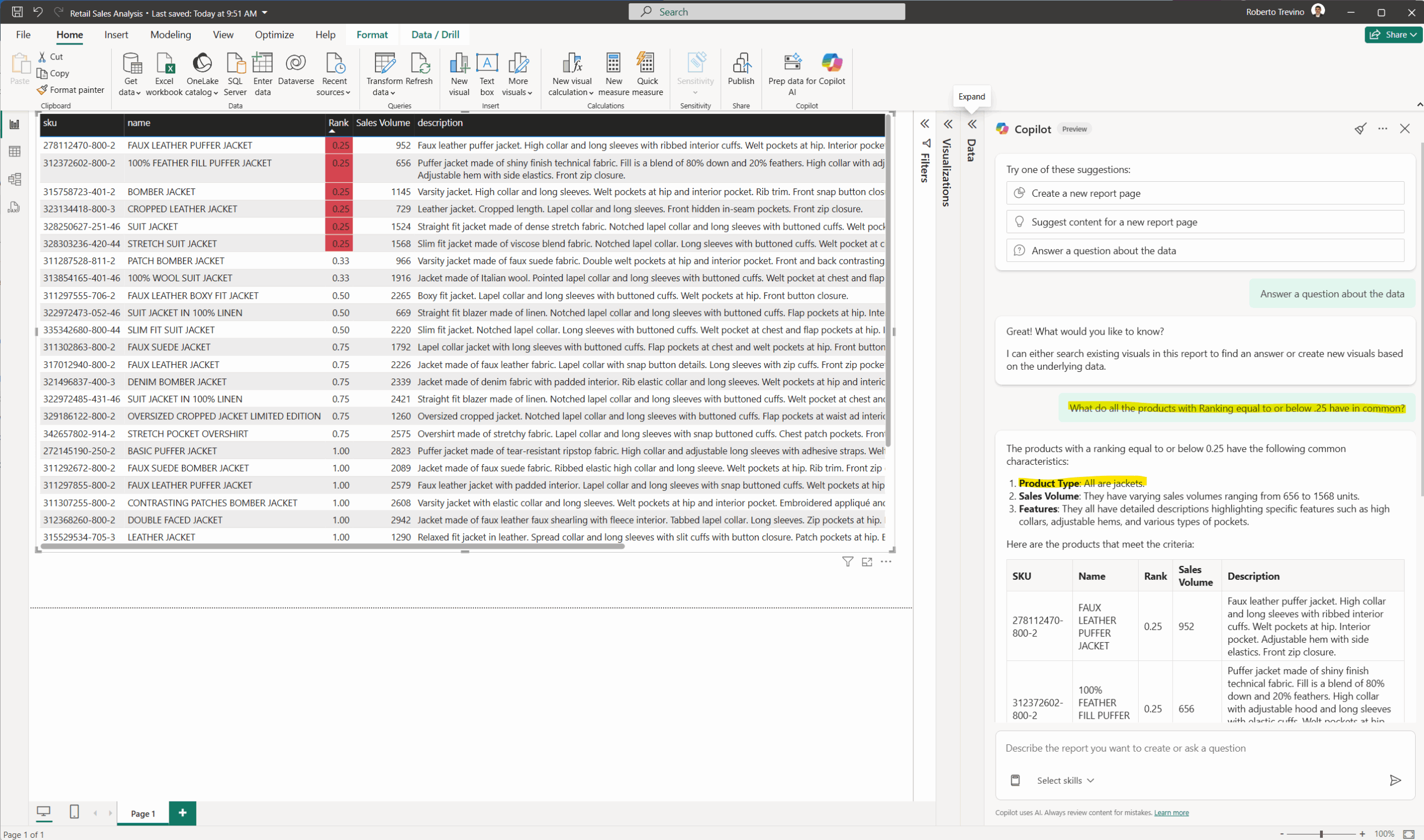

We then load the data into Power BI to visualize it better. This lets us not only analyze the data with filtering and conditional formatting but also explore it further with Copilot.

In the screenshot, I initially set up conditional formatting so that all the products that rank low within their group are highlighted.

I then used Copilot to ask how all of these relate to each other. It was quick to point out that all of them were jackets.

This gives us enough information to narrow our search and figure out why the products are not performing. Some other questions we could ask are:

- Is this data seasonal, covering only summer sales?

- How long have these jackets been on sale?

- Are they all sold within a specific region or across all stores?

- etc.

Conclusion

Yes, there are many, many use cases that don’t make sense for AI; however, there are many that do! I hope that what you just read sparks some creativity in how you can use AI to further analyze data. The one thing to remember is that for AI to work as it should, it needs contextual information about the data. That can be accomplished via semantic layers. To learn more, see my post on semantic layers.

Do you have a business problem and need to talk to an expert about how to go about it? Are you unsure how AI can help? Reach out and we can talk about it!

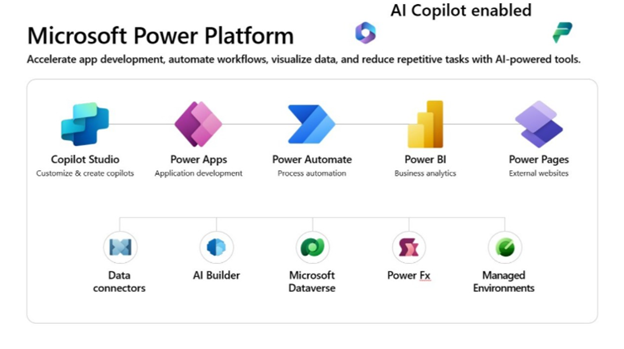

Introduction to Copilot for Power Platform

Copilot in Microsoft Power Platform is an AI-powered assistant designed to streamline the development process and enhance the intelligence of your applications. This post walks you through the fundamentals of Copilot and its integration with Power Apps, Power Automate, Power Virtual Agents, and AI Builder.

Copilot in Microsoft Power Platform helps app makers quickly solve business problems. A copilot is an AI assistant that can help you perform tasks and obtain information. You interact with a copilot by using a chat experience. Microsoft has added copilots across the different Microsoft products to help users be more productive. Copilots can be generic, such as Microsoft Copilot, and not tied to a specific Microsoft product. Alternatively, a copilot can be context-aware and tailored to the Microsoft product or application that you’re using at the time.

Microsoft Power Platform Copilots & Specializations

Microsoft Power Platform has several copilots that are available to makers and users.

Microsoft Copilot for Microsoft Power Apps

Use this copilot to help create a canvas app directly from your ideas. Give the copilot a natural language description, such as “I need an app to track my customer feedback.” Afterward, the copilot offers a data structure for you to iterate on until it’s exactly what you need, and then it creates pages of a canvas app for you to work with that data. You can edit this information along the way. Additionally, this copilot helps you edit the canvas app after you create it. Power Apps also offers copilot controls for users to interact with Power Apps data, including copilots for canvas apps and model-driven apps.

Microsoft Copilot for Microsoft Power Automate

Use this copilot to create automation that communicates with connectors and improves business outcomes. This copilot can work with cloud flows and desktop flows. Copilot for Power Automate can help you build automation by explaining actions, adding actions, replacing actions, and answering questions.

Microsoft Copilot for Microsoft Power Pages

Use this copilot to describe and create an external-facing website with Microsoft Power Pages. As a result, you have theming options, standard pages to include, and AI-generated stock images and relevant text descriptions for the website that you’re building. You can edit this information as you build your Power Pages website.

How Copilots Work

You can create a copilot by using a language model, which is like a computer program that can understand and generate human-like language. A language model can perform various natural language processing tasks based on a deep-learning algorithm. The massive amounts of data that the language model processes can help the copilot recognize, translate, predict, or generate text and other types of content.

Despite being trained on a massive amount of data, the language model doesn’t contain information about your specific use case, such as the steps in a Power Automate flow that you’re editing. The copilot shares this information for the system to use when it interacts with the language model to answer your questions. This context is commonly referred to as grounding data. Grounding data is use case-specific data that helps the language model perform better for a specific topic. Additionally, grounding data ensures that your data and IP are never part of training the language model.
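To make grounding concrete, here is an illustrative sketch of combining use case-specific context (the steps of a flow being edited) with a maker’s question before it reaches a language model. It is not how Microsoft implements Copilot; the function, the prompt wording, and the flow steps are all hypothetical.

```python
def build_grounded_prompt(question: str, flow_steps: list) -> str:
    """Prepend hypothetical grounding data (numbered flow steps) to the user's question."""
    context = "\n".join(f"{i}. {step}" for i, step in enumerate(flow_steps, start=1))
    return (
        "You are an assistant for Power Automate makers.\n"
        "Answer using the flow below as context.\n"
        f"Flow steps:\n{context}\n"
        f"Question: {question}"
    )


prompt = build_grounded_prompt(
    "What happens if the approval is rejected?",
    ["When a new response is submitted", "Start and wait for an approval", "Send an email"],
)
print(prompt)
```

The model itself is unchanged; only the text it receives carries the flow-specific details, which is the distinction the paragraph above draws between training data and grounding data.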

Accelerate Solution Building with Copilot

Consider the various copilots in Microsoft Power Platform as specialized assistants that can help you become more productive. Copilot can help you accelerate solution building in the following ways:

- Prototyping

- Inspiration

- Help with completing tasks

- Learning about something

Prototyping

Prototyping is a way of taking an idea that you discussed with others or drew on a whiteboard and building it in a way that helps someone understand the concept better. You can also use prototyping to validate that an idea is possible. For some people, having access to your app or website can help them become a supporter of your vision, even if the app or website doesn’t have all the features that they want.

Inspiration

Building on the prototyping example, you might need inspiration on how to evolve the basic prototype that you initially proposed. For example, if you need a way to decide which ideas to prioritize, you might ask Copilot, “How could we handle approval?”

Help with Completing Tasks

By using a copilot to assist in your solution building in Microsoft Power Platform, you can complete more complex tasks in less time than if you do them manually. Copilot can also help you complete small, tedious tasks, such as changing the color of all buttons in an app.

Learn about Something

While building an app, flow, or website, you can open a browser and use your favorite search engine to look up something that you’re trying to figure out. With Copilot, you can learn without leaving the designer. For example, suppose your Power Automate flow has a List rows step for Dataverse, and you want to find out how to check whether any rows were retrieved. You could ask Copilot, “How can I check if any rows were returned from the List rows step?”

Knowing the context of your flow, Copilot would respond accordingly.

Design and Plan with Copilot

Copilot can be a powerful way to accelerate your solution-building. However, it’s the maker’s responsibility to know how to interact with it. That interaction includes writing prompts to get the desired results and evaluating the results that Copilot provides.

Consider the Design First

While asking Copilot to “Help me automate my company to run more efficiently” seems ideal, that prompt is unlikely to produce useful results from Microsoft Power Platform Copilots.

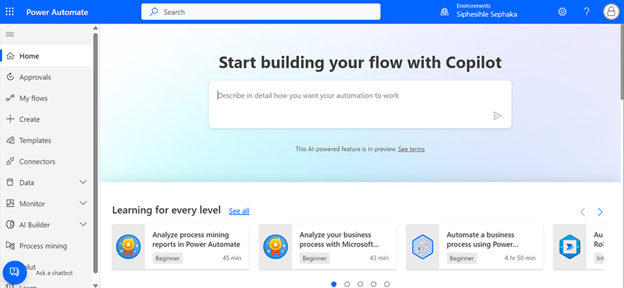

Consider the following example, where you want to automate the approval of intake requests. Without significant design thinking, you might use the following prompt with Copilot for Power Automate.

Copilot in cloud flow

“Create an approval flow for intake requests and notify the requestor of the result.”

This prompt produces the following suggested cloud flow.

While the prompt is an acceptable start, you should consider more details that can help you create a prompt that might get you closer to the desired flow.

A good way to improve your success is to spend a few minutes on a whiteboard or other visual design tool, drawing out the business process.

Include the Correct Ingredients in the Prompt

A prompt should include as much relevant information as possible. Each prompt should include your intended goal, context, source, and outcome.

When you’re starting to build something with Microsoft Power Platform copilots, the first prompt that you use sets up the initial resource. For Power Apps, this first prompt is to build a table and an app. For Power Automate, this first prompt is to set up the trigger and the initial steps. For Power Pages, this first prompt sets up the website.

Consider the previous example and the sequence of steps in the sample drawing. You might modify your initial prompt to be similar to the following example.

“When I receive a response to my Intake Request form, start and wait for a new approval. If approved, notify the requestor saying so and also notify them if the approval is denied.”

Continue the Conversation

You can iterate with your copilot. After you establish the context, Copilot remembers it.

The key to starting to build an idea with Copilot is to consider how much to include with the first prompt and how much to refine and add after you set up the resource. Knowing this key consideration is helpful because you don’t need to get a perfect first prompt, only one that builds the idea. Then, you can refine the idea interactively with Copilot.

6 Unique Copilot Features in Power Platform


Natural Language Power Fx Formulas in Power Apps

Copilot enables developers to write Power Fx formulas using natural language. For instance, typing /subtract datepicker1 from datepicker2 in a label control prompts Copilot to generate the corresponding formula, such as DateDiff(DatePicker1.SelectedDate, DatePicker2.SelectedDate, Days). This feature simplifies formula creation, especially for those less familiar with coding.


AI-Powered Document Analysis with AI Builder

By integrating Copilot with AI Builder, users can automate the extraction of data from documents, such as invoices or approval forms. For example, Copilot can extract approval justifications and auto-generate emails for swift approvals within Outlook. This process streamlines workflows and reduces manual data entry.


Automated Flow Creation in Power Automate

Copilot assists users in creating automated workflows by interpreting natural language prompts. For example, a user can instruct Copilot to “Create a flow that sends an email when a new item is added to SharePoint,” and Copilot will generate the corresponding flow. This feature accelerates the automation process without requiring extensive coding knowledge.


Conversational App Development in Power Apps Studio

In Power Apps Studio, Copilot allows developers to build and edit apps using natural language commands. For instance, typing “Add a button to my header” or “Change my container to align center” enables Copilot to execute these changes, simplifying the development process and making it more accessible.


Generative Topic Creation in Power Virtual Agents

Copilot facilitates the creation of conversation topics in Power Virtual Agents by generating them from natural language descriptions. For example, describing a topic like “Customer Support” prompts Copilot to create a topic with relevant trigger phrases and nodes, streamlining the bot development process.


AI-Driven Website Creation in Power Pages

Copilot assists in building websites by interpreting natural language descriptions. For example, stating “Create a homepage with a contact form and a product gallery” prompts Copilot to generate the corresponding layout and components, expediting the website development process.

Limitations of Copilot

| Limitation | Description | Example |

|---|---|---|

| 1. Limited understanding of business context | Copilot doesn’t always understand your specific business rules or logic. | You ask Copilot to "generate a travel approval form," but your org requires approval from both the team lead and HR. Copilot might only include one level of approval. |

| 2. Restricted to available connectors and data | Copilot can only access data sources that are already connected in your app. | You ask it to "show top 5 sales regions," but haven’t connected your Sales DB — Copilot can't help unless that connection is preconfigured. |

| 3. Not fully customizable output | You might not get exactly the layout, formatting, or logic you want — especially for complex logic. | Copilot generates a form with 5 input fields, but doesn't group them or align them properly; you still need to fine-tune it manually. |

| 4. Model hallucination (AI guessing wrong info) | Like other LLMs, Copilot may “guess” when unsure — and guess incorrectly. | You ask Copilot to create a formula for filtering “Inactive users,” and it writes a filter condition that doesn’t exist in your dataset. |

| 5. English-only or limited language support | Most effective prompts and results come in English; support for other languages is limited or not optimized. | You try to ask Copilot in Hindi, and it misinterprets the logic or doesn't return relevant suggestions. |

| 6. Requires clean, named data structures | Copilot struggles when your tables/columns aren't clearly named. | If you name a field fld001_status instead of Status, Copilot might fail to identify it correctly or generate unreadable code. |

| 7. Security roles not respected by Copilot | Copilot may suggest features that would break your security model if implemented directly. | You generate a data view for all users, but your app is role-based — Copilot won’t automatically apply row-level security filters. |

| 8. No support for complex logic or multi-step workflows | It’s good at simple flows, but not for things like advanced branching, looping, or nested conditions. | You ask Copilot to automate a 3-level approval chain with reminder logic and escalation — it gives a very basic starting point. |

| 9. Limited offline or disconnected use | Copilot and generated logic assume you’re online. | If your app needs to work offline (e.g., for field workers), Copilot-generated logic may not account for offline sync or local caching. |

| 10. Only works inside Microsoft ecosystem | Copilot doesn’t support 3rd-party AI tools natively. | If your company uses Google Cloud or OpenAI directly, Copilot won’t connect unless you build custom connectors or use HTTP calls. |

Build Good Prompts

Knowing how to best interact with the copilot can help get your desired results quickly. When you’re communicating with the copilot, make sure that you’re as clear as you can be with your goals. Review the following dos and don’ts to help guide you to a more successful copilot-building experience.

Do’s of Prompt-Building

To have a more successful copilot building experience, do the following:

- Be clear and specific.

- Keep it conversational.

- Give examples.

- Check for accuracy.

- Provide contextual details.

- Be polite.

Don’ts of Prompt-Building

- Be vague.

- Give conflicting instructions.

- Request inappropriate or unethical tasks or information.

- Interrupt or quickly change topics.

- Use slang or jargon.

Conclusion

Copilot in Microsoft Power Platform marks a major step forward in making low-code development truly accessible and intelligent. By enabling users to build apps, automate workflows, analyze data, and create bots using natural language, it empowers both technical and non-technical users to turn ideas into solutions faster than ever.

It transforms how people interact with technology by:

- Accelerating solution creation

- Lowering technical barriers

- Enhancing productivity and innovation

With built-in security, compliance with organizational governance, and continuous improvements from Microsoft’s AI advancements, Copilot is not just a tool—it’s a catalyst for transforming how organizations solve problems and deliver value.

As AI continues to evolve, Copilot will play a central role in democratizing software development and helping organizations move faster and smarter with data-driven, automated tools.

Digital transformation in insurance isn’t slowing down. But here’s the good news: agents aren’t being replaced by technology. They’re being empowered by it. Agents are more essential than ever in delivering value. For insurance leaders making strategic digital investments, the opportunity lies in enabling agents to deliver personalized, efficient, and human-centered experiences at scale.

Drawing from recent industry discussions and real-world case studies, we’ve gathered insights to highlight four key themes where digital solutions are transforming agent effectiveness and unlocking measurable business value.

Personalization at Scale: Turning Data into Differentiated Experiences

Customers want to feel seen, and they expect tailored advice with seamless service. When you deliver personalized experiences, you build stronger loyalty, increase engagement, and drive better results.

Key insights:

- Personalization sits at the intersection of human empathy and machine accuracy.

- Leveraging operational data through platforms like Salesforce Marketing Cloud enables 1:1 personalization across millions of customers and prospects.

- One insurer saw a 5x increase in key site action conversions and converted 1.3 million unknown users to known through integrated digital personalization.

Strategic takeaway:

Look for platforms that bring all your customer data together and enable real-time personalization. This isn’t just about marketing. It’s a growth strategy.


Intelligent Automation: Freeing Agents to Focus on What Matters Most

Agents spend too much time on repetitive, low-value tasks. Automation can streamline these processes, allowing agents to focus on complex, high-value interactions that need a human touch.

Key insights:

- Automating beneficiary change requests reduced manual work and improved data accuracy for one major insurer.

- Another organization automated loan processing, which reduced processing time by 92% and unlocked $2M in annual savings.

Strategic takeaway:

Start with automation in the back office to build confidence and demonstrate ROI. Then expand to customer-facing processes to enhance speed and service without sacrificing the personal feel.


Digitization: Building the Foundation for AI and Insight-Driven Decisions

Insurance is a document-heavy industry. Unlocking the value trapped in unstructured data is critical to enabling AI and smarter decision-making.

Key insights:

- Digitizing legacy documents using tools like Microsoft Syntex and AI Builder enabled one insurer to create a consolidated, accurate claims and policy database.

- This foundational step is essential for applying machine learning and delivering personalized experiences at scale.

Strategic takeaway:

Prioritize digitization as a foundational investment. Without clean, accessible data, personalization and automation efforts will stall.


Agentic Frameworks: Guiding Agents with Real-Time Intelligence

The future of insurance distribution lies in human-AI collaboration. Agentic frameworks empower agents with intelligent prompts, decision support, and operational insights.

Key insights:

- AI can help guide agents through complex underwriting and risk assessment scenarios, improving both speed and accuracy.

- Carriers are increasingly testing these frameworks in the back office, where the risk is lower and the savings are real.

Strategic takeaway:

Start building toward a connected digital ecosystem where AI supports—not replaces—your teams. That’s how you can deliver empathetic, efficient, and accurate service.


Final Thought: Technology as an Enabler, Not a Replacement

The carriers seeing the biggest wins are those that blend the precision of machines with human empathy. They’re transforming how agents engage, advise, and deliver value.

“If you don’t have data fabric, platform modernization, and process optimization, you can’t deliver personalization at scale. It’s a crawl, walk, run journey—but the results are real.”

Next Steps for Leaders:

- Assess your data readiness. Is your data accessible, accurate, and actionable?

- Identify automation quick wins. Where can you reduce manual effort without disrupting the customer experience?

- Invest in personalization platforms. Are your agents equipped to deliver tailored advice at scale?

- Explore agentic frameworks. How can AI support—not replace—your frontline teams?

Carriers and brokers count on us to help modernize, innovate, and win in an increasingly competitive marketplace. Our solutions power personalized omnichannel experiences and optimize performance across the enterprise.

- Business Transformation: Activate strategy and innovation within the insurance ecosystem.

- Modernization: Optimize technology to boost agility and efficiency across the value chain.

- Data + Analytics: Power insights and accelerate underwriting and claims decision-making.

- Customer Experience: Ease and personalize experiences for policyholders and producers.

We are trusted by leading technology partners and consistently mentioned by analysts. Discover why we have been trusted by 13 of the 20 largest P&C firms and 11 of the 20 largest annuity carriers. Explore our insurance expertise and contact us to learn more.

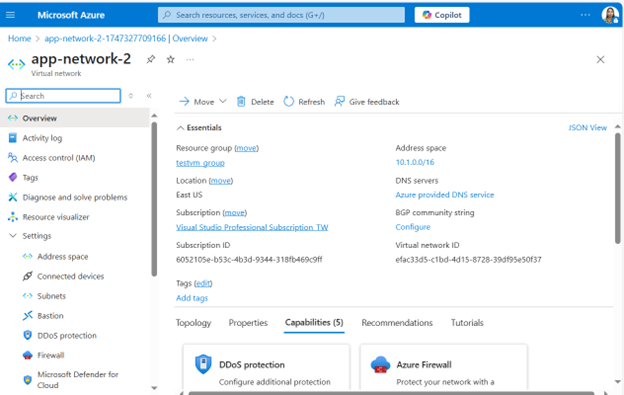

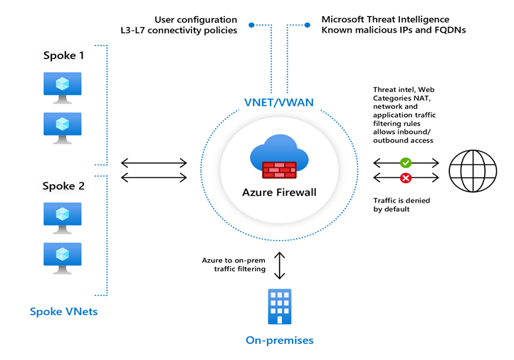

Azure Firewall, a managed, cloud-based network security service, is an essential component of Azure’s security offerings. It comes in three different versions – Basic, Standard, and Premium – each designed to cater to a wide range of customer use cases and preferences. This blog post will provide a comprehensive comparison of these versions, discuss best practices for their use, and delve into their application in hub-spoke and Azure Virtual WAN with Secure Hub architectures.

What is Azure Firewall?

Azure Firewall is a cloud-native, intelligent network firewall security service designed to protect your Azure cloud workloads. It offers top-tier threat protection and is fully stateful, meaning it can track the state of network connections and make decisions based on the context of the traffic.

Key Features of Azure Firewall

- High Availability: High availability is built in, so no additional load balancers are needed to keep the firewall running.

- Scalability: Unlimited cloud scalability to handle varying workloads.

- Traffic Inspection: Inspects both east-west traffic (between subnets and virtual networks within your environment) and north-south traffic (to and from the internet or on-premises networks).

- Threat Intelligence: Uses advanced threat intelligence to block malicious IP addresses and domains.

- Centralized Management: Allows you to centrally create, enforce, and log application and network connectivity policies across multiple subscriptions and virtual networks.

- Compliance: Helps organizations meet regulatory and compliance requirements by providing detailed logging and monitoring capabilities.

- Cost Efficiency: By deploying Azure Firewall in a central virtual network, you can achieve cost savings by avoiding the need to deploy multiple firewalls across different networks.

Why Azure Firewall is Essential

Enhanced Security

In today’s digital landscape, cyber threats are becoming increasingly sophisticated. Organizations need robust security measures to protect their data and applications. Azure Firewall provides enhanced security by inspecting both inbound and outbound traffic, using advanced threat intelligence to block malicious IP addresses and domains. This ensures that your network is protected against a wide range of threats, including malware, phishing, and other cyberattacks.

Centralized Management

Managing network security across multiple subscriptions and virtual networks can be a complex and time-consuming process. Azure Firewall simplifies this process by allowing you to centrally create, enforce, and log application and network connectivity policies. This centralized management ensures consistent security policies across your organization, making it easier to maintain and monitor your network security.

Scalability

Businesses often experience fluctuating traffic volumes, which can strain network resources. Azure Firewall offers unlimited cloud scalability, meaning it can handle varying workloads without compromising performance. This scalability is crucial for businesses that need to accommodate peak traffic periods and ensure continuous protection.

High Availability

Downtime can be costly for businesses, both in terms of lost revenue and damage to reputation. Azure Firewall’s built-in high availability helps keep your firewall operational, minimizing downtime and maintaining continuous protection.

Compliance

Many industries have strict data protection regulations that organizations must comply with. Azure Firewall helps organizations meet these regulatory and compliance requirements by providing detailed logging and monitoring capabilities. This is particularly vital for industries such as finance, healthcare, and government, where data security is of paramount importance.

Cost Efficiency

Deploying multiple firewalls across different networks can be expensive. By deploying Azure Firewall in a central virtual network, organizations can achieve cost savings. This centralized approach reduces the need for multiple firewalls, lowering overall costs while maintaining robust security.

Azure Firewall Versions: Basic, Standard, and Premium

Azure Firewall Basic

Azure Firewall Basic is recommended for small to medium-sized business (SMB) customers with throughput needs of up to 250 Mbps. It’s a cost-effective solution for businesses that require fundamental network protection.

Azure Firewall Standard

Azure Firewall Standard is recommended for customers who need a Layer 3–Layer 7 firewall with autoscaling to handle peak traffic periods of up to 30 Gbps. It supports enterprise features like threat intelligence, DNS proxy, custom DNS, and web categories.

Azure Firewall Premium

Azure Firewall Premium is recommended for securing highly sensitive applications, such as those involved in payment processing. It supports advanced threat protection capabilities like malware and TLS inspection. Azure Firewall Premium utilizes advanced hardware and features a higher-performing underlying engine, making it ideal for handling heavier workloads and higher traffic volumes.

Azure Firewall Features Comparison

Here’s a comparison of the features available in each version of Azure Firewall:

| Feature | Basic | Standard | Premium |

|---|---|---|---|

| Stateful firewall (Layer 3/Layer 4) | Yes | Yes | Yes |

| Application FQDN filtering | Yes | Yes | Yes |

| Network traffic filtering rules | Yes | Yes | Yes |

| Outbound SNAT support | Yes | Yes | Yes |

| Threat intelligence-based filtering | No | Yes | Yes |

| Web categories | No | Yes | Yes |

| Intrusion Detection and Prevention System (IDPS) | No | No | Yes |

| TLS Inspection | No | No | Yes |

| URL Filtering | No | No | Yes |
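The table can be read as a simple decision rule: choose the lowest tier that covers every required feature and your peak throughput. This is a sketch under stated assumptions. The feature sets mirror the table above, the throughput caps are the figures quoted earlier in this post (250 Mbps for Basic, 30 Gbps for Standard), and because the post gives no cap for Premium, the sketch leaves it unbounded. The feature keys and function name are made up for illustration; this is not an official sizing tool.

```python
BASE = {"stateful", "fqdn_filtering", "network_rules", "snat"}

# (SKU name, assumed max throughput in Gbps, supported features), lowest tier first.
SKUS = [
    ("Basic", 0.25, BASE),
    ("Standard", 30.0, BASE | {"threat_intel", "web_categories"}),
    ("Premium", float("inf"), BASE | {"threat_intel", "web_categories",
                                      "idps", "tls_inspection", "url_filtering"}),
]


def pick_sku(required_features: set, peak_gbps: float) -> str:
    """Return the lowest tier whose features and throughput cover the requirements."""
    for name, max_gbps, features in SKUS:
        if required_features <= features and peak_gbps <= max_gbps:
            return name
    raise ValueError("No SKU satisfies these requirements")


print(pick_sku({"fqdn_filtering"}, 0.1))  # Basic
print(pick_sku({"tls_inspection"}, 5.0))  # Premium
```

Cost, compliance needs, and Microsoft’s current published limits should drive the real decision; the sketch only encodes this post’s table.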

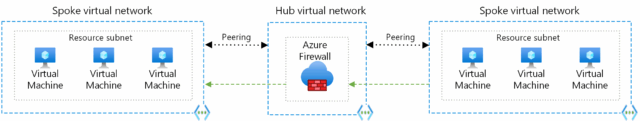

Azure Firewall Architecture

Azure Firewall plays a crucial role in the hub-spoke network architecture pattern in Azure. The hub is a virtual network (VNet) in Azure that acts as a central point of connectivity to your on-premises network. The spokes are VNets that peer with the hub and can be used to isolate workloads. Azure Firewall secures and inspects network traffic, and it can also route traffic between VNets.

A secured hub is an Azure Virtual WAN Hub with associated security and routing policies configured by Azure Firewall Manager. Use secured virtual hubs to easily create hub-and-spoke and transitive architectures with native security services for traffic governance and protection.

How Azure Firewall Works

Azure Firewall operates by using rules and rule collections to manage and filter network traffic. Here are some key concepts:

- Rule Collections: A set of rules with the same order and priority. Rule collections are executed in priority order.

- Application Rules: Configure fully qualified domain names (FQDNs) that can be accessed from a virtual network.

- Network Rules: Configure rules with source addresses, protocols, destination ports, and destination addresses.

- NAT Rules: Configure DNAT rules to allow incoming Internet or intranet connections.
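The priority idea above can be modeled in a few lines. This is a simplified sketch, not the firewall’s actual engine. Network rule collections are checked from the lowest priority number (highest priority) upward, the first matching rule’s collection action decides, and unmatched traffic is denied, since Azure Firewall denies traffic by default. Real processing has more layers (for example, rule collection groups and ordering by rule type), which the sketch ignores. All class and function names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class NetworkRule:
    source: str       # CIDR, e.g. "10.0.2.0/24"
    destination: str  # CIDR
    protocol: str     # "TCP", "UDP", or "Any"
    port: int


@dataclass
class RuleCollection:
    priority: int     # lower number = processed first
    action: str       # "Allow" or "Deny"
    rules: list = field(default_factory=list)


def matches(rule: NetworkRule, src: str, dst: str, protocol: str, port: int) -> bool:
    """True if the packet's 5-tuple-like attributes satisfy the rule."""
    return (
        ipaddress.ip_address(src) in ipaddress.ip_network(rule.source)
        and ipaddress.ip_address(dst) in ipaddress.ip_network(rule.destination)
        and rule.protocol in ("Any", protocol)
        and rule.port == port
    )


def evaluate(collections: list, src: str, dst: str, protocol: str, port: int) -> str:
    """Walk collections in priority order; the first match wins, otherwise deny."""
    for collection in sorted(collections, key=lambda c: c.priority):
        if any(matches(r, src, dst, protocol, port) for r in collection.rules):
            return collection.action
    return "Deny"  # default deny when nothing matches


allow_web = RuleCollection(200, "Allow", [NetworkRule("10.0.2.0/24", "0.0.0.0/0", "TCP", 443)])
block_host = RuleCollection(100, "Deny", [NetworkRule("10.0.2.4/32", "0.0.0.0/0", "Any", 443)])
print(evaluate([allow_web, block_host], "10.0.2.4", "52.1.2.3", "TCP", 443))  # Deny
```

Even though the Allow collection is listed first, the Deny collection has the lower priority number, so it is evaluated first and wins for that host.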

Azure Firewall integrates with Azure Monitor for viewing and analyzing logs. Logs can be sent to Log Analytics, Azure Storage, or Event Hubs and analyzed using tools like Log Analytics, Excel, or Power BI.

Steps to Deploy and Configure Azure Firewall

Step 1: Set Up the Network

Create a Resource Group

- Sign in to the Azure portal:
  - Navigate to the Azure portal.
  - Use your credentials to sign in.
- Create a resource group:
  - On the Azure portal menu, select Resource groups, or search for and select Resource groups from any page.
  - Click Create.
  - Enter the following values:
    - Subscription: Select your Azure subscription.
    - Resource group: Enter Test-FW-RG.
    - Region: Select a region (ensure all resources you create are in the same region).
  - Click Review + create, and then click Create.

Create a Virtual Network (VNet)

- On the Azure portal menu or from the Home page, select Create a resource.
- Select Networking, search for Virtual network, and then click Create.
- Enter the following values:
  - Subscription: Select your Azure subscription.
  - Resource group: Select Test-FW-RG.
  - Name: Enter Test-FW-VN.
  - Region: Select the same region as the resource group.
- Click Next: IP Addresses.
- Configure IP addresses:
  - Set the Address space to 10.0.0.0/16.
  - Create two subnets:
    - AzureFirewallSubnet: Enter 10.0.1.0/26.
    - Workload-SN: Enter 10.0.2.0/24.
- Click Next: Security.
- Configure security settings:
  - Leave the default settings for Security.
- Click Next: Tags.
- Add tags (optional):
  - Tags are useful for organizing resources. Add any tags if needed.
- Click Next: Review + create.
- Review and create:
  - Review the settings and click Create.
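Before clicking Create, it can help to sanity-check the address plan. The following Python sketch (hypothetical helper names, standard library only) checks that both subnets fall inside 10.0.0.0/16, that they do not overlap, and that AzureFirewallSubnet is at least a /26, which Azure Firewall requires. It also counts usable addresses, since Azure reserves five addresses in every subnet.

```python
import ipaddress
from typing import Dict, List

VNET = ipaddress.ip_network("10.0.0.0/16")
SUBNETS = {
    "AzureFirewallSubnet": ipaddress.ip_network("10.0.1.0/26"),
    "Workload-SN": ipaddress.ip_network("10.0.2.0/24"),
}

def check_plan(vnet: ipaddress.IPv4Network, subnets: Dict[str, ipaddress.IPv4Network]) -> List[str]:
    """Return problems with the address plan; an empty list means none were found."""
    problems = []
    for name, net in subnets.items():
        if not net.subnet_of(vnet):
            problems.append(f"{name} {net} is outside the VNet {vnet}")
    names = list(subnets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if subnets[first].overlaps(subnets[second]):
                problems.append(f"{first} overlaps {second}")
    firewall = subnets.get("AzureFirewallSubnet")
    if firewall is None:
        problems.append("AzureFirewallSubnet is missing")
    elif firewall.prefixlen > 26:
        problems.append("AzureFirewallSubnet must be /26 or larger")
    return problems

def usable_addresses(subnet: ipaddress.IPv4Network) -> int:
    """Azure reserves five addresses in every subnet (the first four and the last)."""
    return subnet.num_addresses - 5
```

For the values above, `check_plan(VNET, SUBNETS)` returns an empty list, AzureFirewallSubnet has 59 usable addresses, and Workload-SN has 251.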

Step 2: Deploy the Firewall

Create the Firewall

- On the Azure portal menu, select Create a resource.
- Search for Firewall and select Create.
- Enter the following values:
  - Subscription: Select your Azure subscription.
  - Resource group: Select Test-FW-RG.
  - Name: Enter Test-FW.
  - Region: Select the same region as the resource group.
  - Virtual network: Select Test-FW-VN.
  - Subnet: Select AzureFirewallSubnet.
- Click Next: IP Addresses.
- Configure IP addresses:
  - Assign a public IP address:
    - Click Add new.
    - Enter a name for the public IP address, e.g., Test-FW-PIP, and click OK.
- Click Next: Tags.
- Add tags (optional):
  - Add any tags if needed.
- Click Next: Review + create.
- Review and create:
  - Review the settings and click Create.

Step 3: Configure Firewall Rules

Create Application Rules

- Navigate to the firewall:
  - Go to Resource groups and select Test-FW-RG.
  - Click Test-FW.
- Configure application rules:
  - Select Rules from the left-hand menu.
  - Click Add application rule collection.
  - Enter the following values:
    - Name: Enter AppRuleCollection.
    - Priority: Enter 100.
    - Action: Select Allow.
    - Rules: Click Add rule.
      - Name: Enter AllowGoogle.
      - Source IP addresses: Enter *.
      - Protocol: Select http, https.
      - Target FQDNs: Enter www.google.com.
  - Click Add.

Create Network Rules

- Configure network rules:
  - Select Rules from the left-hand menu.
  - Click Add network rule collection.
  - Enter the following values:
    - Name: Enter NetRuleCollection.
    - Priority: Enter 200.
    - Action: Select Allow.
    - Rules: Click Add rule.
      - Name: Enter AllowDNS.
      - Source IP addresses: Enter *.
      - Protocol: Select UDP.
      - Destination IP addresses: Enter 8.8.8.8, 8.8.4.4.
      - Destination ports: Enter 53.
  - Click Add.

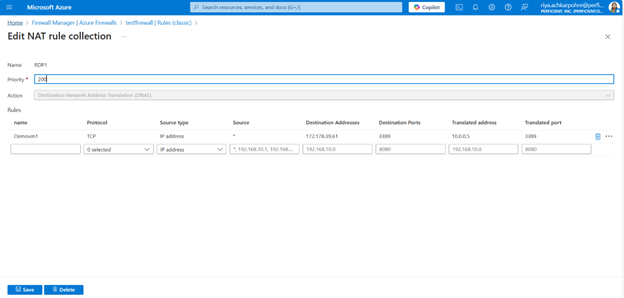

- Create NAT Rules

- Configure NAT Rules:

- Select Rules from the left-hand menu.

- Click Add NAT rule collection.

- Enter the following values:

- Name: Enter NATRuleCollection.

- Priority: Enter 300.

- Action: Select DNAT.

- Rules: Click Add rule.

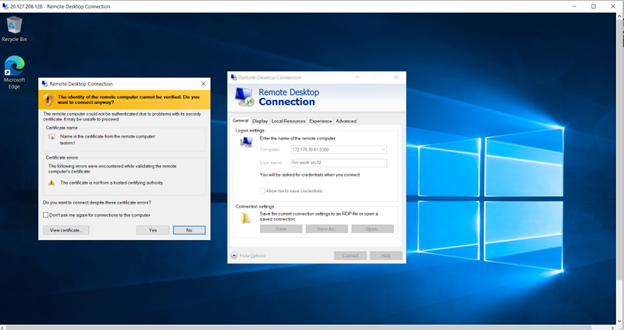

- Name: Enter AllowRDP.

- Source IP addresses: Enter *.Protocol: Select TCP.

- Destination IP addresses: Enter the public IP address of the firewall.

- Destination ports: Enter 3389.

- Translated address: Enter the private IP address of the workload server.

- Translated port: Enter 3389.

- Click Add.

Step 4: Test the Firewall

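- Deploy a test VM:
  - Create a virtual machine in the Workload-SN subnet.
  - Ensure it has a private IP address within the 10.0.2.0/24 range.
  - Associate a route table with Workload-SN that contains a default route (0.0.0.0/0) with next hop type Virtual appliance and the firewall's private IP address as the next hop, so that the VM's outbound traffic passes through the firewall instead of going directly to the Internet.
- Test connectivity:
  - Attempt to access www.google.com from the test VM to verify the application rule.
  - Attempt to resolve DNS queries against 8.8.8.8 and 8.8.4.4 to verify the network rule.
  - Attempt to connect to the test VM via RDP using the firewall's public IP address to verify the NAT rule.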
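These checks can also be scripted. The Python sketch below uses only the standard library, and its helper names are hypothetical. Run the first two helpers on the test VM and the third from a machine outside Azure:

- `url_ok` fetches a URL such as `https://www.google.com`.
- `dns_answers` sends a single A-record query directly to a server such as 8.8.8.8 on UDP port 53.
- `tcp_reachable` attempts a TCP connection, for example to the firewall's public IP address on port 3389.

A request to a site that no application rule allows should make `url_ok` return False.

```python
import socket
import struct
import urllib.error
import urllib.request

def url_ok(url: str, timeout: float = 5.0) -> bool:
    """True if an HTTP(S) GET returns a non-error status (checks the application rule)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 400
    except (urllib.error.URLError, OSError):
        return False

def build_dns_query(name: str, query_id: int = 0x1234) -> bytes:
    """Minimal RFC 1035 query for an A record, with the recursion-desired flag set."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = name.rstrip(".").split(".")
    qname = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN

def dns_answers(server: str, name: str, timeout: float = 3.0) -> bool:
    """True if server answers an A query for name over UDP port 53 (checks the network rule)."""
    query_id = 0x1234
    query = build_dns_query(name, query_id)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(query, (server, 53))
            reply, _ = sock.recvfrom(512)
        except OSError:
            return False
    if len(reply) < 12:
        return False
    reply_id, flags, _, answer_count, _, _ = struct.unpack("!HHHHHH", reply[:12])
    is_response = bool(flags & 0x8000)
    return reply_id == query_id and is_response and (flags & 0x000F) == 0 and answer_count > 0

def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds (for example, RDP through the DNAT rule)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

On the test VM, `url_ok("https://www.google.com")` and `dns_answers("8.8.8.8", "www.google.com")` should both return True. From outside Azure, `tcp_reachable("<firewall public IP>", 3389)` exercises the DNAT rule.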

Monitoring and Managing Azure Firewall

- Integrate with Azure Monitor:
  - Navigate to the firewall resource.
  - Select Diagnostic settings from the left-hand menu.
  - Add a diagnostic setting that sends the firewall logs to a Log Analytics workspace, a storage account, or an event hub.

- Analyze logs:
  - Use Azure Monitor to view and analyze firewall logs.
  - Create alerts and dashboards to monitor firewall activity and performance.

Best Practices for Azure Firewall

To maximize the performance of your Azure Firewall, it’s important to follow best practices. Here are some recommendations:

- Optimize Rule Configuration and Processing: Organize rules using firewall policy into Rule Collection Groups and Rule Collections, prioritizing them based on their frequency of use.

- Use or Migrate to Azure Firewall Premium: Azure Firewall Premium offers a higher-performing underlying engine and includes built-in accelerated networking software.

- Add Multiple Public IP Addresses to the Firewall: Consider adding multiple public IP addresses (PIPs) to your firewall to prevent SNAT port exhaustion.
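Some rough arithmetic shows why additional public IPs help. Azure documentation has stated that Azure Firewall provides 2,496 SNAT ports per public IP address per backend instance, with a minimum of two instances. Treat that figure as an assumption to verify against current Azure limits; the sketch below keeps it as a parameter, and the helper names are hypothetical.

```python
import math

def snat_ports(public_ips: int, instances: int = 2, ports_per_ip_per_instance: int = 2496) -> int:
    """Approximate SNAT port capacity: ports per IP per instance x public IPs x instances."""
    if public_ips < 1 or instances < 1:
        raise ValueError("need at least one public IP and one instance")
    return public_ips * instances * ports_per_ip_per_instance

def public_ips_needed(concurrent_connections: int, instances: int = 2,
                      ports_per_ip_per_instance: int = 2496) -> int:
    """Smallest number of public IPs whose estimated SNAT capacity covers the connection count."""
    return max(1, math.ceil(concurrent_connections / (instances * ports_per_ip_per_instance)))
```

Under these assumptions, one public IP on two instances gives about 4,992 ports, and roughly 30,000 concurrent SNAT-translated connections would call for 7 public IPs.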

In today’s world, the Internet of Things (IoT) is revolutionizing industries across the globe by connecting devices, systems, and people in ways that were once unimaginable. From smart homes to advanced manufacturing, IoT is creating new opportunities for innovation, efficiency, and data-driven decision-making. At the forefront of this transformation is Microsoft Azure, a cloud computing platform that has become a powerhouse for IoT operations.

In this blog, we’ll dive into the essentials of Azure IoT operations, how it simplifies and optimizes IoT workflows, and the key features that make it the go-to solution for businesses looking to scale their IoT systems.

What is Azure IoT?

Azure IoT is a set of services, solutions, and tools from Microsoft that allow businesses to securely connect, monitor, and control IoT devices across various environments. Azure IoT offers comprehensive capabilities for deploying IoT solutions, managing the entire device lifecycle, and providing data-driven insights that enhance decision-making processes.

At the core of Azure IoT is the ability to integrate IoT devices with cloud-based analytics, machine learning, and data processing tools, enabling businesses to leverage real-time data for more informed decisions.

Why Azure for IoT Operations?

Microsoft Azure is one of the leading cloud platforms that offer robust, scalable, and secure solutions for managing IoT operations. It is built on a foundation of powerful infrastructure that allows organizations to quickly deploy IoT systems and scale them based on evolving business needs. Here’s why businesses choose Azure for their IoT operations:

Scalability

Azure IoT can support millions of devices, allowing businesses to scale their IoT systems from a handful of devices to a vast ecosystem of connected devices across different locations. This scalability ensures that companies can grow their IoT initiatives without being limited by infrastructure constraints.

Security

Security is a major concern when managing IoT devices, as these devices are often vulnerable to cyberattacks. Azure IoT integrates comprehensive security measures, including device identity management, encryption, secure data transmission, and compliance with industry standards. These measures help protect IoT operations from potential threats.
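One option for device identity in IoT Hub is a symmetric key, from which the device derives shared access signature (SAS) tokens. Following the token format described in the IoT Hub security documentation, a token carries an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time. The sketch below uses only the Python standard library. The hub name, device ID, and key are hypothetical, and in practice the Azure IoT device SDKs generate these tokens for you.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional

def generate_sas_token(resource_uri: str, key_b64: str, policy_name: Optional[str] = None,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Sign the URL-encoded resource URI and expiry with HMAC-SHA256 and build a SAS token."""
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    string_to_sign = f"{urllib.parse.quote_plus(resource_uri)}\n{expiry}"
    signature = hmac.new(base64.b64decode(key_b64), string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    fields = {"sr": resource_uri, "sig": base64.b64encode(signature).decode("ascii"), "se": str(expiry)}
    if policy_name is not None:
        fields["skn"] = policy_name  # only for hub-level shared access policies
    return "SharedAccessSignature " + urllib.parse.urlencode(fields)
```

For a device-scoped token, the resource URI would look like `myhub.azure-devices.net/devices/device1` (hypothetical names), signed with that device's key.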

Data Analytics and Insights

Azure provides a suite of analytics tools, including Azure Machine Learning, Power BI, and Azure Stream Analytics, that enable businesses to analyze data generated by their IoT devices in real time. This supports predictive maintenance, real-time monitoring, and data-driven decision-making, ultimately improving operational efficiency.

Integration with Other Microsoft Services

Azure IoT integrates with other Microsoft tools and services, such as Office 365, Microsoft Teams, and Azure Active Directory (now Microsoft Entra ID). This lets businesses bring IoT data into their existing workflows and processes, fostering collaboration and more informed business operations.

Cross-Platform Compatibility

Azure IoT supports a wide variety of devices, operating systems, and protocols, making it a versatile solution that can integrate with existing IoT deployments, regardless of the technology stack. This interoperability allows businesses to get the most out of their IoT investments.

Key Components of Azure IoT Operations

Azure IoT comprises various tools and services that address different aspects of IoT operations, ranging from device connectivity to data processing and analytics. Let’s explore some of the key components:

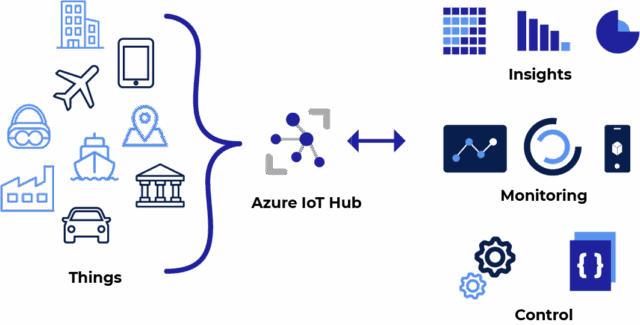

Azure IoT Hub

At the heart of Azure IoT operations is Azure IoT Hub, a fully managed service that enables secure and reliable communication between IoT devices and the cloud. It allows businesses to connect, monitor, and control millions of IoT devices from a single platform.

IoT Hub provides two-way communication, allowing devices to send data to the cloud while also receiving commands. This bi-directional communication is crucial for remote monitoring, updating device configurations, and managing device health in real time.
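The sketch below is a local simulation of both directions of that communication. It is loosely modeled on IoT Hub direct methods, which return a status code and a JSON payload to the caller. The class, method names, and payload fields are hypothetical, and this is not the Azure IoT device SDK: "sending" telemetry only appends to an in-memory outbox.

```python
import json
from typing import Callable, Dict, List, Tuple

class SimulatedDevice:
    """Local stand-in for a connected device; NOT the Azure IoT SDK."""

    def __init__(self, device_id: str):
        self.device_id = device_id
        self.interval_s = 10              # hypothetical telemetry interval in seconds
        self.outbox: List[str] = []       # device-to-cloud messages waiting to be sent
        self.handlers: Dict[str, Callable[[dict], Tuple[int, dict]]] = {
            "setInterval": self._set_interval,
            "getStatus": self._get_status,
        }

    def send_telemetry(self, temperature: float) -> None:
        """Device-to-cloud: queue a JSON telemetry message."""
        self.outbox.append(json.dumps({"deviceId": self.device_id, "temperature": temperature}))

    def invoke(self, method: str, payload: dict) -> Tuple[int, dict]:
        """Cloud-to-device: handle a command and return (status code, response payload)."""
        handler = self.handlers.get(method)
        if handler is None:
            return 404, {"error": f"unknown method {method}"}
        return handler(payload)

    def _set_interval(self, payload: dict) -> Tuple[int, dict]:
        seconds = payload.get("seconds")
        if not isinstance(seconds, int) or seconds <= 0:
            return 400, {"error": "seconds must be a positive integer"}
        self.interval_s = seconds
        return 200, {"interval": seconds}

    def _get_status(self, payload: dict) -> Tuple[int, dict]:
        return 200, {"interval": self.interval_s, "queued": len(self.outbox)}
```

A cloud application could, for example, call `setInterval` with `{"seconds": 30}` to change how often the device reports, then confirm the change with `getStatus`.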

Azure Digital Twins

Azure Digital Twins is an advanced service that enables businesses to create digital models of physical environments. These models are used to visualize and analyze IoT data, providing a more comprehensive understanding of how devices and systems interact in the real world.

By utilizing Azure Digital Twins, organizations can optimize their operations, enhance asset management, and simulate scenarios for predictive maintenance and improved energy efficiency.
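To illustrate the idea of a twin graph, the sketch below models twins as nodes joined by "contains" relationships and rolls room temperatures up to a floor or a whole building. It is plain Python with hypothetical model names, not the Azure Digital Twins API. The real service defines models in the Digital Twins Definition Language (DTDL) and is queried with its own query language.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional

@dataclass
class Twin:
    twin_id: str
    model: str                                               # e.g. "Building", "Floor", "Room" (hypothetical)
    properties: Dict[str, float] = field(default_factory=dict)
    contains: List["Twin"] = field(default_factory=list)     # "contains" relationships

def rooms_under(twin: Twin) -> Iterator[Twin]:
    """Walk 'contains' relationships and yield every Room twin below twin."""
    for child in twin.contains:
        if child.model == "Room":
            yield child
        yield from rooms_under(child)

def average_temperature(twin: Twin) -> Optional[float]:
    """Average the latest temperature of all rooms below twin, or None if there are none."""
    temps = [r.properties["temperature"] for r in rooms_under(twin) if "temperature" in r.properties]
    return sum(temps) / len(temps) if temps else None
```

Because the graph mirrors the physical layout, the same query works at any level: a floor averages its own rooms, and the building averages every room on every floor.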

Azure IoT Edge

Azure IoT Edge enables businesses to run Azure services, including machine learning, analytics, and AI, directly on IoT devices at the edge of the network. This reduces latency and enables faster decision-making by processing data locally, rather than relying solely on cloud-based processing.

IoT Edge is ideal for scenarios where real-time data processing is critical, such as autonomous vehicles, industrial automation, or monitoring of remote assets.
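Much of the benefit of edge processing comes from deciding locally what is worth sending to the cloud. The following minimal sketch shows that pattern in plain Python with hypothetical names. It is not an IoT Edge module and uses no Azure SDK. Out-of-range readings are forwarded at once, and all other readings are reduced to one summary per window.

```python
from statistics import mean
from typing import Iterable, List

def process_at_edge(readings: Iterable[float], threshold: float, window: int = 5) -> List[dict]:
    """Return the messages to forward: immediate alerts plus one summary per full window."""
    to_cloud: List[dict] = []
    batch: List[float] = []
    for value in readings:
        if value > threshold:
            to_cloud.append({"type": "alert", "value": value})  # forward right away
        batch.append(value)
        if len(batch) == window:
            to_cloud.append({"type": "summary", "mean": mean(batch), "max": max(batch)})
            batch.clear()
    # A trailing partial window stays on the device until it fills.
    return to_cloud
```

Ten raw readings with one spike become three messages: two window summaries and one alert.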

Azure IoT Central

Azure IoT Central is a fully managed IoT SaaS (Software as a Service) solution that simplifies the deployment, management, and monitoring of IoT applications. With IoT Central, businesses can quickly deploy IoT solutions without requiring deep technical expertise in cloud infrastructure.

It offers an intuitive interface for managing devices, setting up dashboards, and creating alerts. IoT Central significantly reduces the complexity and time required to deploy IoT systems.

Azure Time Series Insights

Azure Time Series Insights is a fully managed analytics and storage service for time-series data. It is specifically designed for handling large volumes of data generated by IoT devices, such as sensor data, telemetry data, and event logs.

Time Series Insights offers powerful visualization and querying capabilities, enabling businesses to uncover trends and patterns in their IoT data. This is especially useful for monitoring long-term performance, detecting anomalies, and optimizing processes.
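A rolling z-score is one example of this kind of analysis, independent of any particular Azure service. It flags readings that deviate sharply from the recent past. This is a generic statistical sketch, not the detection method used by Time Series Insights, and the window size and threshold are arbitrary assumptions.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

def rolling_anomalies(series: Sequence[float], window: int = 5,
                      z_limit: float = 3.0) -> List[Tuple[int, float]]:
    """Flag (index, value) pairs whose z-score against the preceding window exceeds z_limit."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all is unusual.
            if series[i] != mu:
                flagged.append((i, series[i]))
            continue
        if abs(series[i] - mu) / sigma > z_limit:
            flagged.append((i, series[i]))
    return flagged
```

For a sensor that hovers around 10 and then jumps to 30 once, only the jump is flagged. The readings after it are not flagged, because the spike widens the spread of the following windows.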

Optimizing IoT Operations with Azure

Azure IoT operations can be further optimized by integrating advanced technologies such as Artificial Intelligence (AI) and Machine Learning (ML). These technologies let businesses go beyond collecting and storing IoT data and derive actionable insights from it.

Predictive Maintenance

By analyzing IoT data, Azure IoT can help predict equipment failures before they occur. Using machine learning algorithms, businesses can identify patterns that indicate potential breakdowns and perform maintenance only when necessary, reducing downtime and maintenance costs.
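Production predictive-maintenance models are typically trained with tools such as Azure Machine Learning, but the core idea can be shown more simply. The sketch below substitutes plain least-squares linear extrapolation, not a machine learning model. It estimates how many hours remain before a steadily rising reading, such as bearing vibration, crosses a hypothetical alarm threshold. It assumes the timestamps are sorted and that the last one is the current time.

```python
from typing import Optional, Sequence

def hours_until_threshold(hours: Sequence[float], values: Sequence[float],
                          threshold: float) -> Optional[float]:
    """Fit a least-squares line to (hours, values) and estimate hours left until it crosses threshold."""
    n = len(hours)
    if n != len(values) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x, mean_y = sum(hours) / n, sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in hours)
    if sxx == 0:
        raise ValueError("observations must span more than one point in time")
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, values)) / sxx
    if slope <= 0:
        return None  # not trending toward the threshold
    intercept = mean_y - slope * mean_x
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - hours[-1])  # 0.0 means the trend line is already past the threshold
```

A reading that climbs 0.5 units per hour from 2.0 reaches a threshold of 5.0 three hours after the last observation at hour 3. That estimate could be used to schedule maintenance before the alarm level is reached.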

Smart Automation

Azure IoT enables businesses to automate processes based on real-time data. For example, in smart factories, devices can automatically adjust production lines based on environmental conditions, inventory levels, or supply chain disruptions, increasing efficiency and reducing human error.

Energy Management

Azure IoT can help businesses optimize energy usage by continuously monitoring energy consumption and adjusting operations accordingly. Smart building solutions, for example, can automatically control lighting, heating, and cooling systems to reduce energy waste and lower costs.
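A smart building rule of this kind can be as simple as lowering the heating setpoint when a space is unoccupied. The sketch below is a hypothetical thermostat rule in plain Python with arbitrary setpoints. In a real deployment, occupancy and temperature would arrive as device telemetry, and the resulting command would be sent back to the device.

```python
def hvac_command(temp_c: float, occupied: bool, heating_on: bool,
                 occupied_setpoint: float = 21.0, setback_setpoint: float = 16.0,
                 deadband: float = 0.5) -> bool:
    """Return whether heating should be on, with a setback when unoccupied and a deadband."""
    setpoint = occupied_setpoint if occupied else setback_setpoint
    if temp_c < setpoint - deadband:
        return True
    if temp_c > setpoint + deadband:
        return False
    return heating_on  # inside the deadband: keep the current state to avoid rapid cycling
```

The setback saves energy while the space is empty. The deadband keeps the heating from switching on and off every time the temperature drifts across the setpoint.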

Use Case

Azure IoT Operations Configuration Example: A Step-by-Step Guide

When configuring Azure IoT operations, you're setting up a system where devices can securely connect to the cloud, send telemetry data, and receive commands for actions. For a practical configuration example using Azure IoT Hub, a key service in Azure IoT operations, follow the tutorial linked below.

Tutorial: Send telemetry from an IoT Plug and Play device to Azure IoT Hub

Conclusion

Azure IoT operations are transforming how businesses leverage the Internet of Things to improve efficiency, enhance customer experiences, and unlock new revenue streams. With its powerful cloud infrastructure, end-to-end solutions, and integration with Microsoft’s suite of tools, Azure is a leading choice for businesses looking to capitalize on the potential of IoT.