In an era where artificial intelligence shapes every facet of our digital lives, a quiet revolution is unfolding in home labs and enterprise data centers alike. The AI Cluster paradigm represents a fundamental shift in how we approach machine intelligence—moving from centralized cloud dependency to distributed, on-premises deep thinking systems that respect privacy, reduce costs, and unlock unprecedented flexibility.

This exploration dives into the philosophy behind distributed AI inference, the tangible benefits of AI clusters, and the emerging frontier of mobile Neural Processing Units (NPUs) that promise to extend intelligent computing to the edge of our networks.

The Philosophy of Deep Thinking in Distributed Systems

Traditional AI deployment follows a client-server model: send your data to the cloud, receive processed results. This approach, while convenient, creates fundamental tensions with privacy, latency, and control. AI clusters invert this paradigm.

“Deep thinking isn’t just about model size—it’s about creating the conditions where complex reasoning can occur without artificial constraints imposed by network latency, privacy concerns, or API rate limits.”

An AI cluster operates on three core principles:

1. Locality of Computation

Data never leaves your network. Whether processing proprietary code, sensitive documents, or experimental research, the inference happens within your controlled environment. This isn’t just about security—it’s about creating a space for uninhibited exploration where the AI can engage with your full context.

2. Heterogeneous Resource Pooling

A cluster doesn’t discriminate between hardware. NVIDIA CUDA GPUs, Apple Silicon with Metal acceleration, and even CPU-only nodes work together. This democratizes AI access—you don’t need a $40,000 H100; your gaming PC, MacBook, and old server can contribute meaningfully.

3. Emergent Capabilities Through Distribution

When workers specialize based on their capabilities, the cluster develops emergent behaviors. Large models run on powerful nodes for complex reasoning, while smaller models handle quick queries on lighter hardware. The system self-organizes around its constraints.

Architecture of Thought: How AI Clusters Enable Deep Reasoning

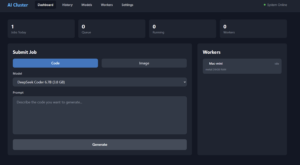

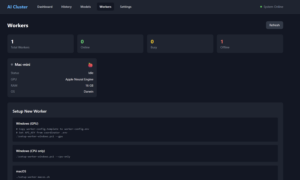

The AI Cluster architecture is deceptively simple yet profoundly effective. At its heart lies a coordinator—a Flask-based API server managing job distribution via Redis queues. Workers, running on diverse hardware, poll for jobs, download cached models, execute inference, and return results.

    ┌─────────────────────────────────────────────────────────────┐
    │                      User Request Flow                      │
    ├─────────────────────────────────────────────────────────────┤
    │                                                             │
    │  Browser/API Client                                         │
    │         │                                                   │
    │         ▼                                                   │
    │  ┌─────────────┐    ┌─────────────┐    ┌─────────────┐      │
    │  │ Coordinator │───▶│ Redis Queue │───▶│   Workers   │      │
    │  │   (Flask)   │    │ (Job Pool)  │    │  (GPU/CPU)  │      │
    │  └─────────────┘    └─────────────┘    └─────────────┘      │
    │         │                                     │             │
    │         │◀────────────────────────────────────┘             │
    │         │         Results + Metrics                         │
    │         ▼                                                   │
    │  ┌─────────────┐                                            │
    │  │  WebSocket  │ ───▶ Real-time Progress Updates            │
    │  └─────────────┘                                            │
    │                                                             │
    └─────────────────────────────────────────────────────────────┘
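The request flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's actual code: an in-process `queue.Queue` stands in for the Redis queue so the example runs without a server, and `submit_job`, `worker_loop`, `run_inference`, and `run_demo` are hypothetical names.

```python
import queue
import threading
import uuid

# Stand-in for the Redis job queue; a real deployment would use Redis here.
job_queue = queue.Queue()
results = {}                      # job_id -> output, filled in by workers
results_lock = threading.Lock()


def submit_job(prompt: str, model: str) -> str:
    """Coordinator side: enqueue a job and return its ID immediately."""
    job_id = uuid.uuid4().hex
    job_queue.put({"id": job_id, "prompt": prompt, "model": model})
    return job_id


def run_inference(model: str, prompt: str) -> str:
    """Hypothetical placeholder for the real model call."""
    return f"[{model}] echo: {prompt}"


def worker_loop(stop: threading.Event) -> None:
    """Worker side: poll for jobs, run inference, store the result."""
    while not stop.is_set():
        try:
            job = job_queue.get(timeout=0.1)
        except queue.Empty:
            continue  # nothing queued yet; poll again
        output = run_inference(job["model"], job["prompt"])
        with results_lock:
            results[job["id"]] = output
        job_queue.task_done()


def run_demo() -> str:
    """Start one worker, submit one job, and wait for its result."""
    stop = threading.Event()
    worker = threading.Thread(target=worker_loop, args=(stop,), daemon=True)
    worker.start()
    job_id = submit_job("Summarize this diff", "small-7b")
    job_queue.join()              # blocks until the worker calls task_done()
    stop.set()
    worker.join()
    return results[job_id]


if __name__ == "__main__":
    print(run_demo())
```

Because `submit_job` returns an ID instead of blocking on the result, the caller is free to do other work and collect the output later, which is the property the queue-based design relies on.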

What makes this architecture conducive to deep thinking?

Asynchronous Processing: Jobs enter a queue, freeing users from synchronous waiting. This enables batch processing of complex, multi-step reasoning tasks that might take minutes rather than seconds.

Context Preservation: The system supports project uploads—entire codebases can be zipped and provided as context. When the AI generates code, it does so with full awareness of existing patterns, dependencies, and architectural decisions.
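One way to turn an uploaded archive into prompt context is to concatenate its text files up to a size budget. The sketch below is an assumption about how this could work, not the project's implementation; `build_context`, the `TEXT_SUFFIXES` allow-list, and the character budget are all hypothetical.

```python
import io
import zipfile

# Assumed allow-list of file types worth showing to the model.
TEXT_SUFFIXES = (".py", ".md", ".txt", ".json", ".toml")


def build_context(zip_bytes: bytes, max_chars: int = 20_000) -> str:
    """Concatenate text files from a zipped project into one context string."""
    parts = []
    used = 0
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in sorted(archive.namelist()):
            if not name.endswith(TEXT_SUFFIXES):
                continue  # skip directories, binaries, and unknown file types
            text = archive.read(name).decode("utf-8", errors="replace")
            block = f"### {name}\n{text}\n"
            if used + len(block) > max_chars:
                break  # stop before exceeding the context budget
            parts.append(block)
            used += len(block)
    return "".join(parts)


def make_demo_zip() -> bytes:
    """Build a tiny in-memory project archive for demonstration."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as archive:
        archive.writestr("app.py", "print('hi')")
        archive.writestr("logo.png", b"\x89PNG")
        archive.writestr("README.md", "# Demo")
    return buf.getvalue()


if __name__ == "__main__":
    print(build_context(make_demo_zip()))
```

A real system would likely budget by tokens rather than characters and rank files by relevance; the fixed alphabetical order here only keeps the example deterministic.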

Model Selection Flexibility: From 6.7 billion parameter models for quick responses to 70 billion parameter behemoths for nuanced reasoning, the cluster dynamically routes jobs to appropriate workers based on model requirements and hardware capabilities.
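The routing idea can be illustrated with a best-fit rule: send each job to the smallest idle worker that can hold the model, so larger nodes stay free for larger models. This is a sketch under assumptions; the `Worker` fields and `pick_worker` are hypothetical, and the article does not specify the coordinator's actual matching logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Worker:
    name: str
    memory_gb: float   # VRAM or unified memory available for inference
    busy: bool = False


def pick_worker(workers: list, required_gb: float) -> Optional[Worker]:
    """Return the smallest idle worker that fits the model, or None."""
    candidates = [
        w for w in workers if not w.busy and w.memory_gb >= required_gb
    ]
    return min(candidates, key=lambda w: w.memory_gb, default=None)


if __name__ == "__main__":
    pool = [
        Worker("gaming-pc", 24),
        Worker("macbook", 16),
        Worker("old-server", 8),
    ]
    for need in (6, 20, 48):
        chosen = pick_worker(pool, need)
        print(need, "GB ->", chosen.name if chosen else "no worker fits")
```

When no worker fits, returning `None` lets the caller decide whether to leave the job queued or reject it.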

The Tangible Benefits of Local AI Clusters

Beyond philosophical advantages, AI clusters deliver concrete benefits that compound over time:

| Benefit | Cloud API Approach | AI Cluster Approach |
|---|---|---|
| Cost | Per-token billing, unpredictable at scale | One-time model download, electricity only |
| Privacy | Data sent to third-party servers | Data never leaves your network |
| Availability | Dependent on internet, subject to outages | Works offline after initial setup |
| Rate Limits | Throttled during high demand | Limited only by your hardware |
| Customization | Fixed model versions, limited tuning | Choose any GGUF model, quantization level |
| Latency | Internet round-trip overhead on every request | LAN round-trip (typically sub-millisecond) plus inference time |

Real-World Scenario: Code Generation at Scale

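Consider a development team generating AI-assisted code reviews for 1,000 pull requests monthly. With cloud APIs charging $0.01-0.03 per 1K tokens, the bill depends on how many tokens each review consumes: at tens of thousands of tokens per review it reaches hundreds of dollars a month, and large diffs or multi-pass reviews push it into the thousands. An AI cluster running on existing hardware reduces the marginal cost to electricity, often pennies per day.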
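The arithmetic behind this scenario is easy to check. In the sketch below, the 1,000 reviews and the $0.01-0.03 per 1K token range come from the scenario; the 20,000 tokens per review, the 300 W draw, the 8 hours of daily load, and the $0.15 per kWh price are illustrative assumptions, so substitute your own figures.

```python
def monthly_api_cost(reviews: int, tokens_per_review: int,
                     usd_per_1k_tokens: float) -> float:
    """Cloud cost: total tokens, billed per thousand."""
    return reviews * tokens_per_review / 1000 * usd_per_1k_tokens


def monthly_electricity_cost(watts: float, hours_per_day: float,
                             usd_per_kwh: float, days: int = 30) -> float:
    """Local cost: energy drawn while the node is under load."""
    return watts / 1000 * hours_per_day * days * usd_per_kwh


REVIEWS = 1_000
TOKENS_PER_REVIEW = 20_000   # assumption: prompt plus completion per review

if __name__ == "__main__":
    low = monthly_api_cost(REVIEWS, TOKENS_PER_REVIEW, 0.01)
    high = monthly_api_cost(REVIEWS, TOKENS_PER_REVIEW, 0.03)
    local = monthly_electricity_cost(300, 8, 0.15)   # assumed node and tariff
    print(f"Cloud API: ${low:.2f} to ${high:.2f} per month")
    print(f"Local electricity: ${local:.2f} per month")
```

Under these assumptions the cloud bill lands between $200 and $600 a month, against roughly $11 of electricity. The comparison leaves out hardware purchase and maintenance, which matter if the cluster is not built from machines you already own.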

The Mobile NPU Frontier: Extending Intelligence to the Edge

Perhaps the most exciting development in distributed AI isn’t happening in data centers; it’s happening in your pocket. Modern smartphones contain dedicated Neural Processing Units capable of trillions of operations per second with remarkable energy efficiency.

Understanding Mobile NPUs

Mobile NPUs are specialized accelerators designed for machine learning workloads:

- Apple Neural Engine: 16 cores delivering up to 35 TOPS (trillion operations per second) on iPhone and iPad

- Qualcomm Hexagon NPU: Integrated into Snapdragon processors, offering up to 45 TOPS on flagship Android devices

- Samsung Exynos NPU: Dedicated AI blocks for on-device inference

- Google Tensor TPU: Custom silicon optimized for Pixel devices

Why Mobile NPUs Matter for AI Clusters

The integration of mobile NPUs into AI cluster architectures represents a paradigm shift:

Ubiquitous Compute Availability

Every smartphone becomes a potential worker node. A team of 10 people effectively adds 10 NPU accelerators to the cluster during work hours—and these aren’t trivial resources. Modern mobile NPUs can run 3-7 billion parameter models in quantized formats.

Energy Efficiency Advantage

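Mobile NPUs are engineered for battery-constrained environments, so they are optimized for performance-per-watt rather than peak throughput. For inference workloads they can be far more power-efficient than desktop GPUs, with figures of 10-100x sometimes quoted, although the actual ratio depends on the model, precision, and batch size. For always-on edge inference, this efficiency is transformative.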

Latency at the Edge

For applications requiring immediate response—voice interfaces, real-time code suggestions, on-device translation—mobile NPUs eliminate network round-trips entirely. The AI thinks where you are, not where the server is.

Integration Pathways for Mobile NPU Workers

Integrating mobile devices into an AI cluster requires careful consideration of their unique constraints:

Mobile NPU Integration Architecture:

    ┌─────────────────────────────────────────────────────────────┐
    │                     Coordinator Server                      │
    │  ┌─────────────────────────────────────────────────────┐    │
    │  │      Job Queue with Device Capability Matching      │    │
    │  │                                                     │    │
    │  │  [Complex Job: 70B Model] ───▶ Desktop GPU Worker   │    │
    │  │  [Medium Job: 7B Model]   ───▶ MacBook Metal        │    │
    │  │  [Light Job: 3B Model]    ───▶ Mobile NPU Worker    │    │
    │  │  [Edge Job: 1B Model]     ───▶ Any Available NPU    │    │
    │  └─────────────────────────────────────────────────────┘    │
    └─────────────────────────────────────────────────────────────┘

Mobile Workers:

    ┌──────────────┐  ┌──────────────┐  ┌──────────────┐
    │  iPhone 15   │  │   Pixel 8    │  │  Galaxy S24  │
    │  Neural Eng  │  │  Tensor TPU  │  │  Exynos NPU  │
    │  (15 TOPS)   │  │  (27 TOPS)   │  │  (20 TOPS)   │
    └──────────────┘  └──────────────┘  └──────────────┘

The coordinator must understand device capabilities—battery level, thermal state, NPU availability, and supported model formats. Jobs are then intelligently routed:

- Background inference: When devices are charging and idle, they can process larger batches

- On-demand edge inference: Immediate local processing for time-sensitive requests

- Federated processing: Distribute large jobs across multiple mobile devices for parallel execution
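The capability checks described above could be expressed as a small decision function. This is a sketch under assumptions rather than the coordinator's real logic: the `DeviceState` fields, the thermal-state labels, the 30% battery floor, and `routing_mode` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    battery_pct: int          # 0-100
    charging: bool
    idle: bool
    thermal_state: str        # assumed labels: nominal, fair, serious, critical
    npu_available: bool
    supported_formats: tuple  # e.g. ("gguf",); format names are illustrative


def routing_mode(device: DeviceState, model_format: str,
                 min_battery_pct: int = 30) -> str:
    """Classify a device as 'background', 'on_demand', or 'skip' for a job."""
    if not device.npu_available:
        return "skip"
    if model_format not in device.supported_formats:
        return "skip"
    if device.thermal_state in ("serious", "critical"):
        return "skip"          # never add load to a device that is already hot
    if device.charging and device.idle:
        return "background"    # plugged in and unused: safe for larger batches
    if device.battery_pct >= min_battery_pct:
        return "on_demand"     # short, time-sensitive requests only
    return "skip"


if __name__ == "__main__":
    phone = DeviceState(80, False, False, "nominal", True, ("gguf",))
    print(routing_mode(phone, "gguf"))
```

Checking the disqualifying conditions first keeps the policy conservative: a device is only used when nothing argues against it.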

Deep Thinking: The Cognitive Benefits of Distributed AI

Beyond technical metrics, AI clusters enable qualitative improvements in how we interact with artificial intelligence:

Unhurried Reasoning

Cloud APIs optimize for throughput and revenue. Local clusters optimize for quality. When you’re not paying per-token, you can allow the model to “think” longer, generate multiple candidates, and self-critique. This creates space for emergent reasoning patterns that rushed inference precludes.
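The "generate multiple candidates, then self-critique" pattern is often called best-of-n sampling. A minimal sketch follows; `best_of_n` and the two stub functions are hypothetical, and in practice `generate` and `critique` would both be calls to a local model, with the critique returning a numeric score.

```python
from typing import Callable


def best_of_n(prompt: str,
              generate: Callable[[str, int], str],
              critique: Callable[[str, str], float],
              n: int = 5) -> str:
    """Generate n candidates and return the one the critique scores highest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(prompt, seed) for seed in range(n)]
    return max(candidates, key=lambda c: critique(prompt, c))


def stub_generate(prompt: str, seed: int) -> str:
    """Placeholder generator: output length varies with the seed."""
    return "x" * (seed + 1)


def stub_critique(prompt: str, candidate: str) -> float:
    """Placeholder critic: prefers candidates of length 3."""
    return -abs(len(candidate) - 3)


if __name__ == "__main__":
    print(best_of_n("write a function", stub_generate, stub_critique))
```

Each extra candidate multiplies inference time, which is affordable on a local cluster precisely because there is no per-token bill.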

Contextual Continuity

With project uploads and persistent context, the AI develops a coherent understanding of your work over time. It’s not starting from zero with each request—it’s building on accumulated knowledge of your codebase, your patterns, your preferences.

Experimental Freedom

Without cost concerns, developers explore more freely. Ask the AI to generate ten different implementations. Request detailed explanations of every design decision. Iterate on prompts until they’re perfect. This experimental abundance is where breakthrough insights emerge.

“The best tool is the one you use without hesitation. When AI assistance is free and private, you integrate it into your workflow at the speed of thought.”

Building Your Own AI Cluster: Key Considerations

For those inspired to build their own distributed AI infrastructure, consider these foundational elements:

Hardware Requirements

| Model Size | Minimum VRAM/RAM | Recommended Hardware |
|---|---|---|
| 3-7B (Q4) | 4-8 GB | Entry GPU, Apple M1, Mobile NPU |
| 13-14B (Q4) | 10-16 GB | RTX 3060+, Apple M1 Pro+ |
| 33-34B (Q4) | 20-24 GB | RTX 3090/4090, Apple M2 Max+ |
| 70B (Q4) | 40-48 GB | Multi-GPU, Apple M2 Ultra |
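A rough rule of thumb helps sanity-check figures like these: weight memory is parameter count times bits per weight, plus headroom for the KV cache and runtime buffers. The sketch below assumes about 4.5 bits per weight for a 4-bit quantization (the block scales add to the nominal 4 bits) and a 20% overhead factor; both numbers and the function name are assumptions, and real usage varies with context length and runtime.

```python
def estimate_memory_gb(params_billion: float,
                       bits_per_weight: float = 4.5,
                       overhead: float = 1.2) -> float:
    """Approximate memory needed to run a quantized model, in GB."""
    # 1e9 parameters * bits / 8 bits-per-byte / 1e9 bytes-per-GB
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * overhead


if __name__ == "__main__":
    for size in (7, 13, 34, 70):
        print(f"{size}B at ~4-bit: about {estimate_memory_gb(size):.1f} GB")
```

With these assumptions a 70B model comes out near 47 GB and a 7B model near 5 GB, in line with the table's ranges.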

Network Architecture

Isolate your cluster on a dedicated subnet for security. The AI Cluster architecture uses 10.10.10.0/24 by default, with API key authentication and Redis password protection. All traffic stays internal—the coordinator never exposes endpoints to the internet.

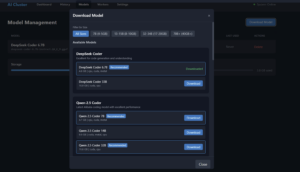
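The two checks described in this section, subnet membership and API key authentication, can be sketched with the standard library alone. The `10.10.10.0/24` range comes from the text above; `is_authorized` and the way the key is passed in are hypothetical, and a real Flask coordinator would wire a check like this into its request handling.

```python
import hmac
import ipaddress

# Default cluster subnet named in the text.
CLUSTER_SUBNET = ipaddress.ip_network("10.10.10.0/24")


def is_authorized(client_ip: str, presented_key: str, expected_key: str) -> bool:
    """Accept a request only from inside the subnet and with a matching key."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed address
    if addr not in CLUSTER_SUBNET:
        return False  # request came from outside the cluster network
    # Constant-time comparison avoids leaking key contents through timing.
    return hmac.compare_digest(presented_key.encode(), expected_key.encode())


if __name__ == "__main__":
    print(is_authorized("10.10.10.42", "secret", "secret"))
    print(is_authorized("192.168.1.5", "secret", "secret"))
```

An address check like this is a second layer, not a replacement for firewall rules, since source addresses can be misreported behind proxies.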

Model Selection Strategy

Choose models that match your primary use cases:

- Code generation: DeepSeek Coder V2 (16B), Qwen 2.5 Coder (32B)

- General reasoning: Mixtral, Llama 3

- Quick responses: Smaller 7B models with aggressive quantization

The Future: Convergence of Cloud, Edge, and Mobile

The trajectory is clear: AI inference is becoming increasingly distributed. The future cluster won’t distinguish between a rack-mounted server and a smartphone—it will see a heterogeneous pool of capabilities, dynamically allocating workloads based on real-time conditions.

Key developments to watch:

- Improved mobile inference frameworks: Core ML, NNAPI, and TensorFlow Lite are rapidly closing the gap with desktop frameworks

- Federated learning integration: Clusters that not only infer but continuously improve through distributed training

- Hybrid cloud-edge architectures: Local clusters handling sensitive/frequent workloads while burst capacity comes from cloud providers

- Specialized edge accelerators: Dedicated NPU devices (like Coral TPU) at $50-100 price points

Conclusion: Thinking Without Boundaries

AI clusters represent more than a technical architecture: they embody a philosophy of democratized intelligence. By distributing computation across diverse hardware, keeping data private, and eliminating per-token usage costs, we create conditions for genuine deep thinking.

The addition of mobile NPUs extends this philosophy to its logical conclusion: intelligence that follows you, processes where you are, and thinks at the speed your context demands.

Whether you’re a solo developer in a home lab or an enterprise team building internal AI infrastructure, the principles remain constant: maximize locality, embrace heterogeneity, and design for the deep thinking that emerges when artificial intelligence is liberated from artificial constraints.

Start Your Journey

The AI Cluster project is open source under AGPL-3.0, with commercial licensing available. Explore the architecture, deploy your first worker, and experience what it means to have an AI that truly works for you.

Components included: Flask coordinator, universal Python worker, React dashboard, and comprehensive documentation for Proxmox deployment.