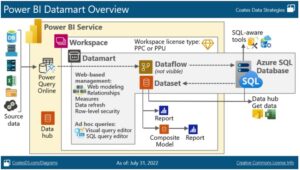

Microsoft Power BI is a data visualization tool that turns data into an opportunity. We want data that’s ready for analytics, to populate visuals, reports and dashboards, so we can quickly turn our volumes of data into actionable insights. Datamart, Dataflow and Dataset are all Power BI components dealing with data. In this blog let’s learn the differences between these three components, when and where one can use each, how they work together besides other components of Power BI.

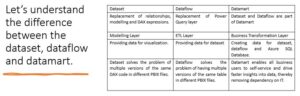

Dataset:

Power BI datasets are considered as a source of data what we used for reporting and visualization. Datasets are the object that contains the connection to the data source, data tables, the relationship between tables, DAX calculations and the data itself. Power BI Dataset also enable us to establish connection to published report, and create your own report based on published dataset. All tables and columns can be viewed in data tab of Power BI Desktop and the entire dataset is displayed in Power BI Service under ‘My Workspace’. So, anything somehow related to the data is a part of the dataset.

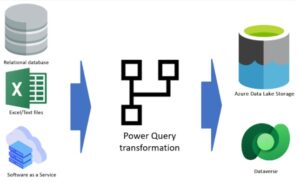

Dataflow:

Dataflow is the data transformation component of Power BI, which is independent of any other Power BI artifacts. It is a power query process that runs in the cloud and stores the data in Azure Data Lake storage or Dataverse. Dataflow is run by Power Query engine and Power Query Editor Online. If you are consistently re-using same tables in multiple files, dataflow is highly recommended in such scenarios that means dataflow will provide you a re-usable ETL (Extract-Transform-Load) component.

Datamart:

Datamart comprised of three basic components Dataflow, Azure SQL Database (as a data warehouse), and Dataset. Datamart comes with numerous amounts of benefits such as relational database analytics can be easily performed by self-serviced users which means there is no need of database administration. Datamart provide end-to-end data ingestion, preparation, and exploration with SQL, including no-code experiences.

Which should I use?

When implementation stages include getting data from the source, doing the transformation, loading the data, writing DAX expressions, and then visualization. Power BI Datamart allows us to build most of that in one combined structure.

When it seems that transformation is difficult. In such cases data transformation needs to be isolated from the data source so that just in case the source changes, the solution should work with minimal changes required. This can be solved by including dataflows in the transformation architecture.

Whereas when it comes to dataset, it itself is an important component. If someone is implementing an architecture in which the data transformation is done using another service such as Azure Data Factory and the data warehouse is in Azure Synapse. Such data models can be built using Power BI Dataset with some calculations on top.

Happy Reading!!

Nice & Informative blog…keep it up!

Great content Divya! Thank you for sharing

Thank you!