Essbase provides two methods of clustering: active-active and active-passive. An active-active cluster is read-only and better suited to reporting, whereas an active-passive cluster supports write-back and can therefore be paired with Hyperion Planning, for example. Active-passive Essbase clustering works very well in high availability (HA) environments, where a failover from a primary to a secondary server in a different location must trigger a recovery response in real time, or during a disaster recovery (DR) exercise. In this blog, we go over the details of building an active-passive Essbase cluster on Windows servers in an HA system, using SQL Server as the relational database.

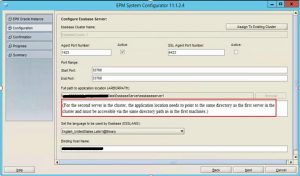

An active-passive Essbase cluster has two nodes: a primary and a secondary. We configure Essbase on each node (server) as usual, using the EPM configurator. Note that the familiar Essbase ARBORPATH needs to be a shared location (on either SAN or NAS storage) between the nodes; the fine print on the server configuration screen says as much.

On the secondary node, click ‘Assign to Existing Cluster’ to join the cluster that was defined during primary node configuration. To enable failover, additional configuration is necessary:

- In the Essbase opmn.xml file on the primary node, find the following XML tags and make the required changes, for instance to host names and ports, the Essbase cluster name, service weight, etc. Note that SSL is not enabled (set to false) in this case.

<notification-server interface="any">

<ipaddr remote="primary host"/>

<port local="6711" remote="6712"/>

<ssl enabled="false" wallet-file="E:\Oracle\Middleware\user_projects\epmsystem1\config\OPMN\opmn\wallet"/>

<topology>

<nodes list="primary host:6712,secondary host:6712"/>

</topology>

</notification-server>

<ias-component id="EssbaseCluster name">

<process-type id="EssbaseAgent" module-id="ESS" service-failover="1" service-weight="101">

<process-set id="AGENT" restart-on-death="false">

- In the Essbase opmn.xml file on the secondary node, find the following XML tags and make similar changes:

<ssl enabled="false" wallet-file="E:\Oracle\Middleware\user_projects\epmsystem1\config\OPMN\opmn\wallet"/>

<topology>

<nodes list="secondary host:6712,primary host:6712"/>

</topology>

<ias-component id="Essbase cluster name">

<process-type id="EssbaseAgent" module-id="ESS" service-failover="1" service-weight="100">

<process-set id="AGENT" restart-on-death="false">

- In Essbase.cfg, add this line, without the quotes: "FailoverMode true"
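
The opmn.xml edits above are easy to get out of sync between the two nodes. As a minimal sketch (not an Oracle tool), the Python below reads two trimmed-down fragments and checks three things: that both topology lists name the same host:port entries, that service-failover is 1 on both nodes, and that the primary carries the higher service weight. The host names `essnode1` and `essnode2`, the cluster name `EssbaseCluster1`, and all function names are hypothetical. The rule that both node lists should match is an assumption of this sketch, not something the configurator enforces.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down fragment; a real opmn.xml contains much more.
PRIMARY_XML = """
<opmn>
  <notification-server interface="any">
    <ipaddr remote="essnode1"/>
    <port local="6711" remote="6712"/>
    <ssl enabled="false"/>
    <topology>
      <nodes list="essnode1:6712,essnode2:6712"/>
    </topology>
  </notification-server>
  <ias-component id="EssbaseCluster1">
    <process-type id="EssbaseAgent" module-id="ESS"
                  service-failover="1" service-weight="101">
      <process-set id="AGENT" restart-on-death="false"/>
    </process-type>
  </ias-component>
</opmn>
"""

# The secondary fragment differs only in host order and service weight.
SECONDARY_XML = (
    PRIMARY_XML
    .replace('<ipaddr remote="essnode1"/>', '<ipaddr remote="essnode2"/>')
    .replace('list="essnode1:6712,essnode2:6712"',
             'list="essnode2:6712,essnode1:6712"')
    .replace('service-weight="101"', 'service-weight="100"')
)


def local_name(tag):
    """Drop a namespace prefix, e.g. '{uri}nodes' -> 'nodes'."""
    return tag.rsplit("}", 1)[-1]


def find_first(root, name, elem_id=None):
    """Return the first element with this local name (and id, if given)."""
    for elem in root.iter():
        if local_name(elem.tag) != name:
            continue
        if elem_id is None or elem.get("id") == elem_id:
            return elem
    return None


def read_settings(xml_text):
    """Extract the failover-related settings from one opmn.xml text."""
    root = ET.fromstring(xml_text)
    nodes = find_first(root, "nodes")
    agent = find_first(root, "process-type", elem_id="EssbaseAgent")
    return {
        "nodes": {entry.strip() for entry in nodes.get("list").split(",")},
        "failover": agent.get("service-failover"),
        "weight": int(agent.get("service-weight")),
    }


def check_pair(primary_xml, secondary_xml):
    """Return a list of human-readable problems; empty means consistent."""
    p, s = read_settings(primary_xml), read_settings(secondary_xml)
    problems = []
    if p["nodes"] != s["nodes"]:
        problems.append("topology node lists differ between nodes")
    if p["failover"] != "1" or s["failover"] != "1":
        problems.append("service-failover is not 1 on both nodes")
    if p["weight"] <= s["weight"]:
        problems.append("primary service-weight should exceed secondary")
    return problems


print(check_pair(PRIMARY_XML, SECONDARY_XML) or "no problems found")
```

To check real files, read each node's opmn.xml from disk and pass the two strings to `check_pair`; the helpers match on local tag names, so a default namespace on the root element does not get in the way.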

Now comes an important caveat. Because SQL Server is the database repository, the DataDirect driver protocol must be updated in the EPM registry, or Essbase will crash on startup. Always back up your Shared Services database before making any registry change.

- Search the EPM registry for “DataDirect 7.1 SQL Server Protocol”. There should be two occurrences, one for each Essbase node. Make a note of the unique Object ID of each.

- Update the driver descriptor for each node:

epmsys_registry.bat updateproperty #<unique Object ID #1>/@BPM_SQLServer_DriverDescriptor "DataDirect 7.1 SQL Server Wire Protocol"

epmsys_registry.bat updateproperty #<unique Object ID #2>/@BPM_SQLServer_DriverDescriptor "DataDirect 7.1 SQL Server Wire Protocol"
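
Because the Object IDs are long and easy to mistype, it can help to generate the two command lines rather than type them by hand. The following is a small sketch that only builds the command strings shown above; it does not run them. The function name and the example IDs are hypothetical placeholders, so substitute the IDs found in your own registry.

```python
def build_update_commands(object_ids,
                          descriptor="DataDirect 7.1 SQL Server Wire Protocol"):
    """Build one epmsys_registry.bat command line per registry Object ID."""
    if len(set(object_ids)) != len(object_ids):
        raise ValueError("each Essbase node should have its own Object ID")
    prop = "BPM_SQLServer_DriverDescriptor"
    return [
        f'epmsys_registry.bat updateproperty #{oid}/@{prop} "{descriptor}"'
        for oid in object_ids
    ]


# Placeholder IDs for illustration only; use the ones from your registry.
for command in build_update_commands(["OBJECT_ID_NODE_1", "OBJECT_ID_NODE_2"]):
    print(command)
```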

The cluster is now ready. On startup, the Essbase agent runs on the primary node by default. If the primary Essbase service or server goes offline, the agent seamlessly fails over to the secondary node without interrupting other dependent applications.
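
To make the role of the service weights concrete, here is a toy model of the selection rule, assuming the agent is placed on the online node with the highest service-weight (101 on the primary and 100 on the secondary in this setup). It illustrates the idea only: it is not how OPMN is implemented, it says nothing about failback once the primary returns, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    service_weight: int
    online: bool = True


def active_node(nodes):
    """Return the online node with the highest service weight, or None."""
    candidates = [node for node in nodes if node.online]
    return max(candidates, key=lambda node: node.service_weight, default=None)


primary = Node("primary", service_weight=101)
secondary = Node("secondary", service_weight=100)
cluster = [primary, secondary]

print(active_node(cluster).name)   # primary

primary.online = False             # simulate the primary going offline
print(active_node(cluster).name)   # secondary
```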

A second caveat regarding SQL Server: databases in an HA solution are likely to be clustered as well, using SQL Server mirroring. In a DR scenario where the primary SQL node is unavailable and the mirror is switched to its secondary node, a bit of intervention is needed before the Essbase cluster can fail over successfully. I will go over the steps to resolve this issue in another blog.