Imagine a large organization with many teams and Jenkins pipeline jobs. Or imagine one or two people who have to maintain many different pipeline jobs on a single Jenkins master. Anyone who has maintained source code at any scale knows the basic tactics for keeping it maintainable: consistency and reuse. Jenkins offers a few mechanisms that support both. We'll call them intermediate or advanced features, but they are relatively simple to implement: global properties and shared libraries. In this blog, we'll explain these features and walk through a few examples.

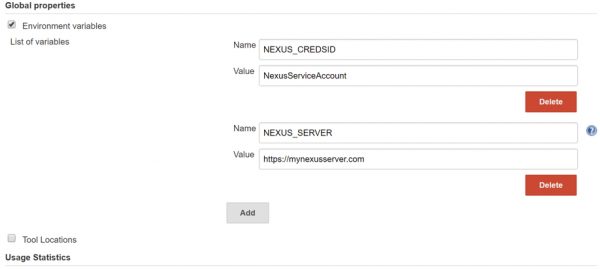

Global properties can be found at Jenkins->Manage Jenkins->Configure System under the Global properties section. They are installed by the Global Variable String Parameter Plugin. Every Jenkins job on that master can use these global properties.

These simple name/value string pairs can help a great deal with reuse and maintainability. On a recent project, we used Sonatype Nexus Repository v3 to store all of the packages built by Jenkins. At the time of writing, the Sonatype plugin did not support Nexus 3, so we used the Nexus Artifact Uploader Jenkins plugin instead. This plugin was easy to use; however, it cannot refer to the Nexus login information specified in Jenkins->Manage Jenkins->Configure System. Instead, you need to provide the Nexus repository URL, protocol, and credentials explicitly within the pipeline stage block. We didn't want to specify the Nexus repository URL and login credentials (we use a service account for all uploads) in every pipeline job, because those parameters should rarely, if ever, change. And if they did change, we would prefer to update them in only one place.

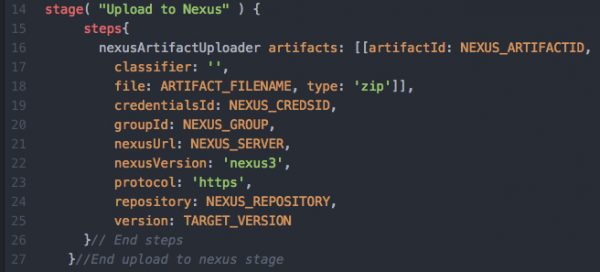

The great thing about pipeline code is that global properties are referenced exactly like local variables. In the example pipeline stage code above, NEXUS_SERVER (line 21) and NEXUS_CREDSID (line 19) are global properties defined on the Jenkins master (the value of NEXUS_CREDSID is actually the ID of a Jenkins credential). The other variables (NEXUS_ARTIFACTID, ARTIFACT_FILENAME, etc.) are defined at the job level because they change from job to job. We use this exact stage block in all of our pipeline jobs to upload any built package to Nexus, so we specify the nexusUrl and credentialsId parameters only once, in global properties, for all jobs to use. If the Nexus URL or our service account ever needs to change, we update these properties in one place and the change takes effect in all jobs.
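For reference, here is a minimal sketch of such a stage in a declarative Jenkinsfile. It is not our exact stage: it assumes the NEXUS_SERVER and NEXUS_CREDSID global properties exist as described above, and the job-level values (group ID, repository, artifact ID, file name), the `nexus3`/`https` settings, the `tgz` type, and the versioning scheme are hypothetical placeholders.

```groovy
// Minimal sketch: upload a build artifact to Nexus 3 with the Nexus Artifact
// Uploader plugin. NEXUS_SERVER and NEXUS_CREDSID are assumed to be global
// properties; everything in the environment block is a hypothetical job-level value.
pipeline {
    agent any
    environment {
        NEXUS_GROUPID     = 'com.example'
        NEXUS_REPOSITORY  = 'npm-builds'
        NEXUS_ARTIFACTID  = 'my-node-app'
        ARTIFACT_FILENAME = 'my-node-app.tgz'
    }
    stages {
        stage('Upload to Nexus') {
            steps {
                nexusArtifactUploader(
                    nexusVersion: 'nexus3',
                    protocol: 'https',                  // assumption: Nexus is served over HTTPS
                    nexusUrl: env.NEXUS_SERVER,         // global property: host[:port], no protocol
                    credentialsId: env.NEXUS_CREDSID,   // global property: ID of a Jenkins credential
                    groupId: env.NEXUS_GROUPID,
                    version: "1.0.${env.BUILD_NUMBER}", // hypothetical versioning scheme
                    repository: env.NEXUS_REPOSITORY,
                    artifacts: [[
                        artifactId: env.NEXUS_ARTIFACTID,
                        classifier: '',
                        file: env.ARTIFACT_FILENAME,
                        type: 'tgz'
                    ]]
                )
            }
        }
    }
}
```

Only the environment block differs between jobs; the Nexus server and credentials come from the master's global properties, which is what keeps the stage copyable as-is.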

Jenkins also lets you build your own helper functions through Shared Libraries. As the number of Jenkins pipelines in your organization grows, you'll probably notice some repeating job patterns. As usage matures, we mostly stop writing pipeline jobs from scratch. Instead, we look for an existing job whose flow resembles what we need, copy its code, and modify it accordingly. As we keep copying code into new jobs, we notice the same code appearing over and over again in many of them. Rather than repeating a code pattern in multiple places (a violation of the DRY principle: Don't Repeat Yourself), take things a step further and define that pattern as a helper function that is managed and maintained in one global location. That, in a nutshell, is what shared libraries are for.
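As a minimal illustration of the idea, the Nexus upload stage described earlier could become a shared library step. The file name `vars/uploadToNexus.groovy`, its argument names, and the `nexus3`/`https` settings are hypothetical; the step assumes the NEXUS_SERVER and NEXUS_CREDSID global properties exist.

```groovy
// vars/uploadToNexus.groovy (hypothetical shared library step).
// A file under vars/ becomes a global step named after the file; call() is its body.
def call(Map args) {
    // Fail fast with a clear message if a job forgets a required value.
    for (key in ['groupId', 'version', 'repository', 'artifactId', 'file']) {
        if (!args[key]) {
            error("uploadToNexus: missing required argument '${key}'")
        }
    }
    nexusArtifactUploader(
        nexusVersion: 'nexus3',
        protocol: 'https',                 // assumption: Nexus is served over HTTPS
        nexusUrl: env.NEXUS_SERVER,        // global property
        credentialsId: env.NEXUS_CREDSID,  // global property
        groupId: args.groupId,
        version: args.version,
        repository: args.repository,
        artifacts: [[
            artifactId: args.artifactId,
            classifier: '',
            file: args.file,
            type: args.type ?: 'tgz'       // hypothetical default artifact type
        ]]
    )
}
```

A job would then replace its whole upload stage body with a single call such as `uploadToNexus(groupId: 'com.example', version: "1.0.${env.BUILD_NUMBER}", repository: 'npm-builds', artifactId: 'my-node-app', file: 'my-node-app.tgz')`.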

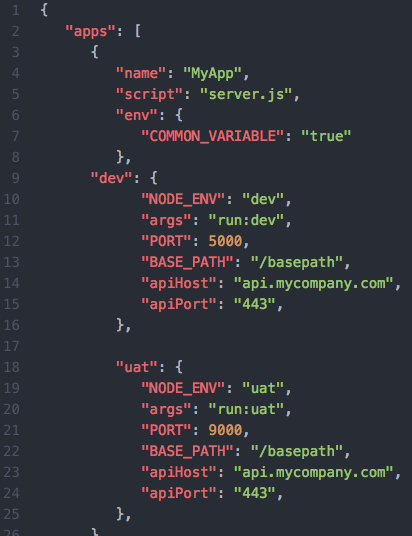

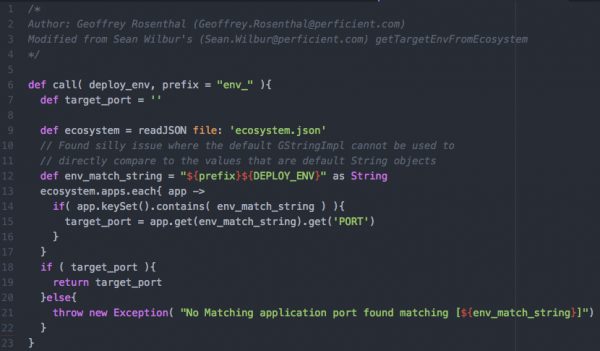

In our project we were building Node.js applications and using Jenkins to run the scripts that deploy each application to an environment. Our application included a PM2 ecosystem.json file (PM2 Deployment) that defined environment-specific properties (DEV, QA, UAT, PROD) for the application. The deployment stages of our pipeline needed to read the ecosystem.json file to find and use those environment-specific properties. We identified this phase of the pipeline as a common pattern, created a shared library for it, and then simply referenced the library function in each pipeline job. This approach ensures consistency and correctness and lowers the overall maintenance burden for this section of code.

Here is a snippet of our ecosystem.json file:

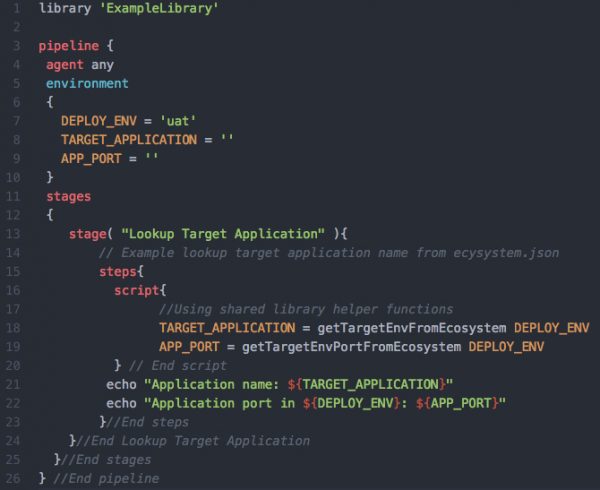
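To make the lookup concrete outside of Jenkins, here is a minimal standalone Groovy sketch. The JSON layout (an `apps` array whose entries carry PM2-style `env_<ENV>` blocks), the app name, the ports, and the `findAppForEnv` helper are all hypothetical; your ecosystem file may organize its environment properties differently.

```groovy
import groovy.json.JsonSlurper

// Hypothetical ecosystem.json content, following PM2's convention of
// per-environment "env_<name>" blocks inside each app entry.
def ecosystemText = '''
{
  "apps": [
    { "name": "orders-api",
      "script": "server.js",
      "env_DEV": { "PORT": 3001 },
      "env_QA":  { "PORT": 3002 } }
  ]
}
'''

// Return the name and environment block of the first app that defines
// prefix + envName, or null if no app does.
def findAppForEnv(Map ecosystem, String envName, String prefix = 'env_') {
    def key = prefix + envName
    def app = ecosystem.apps.find { it.containsKey(key) }
    return app ? [name: app.name, env: app[key]] : null
}

def ecosystem = new JsonSlurper().parseText(ecosystemText)
def target = findAppForEnv(ecosystem, 'QA')
println "TARGET_APPLICATION=${target.name} APP_PORT=${target.env.PORT}"
// prints: TARGET_APPLICATION=orders-api APP_PORT=3002
```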

Here is our simple pipeline script with the stage that reads our ecosystem.json file. Note that this script also requires the Pipeline: Groovy Plugin and the Pipeline Utility Steps Plugin (which provides the readJSON step).

In this example, we read the ecosystem.json file and search through the apps objects it contains for the environment properties we need. These properties are saved and used in subsequent pipeline stages when calling our deployment script. Because this is scripted Groovy, the first time the job runs, a Jenkins administrator might need to approve the script (In-process Script Approval) if the user running the job does not have the appropriate privileges. This safeguard ensures that Jenkins runs arbitrary code only after an administrator has approved it.
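A minimal sketch of such a stage in a declarative Jenkinsfile follows; it is an illustration, not our exact script. The PM2-style `env_` prefix, the `PORT` key, the hard-coded DEPLOY_ENV value, and `deploy.sh` are hypothetical. When run in the Groovy sandbox, some of the JSON method calls may trigger the script approval described above.

```groovy
// Minimal sketch: read ecosystem.json and expose environment-specific values
// to later stages. Requires the Pipeline Utility Steps plugin (readJSON).
// DEPLOY_ENV, the 'env_' prefix, the PORT key, and deploy.sh are hypothetical.
pipeline {
    agent any
    environment {
        DEPLOY_ENV = 'QA'   // target environment: DEV, QA, UAT, or PROD
    }
    stages {
        stage('Read ecosystem.json') {
            steps {
                script {
                    def PREFIX = 'env_'
                    def envKey = PREFIX + env.DEPLOY_ENV
                    def ecosystem = readJSON file: 'ecosystem.json'

                    // Find the first app entry that defines a block for this environment.
                    def app = null
                    for (candidate in ecosystem.apps) {
                        if (candidate.containsKey(envKey)) {
                            app = candidate
                            break
                        }
                    }
                    if (app == null) {
                        error("No app in ecosystem.json defines '${envKey}'")
                    }

                    // Store the values on env so subsequent stages can read them.
                    env.TARGET_APPLICATION = app.name.toString()
                    env.APP_PORT = app[envKey].PORT.toString()
                }
            }
        }
        stage('Deploy') {
            steps {
                sh "./deploy.sh ${env.TARGET_APPLICATION} ${env.APP_PORT}"
            }
        }
    }
}
```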

Our organization follows good reuse practices when writing our Node.js applications, so we'll apply the same pattern to our ecosystem files and application deployment processes. That means this same stage code would be repeated in many Jenkinsfiles. Wouldn't it be nice to streamline that reuse instead of copying and pasting the code into every Jenkinsfile?

Jenkins Shared Libraries give you the ability to author and share commonly used helper functions and call them from within a job. You can roughly think of a shared library as your own custom Jenkins plugin, without the formality of the Jenkins plugin architecture. Shared libraries also come with some security and access benefits. Because an administrator must explicitly add a shared library at a chosen context, libraries added globally are trusted and run outside the Groovy sandbox, so you don't need to deal with the clunky In-process Script Approval mechanism mentioned previously (libraries added at the folder level still run in the sandbox). You can add a shared library globally within a Jenkins master (Manage Jenkins->Configure System->Global Pipeline Libraries) or at the project folder level (Configure->Pipeline Libraries). This gives you the flexibility to set up specific libraries for specific teams or for everyone using a Jenkins master. See the Jenkins documentation for more details about shared library structure and adding libraries to Jenkins.
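Here is a minimal sketch of how a Jenkinsfile loads a library once an administrator has configured it. The library name `my-shared-lib` and the `sayHello` step are hypothetical; the directory layout in the comments follows the standard structure described in the Jenkins shared library documentation.

```groovy
// Standard shared library repository layout:
//   src/        Groovy classes, added to the classpath
//   vars/       global steps; vars/sayHello.groovy becomes the step sayHello(...)
//   resources/  non-Groovy files, loaded with the libraryResource step
//
// Hypothetical vars/sayHello.groovy in the library:
//   def call(String name) { echo "Hello, ${name}" }

// Load the library by the name configured under Global Pipeline Libraries
// (or a folder's Pipeline Libraries). Append @<branch-or-tag> to pin a version.
@Library('my-shared-lib') _

pipeline {
    agent any
    stages {
        stage('Hello') {
            steps {
                sayHello('Jenkins')   // step defined in the library's vars/ directory
            }
        }
    }
}
```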

We then modified the pipeline example above, which reads an ecosystem file and returns the properties we want. We created a shared library with helper functions that, when called with an environment parameter, return the requested property of our application for that environment. Again, the Jenkins documentation on shared libraries is fairly complete, so take a look for more details.

Some highlights in our new pipeline code:

- Line 1 loads the shared library

- We moved the PREFIX variable out of the pipeline and into the shared library files. Our standard is for all ecosystem.json files to use the same environment prefix, so we handle it in the shared library instead of in individual pipeline files

- Lines 18 and 19, which find TARGET_APPLICATION and APP_PORT, are now each reduced to a single call to a helper function in our shared library

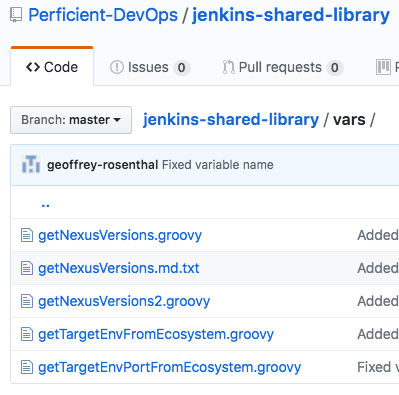
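A minimal sketch of a Jenkinsfile in this style follows. It is not a transcription of the screenshot: the library name `my-shared-lib`, the helper name `getTargetAppFromEcosystem` (the post does not name the second helper), the DEPLOY_ENV value, and `deploy.sh` are hypothetical. Only `getTargetEnvPortFromEcosystem` is named in the post.

```groovy
@Library('my-shared-lib') _   // hypothetical library name

pipeline {
    agent any
    environment {
        DEPLOY_ENV = 'QA'   // hypothetical; could also come from a build parameter
    }
    stages {
        stage('Read ecosystem.json') {
            steps {
                script {
                    // One helper call per property; the environment prefix and the
                    // ecosystem.json parsing live in the shared library.
                    env.TARGET_APPLICATION = getTargetAppFromEcosystem(env.DEPLOY_ENV)
                    env.APP_PORT = getTargetEnvPortFromEcosystem(env.DEPLOY_ENV)
                }
            }
        }
        stage('Deploy') {
            steps {
                sh "./deploy.sh ${env.TARGET_APPLICATION} ${env.APP_PORT}"
            }
        }
    }
}
```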

Our shared library follows the standard structure defined in the Jenkins Shared Libraries documentation (in our case, the library is hosted on GitHub).

Our two helper functions follow the same pattern. Here is a screenshot of our getTargetEnvPortFromEcosystem.groovy:
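As a rough idea of what such a helper can look like, here is a minimal sketch of a `vars/getTargetEnvPortFromEcosystem.groovy`. Its contents are an assumption, not our actual file: it assumes a PM2-style `env_<ENV>` prefix, a `PORT` key, and ecosystem.json at the workspace root, and it needs the Pipeline Utility Steps plugin for readJSON.

```groovy
// vars/getTargetEnvPortFromEcosystem.groovy (hypothetical contents).
// Usage in a Jenkinsfile: def port = getTargetEnvPortFromEcosystem('QA')
def call(String deployEnv) {
    // Shared environment prefix, defined once here instead of in every Jenkinsfile
    // (assumption: PM2's env_<name> convention).
    def prefix = 'env_'
    def envKey = prefix + deployEnv
    def ecosystem = readJSON file: 'ecosystem.json'

    // Return the PORT of the first app that defines this environment.
    for (app in ecosystem.apps) {
        if (app.containsKey(envKey) && app[envKey].containsKey('PORT')) {
            return app[envKey].PORT.toString()
        }
    }
    // error() aborts the build, so no return value is needed here.
    error("getTargetEnvPortFromEcosystem: no PORT found for '${envKey}' in ecosystem.json")
}
```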

Since Jenkins pipeline scripts are written in Groovy syntax (Pipeline syntax documentation), it makes sense that shared libraries are written in Groovy and that the pipeline script that we used in the first example can be copied almost verbatim into a Groovy file.

In this blog, we introduced global properties and shared libraries in Jenkins. Both features promote reuse and long-term maintainability. If many pipeline scripts need the same global variable, define it as a Jenkins global property. If many pipeline scripts reuse the same script function, put it in a shared library. For any company leveraging Jenkins or CloudBees Jenkins for continuous integration and delivery, the consistency and control provided by global properties and shared libraries are bound to improve your Jenkins experience, and perhaps even your overall quality of life (which may be a bit of a stretch, but we're hoping).