This is Part V in a multi-part series.

Part I introduced the concept of analytically measuring the performance of delivery teams.

In Part II, we talked about how Agile practices enhance our ability to measure more accurately and more often.

Part III defined a system model with three dimensions of performance (Predictability, Quality and Productivity). We then got into the specifics of how to measure the first dimension: ‘Predictability’.

Part IV covered the second of the three dimensions: ‘Quality’.

In this part, we broach the often controversial subject of ‘Productivity’, demonstrating that you can think analytically even about the most sensitive and seemingly ambiguous topics.

So how ‘productive’ are your developers?

This is probably the most highly charged and contentious area of measurement, not only because of the difficulty in normalizing and isolating its measurement, but also because of the sensitivity to its implications. Nobody likes to hear that their team has ‘low productivity’ – and in fact, most IT managers would probably be very surprised at how much ‘non-productive’ time is spent developing software.

If you doubt the last statement, let me just relate that I’ve often observed the following:

- IT Managers that measure productivity purely by how many lines of code a developer is churning out – regardless of whether that code is efficiently written, matches the requested requirements, is maintainable or guarantees quality (code reviews, a test-first approach, full regression testing against breaking other code), etc.

- IT Managers that don’t want developers to spend time testing.

- Software organizations that rely too heavily on tacit knowledge, usually confined to a few developers, which ensures job security for those few at the cost of increased risk to the organization.

- IT Managers (and Executives) that proclaim their development teams operate at near 90% ‘efficiency’ and want to use 40 hours per week per developer as the baseline for team velocity calculations (yet generally have no previous iteration metrics – probably because the development team games the metrics to meet the 40-hour velocity expectation).

By the way – I’m not kidding about the last one. It comes up more than you’d think. In fact, I cannot even tell you how many times I’ve talked to an IT Manager who claims their organization is expert in Scrum and yet is unaware that typical velocities are generally far below ideal hours.

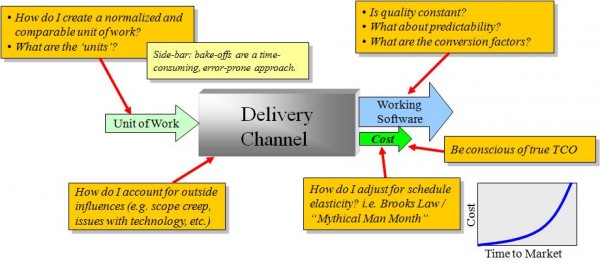

But perceptions aside, let’s drift back to our original model and break down that Delivery Channel black box a little further. In doing so, we’ll highlight the various challenges with such a simplistic model.

As you can see from the above diagram, there are a number of complexities that make the normalization of the inputs and outputs to a Delivery Channel challenging, including the inter-dependencies of other dimensions of measurement (Quality and Predictability) themselves.

But does this mean we just throw up our hands and say that it’s impossible to analytically compare and contrast the effectiveness of various delivery teams? Well, this is exactly what some IT organizations do. But as we’ll see, there are indeed concrete ways to normalize the inputs and outputs of a Delivery Channel (Units of Work, Working Software and Costs) in such a way as to make measurement, comparison and trending practical.

First, let’s look at a “Unit of Work”. The key question here is: how do we standardize the measurement of a unit of work across an IT organization? This turns out to depend more on adopting a rigorous process of estimation and tracking than on a purely theoretical modeling exercise. It’s interesting to note that over the years, several studies have been done to ascertain what effect technology and industry domain have on the actual complexity of software development.

Despite what most IT organizations would like to believe, the answer to both is ‘not much’. With few exceptions, almost ALL industries carry a similar level of complexity in their business domain problems, and almost ALL technologies carry a similar level of complexity in their use. This explains why, despite all of the increased abstraction of software frameworks and tools through the years, software development hasn’t really gotten ‘faster’: as tools become more powerful, we leverage them to design more complex solutions. Most of today’s solutions could not be built from scratch in assembly language in the same amount of time it takes development teams to build them today.

This also explains the anecdotal evidence I’ve observed: almost every executive, in every industry and at every customer I’ve ever met with, makes a point of stressing something along the lines of, “Our industry is far more complex than other industries”. The only conclusion to be drawn from this is what I mentioned earlier: ALL industries carry with them a unique set of complexities that need to be addressed, and it’s actually the ‘uniqueness’ of those complexities that makes domain knowledge in a particular industry so valuable.

So let’s assume that we can measure units of work in a way that we can at least incrementally standardize across a particular IT organization, by following a standardized process and making adjustments as we get better at estimating work (as measured through the Predictability improvements discussed earlier).

The actual mechanics of the process we follow are less important than following a process consistently. Over the years, there have been several different yardsticks for measuring software development units of work. Some are better than others.

| Measurement Method | Pros / Cons |
|---|---|
| Lines of Code | |
| Function Points | |
| Feature Driven Development | |

So if the above matrix seems transparently weighted towards a Feature Driven approach, it’s on purpose. There has been an evolution over time, especially in the Agile world, toward using ‘features’ to define units of work, because doing so is especially efficient at minimizing ancillary documentation throughout the process – even if business analysts initially resist leaving the island of large, ‘thud factor’ requirements documents. In that case, the extra step is required regardless of the approach.

While Feature Driven Development (FDD) also describes an Agile process in itself, note that for our purposes here (standardizing Units of Work), I am simply borrowing the concept of ‘Features’ to decompose and draw boundaries around the work. This part of FDD can actually be incorporated into a waterfall process (if you absolutely must).

As I stated earlier, however, it’s more important that an IT organization have some standard of measure than how specifically it chooses to measure. Rather than getting hung up on a specific method, pick one and start measuring. You can always improve, refine and even change measurement methods over time. Don’t get caught up in finding the ‘perfect’ measurement approach or arguing endlessly about which is better. Remember that if you did nothing more than count lines of code, you’d probably be doing better than the more than 2/3 of organizations out there that don’t consistently measure anything. In other words, you’d be in the top 1/3 of all IT organizations just by doing something.

So assuming you are measuring something, there are a few things to be conscious of with regard to how you estimate costs associated with that unit of work:

Be conscious of TCO (Total Cost of Ownership)

Although your department’s charge-back model may not account for everything, those are still real costs to the company. Be a good corporate citizen (or at least be balanced when evaluating alternatives) and account for:

- Employees – salary and benefits, utilization, training time, training investments, hiring / turn-over, infrastructure and management

- Contractors – rates, conversion costs, utilization, ramp-up time, turn-over, infrastructure, management.

- Offshore – infrastructure (localized environments, communications, licensing, etc.), audits, management, turn-over, ramp-up / transition time, knowledge management / transition (KM/KT) costs, etc.

Use dollars instead of hours

Too many organizations get hung up on hours, but it all really boils down to costs, and in fact it’s difficult to capture actual TCO (as above) with pure hours calculations: costs that hours don’t capture tend to leak into features that way. Using costs also ensures that conversion factors are consistent, and it allows a more direct correlation to business value and comparison with alternatives (build vs buy vs SAAS / hosted decisions).
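To make the ‘dollars, not hours’ point concrete, here is a minimal sketch that rolls annual cost components into a fully loaded hourly dollar rate. The component names, the 2,080 paid hours per year (52 weeks x 40 hours) and every dollar figure are hypothetical assumptions, chosen only so the result lands on the $100 / hour used in the onshore example later in this post.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    """Hypothetical annual cost components for one resource, in dollars."""
    salary: float
    benefits: float
    training: float
    infrastructure: float
    management: float
    turnover: float  # hiring / ramp-up costs amortized over the year

    def total(self) -> float:
        return (self.salary + self.benefits + self.training
                + self.infrastructure + self.management + self.turnover)


def fully_loaded_hourly_cost(costs: AnnualCosts, paid_hours_per_year: float = 2080) -> float:
    """Spread total cost of ownership over paid hours (52 x 40 by default)."""
    return costs.total() / paid_hours_per_year


employee = AnnualCosts(salary=130_000, benefits=35_000, training=5_000,
                       infrastructure=10_000, management=20_000, turnover=8_000)
print(fully_loaded_hourly_cost(employee))  # 100.0
```

Contractors and offshore resources would plug in their own components (conversion costs, audits, KM/KT, and so on); the point is that the output is in dollars, so those costs travel with it instead of disappearing into an hours count.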

One standard measure that tends to apply well is what I’ll call “Effective Blended Rate”. In short, Effective Blended Rate (EBR) is the cost spent per feature-point (where a feature-point is your standardized measure of features). You could just as easily substitute cost per KLOC (thousand lines of code) or whatever your measure is.

Having an EBR is important when comparing multiple delivery channels. For example, in a build vs hosted (SAAS) decision, you could estimate the features for a particular solution and easily compare the cost to build against the cost to host. Sounds too simple, right? Well, the key that made this simple was deciding to use a measurement of work (feature-points) that directly correlates to business value.
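A minimal sketch of that build vs hosted comparison, assuming you already have a historical EBR and a feature-point estimate for the solution. The function names, the $120,000 iteration cost, the 500-point estimate and the $90,000 hosted cost are all hypothetical, and the comparison deliberately ignores time horizons and ongoing maintenance.

```python
def effective_blended_rate(total_cost: float, feature_points: float) -> float:
    """Effective Blended Rate (EBR): dollars spent per feature-point delivered."""
    if feature_points <= 0:
        raise ValueError("feature_points must be positive")
    return total_cost / feature_points


def compare_build_vs_hosted(estimated_feature_points: float, ebr: float,
                            hosted_cost: float) -> tuple[str, float]:
    """Return the cheaper option and the estimated build cost.

    Simplistic by design: both costs are assumed to cover the same horizon,
    and maintenance of the built solution is ignored.
    """
    build_cost = estimated_feature_points * ebr
    choice = "build" if build_cost < hosted_cost else "hosted"
    return choice, build_cost


# Hypothetical history: an iteration cost $120,000 and delivered 780 feature-points.
ebr = effective_blended_rate(120_000, 780)
choice, build_cost = compare_build_vs_hosted(500, ebr, hosted_cost=90_000)
print(choice, round(build_cost))  # build 76923
```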

Another type of EBR might be cost per feature-point per resource:

EBR = $ / feature-point / resource

Assuming that you can keep feature-points roughly equivalent to ideal hours, this works nicely to get you into the true ballpark of an effective ‘bill rate’ for a resource (although, given realistic velocities of, say, 25-30 hours per week, you have to recalibrate your thinking about what an effective rate per hour actually looks like: it is higher than the contractual rate per hour).
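The recalibration above is simple arithmetic. The sketch below assumes, as the paragraph does, that one feature-point is roughly one ideal hour; the function name and the $100 / hour contract rate with 40 paid hours per week are illustrative assumptions.

```python
def effective_hourly_rate(contract_rate: float, paid_hours_per_week: float,
                          velocity_hours_per_week: float) -> float:
    """Dollars per ideal hour actually delivered.

    Assumes one feature-point is roughly one ideal hour, so a developer paid
    for 40 hours who delivers 25 ideal hours costs 40/25 times the contract rate.
    """
    if velocity_hours_per_week <= 0:
        raise ValueError("velocity must be positive")
    return contract_rate * paid_hours_per_week / velocity_hours_per_week


for velocity in (25, 30):
    print(velocity, round(effective_hourly_rate(100, 40, velocity), 2))
# 25 160.0
# 30 133.33
```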

This works well for comparing multi-shore teams with pure onshore (US) teams. Consider the following example.

- You are trying to compare two teams, one completely US-based (onshore / onsite) and the other a multi-shore team (30% US / 70% offshore). The US team is composed of 10 developers, each with a TCO hourly cost of $100 / hour.

- The multi-shore team produces the same number of feature-points every iteration, but is composed of 4 US developers and 9 offshore developers (total team size = 13, which is 30% higher than the all-US team with regard to iteration velocity, i.e. the number of hours per iteration).

- Let’s also say that the offshore developers’ fully loaded cost is $35 / hour.

- At those rates, the blended rate of the US team is $100 / hour and the blended rate of the multi-shore team is $55 / hour ((4 x $100 + 9 x $35) / 13). But looking at just that statistic would be a mistake, since the all onshore / onsite team is more ‘productive’ than the multi-shore team (most likely from a combination of communication efficiencies, greater industry domain knowledge among the developers and perhaps slightly higher overall seniority of the onshore team).

- But let’s normalize this around feature-points. Let’s say that the current number of feature-points per three-week iteration (for both teams) is 780. Using this statistic, the Effective Blended Rate of each team works out to:

EBR (onshore) = $154 / feature-point = (10 dev x $100 / hour x 120 hours) / 780 feature-points

EBR (multi-shore) = $110 / feature-point = ((4 dev x $100 / hour) + (9 dev x $35 / hour)) x 120 hours / 780 feature-points

- The above still favors the multi-shore team as more ‘efficient’ with regard to overall implementation cost, even though that model requires 30% more contingency hours to get the same work done. In fact, even a team of 5 onshore and 12 offshore developers (17 total), which represents a 70% contingency, results in an EBR of $142 / feature-point.

- I think you can see from the above example why there is such a strong push toward multi-shore teams, at least for organizations that take the time to make them work effectively (typical contingency ‘adds’ across the industry are roughly 20% – 50%, depending on project complexity). You can also see why pure financial models, or getting hung up on pure ‘developer hours’, can be so misleading: hours alone tell you very little.
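The three scenarios above can be reproduced with a short script. This is a sketch, not a prescribed tool: the `Team` class and its method names are hypothetical, while the rates, the 120 hours per developer per iteration and the 780 feature-points come straight from the example.

```python
from dataclasses import dataclass

HOURS_PER_ITERATION = 120  # per developer, three-week iteration (from the example)
FEATURE_POINTS = 780       # delivered per iteration by each team (from the example)


@dataclass(frozen=True)
class Team:
    name: str
    onshore_devs: int
    offshore_devs: int
    onshore_rate: float = 100.0  # fully loaded $ / hour
    offshore_rate: float = 35.0  # fully loaded $ / hour

    def hourly_cost(self) -> float:
        """Total team cost per hour."""
        return (self.onshore_devs * self.onshore_rate
                + self.offshore_devs * self.offshore_rate)

    def blended_rate(self) -> float:
        """Average $ / hour per developer (ignores productivity)."""
        return self.hourly_cost() / (self.onshore_devs + self.offshore_devs)

    def ebr(self, hours: float, feature_points: float) -> float:
        """Effective Blended Rate: $ / feature-point for one iteration."""
        return self.hourly_cost() * hours / feature_points


teams = [
    Team("onshore", 10, 0),
    Team("multi-shore (30% contingency)", 4, 9),
    Team("multi-shore (70% contingency)", 5, 12),
]
for team in teams:
    print(f"{team.name}: blended ${team.blended_rate():.2f}/h, "
          f"EBR ${team.ebr(HOURS_PER_ITERATION, FEATURE_POINTS):.2f}/feature-point")
```

Note how little the blended rate moves between the two multi-shore teams ($55.00 vs about $54.12 per hour), while the EBR ($110.00 vs about $141.54 per feature-point) is what exposes the cost of the extra contingency.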

Quite simply, if an IT Organization doesn’t make measuring a priority, then it really is missing an opportunity to manage its costs effectively and to truly understand its break-even points in making effective offshore decisions.
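One way to find such a break-even point, sketched under the same assumptions as the example (both teams deliver the same feature-points over the same hours per iteration, so comparing EBR reduces to comparing hourly team cost). The function name and the idea of holding the onshore headcount fixed at 4 are illustrative choices, and the sketch does not model productivity drifting over time.

```python
import math


def break_even_offshore_devs(onshore_team_size: int, onshore_devs_kept: int,
                             onshore_rate: float = 100.0,
                             offshore_rate: float = 35.0) -> int:
    """Largest offshore headcount that keeps the multi-shore EBR at or below
    the all-onshore EBR.

    Assumes both teams deliver the same feature-points over the same hours,
    so the EBR comparison reduces to an hourly cost comparison.
    """
    onshore_only_cost = onshore_team_size * onshore_rate
    budget_left = onshore_only_cost - onshore_devs_kept * onshore_rate
    return max(0, math.floor(budget_left / offshore_rate))


team_size, kept = 10, 4
n = break_even_offshore_devs(team_size, kept)
contingency = (kept + n) / team_size - 1  # extra headcount vs the onshore team
print(n, f"{contingency:.0%}")  # 17 110%
```

In other words, with 4 onshore developers retained, this particular cost structure only stops paying off beyond roughly 17 offshore developers, a 110% contingency, which is well beyond the 20% – 50% typical range.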

So if you’re not yet convinced, consider also that the productivity of multi-shore service arrangements tends to increase in the near term, but decrease over a period of 1-2 years. The reasons have to do with complacency, diminishing returns on additional cost cutting (yielding more junior resources), growth in project turn-over, etc.

But if an IT Organization does a good job of either measuring directly or having its service provider regularly report (auditable) metrics, then you place the challenge where it belongs: on maximizing your organization’s Effective Blended Rate and the value of your IT spend.

What sort of dashboard might you expect from a service provider in this regard? The diagram below provides an actual (scrubbed) example:

Notice that we’ve added something as well. The gray area represents how we can account for the interdependence of other measurable areas (quality and predictability) as well as external influences (things the team dealt with but had no control over, such as a missed dependency or, in this case, having operations perform a VM upgrade mid-iteration). Also notice that we have a factor for where the team was operating on the schedule elasticity curve (i.e. Brooks’s Law / The Mythical Man-Month). More specifically, if a team is pushed harder than it recommends in order to make a date, it effectively gets ‘credit’ for the inefficiencies of operating further up the curve.
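The dashboard’s own formula isn’t reproduced here, so the following is only one possible way to apply such influencers, not the method behind the example. It assumes each influencer is estimated as a fraction of iteration capacity it consumed, that these fractions simply add up, and that the team is credited with the feature-points it would plausibly have delivered otherwise. The function name, labels and percentages are hypothetical.

```python
def adjusted_ebr(team_cost: float, feature_points: float,
                 lost_capacity: dict[str, float]) -> float:
    """EBR after crediting the team for capacity consumed by influencers.

    lost_capacity maps a (hypothetical) influencer label to the estimated
    fraction of iteration capacity it consumed, e.g. 0.05 for 5%.
    """
    lost = sum(lost_capacity.values())
    if not 0 <= lost < 1:
        raise ValueError("total lost capacity must be in [0, 1)")
    # Feature-points the team would plausibly have delivered without the influencers.
    credited_points = feature_points / (1 - lost)
    return team_cost / credited_points


raw = adjusted_ebr(120_000, 780, {})
adjusted = adjusted_ebr(120_000, 780, {
    "VM upgrade mid-iteration": 0.05,
    "schedule compression": 0.10,
})
print(round(raw, 2), round(adjusted, 2))  # 153.85 130.77
```

As the next paragraph notes, these factors start out subjective; tracking them over a few iterations is what turns the estimated fractions into something defensible.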

Notice that there is a certain element of subjectivity to these influencers, at least in the short term. But over a very short period of time (3-4 iterations), an IT organization that puts a priority on capturing metrics would be able to better quantify these factors. In fact, you would be surprised at how frighteningly accurate these metrics and factors can become over time, given analytical attention to measuring and tracking.

Whew… we got through that (even with a lot on the table to digest and discuss!)

In our final segment we’ll summarize and wrap this topic up.