As the name suggests, Apache SOLR is developed by the Apache Software Foundation. It is written in Java and builds on libraries from the Apache Lucene project, which provide SOLR's underlying search algorithms.

SOLR is an integral part of many applications that offer search functionality, including Google, Amazon and many enterprise applications. (This post from Lucidworks includes a list of companies that use SOLR.)

SOLR provides full-text search, hit highlighting, real-time indexing, database integration, clustering support and document-based indexing, all of which help it return search results quickly. The SOLR engine can be integrated with most application or web servers. This document illustrates the steps to set up SOLR and integrate it with the JBoss AS 7 application server.

Install and Configure SOLR

Download SOLR and JBOSS:

SOLR

http://www.apache.org/dyn/closer.cgi/lucene/solr/5.2.1

JBOSS

http://jbossas.jboss.org/downloads/

JBoss Windows installation steps

http://knowledgespreading.blogspot.in/2013/04/jboss-711-installation-on-windows-7.html

Note: JBoss configuration steps are not shown here because this document mainly focuses on SOLR.

SOLR Indexing Mechanism

SOLR uses the following terms to describe how indexes are organized and shared with clients:

Collection

A single search index.

Shard

A logical section of a single collection (also called a Slice). Sometimes people talk about a “Shard” in a physical sense (a manifestation of a logical shard).

Replica

A physical manifestation of a logical Shard, implemented as a single Lucene index on a SolrCore.

Leader

One Replica of every Shard is designated as a Leader to coordinate indexing for that Shard.

SolrCore

Encapsulates a single physical index. One or more SolrCores make up logical shards (or slices), which make up a collection.

Node

A single instance of SOLR. A single SOLR instance can have multiple SolrCores that can be part of any number of collections.

Cluster

All of the nodes you are using to host SolrCores.
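To make the relationships between these terms concrete, here is a small Java sketch that models them. It is illustrative only: the class names (ClusterModel, SearchCollection, Shard, Replica) and the core and node names are invented for this example and are not part of SOLR's API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative model only; none of these classes exist in SOLR.
final class ClusterModel {

    // One physical copy of a shard: a SolrCore hosted on a node.
    static final class Replica {
        final String coreName;
        final String nodeName;
        final boolean leader;

        Replica(String coreName, String nodeName, boolean leader) {
            this.coreName = coreName;
            this.nodeName = nodeName;
            this.leader = leader;
        }
    }

    // A logical slice of a collection, backed by one or more replicas.
    static final class Shard {
        final String name;
        final List<Replica> replicas;

        Shard(String name, List<Replica> replicas) {
            this.name = name;
            this.replicas = new ArrayList<>(replicas);
        }

        // Returns the replica marked as leader, or null if none is marked.
        Replica leader() {
            for (Replica replica : replicas) {
                if (replica.leader) {
                    return replica;
                }
            }
            return null;
        }
    }

    // A single logical search index, split into shards.
    static final class SearchCollection {
        final String name;
        final List<Shard> shards;

        SearchCollection(String name, List<Shard> shards) {
            this.name = name;
            this.shards = new ArrayList<>(shards);
        }
    }

    // Builds a made-up "products" collection with two shards spread over two nodes.
    static SearchCollection sample() {
        Shard shard1 = new Shard("shard1", Arrays.asList(
                new Replica("products_shard1_replica1", "node1", true),
                new Replica("products_shard1_replica2", "node2", false)));
        Shard shard2 = new Shard("shard2", Arrays.asList(
                new Replica("products_shard2_replica1", "node2", true),
                new Replica("products_shard2_replica2", "node1", false)));
        return new SearchCollection("products", Arrays.asList(shard1, shard2));
    }

    public static void main(String[] args) {
        for (Shard shard : sample().shards) {
            Replica leader = shard.leader();
            System.out.println(shard.name + " is led by " + leader.coreName + " on " + leader.nodeName);
        }
    }
}
```

In the single-instance setup used in the rest of this document there is only one node and one SolrCore, so the shard and replica layers do not come into play.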

The terms above describe how SOLR organizes its indexes. Here, I focus on how to create a SOLR core and enable indexing in JBoss, because I am going to use a single instance for search. If you run multiple instances and the index needs to be shared across a cluster, use a SOLR collection instead.

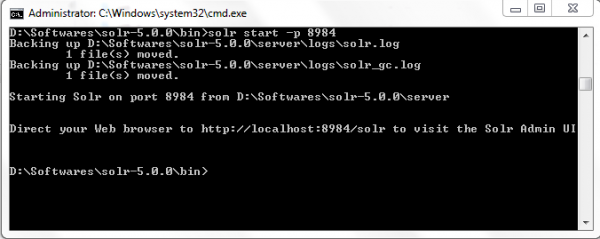

Starting SOLR

After installing SOLR, add the SOLR home path to the OS environment so it can be accessed easily.

The SOLR package comes bundled with a Jetty server, so the first step is to start SOLR on Jetty using the command below.

<SOLR installation path>\bin> solr start -p 8984

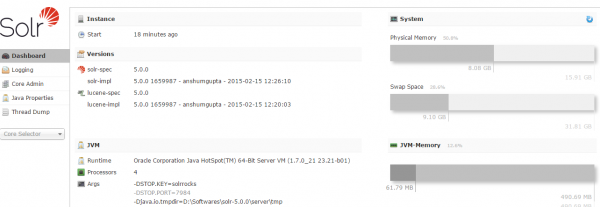

The SOLR engine now listens on port 8984. The port is specified with the -p option when starting SOLR; I used 8984. Once it has started, open the URL http://localhost:8984/solr/.

The SOLR console should look like the screenshot below.

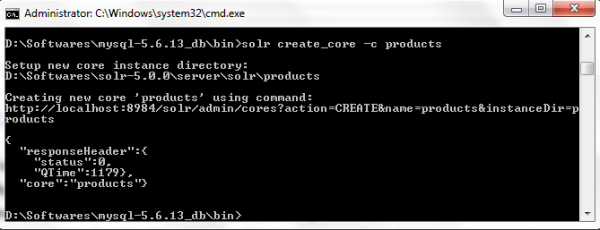

Creating Core

A core is an integral part of the SOLR search engine, as it contains all data related to the search content. It acts as a vault that stores all search data under its <data> directory, and the <index> folder inside it holds the documents in indexed form. Indexing is based on the identifier specified in the configuration file, which we will see later in this document.

The following command creates a new core named “products”.

solr create_core -c products

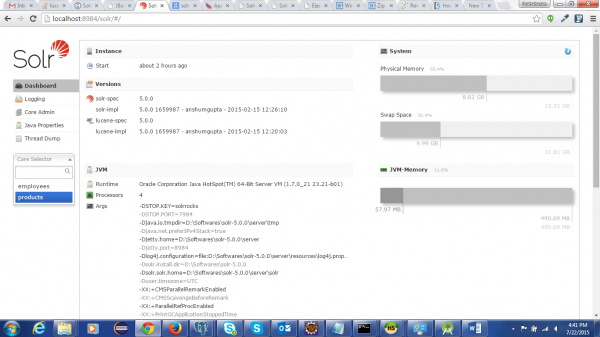

The newly created core “products” now appears in the SOLR admin console.

Configure Core Data-Import Handler

There are different ways to supply data to the SOLR engine for indexing; the most widely used sources are CSV files and databases. In this example, I use a database as the source, a setup found in many enterprise applications because SOLR supports near real-time indexing. Updates to the database tables can be picked up by re-running the import (a full import, or a delta import that fetches only changed rows), so the search results match the database data with minimal lag. Note that the import has to be triggered, for example from the admin console or on a schedule; SOLR does not watch the database for changes on its own.

Create a database called products in MySQL and add some stub data for testing. Execute the SQL insert query below to load the test data.

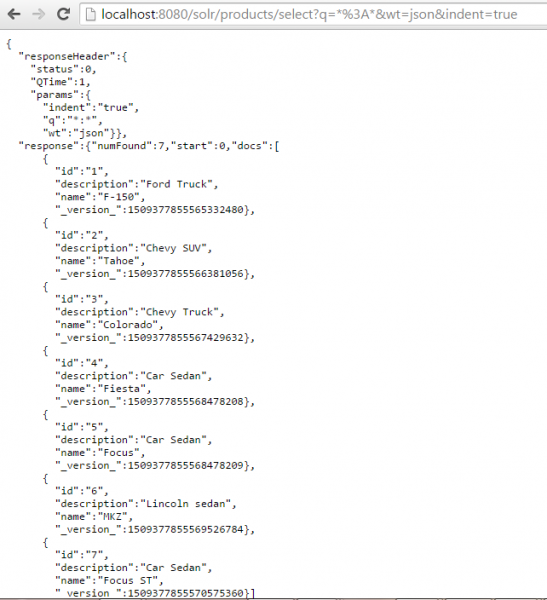

INSERT INTO `products` (`product_id`, `name`, `description`) VALUES (1, 'F-150', 'Ford Truck'), (2, 'Tahoe', 'Chevy SUV'), (3, 'Colorado', 'Chevy Truck'), (4, 'Fiesta', 'Car Sedan'), (5, 'Focus', 'Car Sedan'), (6, 'MKZ', 'Lincoln sedan'), (7, 'Focus ST', 'Car Sedan');

Now we need to configure the core to import data from the database, which is the source of the search data.

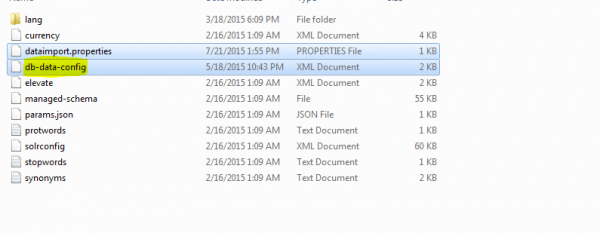

Create a db-data-config.xml file under the <core>\conf\ folder. This file defines the database type, the credentials used to connect to the database, and the document details that map to the database table being indexed. The folder should now look like the structure shown in the screenshot.

Configure the db-data-config.xml file for the database you use. The file below contains the configuration details for MySQL and defines the table from which SOLR creates indexed documents, keyed on the table's “product_id” column (exposed to SOLR as id). Note that the ID must be unique for each record; it can’t be duplicated because SOLR's indexing is keyed on the ID we specify. If there are configuration issues, SOLR will throw an error during the indexing stage, so check the logs if SOLR does not recognize the core. This whole mechanism is handled by the data import request handler (the SOLR wiki offers more info on this). The configuration looks like this:

<dataConfig>

<!-- The first element is the dataSource, in this case a MySQL database.

The path to the JDBC driver and the JDBC URL and login credentials are all specified here.

Other permissible attributes include whether or not to autocommit to Solr, the batchsize

used in the JDBC connection, and a 'readOnly' flag -->

<dataSource type="JdbcDataSource"

driver="com.mysql.jdbc.Driver"

url="jdbc:mysql://localhost:3306/products"

user="admin"

password="admin" />

<!-- A 'document' element follows, containing multiple 'entity' elements.

Note that 'entity' elements can be nested, and this allows the entity

relationships in the sample database to be mirrored here, so that we can

generate a denormalized Solr record

for one item, for instance -->

<document name="products">

<!-- The possible attributes for the entity element are described below.

Entity elements may contain one or more 'field' elements, which map

the data source field names to Solr fields, and optionally specify

per-field transformations -->

<!-- This entity is the 'root' entity. -->

<entity name="products" query="select product_id as id, name, description from products">

<!-- The query aliases product_id as id, so the result column to map is id. -->

<field name="id" column="id" />

<field name="name" column="name" />

<field name="description" column="description" />

</entity>

</document>

</dataConfig>
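Conceptually, each field element maps a column of the query result to a field of the SOLR document. The sketch below imitates that mapping with plain Java maps. It is not how the data import handler is implemented; FieldMappingSketch and toDocument are hypothetical names used only to illustrate the idea, and the sample row is taken from the test data, with product_id already aliased to id as in the query.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Conceptual sketch of column-to-field mapping; not the data import handler's code.
final class FieldMappingSketch {

    // Copies each mapped column of a result row into the document under its field name.
    // Columns without a mapping, or with a null value, are skipped.
    static Map<String, Object> toDocument(Map<String, Object> row, Map<String, String> columnToField) {
        Map<String, Object> document = new LinkedHashMap<>();
        for (Map.Entry<String, String> mapping : columnToField.entrySet()) {
            Object value = row.get(mapping.getKey());
            if (value != null) {
                document.put(mapping.getValue(), value);
            }
        }
        return document;
    }

    // Builds the document for the first row of the test data.
    static Map<String, Object> sampleDocument() {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("id", 1); // product_id, aliased to id by the SQL query
        row.put("name", "F-150");
        row.put("description", "Ford Truck");

        Map<String, String> columnToField = new LinkedHashMap<>();
        columnToField.put("id", "id");
        columnToField.put("name", "name");
        columnToField.put("description", "description");

        return toDocument(row, columnToField);
    }

    public static void main(String[] args) {
        System.out.println(sampleDocument());
    }
}
```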

I have provided a basic SQL select. It can be extended to more complex select statements, such as joining two or three tables into one document, and you can specify fields that should not be indexed to improve search performance.

*******************Important******************

If any custom fields are not part of the managed-schema file, we have to define them explicitly in the schema file as shown below. SOLR will not index these fields if they are not defined.

*******************Important******************

To emphasize the point above, let us assume we have to define a custom field called “product_make”:

<field name="product_make" column="make" />

In this case, product_make (like the other fields, name and description) is not a valid field according to the schema file. To make it valid, we have to define it explicitly in the schema file. All we have to do is add the line below to the managed-schema file.

<field name="product_make" type="string" multiValued="false" indexed="false" stored="true" />

I marked indexed as false because I don’t want the above field to be indexed.
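The difference between indexed and stored can be pictured with a toy index in plain Java. This is a simplified sketch, not Lucene's actual data structures, and all names in it are hypothetical: indexed values go into an inverted index and can be searched, while stored values are only kept so they can be returned with the results.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Toy model of the indexed/stored flags; far simpler than what Lucene really does.
final class IndexedVsStoredSketch {

    // token -> ids of documents that contain the token in an indexed field
    private final Map<String, Set<String>> inverted = new HashMap<>();
    // document id -> (field name -> original value) for stored fields
    private final Map<String, Map<String, String>> stored = new LinkedHashMap<>();

    void add(String id, String field, String value, boolean isIndexed, boolean isStored) {
        if (isIndexed) {
            for (String token : value.toLowerCase(Locale.ROOT).split("\\s+")) {
                inverted.computeIfAbsent(token, k -> new TreeSet<>()).add(id);
            }
        }
        if (isStored) {
            stored.computeIfAbsent(id, k -> new LinkedHashMap<>()).put(field, value);
        }
    }

    // Only values from indexed fields can produce a hit.
    Set<String> search(String term) {
        Set<String> hits = inverted.get(term.toLowerCase(Locale.ROOT));
        return hits == null ? Collections.<String>emptySet() : hits;
    }

    // Stored fields are returned with a hit even if they were not indexed.
    Map<String, String> storedFields(String id) {
        Map<String, String> fields = stored.get(id);
        return fields == null ? Collections.<String, String>emptyMap() : fields;
    }

    static IndexedVsStoredSketch sample() {
        IndexedVsStoredSketch index = new IndexedVsStoredSketch();
        index.add("1", "name", "F-150", true, true);          // indexed and stored
        index.add("1", "product_make", "Ford", false, true);  // stored only
        return index;
    }

    public static void main(String[] args) {
        IndexedVsStoredSketch index = sample();
        System.out.println(index.search("F-150"));   // finds document 1 through the indexed name
        System.out.println(index.search("Ford"));    // no hit: product_make is not indexed
        System.out.println(index.storedFields("1")); // both stored values are still returned
    }
}
```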

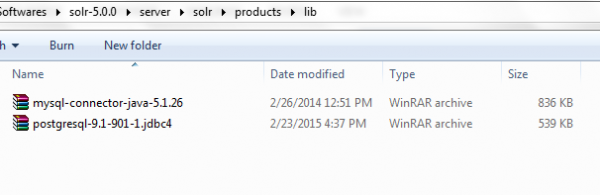

Finally, place the database driver files under <core path>\lib; create the lib folder if it does not already exist under the core.

Enable Data-Import Handler

The data import handler provides the following functionality:

- Based on the configuration, it reads data from the database, which is the source of the search content.

- It builds SOLR documents by aggregating data from multiple columns and tables according to the configuration. As mentioned before, a column can be excluded from the index by marking it as indexed="false".

Note: Avoid marking columns as indexed unless their content really needs to be searchable, because every extra indexed column increases the search time over the document.

- It supports full imports and delta imports.

- It detects insert/update deltas (changes) and performs delta imports (this assumes a last-modified timestamp column).

- It reads and indexes data from XML (over HTTP or from a file) based on the configuration.

- It makes it possible to plug in any kind of data source (ftp, scp, etc.) and any other format of the user's choice (JSON, CSV, etc.).
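Delta detection can be sketched in a few lines of Java. The example assumes a hypothetical last-modified timestamp per row (the products table in this document does not define one) and simply selects the rows changed since the previous import. In the data import handler itself, this comparison is normally expressed in SQL through the entity's deltaQuery attribute and the ${dataimporter.last_index_time} variable rather than in Java.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch of timestamp-based delta detection; the Row type and its values are made up.
final class DeltaImportSketch {

    static final class Row {
        final int productId;
        final Instant lastModified; // hypothetical last-modified column

        Row(int productId, Instant lastModified) {
            this.productId = productId;
            this.lastModified = lastModified;
        }
    }

    // Returns the ids of rows modified strictly after the previous import time.
    static List<Integer> changedSince(List<Row> rows, Instant lastIndexTime) {
        List<Integer> changed = new ArrayList<>();
        for (Row row : rows) {
            if (row.lastModified.isAfter(lastIndexTime)) {
                changed.add(row.productId);
            }
        }
        return changed;
    }

    static List<Integer> sample() {
        Instant lastIndexTime = Instant.parse("2015-07-01T00:00:00Z");
        List<Row> rows = Arrays.asList(
                new Row(1, Instant.parse("2015-06-30T12:00:00Z")),
                new Row(2, Instant.parse("2015-07-01T08:30:00Z")),
                new Row(3, Instant.parse("2015-07-02T09:00:00Z")));
        return changedSince(rows, lastIndexTime);
    }

    public static void main(String[] args) {
        System.out.println(sample()); // prints [2, 3]
    }
}
```

A full import would ignore the timestamp and re-read every row; the delta import only needs the rows returned here.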

Enable the data import handler by adding the code below to solrconfig.xml. It loads the data import libraries and points to the configuration XML that defines the database details and the document configuration for indexing.

<lib dir="../../../contrib/dataimporthandler/lib/" regex=".*\.jar" />

<lib dir="../../../dist/" regex="solr-dataimporthandler-\d.*\.jar" />

<requestHandler name="/dataimport" class="org.apache.solr.handler.dataimport.DataImportHandler">

<lst name="defaults">

<str name="config">db-data-config.xml</str>

</lst>

</requestHandler>

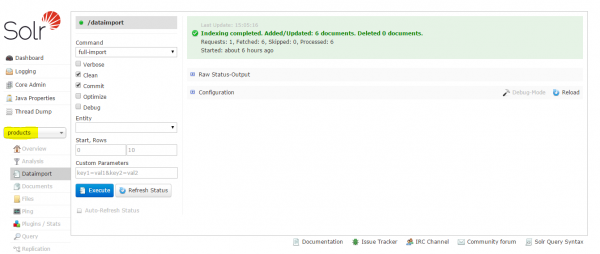

All set. If SOLR is running, stop and start it so that the latest files are picked up, then go to the admin page and select the core into which we need to import data, in our case “products”.

Then, under the Data Import tab, execute the data import handler. After you refresh, the import handler will have imported the data from the database and created the documents. A document is a fetched/processed record in indexed form; here, all the records are indexed based on product_id (the field marked indexed="true").
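The import can also be triggered over HTTP instead of through the admin console, since the handler was registered under /dataimport. The sketch below only builds the request URLs; the DataImportUrl class is a hypothetical helper, and the base URL assumes the standalone instance started earlier on port 8984.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper that builds data import handler request URLs.
final class DataImportUrl {

    // Commands accepted by the data import handler.
    private static final List<String> COMMANDS =
            Arrays.asList("full-import", "delta-import", "status", "reload-config", "abort");

    static String build(String solrBaseUrl, String core, String command) {
        if (!COMMANDS.contains(command)) {
            throw new IllegalArgumentException("Unknown data import command: " + command);
        }
        return solrBaseUrl + "/" + core + "/dataimport?command=" + command + "&wt=json";
    }

    public static void main(String[] args) {
        String base = "http://localhost:8984/solr";
        System.out.println(build(base, "products", "full-import"));
        System.out.println(build(base, "products", "status"));
    }
}
```

Requesting the full-import URL starts the import in the background; the status URL can then be polled to see when it has finished.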

Search Query

The query below returns all data in the indexed documents.

http://localhost:8984/solr/products/select?q=*%3A*&wt=json&indent=true

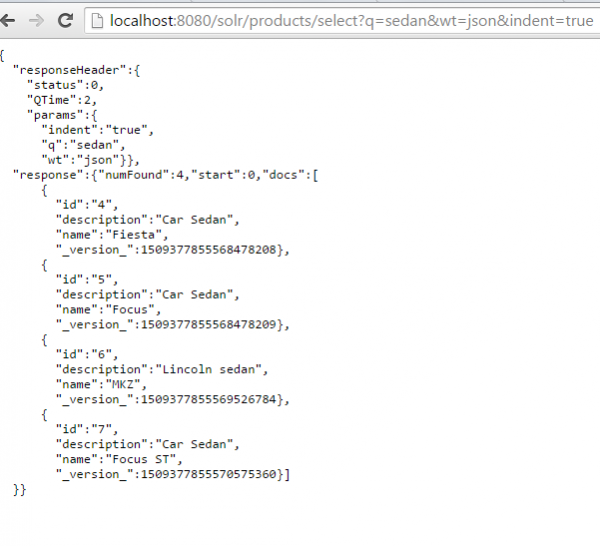

The query below returns results whose product name or description matches “Sedan”. The results include both exact and partial matches.

http://localhost:8984/solr/products/select?q=Sedan&wt=json&indent=true
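When such a query is built in code rather than typed into the browser, the q parameter has to be URL-encoded, which is why *:* appears as *%3A* above. A minimal Java sketch, assuming the same host, port and core as in this document (SelectUrl is a hypothetical helper name):

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical helper that builds a /select URL with a URL-encoded query.
final class SelectUrl {

    static String build(String solrBaseUrl, String core, String query) {
        try {
            return solrBaseUrl + "/" + core + "/select?q="
                    + URLEncoder.encode(query, "UTF-8")
                    + "&wt=json&indent=true";
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is required to be available on every Java platform.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String base = "http://localhost:8984/solr";
        System.out.println(build(base, "products", "*:*"));
        System.out.println(build(base, "products", "Sedan"));
    }
}
```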

The steps above explain how to configure the SOLR engine in standalone mode. In the rest of the document, I explain how to integrate the SOLR search engine with the JBoss AS 7 server so that it can be accessed by Java applications.

JBOSS SOLR Integration

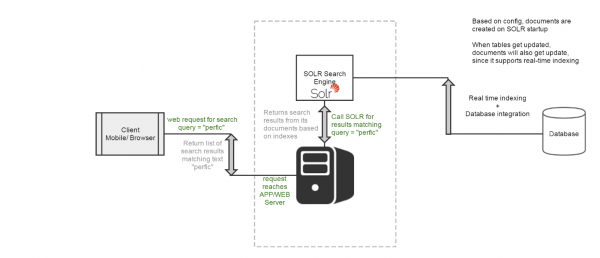

Flow diagram

Figure 1: SOLR integration with JBoss AS 7 server – Flow Diagram

Integrating SOLR with JBoss requires a few configuration changes to the JBoss core files. If you are using a SOLR version below 4.1, additional configuration is needed to process non-ASCII characters in the query string.

http://wiki.apache.org/solr/SolrJBoss

SOLR Home

The SOLR home path can be set in several ways; here I have set it in the JBoss standalone.xml file.

<system-properties>

<property name="solr.solr.home" value="<solr path>\server\solr"/>

</system-properties>

Alternatively, the SOLR home path can be set in the web.xml file of the solr.war that is eventually deployed to the JBoss server.

Add the entry below to the web.xml of solr.war.

<env-entry>

<env-entry-name>solr/home</env-entry-name>

<env-entry-type>java.lang.String</env-entry-type>

<env-entry-value><solr path>\server\solr</env-entry-value>

</env-entry>
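As far as I understand, SOLR looks for its home directory in this order: the JNDI entry solr/home, then the solr.solr.home system property, then a solr/ directory under the current working directory. The sketch below mirrors that lookup order in plain Java so the two options above can be compared; SolrHomeSketch is a hypothetical name, and the real lookup lives inside SOLR itself.

```java
// Sketch of the SOLR home lookup order; an approximation, not SOLR's own code.
final class SolrHomeSketch {

    // Returns the first usable value: JNDI entry, then system property, then the default.
    static String resolve(String jndiValue, String systemPropertyValue) {
        if (jndiValue != null && !jndiValue.trim().isEmpty()) {
            return jndiValue.trim();
        }
        if (systemPropertyValue != null && !systemPropertyValue.trim().isEmpty()) {
            return systemPropertyValue.trim();
        }
        return "solr/";
    }

    public static void main(String[] args) {
        // No JNDI context is available in a plain main method, so only the property is consulted here.
        System.out.println(resolve(null, System.getProperty("solr.solr.home")));
    }
}
```

Under this order, an env-entry in web.xml would take precedence over the system property in standalone.xml if both are set, so it is simplest to configure only one of them.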

Now deploy the solr.war file to JBoss, and you are all set to access the SOLR search engine from the application.

Spring SOLR Integration

SOLR maven dependency

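We follow the embedded SOLR approach, since we need to access SOLR from the Java application, i.e. we need SOLR to execute within the Java process. The solr-core artifact also brings in SolrJ, whose HttpSolrClient is used by the service class later in this document.

Add the embedded SOLR Maven dependency to the service pom.xml.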

<dependency>

<groupId>org.apache.solr</groupId>

<artifactId>solr-core</artifactId>

<version>5.1.0</version>

</dependency>

Search Implementation

To invoke the SOLR core from the service, a few Spring configuration steps are needed, as described below.

Step 1:

Add the bean definition below to the Spring service context file. Note that SOLR is just another application deployed to the JBoss server, which in turn accesses the SOLR search engine configured through the SOLR home path in the JBoss system properties.

<bean id="solrSearch" class="com.reports.SolrSearchServiceImpl">

<constructor-arg type="java.lang.String" value="http://localhost:8080/solr"/>

</bean>

Note: Here, I have used localhost for testing; in production, the value should point to the production host name/URI.

Step 2:

The service supports searching the documents for a single word or a phrase. Based on the SOLR configuration (see db-data-config.xml), search terms are matched against the SOLR documents, which hold the table rows indexed according to the query definition. Full-text, phrase, or wildcard searches are performed by passing the corresponding query filters in the SOLR query.

Service Class:

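// SolrJ types used below come from org.apache.solr.client.solrj and org.apache.solr.common

public SolrSearchServiceImpl(String url) {

this.url = url;

}

public Map<String, String> searchForTerm(String searchTerm) throws SearchException {

SolrQuery solrQuery = new SolrQuery();

// search string from the front end, e.g. "Escape"

solrQuery.setQuery(processSearchTerm(searchTerm));

// url is the base SOLR URL; the core to query ("products") is passed to query()

try (SolrClient server = new HttpSolrClient(url)) {

QueryResponse response = server.query("products", solrQuery);

SolrDocumentList documentList = response.getResults();

// Assumption: results are returned as id -> name; adjust to the fields your schema defines

Map<String, String> results = new LinkedHashMap<>();

for (SolrDocument document : documentList) {

results.put(String.valueOf(document.getFieldValue("id")), String.valueOf(document.getFieldValue("name")));

}

return results;

} catch (SolrServerException | IOException e) {

// Assumption: SearchException has a constructor that accepts a cause

throw new SearchException(e);

}

}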
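The service calls a processSearchTerm helper that is not shown in this document. One possible version, purely as an assumption about what such a helper could do, turns multi-word input into a phrase query and leaves field-scoped or wildcard queries untouched:

```java
// Hypothetical stand-in for the processSearchTerm helper used by the service.
final class SearchTermSketch {

    static String processSearchTerm(String rawTerm) {
        // Design choice for this sketch: empty input matches everything.
        if (rawTerm == null || rawTerm.trim().isEmpty()) {
            return "*:*";
        }
        String term = rawTerm.trim();
        // Field-scoped (name:Focus) and wildcard (Foc*) queries are passed through unchanged.
        if (term.contains(":") || term.contains("*") || term.contains("?")) {
            return term;
        }
        // Several words are sent as a phrase so they must appear together.
        if (term.indexOf(' ') >= 0) {
            return "\"" + term.replace("\"", "\\\"") + "\"";
        }
        return term;
    }

    public static void main(String[] args) {
        System.out.println(processSearchTerm("Truck"));
        System.out.println(processSearchTerm("Ford Truck"));
        System.out.println(processSearchTerm("name:Focus"));
    }
}
```

A production helper would also need to escape the remaining query syntax characters; SolrJ ships a utility for that purpose, which is not used here so that the sketch stays free of dependencies.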

Unit Testing

Case 1:- Text Search

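JUnit test case for a single-word search query.

@Test

public void testSingleSearch() {

String searchText = "Truck";

// searchForTerm is an instance method, so create the service first (same URL as the Spring bean)

SolrSearchServiceImpl searchService = new SolrSearchServiceImpl("http://localhost:8080/solr");

try {

searchService.searchForTerm(searchText);

} catch (SearchException sExp) {

sExp.printStackTrace();

Assert.fail();

}

}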

Output: SOLR returns the search results matching the word “Truck”, that is, the vehicle data for “F-150” and “Colorado”.

PRODUCTS FILTERED FROM SOLR for search query = Truck is F-150.

PRODUCTS FILTERED FROM SOLR for search query = Truck is Colorado.

Case 2:- Column Search

To search a particular column in the document, prefix the search term with the name of that column in the search query.

@Test

public void testColumnSearch() {

SearchBO searchBO = new SearchBO();

searchBO.setSearchTerm("name:Focus");

SolrSearchServiceImpl searchService = new SolrSearchServiceImpl("http://localhost:8080/solr");

try {

// searchForTerm takes a String; getSearchTerm() is assumed to be the getter matching setSearchTerm()

searchService.searchForTerm(searchBO.getSearchTerm());

} catch (SearchException sExp) {

Assert.fail();

}

}

Output: SOLR returns search results from the column “name”; the two results shown below match the search text “Focus”.

PRODUCTS FILTERED FROM SOLR for search query = name:Focus is Focus

PRODUCTS FILTERED FROM SOLR for search query = name:Focus is Focus ST

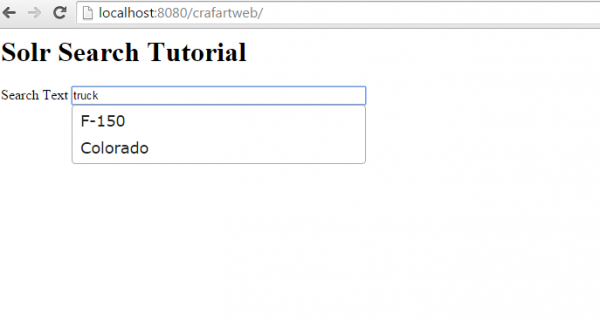

Search From Client

I have created a JSP page for testing from the client side. When I type in the text box, it invokes the back-end SOLR service described in the previous step. Based on the search text entered, the engine returns the responses in JSON format, which are handled in the front end and displayed in the browser.

Figure 2:- Text search from browser

Search.jsp

<%@ taglib uri="http://java.sun.com/jsp/jstl/core" prefix="c"%>

<%@ page isELIgnored="false"%>

<c:set var="context" value="${pageContext.servletContext.contextPath}" />

<html>

<head>

<title>Solr Search</title>

<link rel="stylesheet" href="${context}/resources/javascript/jquery-ui-1.10.3/themes/base/jquery-ui.css" type="text/css">

<link rel="stylesheet" href="${context}/resources/javascript/jquery-ui-1.10.3/themes/base/jquery.ui.accordion.css" type="text/css">

<script src="${context}/resources/javascript/jquery-core/jquery-1.11.0.min.js"></script>

<script src="${context}/resources/javascript/jquery-ui-1.10.3/ui/jquery-ui.min.js"></script>

</head>

<body>

<div>

<h1>Solr Search Tutorial</h1>

<div style="width: 50%; float: left;">

<label style="width: 25%">Search Text</label> <input id="searchText" type="text" style="width: 50%" />

</div>

</div>

<!-- home page search box ends here -->

<script type="text/javascript">

// search auto complete functionality script

$(document).ready(function() {

$("#searchText").autocomplete({

source : function(request, response) {

var params = $("#searchText").val();

var searchBO = {};

searchBO.searchTerm = params;

var postData = JSON.stringify(searchBO);

$.ajax({

url : "search/products",

dataType : "json",

contentType : "application/json",

type : "post",

data : postData,

cache : false

}).done(function(modelMap) {

// $.map builds the array of items the autocomplete widget expects;

// for an object, the callback receives (value, key)

response($.map(modelMap.searchResults, function(searchTerm, productId) {

return {

label : productId,

value : searchTerm

};

}));

});

},

minLength : 0

});

});

</script>

</body>

</html>

Conclusion

This document covered the basic concepts of SOLR and focused mainly on integration with JBoss AS 7. We also covered how to invoke the SOLR engine from a Java application using the Spring framework. In terms of search functionality, SOLR supports many features, including auto-suggest, faceting, highlighting, text search and more. Here, I have covered only text search with real-time indexing from a database. Please visit https://cwiki.apache.org/confluence/display/solr/Searching for a full list of search features offered by the SOLR engine.